How to Configure Global Rate Limits by Path in Istio

This article is for those who are starting with Istio's rate limit feature and want to understand how rate limiting based on the request path works. It comes from my own practice and clears up a confusion about AND/OR operations within the rate_limits actions. I spent more hours than expected figuring out what I'll condense here so you can learn it in a few minutes.

The Basics

Istio works on top of Envoy, and Envoy is the main technology we will be talking about. Envoy can implement local (in the proxy itself) or global (calling an external service) rate limits, at L4 or L7.

External Rate Limit Service

An external rate limit service (RLS) works together with a Redis database, and Envoy instances talk to it over gRPC. Envoy calls the RLS from a rate limit filter that is added to the listener's filter chain, before the HTTP router filter.

The RLS organizes descriptors into domains. Each descriptor is made of one or more key-value entries, populated by the rate limit filter and passed on to the RLS, which uses them to enforce its rules. Please refer to https://github.com/envoyproxy/ratelimit#overview for an implementation.
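If it helps to picture what the RLS does with a descriptor, here is a toy Python model of fixed-window counting. It is a sketch, not the envoyproxy/ratelimit code: a plain dict stands in for Redis, only single-entry descriptors are handled (no nested descriptors), and the names `LIMITS`, `cache_key` and `should_rate_limit` are hypothetical. The domain `tetrate-ratelimit` and the 1 request per minute rule for `PATH_DELAY=yes` come from the configs and logs in this article, and the key format is inferred from the RLS debug log line `tetrate-ratelimit_PATH_DELAY_yes_1701275580`.

```python
import time

# Hypothetical rule table mirroring this article's RLS setup:
# descriptor PATH_DELAY=yes allows 1 request per minute in domain tetrate-ratelimit.
DOMAIN = "tetrate-ratelimit"
LIMITS = {("PATH_DELAY", "yes"): (1, 60)}  # (requests_per_unit, window length in seconds)


def cache_key(domain, key, value, now, window_seconds):
    """Build a fixed-window counter key: <domain>_<key>_<value>_<window start>."""
    window_start = now - now % window_seconds  # start of the current fixed window
    return f"{domain}_{key}_{value}_{window_start}"


def should_rate_limit(counters, domain, descriptor, now=None):
    """Return "OK" or "OVER_LIMIT" for a single (key, value) descriptor entry.

    `counters` is a plain dict standing in for Redis (assumption for this sketch).
    """
    now = int(time.time()) if now is None else now
    key, value = descriptor
    rule = LIMITS.get((key, value))
    if rule is None:
        return "OK"  # no matching rule: the request is not limited
    limit, window_seconds = rule
    k = cache_key(domain, key, value, now, window_seconds)
    counters[k] = counters.get(k, 0) + 1  # increment the counter for this window
    return "OVER_LIMIT" if counters[k] > limit else "OK"


if __name__ == "__main__":
    counters = {}
    # 16:33:57Z and 16:33:59Z on 2023-11-29 fall in the same one-minute window.
    print(cache_key(DOMAIN, "PATH_DELAY", "yes", 1701275637, 60))
    # -> tetrate-ratelimit_PATH_DELAY_yes_1701275580
    print(should_rate_limit(counters, DOMAIN, ("PATH_DELAY", "yes"), now=1701275637))  # -> OK
    print(should_rate_limit(counters, DOMAIN, ("PATH_DELAY", "yes"), now=1701275639))  # -> OVER_LIMIT
    print(should_rate_limit(counters, DOMAIN, ("PATH_DELAY", "yes"), now=1701275640))  # -> OK (new window)
```

The timestamp in the key is what makes this a fixed window: every request in the same minute shares one counter, and a fresh counter starts when the minute rolls over.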

The RLS needs to be installed and managed by the cluster operator (you) and does not come out of the box with Istio, although a sample is available in the samples directory of the Istio bundle you downloaded at install time.

Envoy HTTP Rate Limit Filter

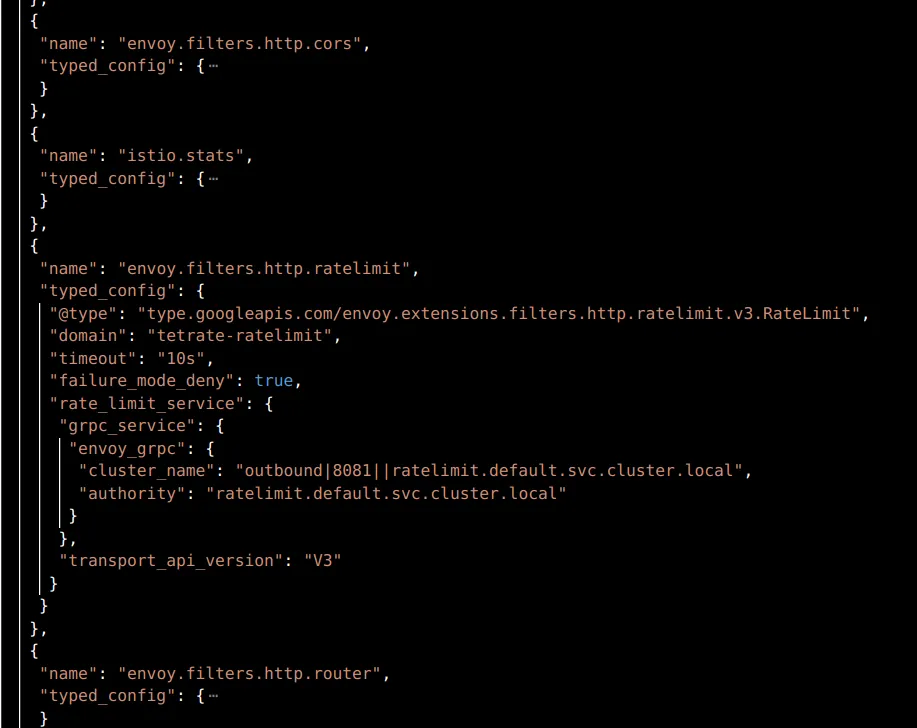

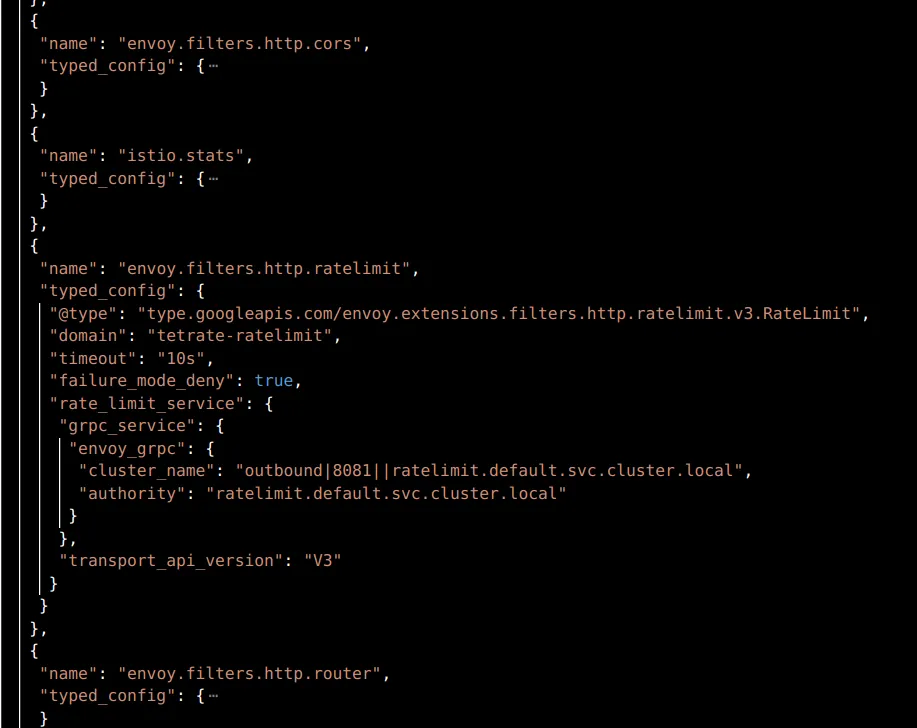

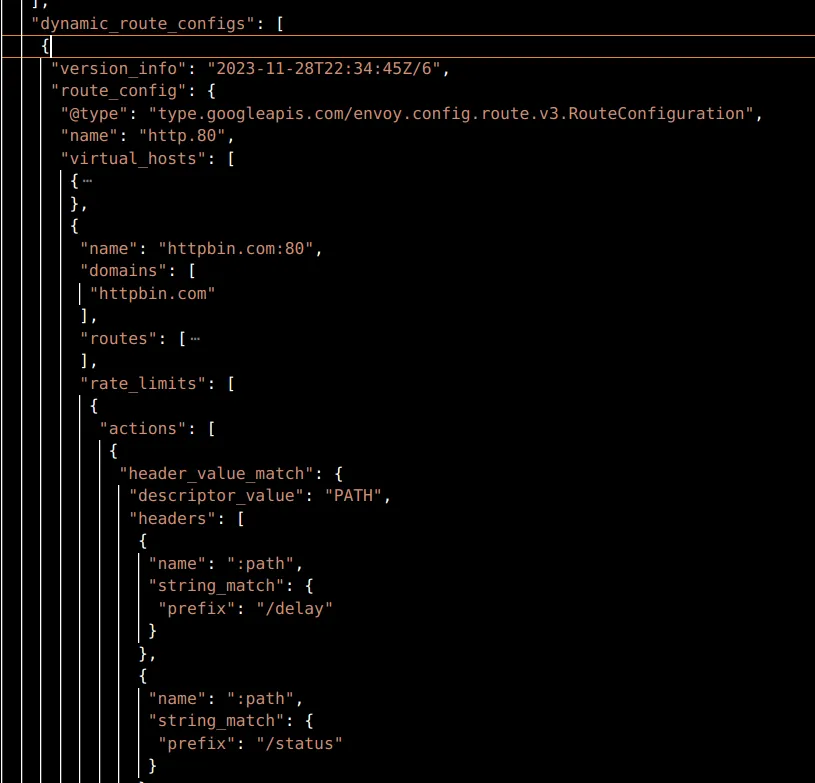

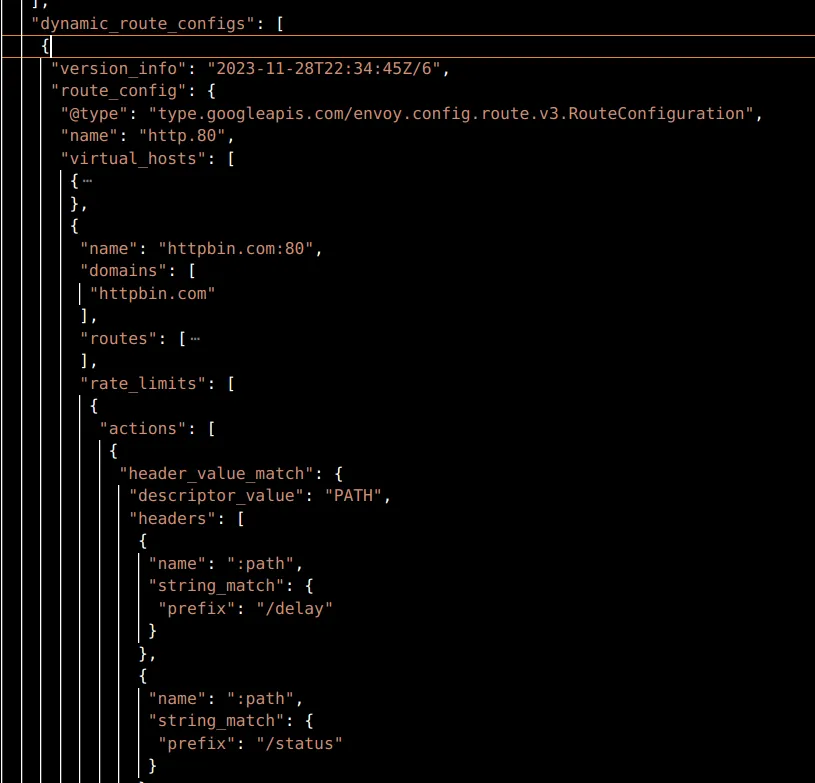

The Envoy setup consists of two Envoy configurations applied to an Ingress Gateway: one adds the rate limit filter to the Listeners component, and the other defines the actions at the Virtual Host level in the Dynamic Routes component.

Note: you can get this dump from any Envoy proxy (gateways included) with the following command:

```
kubectl exec <POD> -c istio-proxy -- curl 'localhost:15000/config_dump' > config_dump.json
```

The listener filter configures how to reach the RLS, the domain it is watching for, and some other settings. This filter reads the actions defined in the routes and, when they are met, makes a gRPC call to the RLS upstream with the domain and the descriptor keys and values so the RLS can return a verdict.
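The dump is large, so a short script can pull out just the rate limit pieces. This is a hypothetical helper (`find_rate_limit_config` is not part of Istio or Envoy). Rather than relying on the exact layout of the dump, it walks the whole JSON and reports every object named `envoy.filters.http.ratelimit` (the listener filter, assuming it was registered under the name used in Istio's global rate limiting examples) and every `rate_limits` list (the route/virtual host actions).

```python
import json
import sys

# Filter name assumed from Istio's global rate limiting examples; adjust it if your
# EnvoyFilter registered the filter under a different name.
RATELIMIT_FILTER = "envoy.filters.http.ratelimit"


def find_rate_limit_config(node, path="$"):
    """Walk a config dump and yield (json_path, value) for rate limit pieces.

    Matches any object named RATELIMIT_FILTER (listener side) and any
    "rate_limits" list (route side), wherever they are nested.
    """
    if isinstance(node, dict):
        if node.get("name") == RATELIMIT_FILTER:
            yield path, node
        for key, value in node.items():
            child = f"{path}.{key}"
            if key == "rate_limits":
                yield child, value
            yield from find_rate_limit_config(value, child)
    elif isinstance(node, list):
        for i, item in enumerate(node):
            yield from find_rate_limit_config(item, f"{path}[{i}]")


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python find_ratelimit.py config_dump.json
    with open(sys.argv[1]) as f:
        dump = json.load(f)
    for where, value in find_rate_limit_config(dump):
        print(where)
        print(json.dumps(value, indent=2))
```

Running it against `config_dump.json` should show both halves of the setup side by side: the filter with its domain and RLS cluster, and the actions attached to the virtual host.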

An Example of Rate Limit by Request Path with Istio

To get started, you need to follow this. Take a moment to analyze the configs based on the previous introduction. For reference, I am using the httpbin service, which you can also find in the Istio bundle's samples directory.

The Wrong Way

So, I wanted to rate limit based on two paths, /delay and /status. It seemed fairly easy, so I configured my EnvoyFilter and RLS like this:

```yaml
# EnvoyFilter configuring route actions
patch:
  operation: MERGE
  value:
    rate_limits:
      - actions:
          - header_value_match:
              descriptor_key: "PATH"
              descriptor_value: "yes"
              headers:
                - name: ":path"
                  safe_regex_match:
                    google_re2: {}
                    regex: ".*delay.*"
          - header_value_match:
              descriptor_key: "PATH"
              descriptor_value: "no"
              headers:
                - name: ":path"
                  safe_regex_match:
                    google_re2: {}
                    regex: ".*status.*"
```

```yaml
# RLS configmap
# ...
data:
  config.yaml: |
    domain: tetrate-ratelimit
    descriptors:
      - key: PATH
        value: "yes"
        rate_limit:
          unit: minute
          requests_per_unit: 3
      - key: PATH
        value: "no"
        rate_limit:
          unit: minute
          requests_per_unit: 1
```

With this, I expected it to work when calling:

```
curl http://127.0.0.1:8080/delay/1 -H"host: httpbin.com" -v -o /dev/null
```

but the RLS wasn't even noticing the call, so no rate limiting was happening:

```
# k logs -n default -f ratelimit-57bf5688c-f8q5k
time="2023-11-29T17:34:10Z" level=debug msg="[gostats] Flush() called, all stats would be flushed"
time="2023-11-29T17:34:20Z" level=debug msg="[gostats] flushing counter ratelimit.go.mallocs: 460"
time="2023-11-29T17:34:20Z" level=debug msg="[gostats] flushing counter ratelimit.go.frees: 26"
time="2023-11-29T17:34:20Z" level=debug msg="[gostats] flushing counter ratelimit.go.totalAlloc: 15624"
time="2023-11-29T17:34:20Z" level=debug msg="[gostats] flushing gauge ratelimit.go.sys: 0"
```

To enable debug logging, edit the ratelimit server deployment's container arguments, where you'll find the log level flag.

I tried all sorts of combinations in the rate_limits.actions settings, such as using string_match and prefix_match instead of safe_regex_match, with no luck.

I also tried using a single descriptor_key with different values, and even leaving it unset so that the default header_match key was used.

Eureka Moment

I realized that the filter was not sending anything to the RLS, since the RLS stayed silent on every request. Then, going through the docs, I read:

“If an action cannot append a descriptor entry, no descriptor is generated for the configuration”

See here.

So I called a path that matches both regexes:

```
curl http://127.0.0.1:8080/status/delay -H"host: httpbin.com" -v -o /dev/null
```

with the following result:

```
time="2023-11-29T16:07:07Z" level=debug msg="got descriptor: (PATH=yes),(PATH=no)"
time="2023-11-29T16:07:07Z" level=debug msg="starting get limit lookup"
time="2023-11-29T16:07:07Z" level=debug msg="looking up key: PATH_yes"
time="2023-11-29T16:07:07Z" level=debug msg="found rate limit: PATH_yes"
```

So the descriptor was passed on to the RLS only when my path satisfied both header_value_match actions. My config was working as an AND operator.
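To make the AND behavior concrete, here is a small Python simulation of how the rate limit filter builds descriptors, following the rule quoted from the docs: within one rate_limits entry, every action must append an entry, or no descriptor is generated for that entry. The action dicts are a hypothetical flattened shape, not Envoy's schema, and `build_descriptors` is an illustrative name. Envoy's `safe_regex_match` on a header must match the entire value, which is why the sketch uses `re.fullmatch` and why the article's regexes carry `.*` on both sides.

```python
import re


def header_value_match(path, action):
    """Simplified header_value_match on :path: return (key, value) or None."""
    if re.fullmatch(action["regex"], path):
        # descriptor_key defaults to "header_match" when omitted
        return (action.get("descriptor_key", "header_match"), action["descriptor_value"])
    return None


def build_descriptors(path, rate_limits):
    """Return one descriptor (list of entries) per rate_limits entry whose actions all match."""
    descriptors = []
    for entry in rate_limits:
        descriptor = []
        for action in entry["actions"]:
            item = header_value_match(path, action)
            if item is None:
                # One action could not append an entry: the whole descriptor is dropped.
                descriptor = []
                break
            descriptor.append(item)
        if descriptor:
            descriptors.append(descriptor)
    return descriptors


# The "wrong" config: both actions nested in a single rate_limits entry.
WRONG = [{"actions": [
    {"descriptor_key": "PATH", "descriptor_value": "yes", "regex": ".*delay.*"},
    {"descriptor_key": "PATH", "descriptor_value": "no", "regex": ".*status.*"},
]}]

print(build_descriptors("/delay/1", WRONG))       # -> []
print(build_descriptors("/status/delay", WRONG))  # -> [[('PATH', 'yes'), ('PATH', 'no')]]
```

The second result lines up with the `got descriptor: (PATH=yes),(PATH=no)` log above. Moving each action into its own rate_limits entry (keys PATH_DELAY and PATH_STATUS) makes `/delay/1` yield `[[('PATH_DELAY', 'yes')]]`, because the /status entry failing no longer affects the /delay one.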

Then it all boiled down to this: how do I set up OR logic instead of AND in the rate_limits actions?

A Solution

I changed the original config slightly:

```yaml
# EnvoyFilter configuring route actions
value:
  rate_limits:
    - actions: # any actions in here
        - header_value_match:
            descriptor_key: "PATH_DELAY"
            descriptor_value: "yes"
            headers:
              - name: ":path"
                safe_regex_match:
                  google_re2: {}
                  regex: ".*delay.*"
    - actions:
        - header_value_match:
            descriptor_key: "PATH_STATUS"
            descriptor_value: "yes"
            headers:
              - name: ":path"
                safe_regex_match:
                  google_re2: {}
                  regex: ".*status.*"
```

This one looks like it works, as two consecutive requests to /delay/1 show:

```
❯ curl http://127.0.0.1:8080/delay/1 -H"host: httpbin.com" -v -s -o /dev/null
* Trying 127.0.0.1:8080...
* Connected to 127.0.0.1 (127.0.0.1) port 8080 (#0)
> GET /delay/1 HTTP/1.1
> Host: httpbin.com
> User-Agent: curl/7.81.0
> Accept: */*
>
* Mark bundle as not supporting multiuse
< HTTP/1.1 200 OK
< server: istio-envoy
< date: Wed, 29 Nov 2023 20:17:02 GMT
< content-type: application/json
< content-length: 703
< access-control-allow-origin: *
< access-control-allow-credentials: true
< x-envoy-upstream-service-time: 1005
<
{ [703 bytes data]
* Connection #0 to host 127.0.0.1 left intact
❯ curl http://127.0.0.1:8080/delay/1 -H"host: httpbin.com" -v -s -o /dev/null
* Trying 127.0.0.1:8080...
* Connected to 127.0.0.1 (127.0.0.1) port 8080 (#0)
> GET /delay/1 HTTP/1.1
> Host: httpbin.com
> User-Agent: curl/7.81.0
> Accept: */*
>
* Mark bundle as not supporting multiuse
< HTTP/1.1 429 Too Many Requests <---------- See
< x-envoy-ratelimited: true
< date: Wed, 29 Nov 2023 20:17:06 GMT
< server: istio-envoy
< content-length: 0
<
* Connection #0 to host 127.0.0.1 left intact
```

And the RLS logs:

```
time="2023-11-29T16:33:57Z" level=debug msg="starting cache lookup"
time="2023-11-29T16:33:57Z" level=debug msg="looking up cache key: tetrate-ratelimit_PATH_DELAY_yes_1701275580"
time="2023-11-29T16:33:57Z" level=debug msg="cache key: tetrate-ratelimit_PATH_DELAY_yes_1701275580 current: 1"
time="2023-11-29T16:33:57Z" level=debug msg="returning normal response"
time="2023-11-29T16:33:57Z" level=debug msg="[gostats] flushing time ratelimit_server.ShouldRateLimit.response_time: 0.000000"
time="2023-11-29T16:33:59Z" level=debug msg="got descriptor: (PATH_DELAY=yes)"
time="2023-11-29T16:33:59Z" level=debug msg="starting get limit lookup"
time="2023-11-29T16:33:59Z" level=debug msg="looking up key: PATH_DELAY_yes"
time="2023-11-29T16:33:59Z" level=debug msg="found rate limit: PATH_DELAY_yes"
time="2023-11-29T16:33:59Z" level=debug msg="applying limit: 1 requests per MINUTE, shadow_mode: false"
```

Trust me, the /status limit was also in place.

AND/OR Logic for Rate Limit

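The rate_limits field is an array that accepts more than one entry, so whether actions combine with AND or OR depends on whether they are nested in the same actions list. Actions within one entry behave like AND: every one of them must append a descriptor entry, or no descriptor is sent for that entry. Separate entries are evaluated independently, so each one that matches sends its own descriptor, which behaves like OR.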
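If you have more than a couple of paths, generating the OR layout can save some copy-pasting. Below is a small, hypothetical Python helper (`build_rate_limits` is not part of any Istio tooling) that emits one rate_limits entry per path fragment, each with a single header_value_match action, mirroring the fixed config from the solution above. It assumes simple alphanumeric fragments such as `delay` or `status`, since they are interpolated into the regex unescaped.

```python
import json


def build_rate_limits(path_fragments):
    """Build one rate_limits entry per path fragment so each is evaluated on its own (OR)."""
    return [
        {
            "actions": [{
                "header_value_match": {
                    "descriptor_key": f"PATH_{fragment.upper()}",
                    "descriptor_value": "yes",
                    "headers": [{
                        "name": ":path",
                        # Header regexes must match the whole :path, hence the .* on both sides.
                        "safe_regex_match": {"google_re2": {}, "regex": f".*{fragment}.*"},
                    }],
                }
            }]
        }
        for fragment in path_fragments
    ]


# JSON is valid YAML, so this output can be pasted under the EnvoyFilter patch value.
print(json.dumps({"rate_limits": build_rate_limits(["delay", "status"])}, indent=2))
```

Each generated key (PATH_DELAY, PATH_STATUS, ...) still needs a matching descriptor in the RLS configmap, otherwise the RLS finds no rule and lets the request through.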

Note: There is likely a safer and more efficient way to match paths than a regex as permissive as the one in this example. I kept it simple so we can focus on the subject matter.