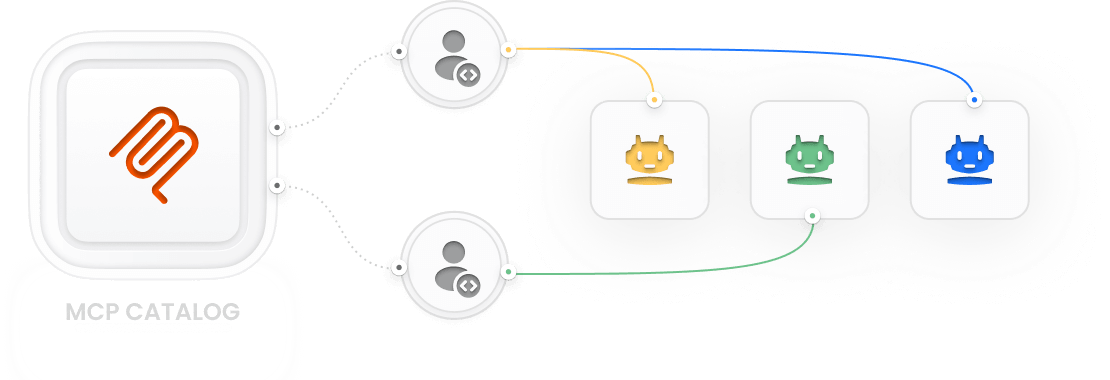

Operationalize Agent Readiness with Tetrate Agent Router

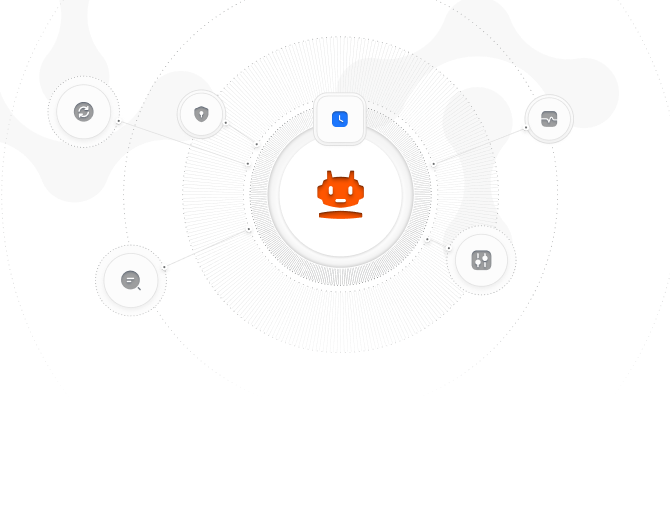

Prove AI Agent Consistency & Completeness

Bridge the gap between "it works" and "it's complete." Achieve agent readiness with a consistent way to define, build, and certify agents as complete for use at scale. Built on Envoy AI Gateway, which Tetrate co-created and maintains.