Announcing Tetrate Agent Operations Director for GenAI Runtime Visibility and Governance

Tetrate helps enterprises maximize the return on investment (ROI) from generative artificial intelligence (GenAI) initiatives.

Today, we are excited to announce the technical preview of Tetrate Agent Operations Director! Tetrate designed this new product in response to a growing question from platform teams:

How can I offer application development, security, and FinOps teams better visibility and governance of inference workloads to support our strategic GenAI initiatives?

At Tetrate, we believe the answer lies in intelligent traffic orchestration of inference workloads. We laid the technical groundwork months ago with the launch of Envoy AI Gateway in collaboration with Bloomberg and others in the Envoy community. With this launch, we’ve made the power of Envoy even more accessible for GenAI inference traffic management by adding Agent Operations Director on top of Envoy AI Gateway. This post, the first in a series, introduces Agent Operations Director.

The Rise of Inference Workloads and Risks of Shadow AI

In the past 12 months, unsurprisingly, inference workloads have become the fastest-growing segment of regulated workloads as enterprises and government entities experiment with GenAI. Rightly so, since inference workloads meet all the criteria for tight governance:

- High costs of internal GPU-based hardware or external LLM providers, with very dynamic and unpredictable usage patterns;

- Extremely rapid innovation cycles with new models, frameworks, and providers emerging almost weekly;

- Severe business consequences for misuse such as the loss of intellectual property or private data that can incur millions of dollars in fines.

Caught between the pressure to incorporate GenAI quickly and the demand to demonstrate business value (or return on investment), application development, security, and FinOps teams face many questions they are ill-equipped to answer:

- Which models are being used, by which teams, and are these models sanctioned by the organization?

- What factors are driving GenAI consumption? How can we showback or chargeback?

- How much should we budget for GenAI going forward? How do we calculate the ROI?

Moreover, they are left with limited options to influence outcomes:

- How to stop unsanctioned model usage?

- How to reduce developer toil with a unified API for different models?

- How to proactively manage ROI with rate limiting and fallback?

Most solutions today have obvious drawbacks:

- Technology Business Management (TBM) software was built to manage cloud costs, with no granularity at the model level, and no ability to govern transactions.

- Service provider software is vendor specific, making cross-vendor visibility and control impossible.

- Building custom stacks using AI gateways shows promise, but many Python-based implementations suffer from scaling problems in production, and not every team is ready to take on the additional complexity.

A lack of adequate visibility and governance over inference traffic creates “Shadow AI”: unsanctioned, ungoverned GenAI usage that ultimately exposes enterprises to legal risks or excessive unplanned costs. However, managing inference traffic requires a solution that can cope with high dynamism, heterogeneity, and scale. That sounds like a job for Envoy!

Tetrate is the leading innovator in Envoy-based dynamic gateways that autonomously orchestrate traffic to discover, connect, secure, and optimize regulated workloads. Can Tetrate extend its expertise in managing regulated workload traffic to secure and optimize inference workload traffic?

The answer is YES.

Frictionless Discovery, Granular Controls, Battle-Tested Technology

Tetrate Agent Operations Director addresses three critical barriers in inference traffic management:

- Frictionless discovery: how to inventory and reconcile the addressable inference traffic in your organization without dependency on application development teams?

- Granular controls: how to set fine-grained policies at a transaction level—in the case of inference workloads, down to the model and provider?

- Future-proof scale: how to ensure the infrastructure is scalable for the entire enterprise, and does not become tech debt?

Global Source of Truth with Frictionless Discovery

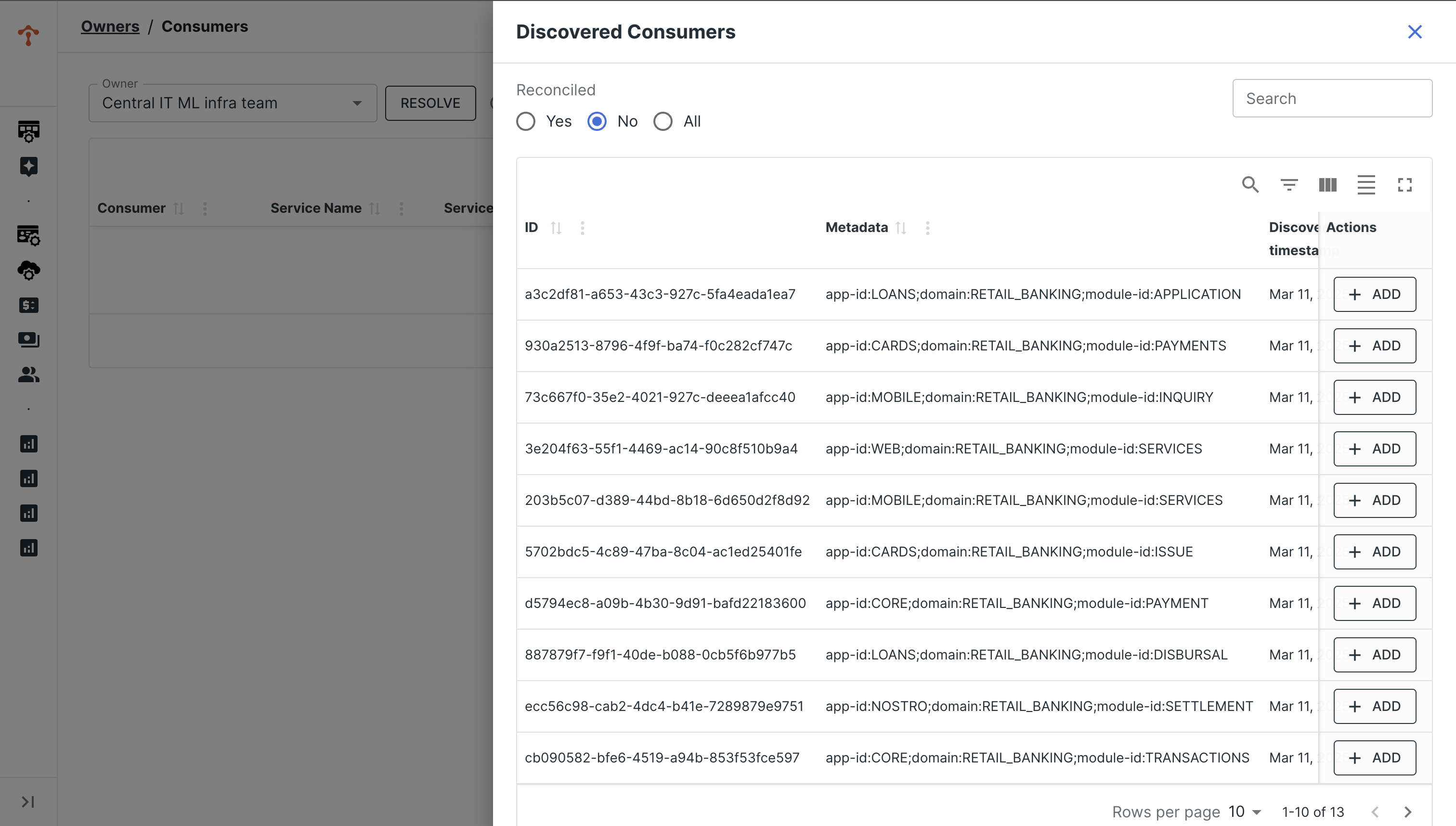

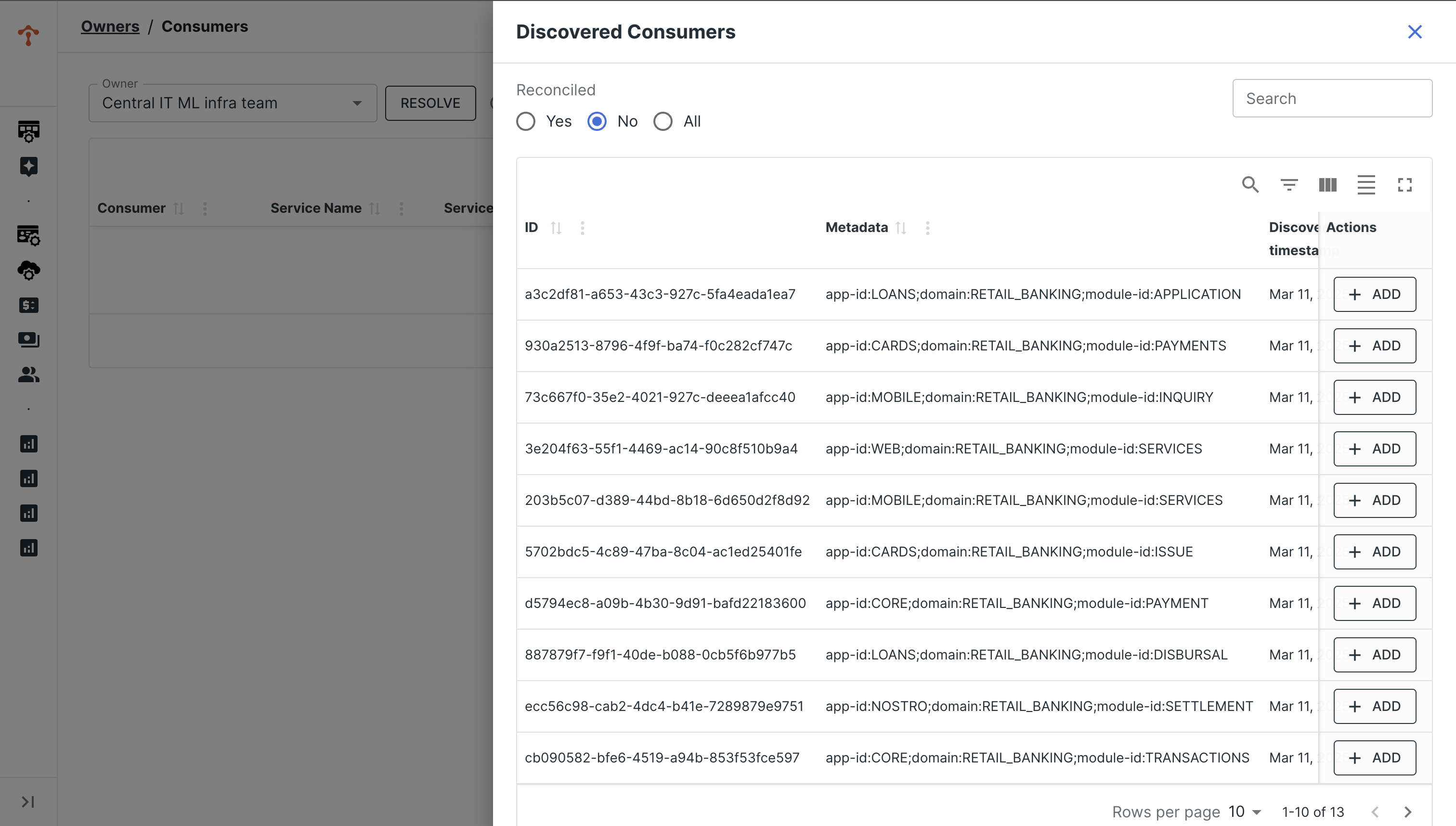

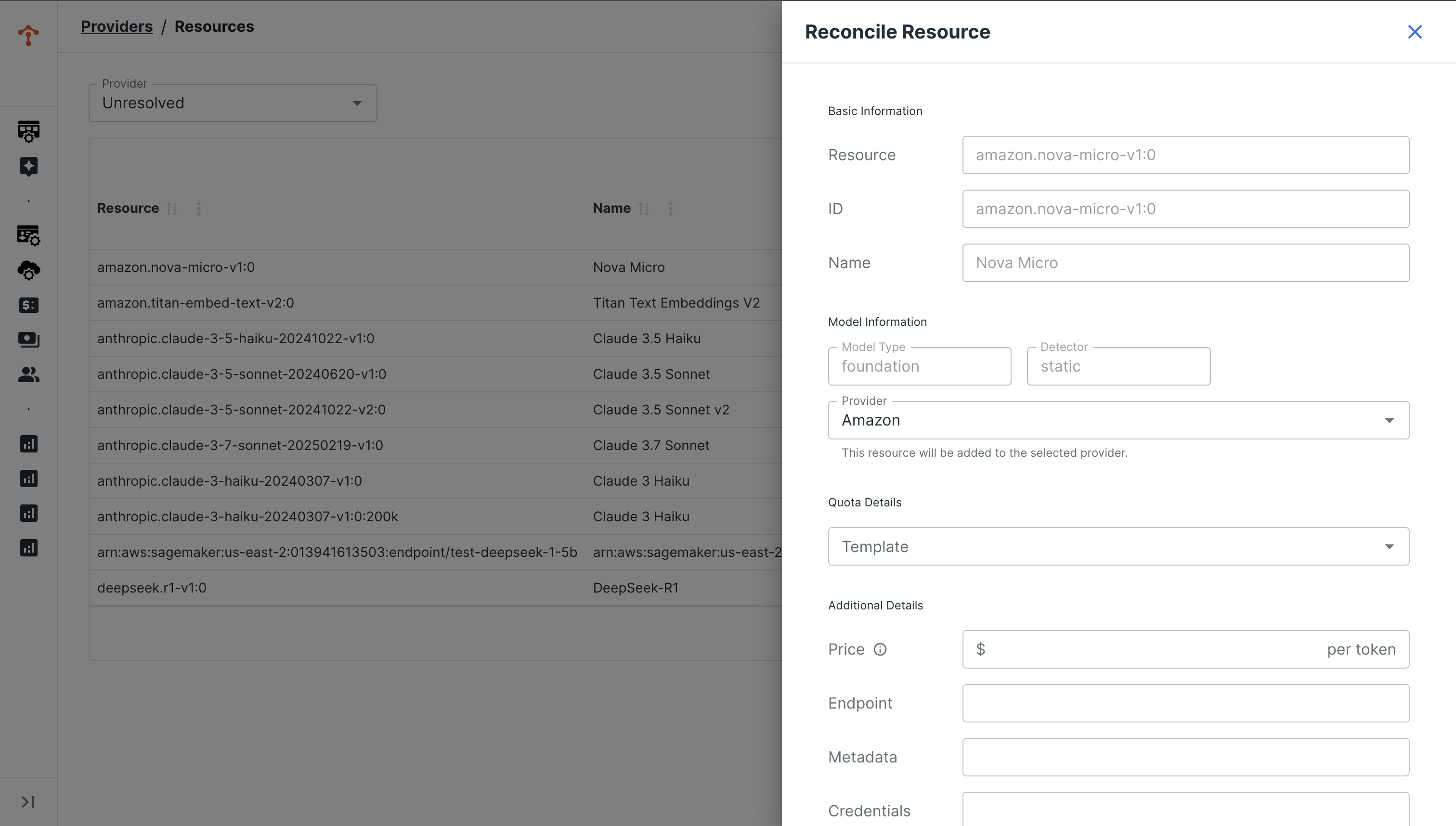

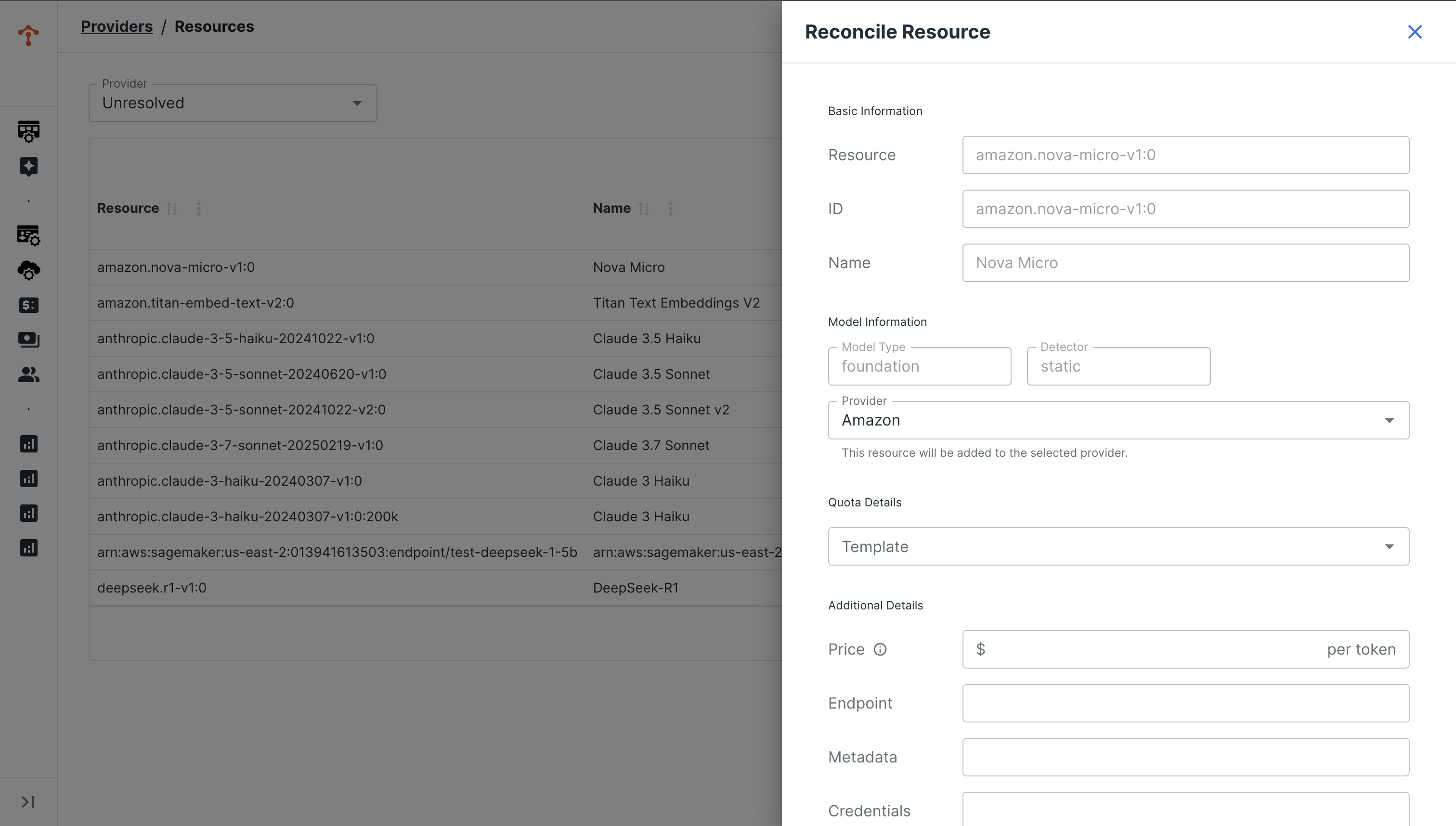

To achieve frictionless discovery of addressable inference traffic, Tetrate Agent Operations Director provides Discovery Gateways. Discovery Gateways are deployed near the network perimeter, where they passively intercept and inventory inference transactions. The metadata gathered by Discovery Gateways is then aggregated and presented in Agent Operations Director’s management console so that administrators can resolve ownership (the consumers responsible for initiating transactions) and providers (the running resources being consumed).

Tetrate Agent Operations Director’s Discovery Gateway and reconciliation workflow allow users to establish a global source of truth of the entities that consume and provide GenAI resources. Insights and governance start with having a real-time, continuously updated, accurate global source of truth of models and their consumers in Tetrate Agent Operations Director.
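To make the idea of an inventory more concrete, here is a minimal sketch of how observed transaction metadata could be rolled up into a per-consumer, per-model view. It is an illustration only, not how Agent Operations Director is implemented: the `Observation` fields, the function names, and the sample workload and model names are all assumptions made for this example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    """One inference transaction seen by a gateway (hypothetical shape)."""
    source: str    # workload or client identity, not yet reconciled to an owner
    provider: str  # external LLM provider or internal endpoint name
    model: str
    tokens: int


def build_inventory(observations):
    """Group observations by (source, provider, model) and total requests and tokens."""
    inventory = defaultdict(lambda: {"requests": 0, "tokens": 0})
    for obs in observations:
        entry = inventory[(obs.source, obs.provider, obs.model)]
        entry["requests"] += 1
        entry["tokens"] += obs.tokens
    return dict(inventory)


def unsanctioned(inventory, sanctioned_models):
    """Return the inventory keys whose model is not on the sanctioned list."""
    return sorted(key for key in inventory if key[2] not in sanctioned_models)


if __name__ == "__main__":
    seen = [
        Observation("checkout-svc", "provider-a", "model-x", 100),
        Observation("checkout-svc", "provider-a", "model-x", 50),
        Observation("notebook-42", "provider-b", "model-y", 10),
    ]
    inventory = build_inventory(seen)
    print(inventory)
    print(unsanctioned(inventory, {"model-x"}))
```

A view like this is the raw material for reconciliation: each `source` still has to be mapped to an owning team, and each unsanctioned entry is a candidate for follow-up.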

Granular Insights and Controls

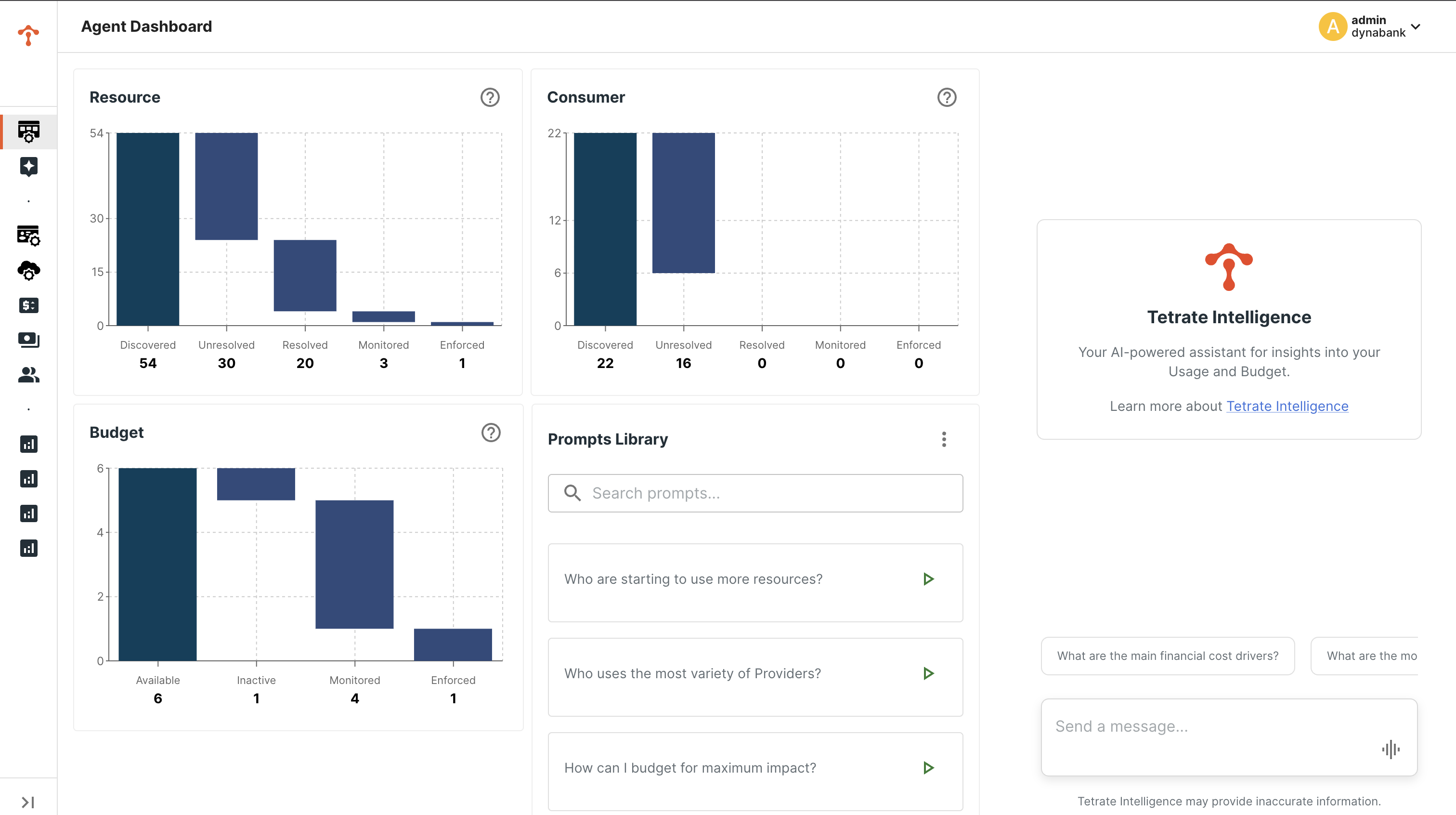

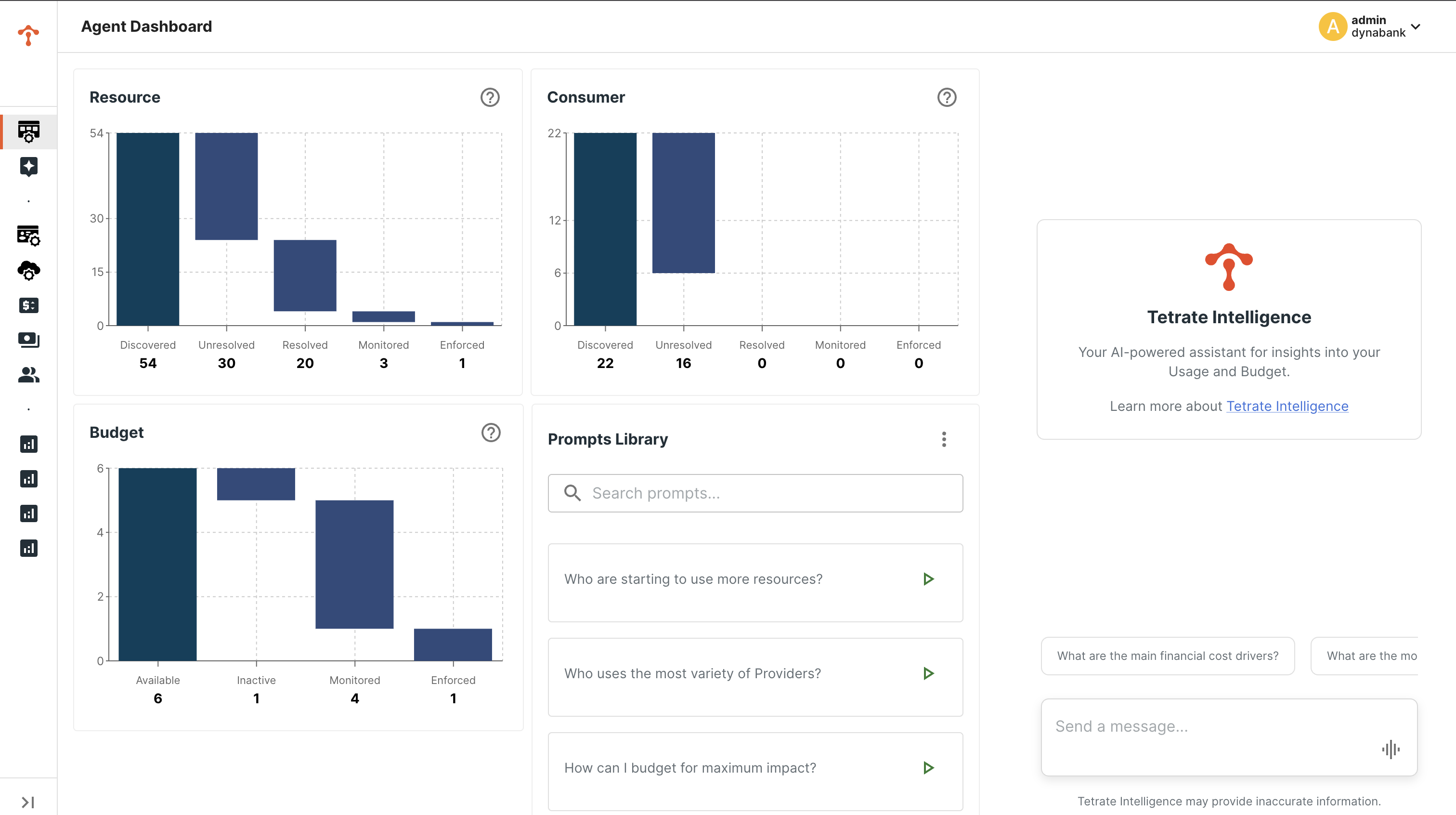

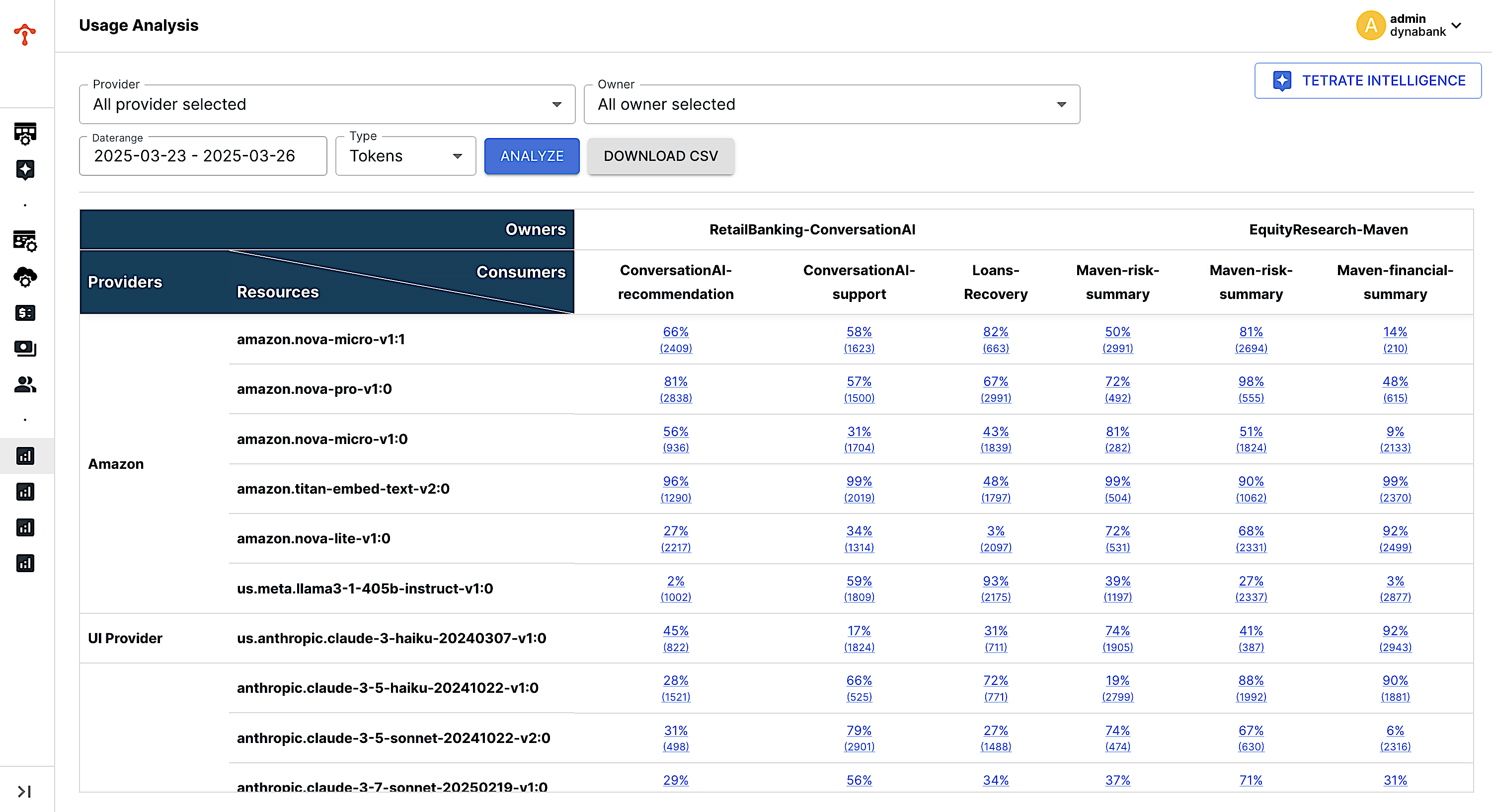

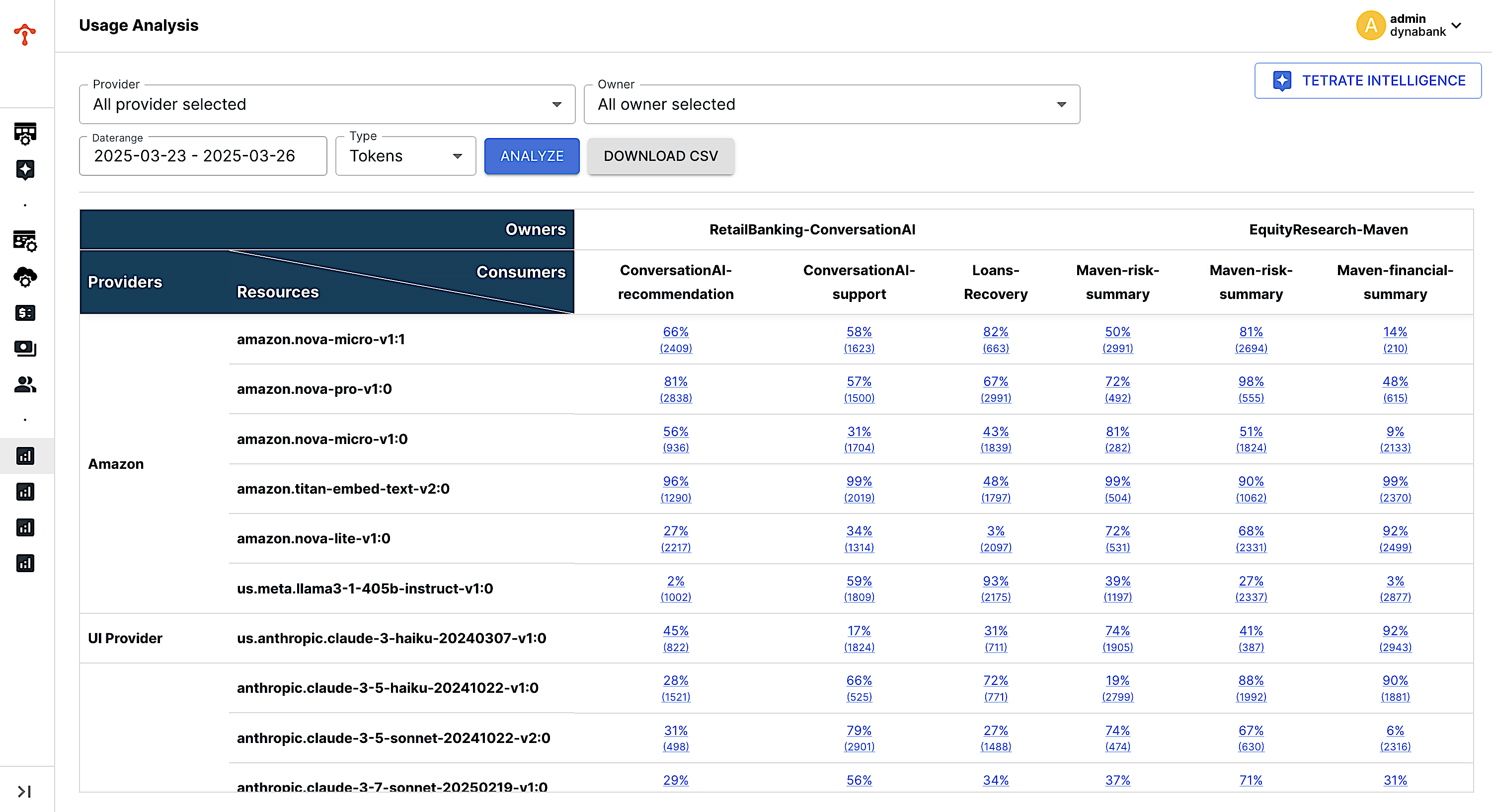

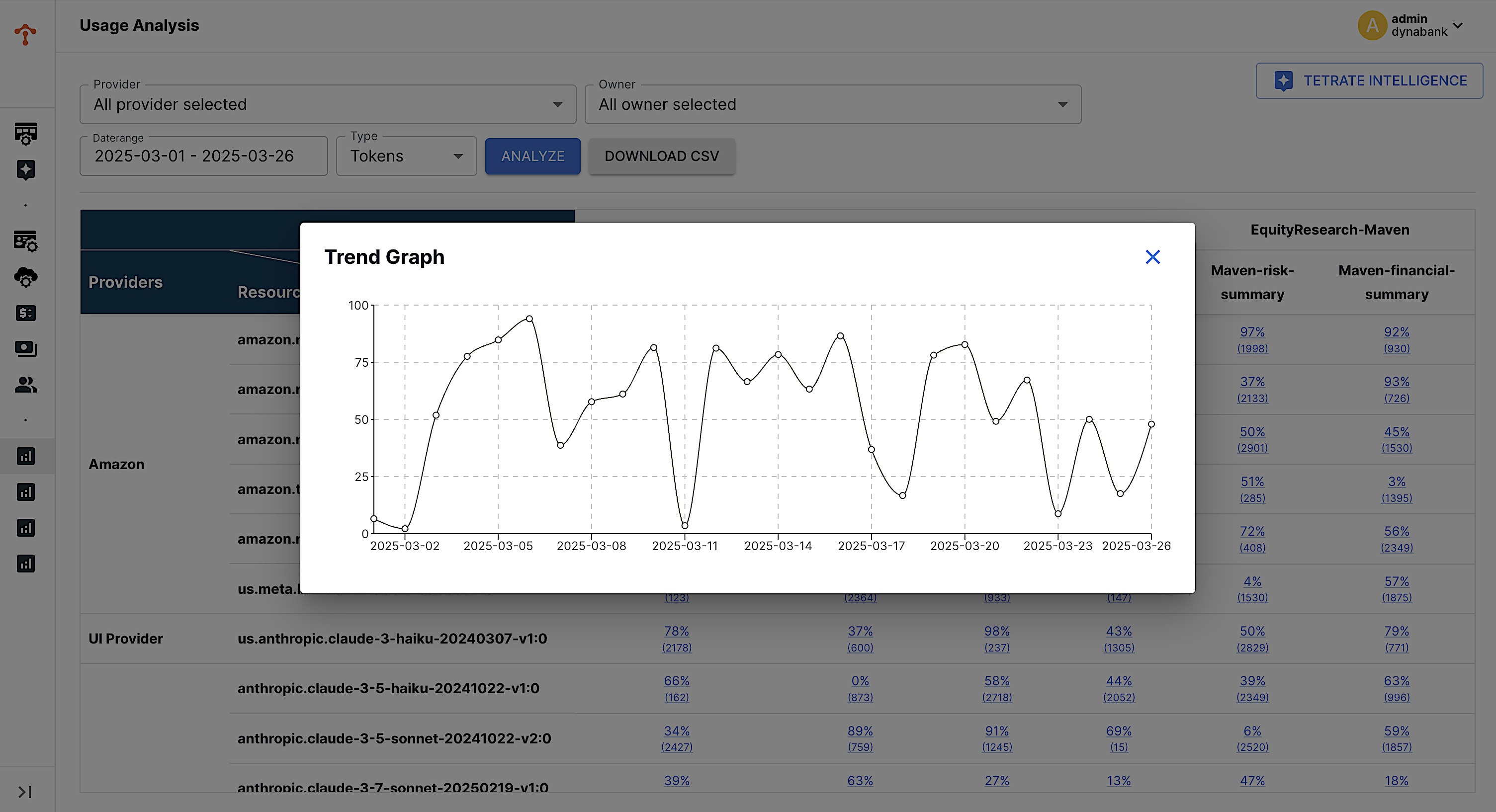

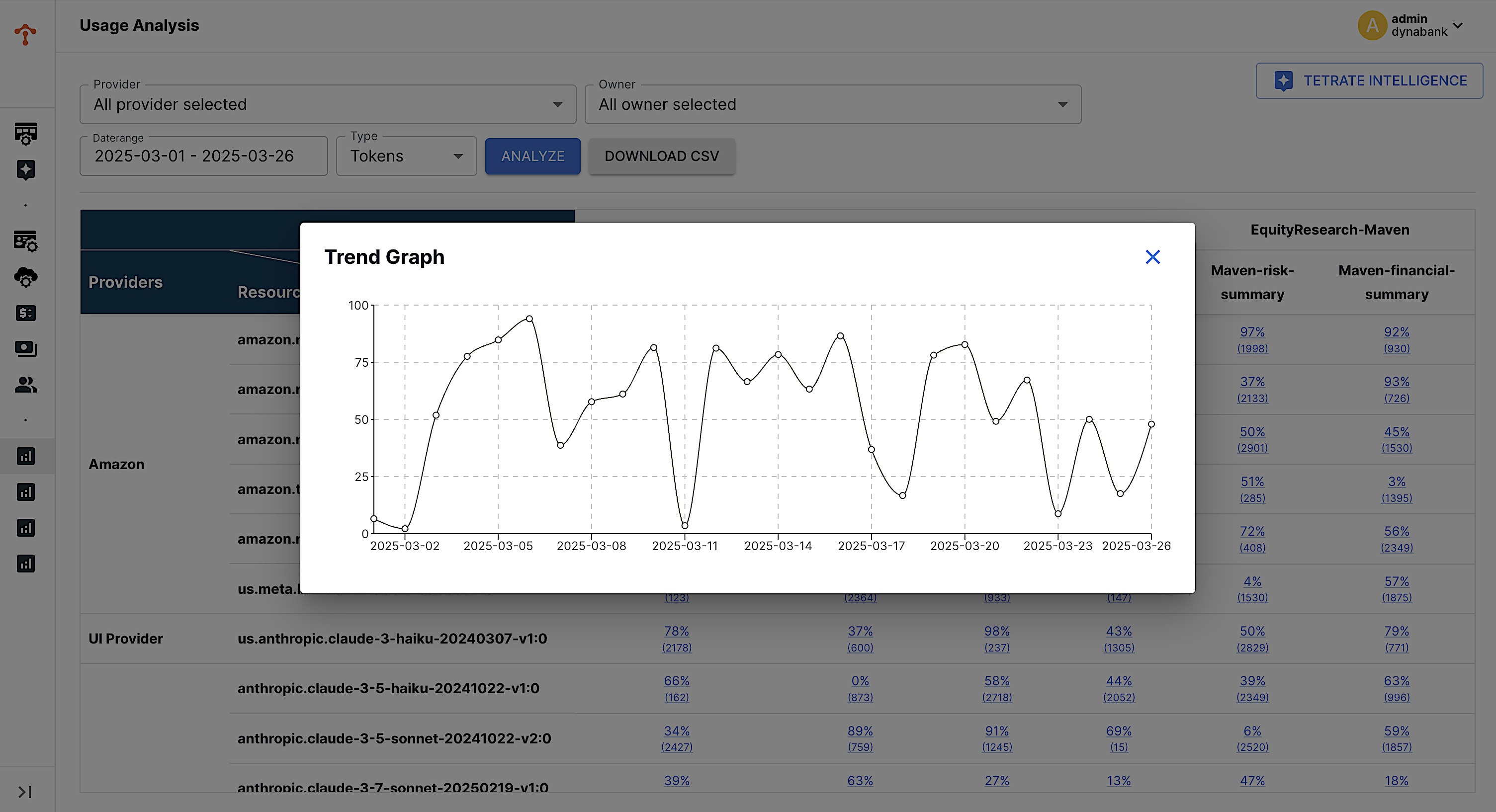

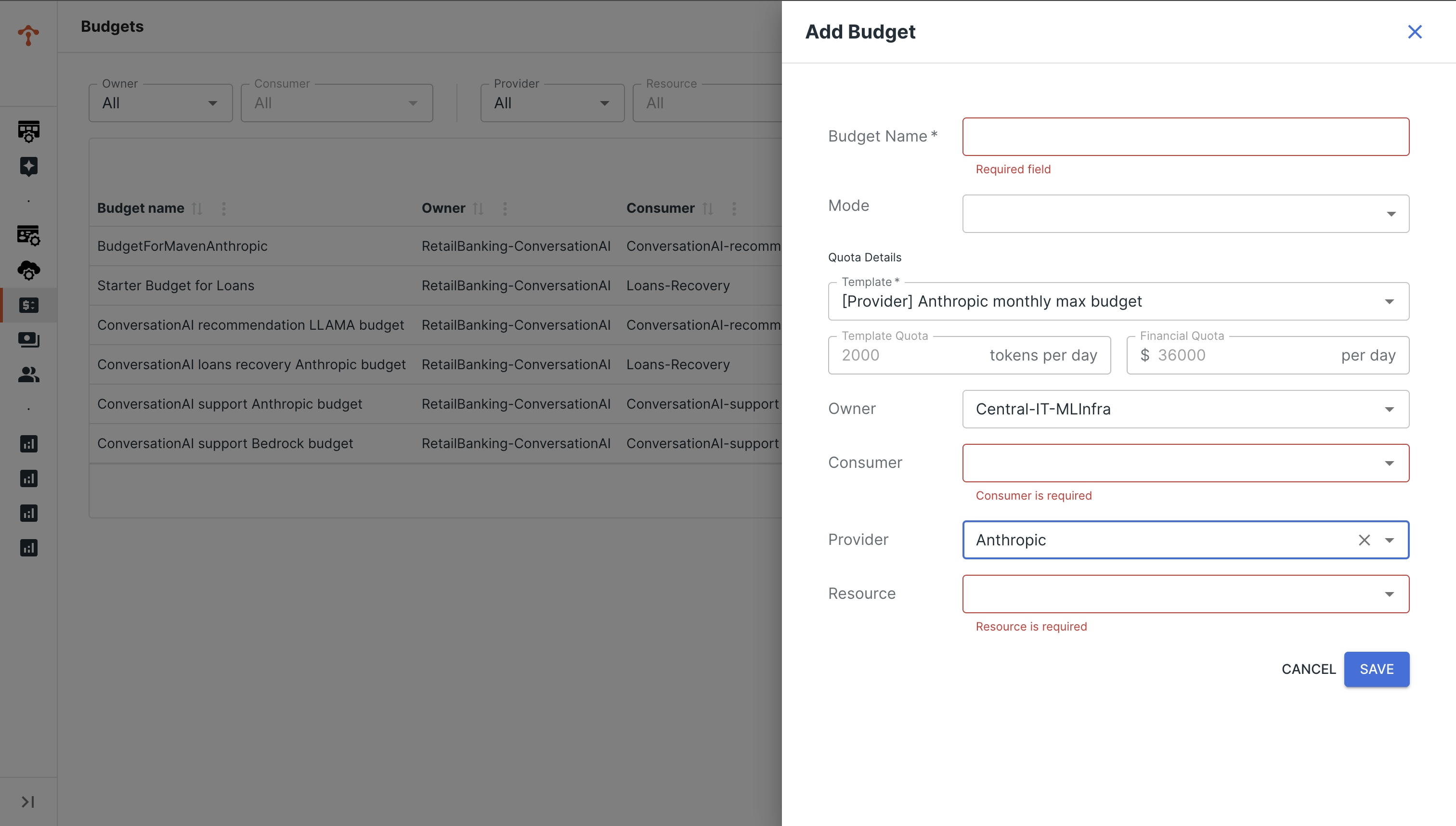

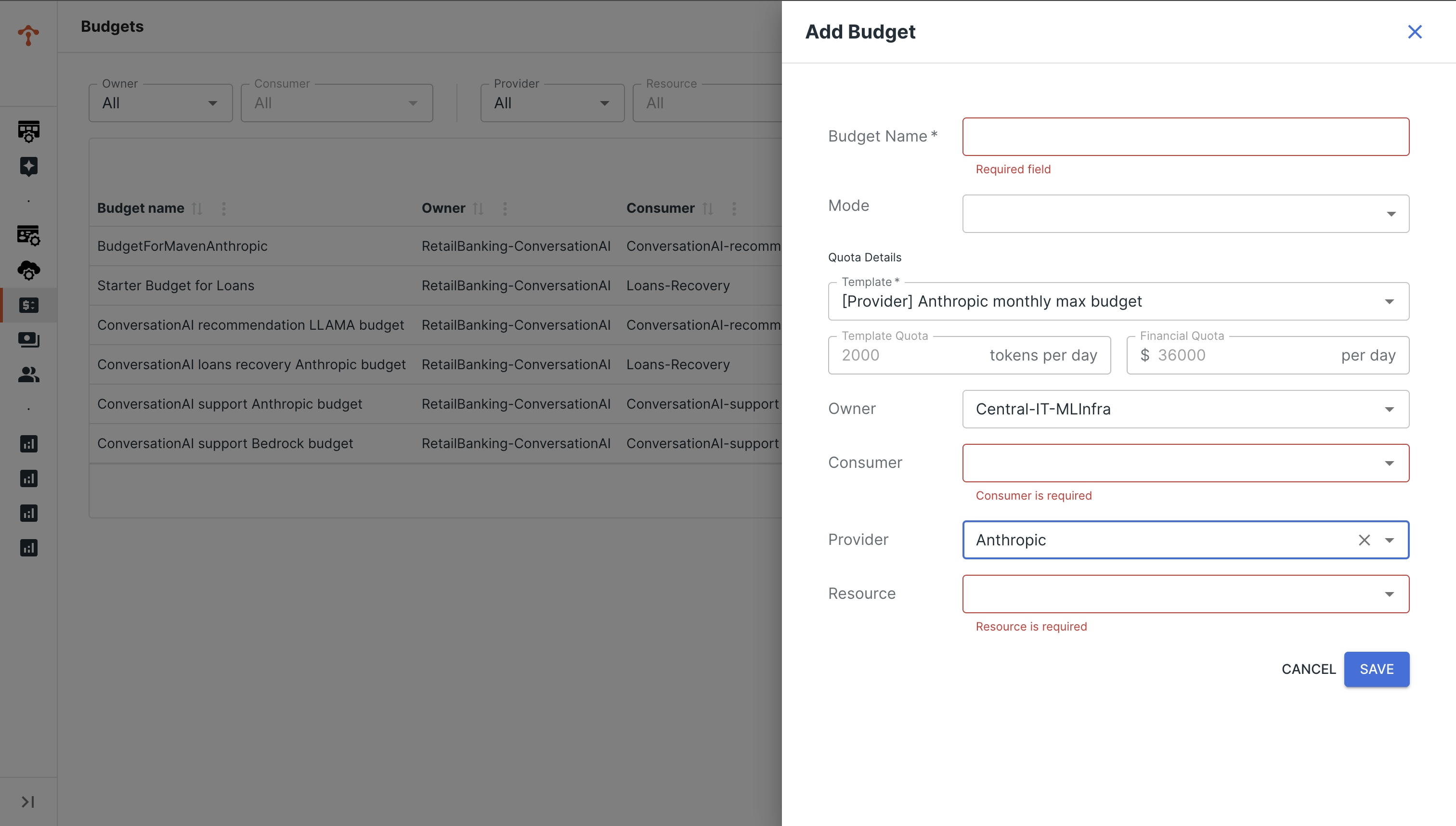

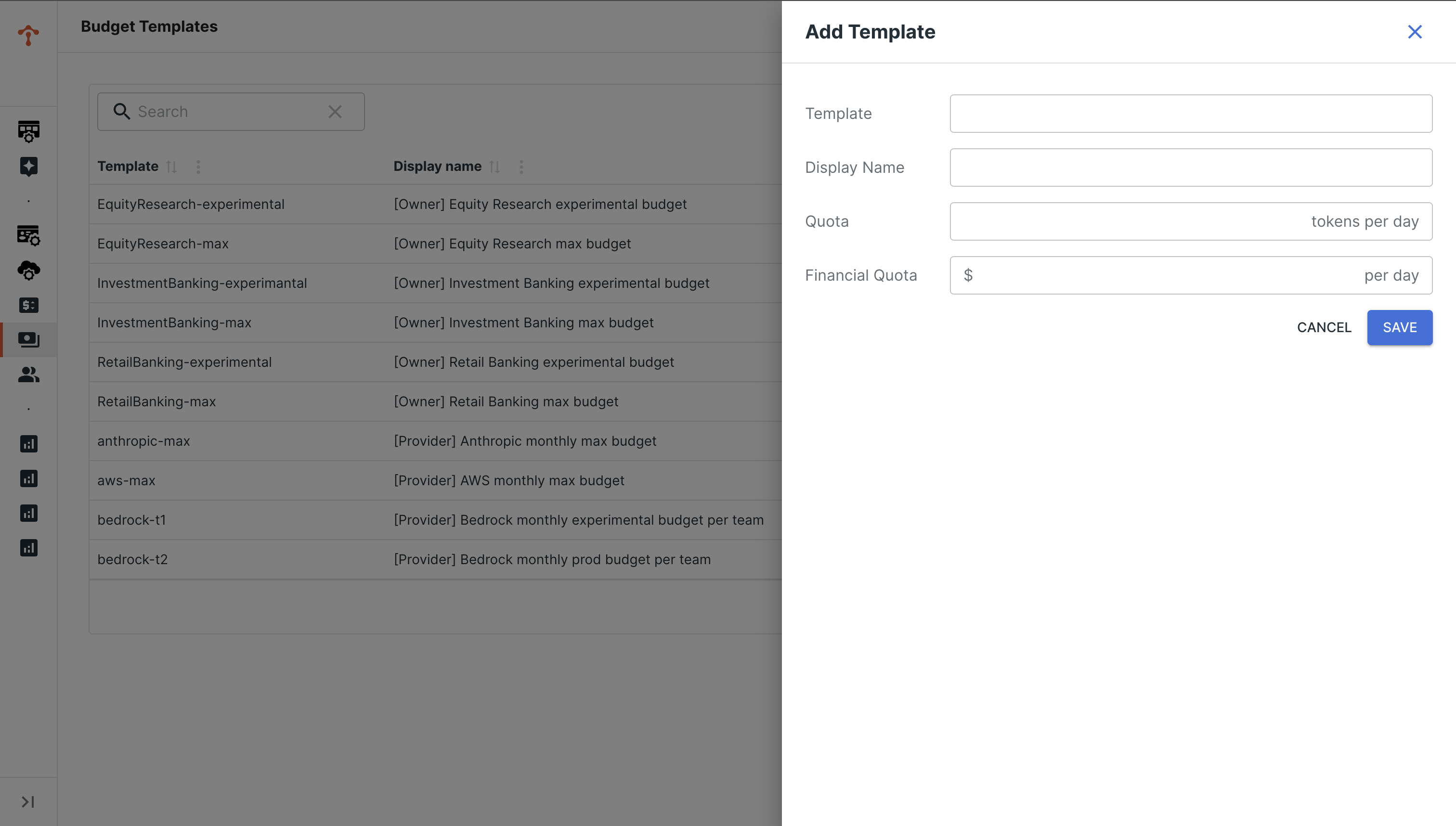

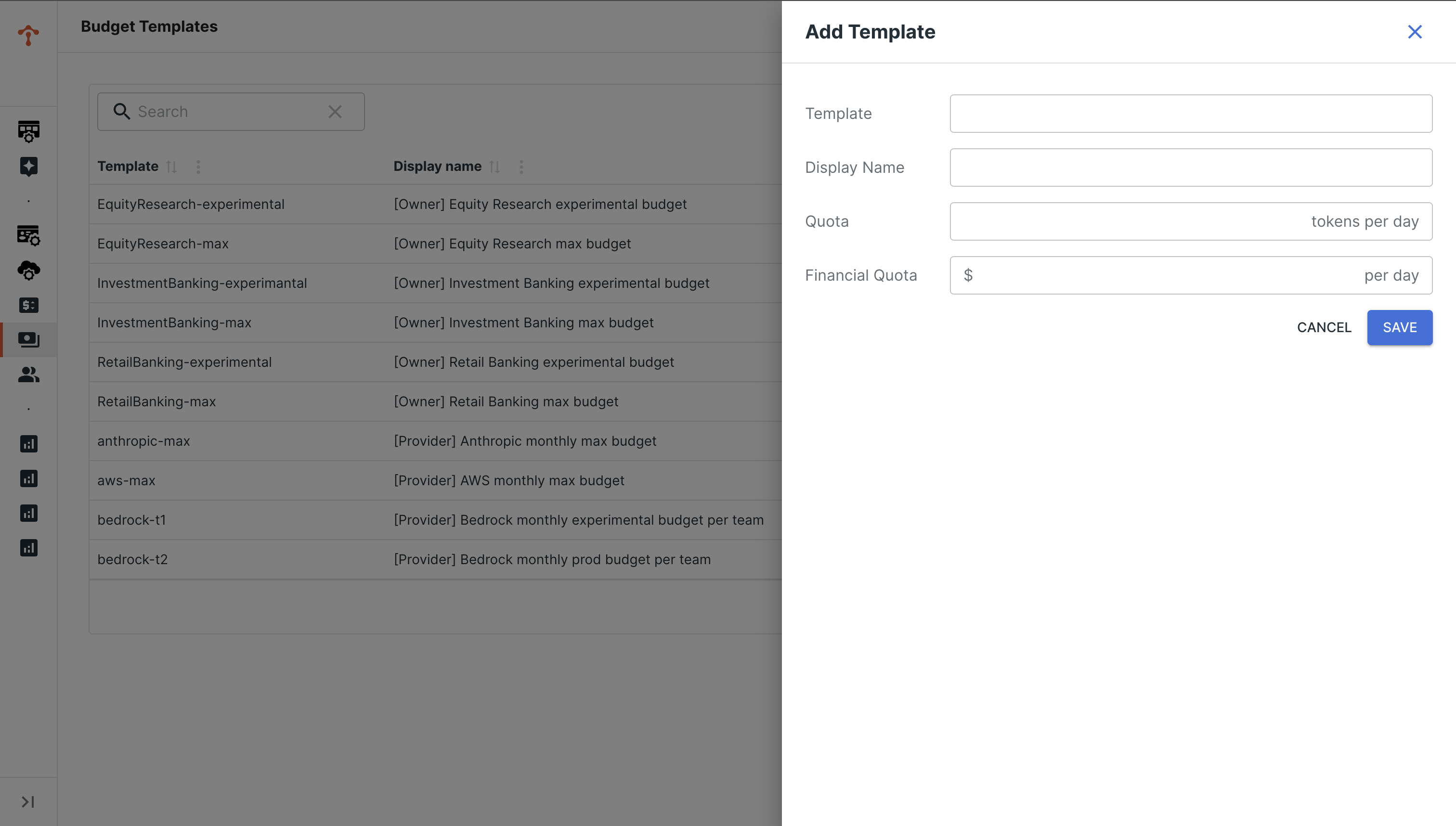

To enable granular analysis and control down to the model level, Tetrate Agent Operations Director introduces two constructs: Budgets and Budget Templates. A Budget governs the relationship between a consumer (an app or service with an owner) and a resource (a versioned model at a provider). In monitor mode, it allows central analysis of trends and usage drivers.

In enforcement mode, Budgets allow central governance of model consumption, starting with simple rate limiting by tokens or financial measures. Tetrate Agent Operations Director simplifies managing Budgets with Budget Templates, which are preset budgets that can be flexibly applied at scale.

Between the granularity of Budgets and the convenience of Budget Templates, Tetrate Agent Operations Director allows organizations to apply fine-grained controls, simply and at scale, to every discovered GenAI transaction.
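The difference between monitor and enforcement mode can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the product's API: the `Budget`, `BudgetTemplate`, and `Mode` names, their fields, and the token-only limit are hypothetical, and a real budget would also need a time window and possibly a financial limit.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    MONITOR = "monitor"  # record usage, never block
    ENFORCE = "enforce"  # block requests that would exceed the limit


@dataclass
class Budget:
    """Token budget for one consumer/resource pair (hypothetical shape)."""
    consumer: str
    resource: str
    token_limit: int
    mode: Mode = Mode.MONITOR
    used: int = 0

    def record(self, tokens: int) -> bool:
        """Return True if the request is allowed, and count its tokens if so."""
        over_limit = self.used + tokens > self.token_limit
        if over_limit and self.mode is Mode.ENFORCE:
            return False  # rejected requests do not consume budget
        self.used += tokens
        return True


@dataclass(frozen=True)
class BudgetTemplate:
    """Preset limit and mode that can be stamped onto many pairs."""
    token_limit: int
    mode: Mode

    def apply(self, pairs):
        """Create one Budget per (consumer, resource) pair."""
        return [Budget(c, r, self.token_limit, self.mode) for c, r in pairs]


if __name__ == "__main__":
    template = BudgetTemplate(token_limit=100, mode=Mode.ENFORCE)
    budgets = template.apply([("app-a", "provider-x/model-1"),
                              ("app-b", "provider-x/model-1")])
    print(budgets[0].record(60))  # within the limit
    print(budgets[0].record(60))  # would exceed the limit in enforce mode
```

In this sketch, switching a Budget from `Mode.MONITOR` to `Mode.ENFORCE` changes only whether an over-limit request is rejected, which mirrors the idea of observing usage first and governing it later.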

Future-Proofing Infrastructure

The Discovery Gateway and Agent Gateway components of Tetrate Agent Operations Director are both built on Envoy. Envoy has the advantage of being proven at scale over the years at many hyper-scale providers. The Agent Gateway is also built on Envoy AI Gateway, which was specifically designed with Envoy community members after their Python-based LLM gateways failed to scale in production.

The gateways are not the only components with proven scale. The management console of Tetrate Agent Operations Director is a Tetrate-managed component built on the same technology that has supported production traffic for years (for example, over 60% of production ingress traffic for a Fortune 500 financial services provider).

Every component of Tetrate Agent Operations Director is battle-tested and ready for enterprise-scale production deployment.

What’s Next

There’s a lot more to explore in Tetrate Agent Operations Director, including frequent enhancements after the initial release. We will be sharing more introductions to key concepts and deep dives in the coming weeks. Follow Tetrate on LinkedIn to get updates as they happen.

If you want to discuss how Tetrate can help your organization intelligently orchestrate inference traffic (or other regulated workload traffic), Tetrate Agent Operations Director is officially in technical preview. Contact us to get started!