Envoy Gateway Overview—Modern Kubernetes Ingress with Envoy Gateway and the Gateway API

Selecting the right networking tool in a Kubernetes environment is crucial. In a recent post, we’ve discussed the issues involved when choosing betwee

Selecting the right networking tool in a Kubernetes environment is crucial. In a recent post, we’ve discussed the issues involved when choosing between a gateway and. a service mesh and often that choice hinges on the type of network traffic you need to manage: north-south or east-west. For services primarily handling external requests, Envoy Gateway—the open source, cloud-native service gateway—and Tetrate’s enterprise-ready distribution, Tetrate Enterprise Gateway for Envoy, is the ideal choice for Kubernetes ingress. It not only efficiently manages traffic but also seamlessly integrates as you transition to a microservices architecture.

This article explores the advantages of deploying Envoy Gateway on Kubernetes, its relationship with other service mesh components, and why it’s the ideal choice for exposing services to the public internet.

Tetrate offers an enterprise-ready, 100% upstream distribution of Istio, Tetrate Istio Subscription (TIS). TIS is the easiest way to get started with Istio for production use cases. TIS+, a hosted Day 2 operations solution for Istio, adds a global service registry, unified Istio metrics dashboard, and self-service troubleshooting.

Core Features and Advantages of Envoy Gateway

Envoy Gateway offers several core features that make it a prominent choice for an API gateway:

- Simplified Configuration: Through direct integration with the Kubernetes Gateway API, Envoy Gateway allows developers to use Kubernetes custom resources to declaratively configure routing rules, security policies, and traffic management.

- Performance and Scalability: Built on battle-tested Envoy Proxy, it delivers outstanding performance and scalability, effortlessly handling thousands of services and millions of requests per second.

- Security Features: Built-in support for various security measures such as SSL/TLS termination, OAuth2, OIDC authentication, and fine-grained access control.

- Observability: Provides comprehensive monitoring capabilities including detailed metrics, logs, and tracing, crucial for diagnosing and understanding traffic behavior.

Relationship to the Gateway API

The introduction of the Gateway API in Kubernetes provides a powerful new way to integrate and configure ingress gateways, offering higher flexibility and functionality compared to traditional ingress. As discussed in this blog, the Gateway API simplifies gateway management, allowing developers to define custom routing rules, TLS termination policies, and traffic policies using Kubernetes-native resources.

The Kubernetes Gateway API serves as the cornerstone of Envoy Gateway, providing a more expressive, flexible, and role-based approach to configuring gateways and routes within the Kubernetes ecosystem. This API offers custom resource definitions (CRDs) such as GatewayClass, Gateway, HTTPRoute, etc. Envoy Gateway utilizes these resources to create a user-friendly and consistent configuration model that aligns with Kubernetes’ native principles.

Overview of Envoy Gateway Architecture

The architecture of Envoy Gateway is designed to be lightweight and concise. It consists of a control plane that dynamically configures an Envoy proxy running as the data plane. This separation of concerns ensures that the gateway can scale with increasing traffic without affecting the efficiency of the control plane.

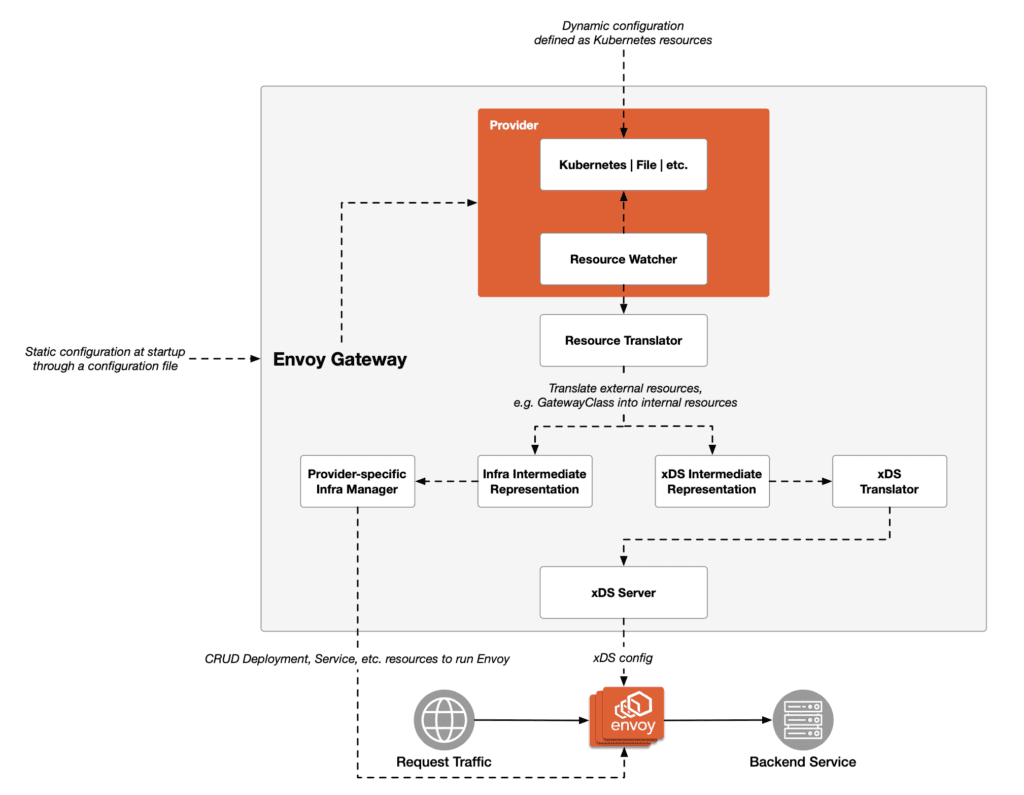

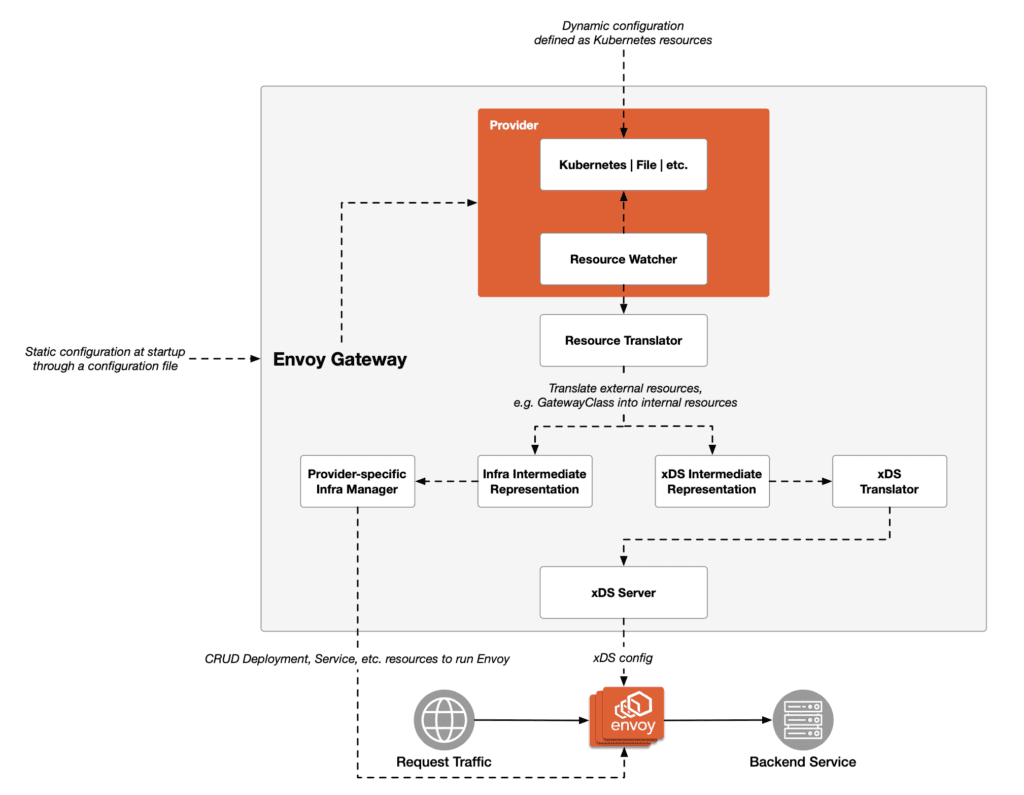

The architecture diagram of Envoy Gateway is shown below.

At the core of this architecture is the Envoy Gateway, which is an instance of the Envoy proxy responsible for handling all traffic in and out of the Kubernetes cluster. Upon initial startup, Envoy Gateway provides static configuration through configuration files, establishing the basic parameters of its operation.

The dynamic aspect of Envoy Gateway configuration is handled by providers, which define the interaction between the gateway and Kubernetes or other dynamic configuration input sources. The resource monitor is responsible for monitoring changes to Kubernetes resources, with particular attention to CRUD operations related to custom resource definitions (CRDs).

As changes occur, resource transformers intervene to translate these external resources into a form understandable by Envoy Gateway. This transformation process is further facilitated by provider-specific infrastructure managers, who are responsible for managing resources related to specific clouds or infrastructure providers, shaping the infrastructure into an intermediate representation crucial for the gateway’s functionality.

This intermediate representation then transforms into the xDS intermediate representation, serving as the precursor to the final xDS configuration understood and executed by Envoy. The xDS translator plays the role of converting this intermediate representation into specific xDS configurations.

These configurations are delivered and executed by xDS servers, which act as services diligently managing Envoy instances based on the xDS configurations they receive. As the actual running proxy, Envoy ultimately receives these configurations from xDS servers, interprets them, and implements them to effectively manage traffic requests.

Ultimately, all requests are redirected to the final destination of Envoy Gateway routes for traffic, which are the backend services.

Overview of Envoy Gateway and Its Role in Service Mesh

Envoy Gateway is a Kubernetes-native API gateway built around Envoy Proxy. It aims to lower the barrier for users adopting Envoy as an API gateway and lays the foundation for vendors to build value-added products like Tetrate Enterprise Gateway for Envoy.

Envoy Gateway is not only an ideal choice for managing north-south traffic but also serves as a crucial component for connecting and securing services within the service mesh. It enhances communication efficiency and security among microservices by providing features such as secure data transmission, traffic routing, load balancing, and fault recovery. Leveraging its built-in Envoy Proxy technology, Envoy Gateway can handle a large number of concurrent connections and complex traffic management policies while maintaining low latency and high throughput.

Furthermore, the tight integration of Envoy Gateway with the Kubernetes Gateway API allows for declarative configuration and management, significantly simplifying the deployment and update processes of gateways within the service mesh. This integration not only improves operational efficiency but also enables Envoy Gateway to seamlessly collaborate with solutions like Istio without adding extra complexity.

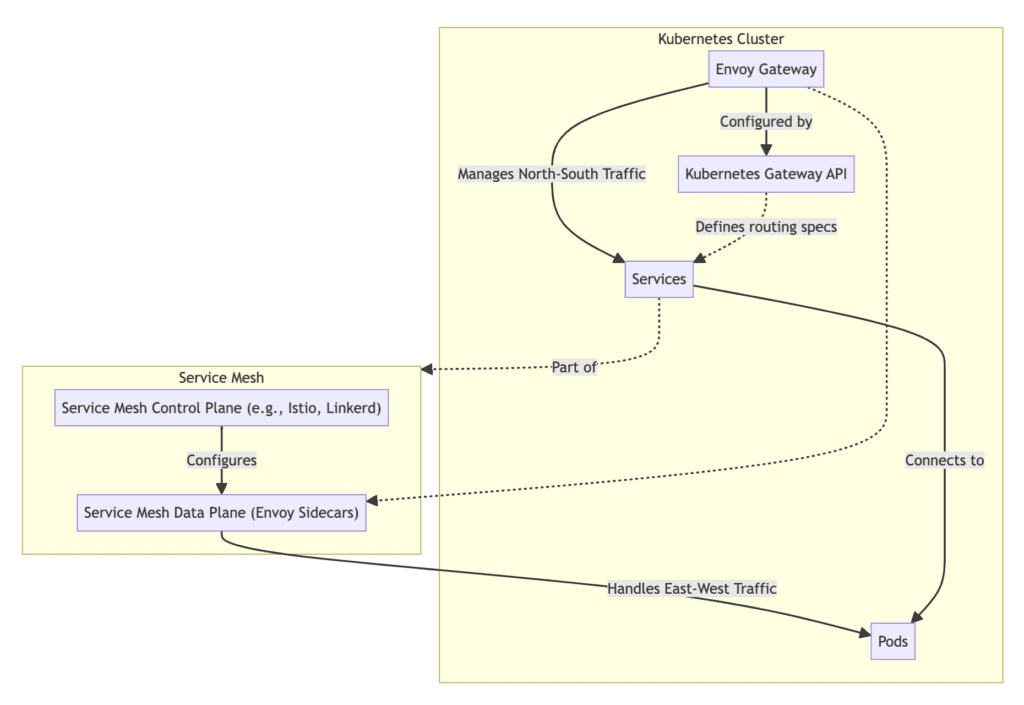

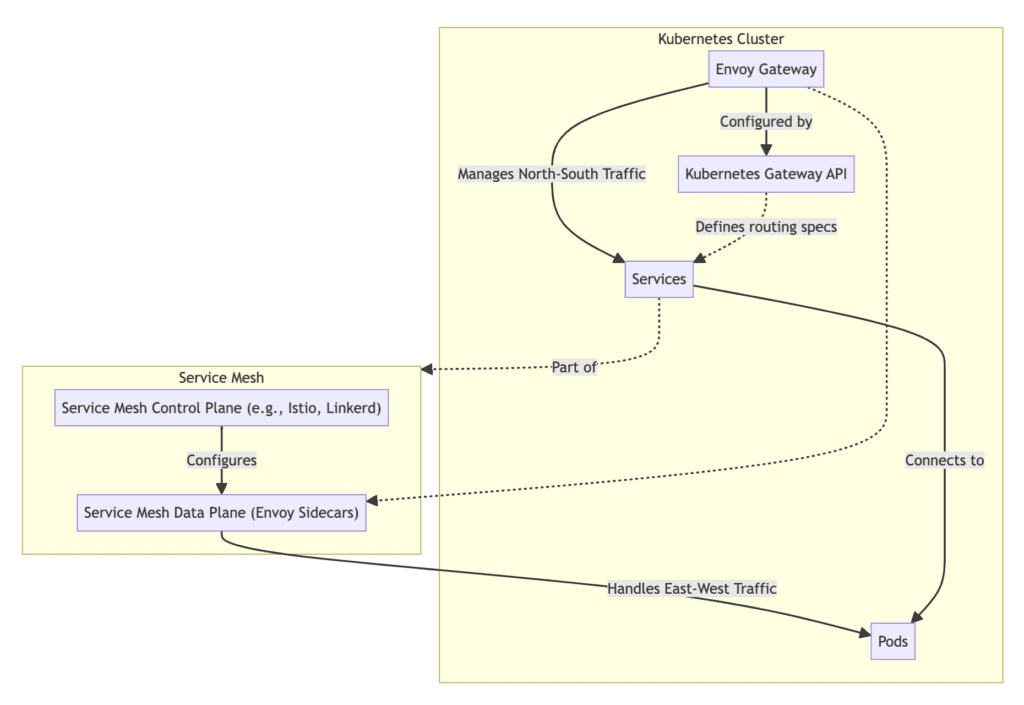

The figure below illustrates the relationship between Envoy Gateway and the service mesh.

In a Kubernetes cluster, Envoy Gateway is responsible for managing north-south traffic, i.e., traffic entering and leaving the cluster, and is configured through the Kubernetes Gateway API, which defines routing specifications for services. Services within the cluster directly connect to pods. In the service mesh, the control plane (e.g., Istio or Linkerd) configures Envoy sidecars in the data plane, which handles east-west traffic within the cluster. In this system, Envoy Gateway can collaborate with the service mesh, but they independently manage traffic in different directions.

Think of Envoy Gateway as the main entry point to a city (e.g., customs), where all traffic (like various vehicles) must pass through. It acts as a strict gatekeeper, responsible for inspection and guidance, ensuring each packet (like each passenger) is accurately delivered to its destination. In the city of Kubernetes, Envoy Gateway manages all inbound traffic, ensuring data flows securely and efficiently into the city and is accurately delivered to services within the city.

Once inside the city, the service mesh takes over, acting as a series of transportation networks within the city. Envoy sidecars in the service mesh are like taxis or buses within the city, responsible for transporting packets from the port to their specific destinations within the city. Envoy Gateway ensures smooth entry for external requests, and then the service mesh efficiently handles these requests within the cluster.

The support for Kubernetes Gateway API by Envoy Gateway can be seen as a significant upgrade to our city’s traffic signal system. It not only provides clearer and more personalized guidance for incoming data flows but also makes the entire city’s traffic operations more intelligent.

Comparison with Other Gateway Options

Compared to other popular solutions such as Istio’s Ingress Gateway or NGINX Ingress, Envoy Gateway stands out with its native integration with Kubernetes and its focus on leveraging the full potential of Envoy. The table below compares various open-source API gateways from multiple aspects.

Image could not be loaded Image could not be loaded in lightbox

To quickly get started with Envoy Gateway, you can set up a local experimental environment using the following simplified steps. First, start a local Kubernetes cluster:

minikube start --driver=docker --cpus=2 --memory=2gNext, deploy the Gateway API CRD and Envoy Gateway itself:

helm install eg oci://docker.io/envoyproxy/gateway-helm --version v1.0.1 -n envoy-gateway-system --create-namespaceThen, install the gateway configuration and deploy a sample application:

kubectl apply -f https://github.com/envoyproxy/gateway/releases/download/v1.0.1/quickstart.yaml -n defaultTo expose the LoadBalancer service, here we use port forwarding as an example. You can also choose to use minikube tunnel or install MetalLB as a load balancer:

export ENVOY_SERVICE=$(kubectl get svc -n envoy-gateway-system --selector=gateway.envoyproxy.io/owning-gateway-namespace=default,gateway.envoyproxy.io/owning-gateway-name=eg -o jsonpath='{.items[0].metadata.name}')

kubectl -n envoy-gateway-system port-forward service/${ENVOY_SERVICE} 8888:80 &Test if your Envoy Gateway is working properly with the following command:

curl --verbose --header "Host: www.example.com" http://localhost:8888/getFor more detailed installation and configuration steps, visit the Envoy Gateway website. With these steps, you can quickly start exploring the capabilities of Envoy Gateway.

Conclusion

Envoy Gateway not only optimizes Layer 7 gateway configuration in the cloud-native era but also provides a smooth transition from edge gateways to service meshes. As the promotion of service meshes faces some challenges, such as the intrusiveness to applications and issues driven by operations teams, edge gateways are more readily accepted by development teams. Envoy Gateway, with its simplified Kubernetes Gateway API, enhances traffic management and observability capabilities. Additionally, the transition from Envoy Gateway to Istio is a confident technical advancement for teams already familiar with Envoy features, supporting seamless switching from the standard Kubernetes Gateway API to Istio Ingress Gateway or continuing to collaborate with Istio as a custom solution. These features make Envoy Gateway a gateway choice worth investing in the cloud-native era.

Stay tuned for the subsequent parts of this blog series, where we will delve into configuring and optimizing Envoy Gateway, providing practical guides, and showcasing a wider range of real-world use cases.