How Service Mesh Layers Microservices Security with Traditional Security to Move Fast Safely

This is the first in a series of service mesh best practices articles excerpted from Tetrate’s forthcoming book, Istio in Production by Tetrate founding engineer Zack Butcher.

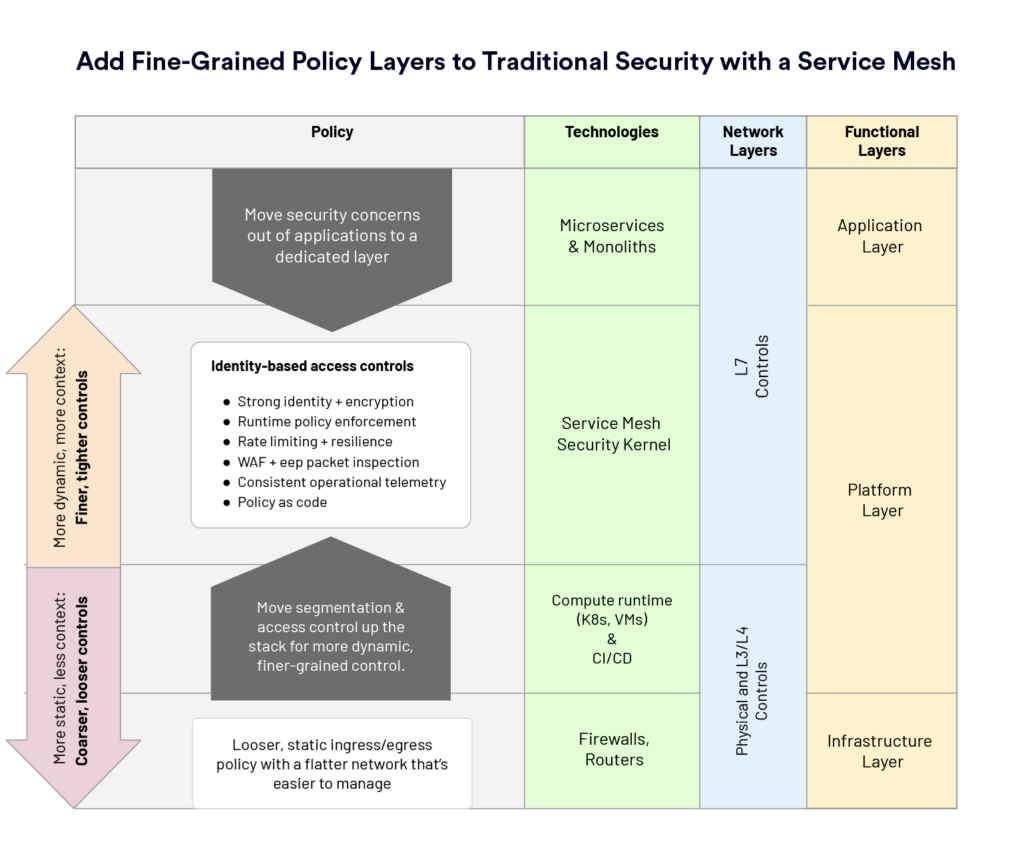

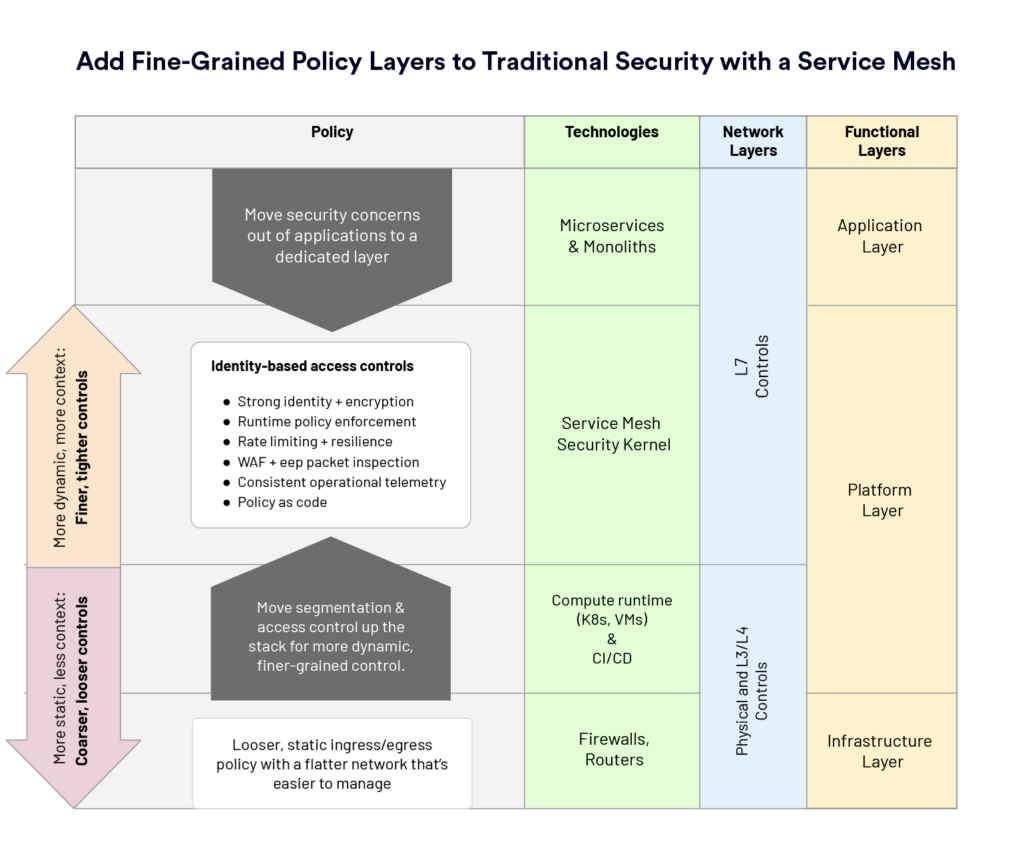

One of the biggest questions we get from enterprises implementing the mesh is “which controls do I still need, and which does the mesh provide?” In other words, they’re wondering how the mesh fits into an existing security model. We’ve seen that the mesh is most effective as the inner ring in a concentric set of security controls implemented at each layer from the physical network up to the application itself.

Service Mesh as a Universal Policy Enforcement Point

We see the mesh’s sidecar acting as a universal policy enforcement point (NIST SP 800-204B: Attribute-based Access Control for Microservices-based Applications using a Service Mesh). Because it intercepts all traffic in and out of the application, the sidecar gives us a powerful location to implement all kinds of policy. We can implement traditional security policies like application-to-application authorization with higher assurance (based on application identity rather than network location). But we can also implement policies that were not practical before, or that required deep involvement with the application. For example, the mesh allows you to write policies like: “the backend can read from the database (authenticated and authorized with application-level identity), but only if the request has a valid end-user credential with the read scope (authenticated and authorized with end-user identity).”
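To make the example policy concrete, here is a minimal sketch of the decision a policy enforcement point has to make when it checks both identities. It is a toy model, not the mesh's policy API: the `Request` and `Rule` types, the `is_allowed` function, and the service names are all hypothetical, and it assumes the peer certificate and the end-user credential have already been validated.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Request:
    # Service identity taken from the caller's validated certificate;
    # None models an unauthenticated peer. (Hypothetical field names.)
    source_service: Optional[str]
    # Scopes carried by an already-validated end-user credential.
    user_scopes: FrozenSet[str] = frozenset()


@dataclass(frozen=True)
class Rule:
    allowed_source: str   # which service may call
    required_scope: str   # which end-user scope the request must carry


def is_allowed(rule: Rule, request: Request) -> bool:
    """Allow only if BOTH the service identity and the end-user scope match."""
    service_ok = request.source_service == rule.allowed_source
    user_ok = rule.required_scope in request.user_scopes
    return service_ok and user_ok


# "The backend can read from the database, but only with the read scope."
db_read_rule = Rule(allowed_source="backend", required_scope="read")

print(is_allowed(db_read_rule, Request("backend", frozenset({"read"}))))
print(is_allowed(db_read_rule, Request("backend", frozenset())))
print(is_allowed(db_read_rule, Request("frontend", frozenset({"read"}))))
```

The point of the sketch is that neither identity is sufficient on its own: the right service without the end-user scope is rejected, and so is the right scope arriving from the wrong service.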

While the service mesh provides a strong, dynamic, and consistent security baseline you can build your application security model on, the mesh itself can never provide one hundred percent of the runtime security an application needs. For example, because the sidecar sits in user space, the mesh is not as effective as traditional firewall mechanisms at mitigating many types of network denial of service attacks. On the other hand, the mesh is more effective at mitigating many application-level denial of service attacks than traditional infrastructure for the same reason.

A Powerful Middle Layer to Enable Agility up the Stack

The mesh sits as a powerful middle layer in the infrastructure: above the physical network and L3/L4 controls you implement, but under the application. Because fine-grained controls are enforced at this higher layer, the more brittle, slower-to-change lower layers can be configured more loosely, which allows more agility up the stack.

The primary security capabilities the mesh provides are:

- A runtime identity as an X.509 certificate that is used for encryption in transit as well as authentication and authorization of service-to-service communication.

- A policy enforcement point to implement consistent end-user authentication and authorization for all applications in the mesh.

- Runtime policy enforcement over both service identity and end-user identity (“A can talk to B only with a valid end-user credential with the read scope”).

- Rate limiting and resiliency features to mitigate common application-level denial of service attacks, as well as to protect against common cascading failure modes.

- WAF and other deep packet inspection capabilities for internal traffic, not just at the edge.

- Consistent operational telemetry from all applications in the mesh that can be used to understand, implement, and audit security policies.

- Policy-as-code model with dynamic runtime update, independent of application lifecycle.
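As an illustration of the rate limiting capability in the list above, the following is a minimal sketch of a token bucket, one common way to bound request rates. It is a generic version of the technique and not the mesh's implementation; the `TokenBucket` class and its parameters are hypothetical, and the clock is passed in explicitly so the behavior is deterministic.

```python
class TokenBucket:
    """Generic token bucket: refills at a fixed rate, up to a burst capacity."""

    def __init__(self, rate_per_sec: float, burst: int, now: float = 0.0):
        self.rate = rate_per_sec      # tokens added per second
        self.capacity = float(burst)  # maximum tokens the bucket can hold
        self.tokens = float(burst)    # start full so an initial burst is allowed
        self.last = now               # time of the last refill

    def allow(self, now: float) -> bool:
        """Return True if a request arriving at time `now` may proceed."""
        if now > self.last:
            # Refill for the elapsed time, never exceeding capacity.
            elapsed = now - self.last
            self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
            self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


bucket = TokenBucket(rate_per_sec=1.0, burst=2)
decisions = [bucket.allow(0.0), bucket.allow(0.0), bucket.allow(0.0), bucket.allow(1.0)]
print(decisions)  # [True, True, False, True]
```

A burst of two requests is admitted, the third is rejected, and one more is admitted after a second of refill. Applying a limit like this per service is what keeps a single noisy caller from exhausting a shared dependency.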

Service Mesh as Part of Layered Defense in Depth

Given the security capabilities of the mesh, we believe it makes the most sense for organizations to adopt it as part of a layered defense-in-depth approach.

Agility at Layer 3: Coarse-Grained Ingress and Egress Policy with Fine-Grained Control at L7

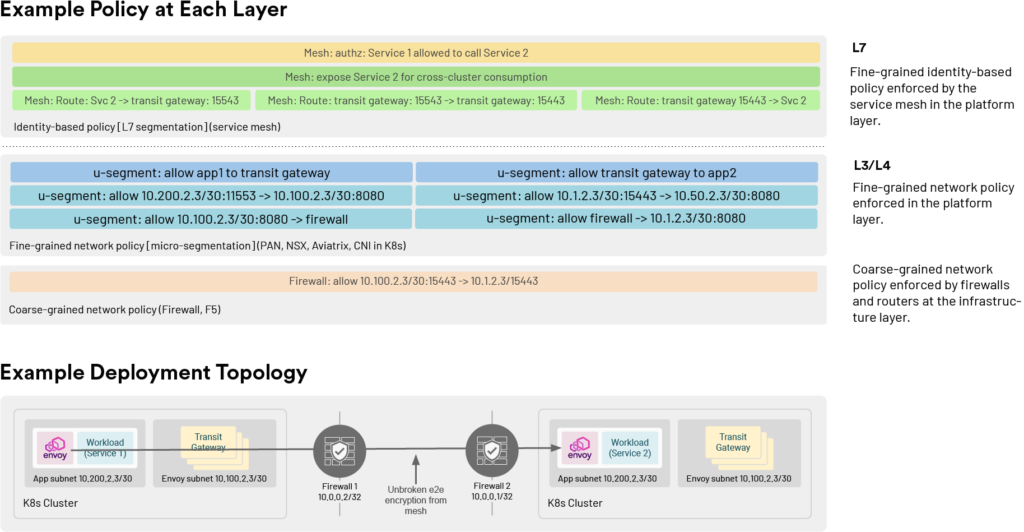

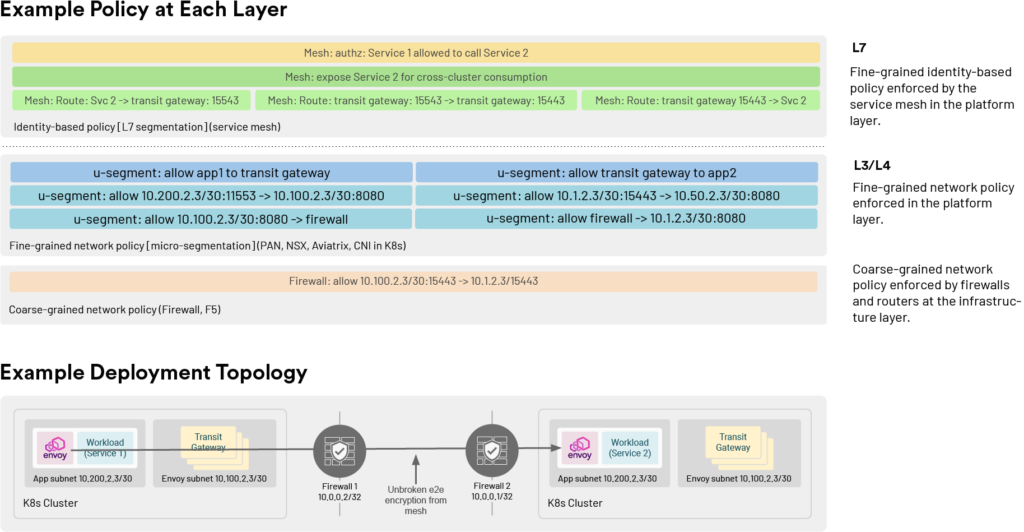

L3 controls like firewalls at the edge remain effective for coarse-grained ingress and egress policy, but they often slow application development. Since the mesh provides fine-grained service-to-service authorization, policies at L3 can be made broader, giving more agility to platform, security, and application teams.

Implement access controls to external services. The mesh’s egress proxy is especially powerful for implementing application-to-external-service controls when only the egress proxy itself is allow-listed by the outbound firewall. This gives platform and security teams a great deal of agility in administering which applications are allowed to communicate outside the enterprise’s infrastructure, while maintaining the existing perimeter-based model.
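The division of labor between the firewall and the egress proxy can be sketched as two separate checks. This is an illustrative model only: the proxy address, the service names, the hostnames, and both functions are hypothetical and do not correspond to any mesh or firewall configuration format.

```python
# Hypothetical address of the mesh egress proxy: the ONLY source the
# outbound firewall allow-lists.
EGRESS_PROXY_IP = "10.0.0.10"

# Hypothetical per-application egress policy enforced at the proxy:
# which service identity may reach which external hosts.
EGRESS_POLICY = {
    "payments": {"api.payment-provider.example"},
    "reporting": {"storage.example", "mail.example"},
}


def firewall_allows(source_ip: str) -> bool:
    """Coarse-grained L3 rule: only the egress proxy may leave the perimeter."""
    return source_ip == EGRESS_PROXY_IP


def proxy_allows(source_service: str, destination_host: str) -> bool:
    """Fine-grained rule at the proxy: per-application destination allow-list."""
    return destination_host in EGRESS_POLICY.get(source_service, set())


# A workload that bypasses the proxy is stopped by the firewall.
print(firewall_allows("10.0.3.7"))
# Traffic through the proxy is decided per application.
print(proxy_allows("payments", "api.payment-provider.example"))
print(proxy_allows("payments", "mail.example"))
```

In this model, changing which application may reach which external service only touches `EGRESS_POLICY`; the firewall rule stays the same.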

Replace “reachability as authorization” with encryption and dynamic access control. The mesh can begin to replace VPNs and IPsec-based “reachability is authorization” network models by providing encryption in transit as well as authentication and authorization per application rather than per host.

Refinement at Layer 4: Micro-Segmentation in a Flatter Network That’s Easier to Manage

Controls like micro-segmentation can be further refined with the mesh: while we might allow entire (small) subnets to communicate in a segmentation-based strategy, we can control individual service-to-service communication per method and verb with the mesh.
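The difference in granularity can be sketched by putting the two kinds of rule side by side. This is a toy comparison under stated assumptions: the subnets, the service names, the paths, and both functions are hypothetical, and the sketch treats "method and verb" as an HTTP verb plus a request path.

```python
import ipaddress

# Segmentation-style rule: one (small) subnet may talk to another.
SEGMENT_RULES = [
    (ipaddress.ip_network("10.1.0.0/28"), ipaddress.ip_network("10.2.0.0/28")),
]

# Mesh-style rule: a specific service may call specific verbs and paths
# on another specific service.
MESH_RULES = {
    ("frontend", "orders"): {("GET", "/orders")},
}


def segment_allows(src_ip: str, dst_ip: str) -> bool:
    """Allow if the source and destination fall in an allowed subnet pair."""
    src = ipaddress.ip_address(src_ip)
    dst = ipaddress.ip_address(dst_ip)
    return any(src in s and dst in d for s, d in SEGMENT_RULES)


def mesh_allows(source: str, destination: str, verb: str, path: str) -> bool:
    """Allow only the exact (verb, path) pairs granted to this service pair."""
    return (verb, path) in MESH_RULES.get((source, destination), set())


# Every host in the source subnet can reach every host in the destination subnet.
print(segment_allows("10.1.0.3", "10.2.0.5"))
# The mesh rule distinguishes individual operations between two services.
print(mesh_allows("frontend", "orders", "GET", "/orders"))
print(mesh_allows("frontend", "orders", "DELETE", "/orders"))
```

The subnet rule says nothing about which workload is calling or what it is asking for, while the mesh rule allows the read and denies the delete between the same two services.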

Complement existing micro-segmentation while flattening the network. By offering fine-grained service-to-service controls, the mesh tends to complement existing micro-segmentation strategies, while enabling the adoption of flatter networks where it’s easier for the organization to manage (for example, in cloud environments).

Augmentation at Layer 7: Edge and Access Controls, Everywhere

Provide edge controls for all traffic. Layer 7 controls like web application firewalls (WAFs) as well as “API gateway” functionality like rate limiting are almost always implemented at the edge. The mesh can augment these existing deployments by enabling the same functionality for all traffic in the mesh, including internal, “east-west” communication.

Simplify access control for applications. In addition to making edge controls ubiquitous, the mesh can perform end-user authentication and coarse-grained authorization before applications ever see the request, vastly simplifying the access control that needs to be performed by the application itself. In the future, we’ll see more and more access control functionality migrate from applications into the mesh.
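A rough sketch of what "before applications ever see the request" means in practice: a wrapper authenticates the caller and hands the application only a verified user. Everything here is an assumption for illustration. The token format, the shared `SECRET`, and the function names are hypothetical, and the HMAC-signed token stands in for whatever credential format and verification keys a real deployment uses.

```python
import hashlib
import hmac
from typing import Callable, Optional, Tuple

SECRET = b"demo-only-secret"  # hypothetical key, for illustration only

Response = Tuple[int, str]


def sign(user: str) -> str:
    """Issue a toy credential of the form '<user>.<hex mac>'."""
    mac = hmac.new(SECRET, user.encode(), hashlib.sha256).hexdigest()
    return f"{user}.{mac}"


def authenticate(token: str) -> Optional[str]:
    """Return the user name if the token's MAC verifies, else None."""
    user, sep, mac = token.rpartition(".")
    if not sep or not user:
        return None
    expected = hmac.new(SECRET, user.encode(), hashlib.sha256).hexdigest()
    # Constant-time comparison to avoid leaking how much of the MAC matched.
    if hmac.compare_digest(mac.encode(), expected.encode()):
        return user
    return None


def with_sidecar_auth(app: Callable[[str, str], Response]) -> Callable[[str, str], Response]:
    """Model the sidecar: reject unauthenticated requests before the app runs."""
    def handle(token: str, path: str) -> Response:
        user = authenticate(token)
        if user is None:
            return 401, "unauthenticated"
        return app(user, path)
    return handle


def app(user: str, path: str) -> Response:
    # The application contains no credential parsing; it trusts the verified user.
    return 200, f"hello {user} from {path}"


handler = with_sidecar_auth(app)
print(handler(sign("alice"), "/profile"))
print(handler("alice.forged", "/profile"))
```

The application function never parses or verifies a credential. That is the simplification the paragraph above describes: authentication moves into a layer in front of the application, leaving the application to handle only the finer-grained decisions that need its own context.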

Parting Thoughts and What’s Next

We believe service mesh best fits into an existing security model by layering finer-grained security policy above traditional security controls. As a universal policy enforcement point, the mesh affords looser policy at lower layers where change is hardest, pushing agility up the stack where more context allows for more specific controls at higher layers. This powerful added security layer makes sense for most organizations to adopt as part of a layered defense-in-depth approach.

Next up: Service Mesh Deployment Best Practices

The next installment of our series of blog posts on service mesh best practices is on deployment topology. There are a few moving pieces when it comes to a service mesh deployment in a real infrastructure across many clusters. In our next post, we take a closer look at:

- How control planes should be deployed near applications.

- How ingresses should be deployed to facilitate safety and agility.

- How to facilitate cross-cluster load balancing using Envoy.

- What certificates should look like inside the mesh.

If you’re new to service mesh and Kubernetes security, we have a bunch of free online courses available at Tetrate Academy that will quickly get you up to speed with Istio and Envoy.

If you’re looking for a fast way to get to production with Istio, check out Tetrate Istio Distribution (TID), Tetrate’s hardened, fully upstream Istio distribution, with FIPS-verified builds and support available. It’s a great way to get started with Istio knowing you have a trusted distribution to begin with, an expert team supporting you, and also have the option to get to FIPS compliance quickly if you need to.

As you add more apps to the mesh, you’ll need a unified way to manage those deployments and to coordinate the mandates of the different teams involved. That’s where Tetrate Service Bridge comes in. Learn more about how Tetrate Service Bridge makes service mesh more secure, manageable, and resilient, or contact us for a quick demo.