CNI Essentials: Kubernetes Networking under the Hood

Effective management of networking is crucial in containerized environments. The Container Network Interface (CNI) is a standard that defines how containers should be networked. This article delves into the fundamentals of CNI and explores its relationship with CRI.

## Key Points of the CNI Specification

- CNI is a plugin-based networking solution for containers.

- CNI plugins are executable files.

- Each CNI plugin has a single, well-defined responsibility.

- Multiple CNI plugins can be invoked in sequence as a chain.

- The CNI specification treats a container as equivalent to a Linux network namespace.

- CNI network configurations are written in JSON.

- The network configuration is passed to a plugin on STDIN, so the plugin does not need to read configuration files from the host itself. Other runtime parameters, such as the operation to perform and the path of the container's network namespace, are passed via environment variables.
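The split between STDIN and environment variables is easiest to see from the runtime side of a single call. The Python sketch below prepares one plugin invocation: the `CNI_*` variable names and the JSON-on-STDIN convention come from the CNI specification, while the helper names, the `/opt/cni/bin` directory, the namespace path, and the sample configuration are illustrative assumptions.

```python
import json
import os
import subprocess

# Hypothetical helpers; only the CNI_* names and the STDIN convention
# are taken from the CNI specification.


def build_invocation(command, container_id, netns, ifname, plugin_conf,
                     cni_path="/opt/cni/bin"):
    """Return (env, stdin_bytes) for a single CNI plugin call."""
    env = {
        "CNI_COMMAND": command,           # ADD, DEL, CHECK, GC, or VERSION
        "CNI_CONTAINERID": container_id,  # identifies the container
        "CNI_NETNS": netns,               # path to the container's network namespace
        "CNI_IFNAME": ifname,             # interface name to create inside it
        "CNI_PATH": cni_path,             # plugin search path (list of directories)
    }
    # The network configuration is not a file argument: it goes on STDIN.
    stdin = json.dumps(plugin_conf).encode()
    return env, stdin


def invoke_plugin(binary, env, stdin):
    """Execute a plugin binary; its result (or error) comes back as JSON on STDOUT."""
    proc = subprocess.run([binary], input=stdin, env={**os.environ, **env},
                          capture_output=True, check=False)
    return proc.returncode, json.loads(proc.stdout or b"{}")


# Example: what a runtime might prepare for an ADD (the binary is not run here).
conf = {"cniVersion": "1.0.0", "name": "mynet", "type": "bridge",
        "ipam": {"type": "host-local", "subnet": "10.22.0.0/16"}}
env, stdin = build_invocation("ADD", "example-container",
                              "/var/run/netns/example", "eth0", conf)
```

A real runtime would then call something like `invoke_plugin(os.path.join(cni_path, conf["type"]), env, stdin)`, locating the binary by the configuration's `type` field.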

A CNI plugin receives the requested operation and its network configuration, performs the corresponding network setup or cleanup, and returns the result. Because the container runtime invokes plugins when containers are created and deleted, each container's network configuration stays synchronized with its lifecycle.
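On the plugin side, this contract becomes a dispatch on the operation type. Below is a minimal Python sketch of a hypothetical plugin executable. The handler bodies are placeholders; the result and error fields (`cniVersion`, `code`, `msg`, `supportedVersions`) and the meaning of error code 4 (invalid required environment variables) follow the CNI specification. `main()` is what would run if the file were installed as a plugin binary.

```python
import json
import os
import sys

# Hypothetical plugin skeleton; handler bodies are placeholders.


def cmd_add(conf):
    # A real interface plugin would create interfaces inside CNI_NETNS here
    # and report them; this placeholder reports none.
    return {"cniVersion": conf.get("cniVersion", ""), "interfaces": [], "ips": []}


def cmd_del(conf):
    # DEL releases resources and prints nothing on success.
    return None


def cmd_check(conf):
    # CHECK prints nothing and exits 0 when the setup still matches.
    return None


def cmd_version(conf):
    # VERSION reports which spec versions this plugin understands.
    return {"cniVersion": conf.get("cniVersion", "1.0.0"),
            "supportedVersions": ["0.4.0", "1.0.0"]}


HANDLERS = {"ADD": cmd_add, "DEL": cmd_del, "CHECK": cmd_check,
            "VERSION": cmd_version}


def run(command, stdin_text):
    """Dispatch on the operation type; return (exit_code, stdout_text)."""
    conf = json.loads(stdin_text)
    handler = HANDLERS.get(command)
    if handler is None:
        # Spec-style error object; code 4 = invalid required environment variables.
        err = {"cniVersion": conf.get("cniVersion", ""), "code": 4,
               "msg": f"unknown CNI_COMMAND {command!r}"}
        return 1, json.dumps(err)
    result = handler(conf)
    return 0, "" if result is None else json.dumps(result)


def main():
    """Entry point when the script is installed as a plugin binary."""
    code, out = run(os.environ.get("CNI_COMMAND", ""), sys.stdin.read())
    sys.stdout.write(out)
    sys.exit(code)
```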

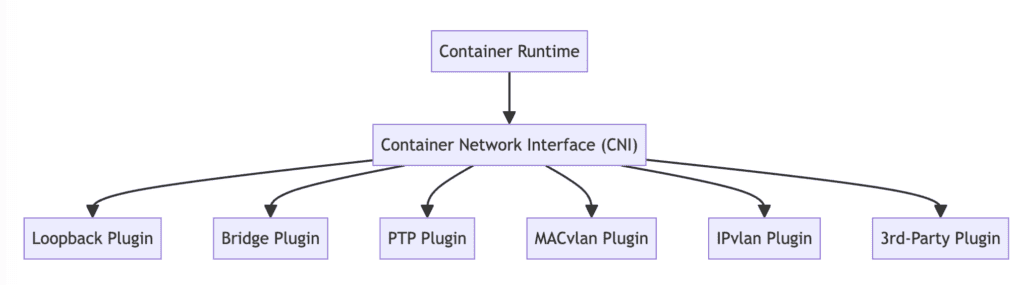

The following diagram illustrates the range of network plugins in the CNI ecosystem.

According to the CNI specification, a CNI plugin is responsible for configuring a container’s network interface in some way. Plugins can be classified into two major categories:

- “Interface” plugins, responsible for creating network interfaces inside containers and ensuring their connectivity.

- “Chained” plugins, which adjust the configuration of interfaces that already exist (though they may need to create additional interfaces to do so).
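The two categories differ most visibly in what each plugin returns. When plugins run as a list, the runtime passes each chained plugin the previous plugin's output as `prevResult`, and a plugin that does not modify the result is expected to return `prevResult` unchanged. The Python functions below are hypothetical stand-ins for plugin binaries; the addresses and the sysctl-style side effect are illustrative.

```python
# Hypothetical stand-ins for real plugin binaries.


def interface_plugin(conf):
    """Creates an interface and reports it (compare: bridge)."""
    return {
        "cniVersion": conf["cniVersion"],
        "interfaces": [{"name": "eth0", "sandbox": "/var/run/netns/example"}],
        "ips": [{"address": "10.22.0.5/16", "interface": 0}],
    }


def chained_plugin(conf, applied):
    """Adjusts the interface an earlier plugin created (compare: tuning)."""
    prev = conf["prevResult"]
    ifname = prev["interfaces"][0]["name"]
    # Placeholder side effect: a real plugin would change state on the host
    # or in the container's namespace here (for example, a sysctl).
    applied.append((ifname, f"net.ipv4.conf.{ifname}.rp_filter", "1"))
    # The result itself did not change, so prevResult is handed back as-is.
    return prev


base = {"cniVersion": "1.0.0", "name": "mynet"}
first = interface_plugin(dict(base, type="bridge"))
applied = []
final = chained_plugin(dict(base, type="tuning", prevResult=first), applied)
```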

## Relationship Between CNI and CRI

CNI and CRI (Container Runtime Interface) are two critical interfaces in Kubernetes, responsible for container network configuration and container runtime management, respectively. In Kubernetes clusters, the container runtime that implements the CRI (such as containerd or CRI-O) invokes CNI plugins to configure or clean up container networks, tightly coordinating network configuration with container creation and destruction.

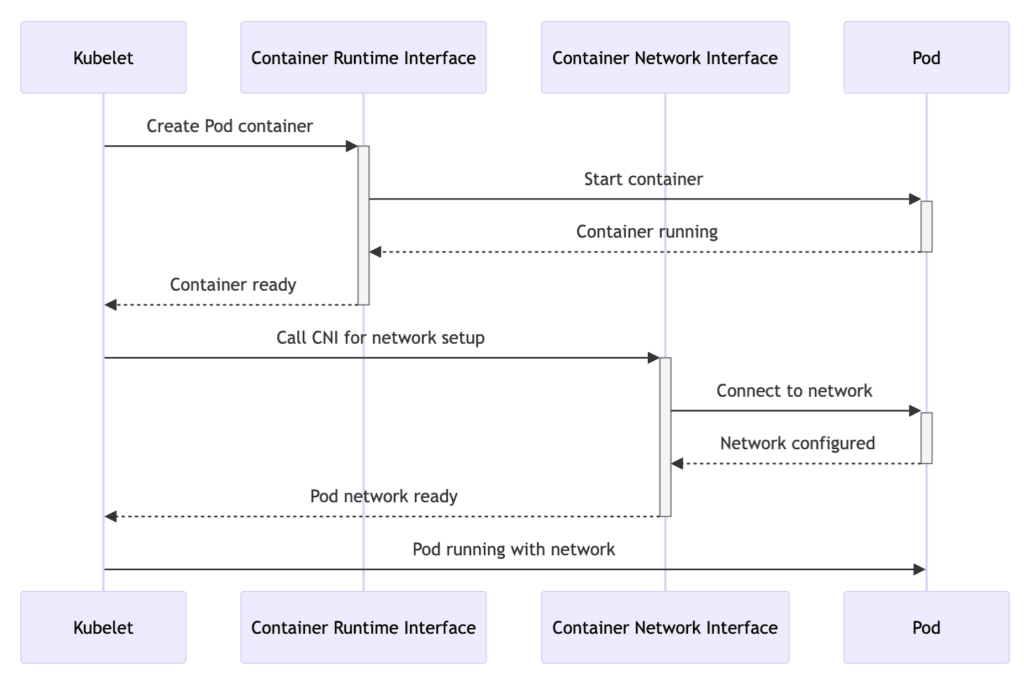

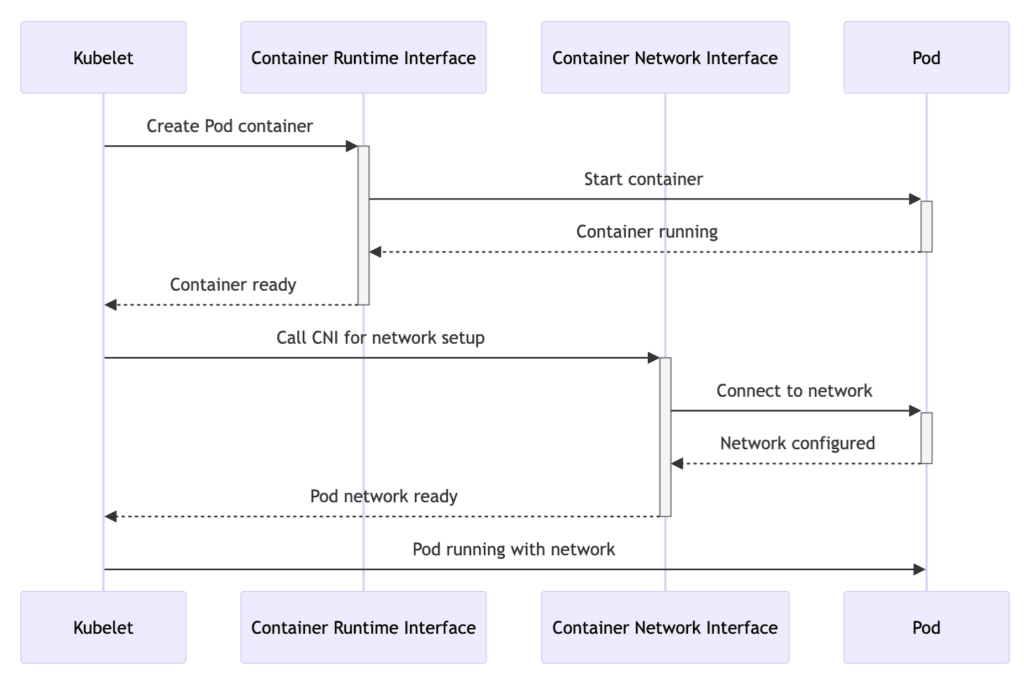

The following diagram illustrates how CNI collaborates with CRI:

- Kubelet to CRI: The Kubelet instructs the CRI to create the containers for a scheduled pod.

- CRI to Pod: The container runtime starts the container within the pod.

- Pod to CRI: Once the container is running, it signals back to the container runtime.

- CRI to Kubelet: The container runtime notifies the Kubelet that the containers are ready.

- Kubelet to CNI: With the containers up, the Kubelet calls the CNI to set up the network for the pod.

- CNI to Pod: The CNI configures the network for the pod, attaching it to the necessary network interface.

- Pod to CNI: After the network is configured, the pod confirms network setup to the CNI.

- CNI to Kubelet: The CNI informs the Kubelet that the pod’s network is ready.

- Kubelet to Pod: The pod is now fully operational, with both containers running and network configured.

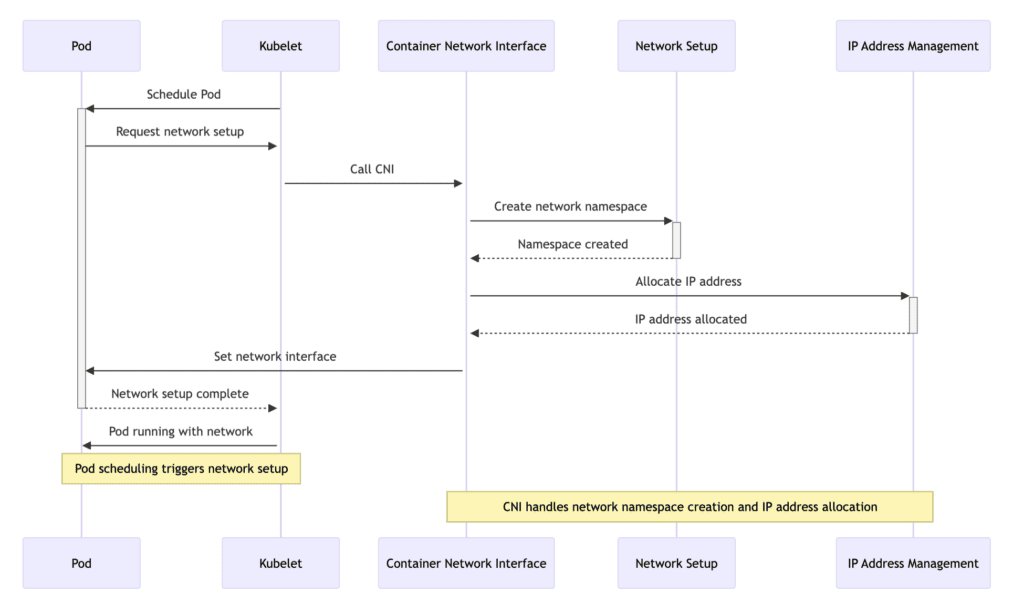

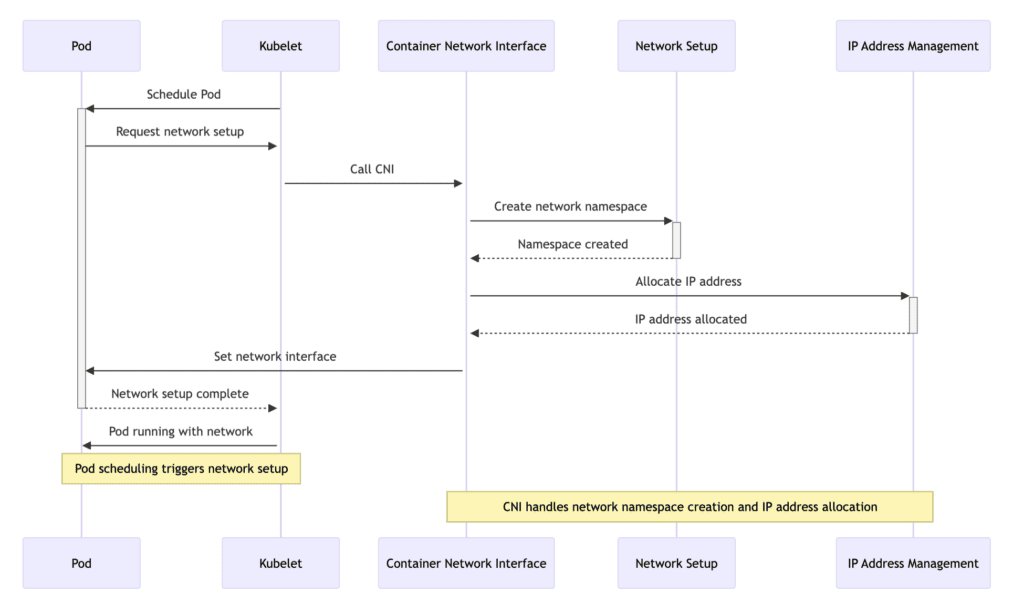

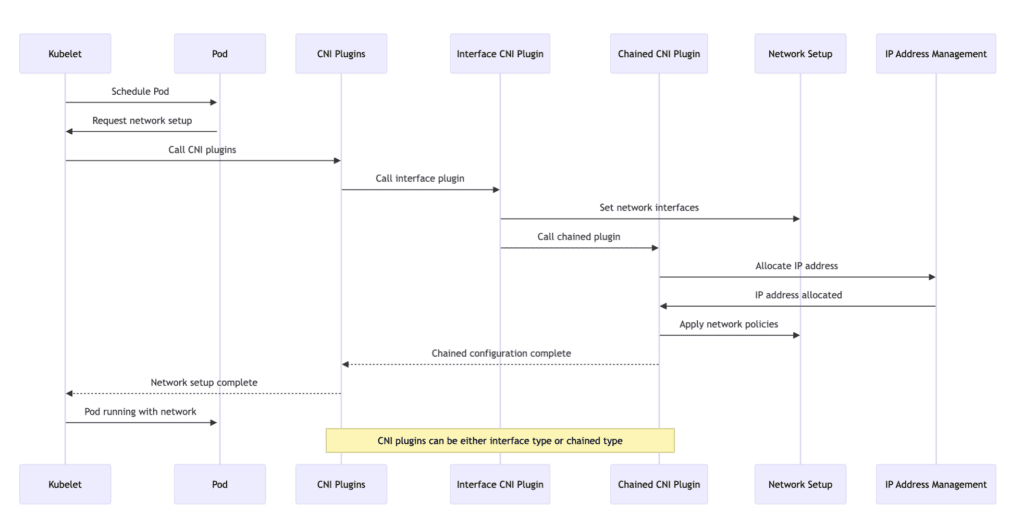

The following diagram shows the detailed steps involved in setting up networking for a pod in Kubernetes:

- Pod scheduling: The Kubernetes scheduler assigns a pod to a node, and the Kubelet on that node starts running it.

- Request network setup: The scheduled pod requests network setup from the Kubelet.

- Invoke CNI: The Kubelet invokes the CNI to handle the network setup for the pod.

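- Create network namespace: A network namespace is created for the pod, isolating its network environment. Per the CNI specification, the container runtime creates this namespace before invoking any plugin and passes its path to the plugins.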

- Allocate IP address: The CNI, through its IP Address Management (IPAM) plugin, allocates an IP address for the pod.

- Set up network interfaces: The CNI sets up the necessary network interfaces within the pod’s network namespace, connecting the pod to the network.

- Network setup complete: The pod notifies the Kubelet that its network setup is complete.

- Pod running with network: The pod is now running with its network configured and can communicate with other pods and services within the Kubernetes cluster.
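The IP allocation step is where the IPAM plugin's bookkeeping happens: it must give each pod an address that no other attachment in its range is using, and reclaim that address on delete. The reference `host-local` plugin persists its allocations on the node's disk; the Python sketch below keeps them in memory instead and simply picks the lowest free address, which is not how `host-local` chooses. `RangeAllocator` is a hypothetical class, not part of any CNI library.

```python
import ipaddress


class RangeAllocator:
    """Hypothetical in-memory IPAM allocator for a single subnet."""

    def __init__(self, subnet, gateway=None):
        self.net = ipaddress.ip_network(subnet)
        # Default: reserve the first usable host address for the gateway.
        self.gateway = (ipaddress.ip_address(gateway) if gateway
                        else next(iter(self.net.hosts())))
        self.allocated = {}  # (container_id, ifname) -> address

    def allocate(self, container_id, ifname):
        used = set(self.allocated.values())
        used.add(self.gateway)
        for addr in self.net.hosts():
            if addr not in used:
                self.allocated[(container_id, ifname)] = addr
                # Same shape as an entry in a CNI result's "ips" list.
                return {"address": f"{addr}/{self.net.prefixlen}",
                        "gateway": str(self.gateway)}
        raise RuntimeError(f"no free addresses left in {self.net}")

    def release(self, container_id, ifname):
        # Tolerate a repeated release so that DEL can be retried safely.
        self.allocated.pop((container_id, ifname), None)


pool = RangeAllocator("10.22.0.0/24")
first = pool.allocate("pod-a", "eth0")   # 10.22.0.2/24 (10.22.0.1 is the gateway)
second = pool.allocate("pod-b", "eth0")
pool.release("pod-a", "eth0")
reused = pool.allocate("pod-c", "eth0")
```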

## CNI Workflow

The Container Network Interface (CNI) specification defines how container runtimes configure networking for containers through a set of operations, including ADD, CHECK, DEL (delete), GC (garbage collection), and VERSION. Container runtimes execute these operations by calling the appropriate CNI plugins, enabling dynamic management and updates of container networks.
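Of these operations, GC is the least familiar. Instead of acting on one container, the runtime sends the plugin the set of attachments that are still in use (the specification carries them as `containerID`/`ifname` pairs under the `cni.dev/valid-attachments` key; confirm the field names against the spec version you target), and the plugin releases anything it holds that is not on that list. The function below is a hypothetical sketch of that set difference, not code from a real plugin.

```python
def stale_attachments(allocated, gc_conf):
    """Return the allocations a plugin should release during GC.

    allocated: hypothetical plugin-side record,
               (container_id, ifname) -> resource such as an IP.
    gc_conf:   the configuration the runtime sends on STDIN for GC.
    """
    valid = {(a["containerID"], a["ifname"])
             for a in gc_conf.get("cni.dev/valid-attachments", [])}
    return {key: res for key, res in allocated.items() if key not in valid}


allocated = {("pod-a", "eth0"): "10.22.0.2", ("pod-b", "eth0"): "10.22.0.3"}
gc_conf = {
    "cniVersion": "1.1.0",
    "name": "mynet",
    "type": "host-local",
    # Attachments the runtime still considers live; everything else is stale.
    "cni.dev/valid-attachments": [{"containerID": "pod-a", "ifname": "eth0"}],
}
to_release = stale_attachments(allocated, gc_conf)
```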

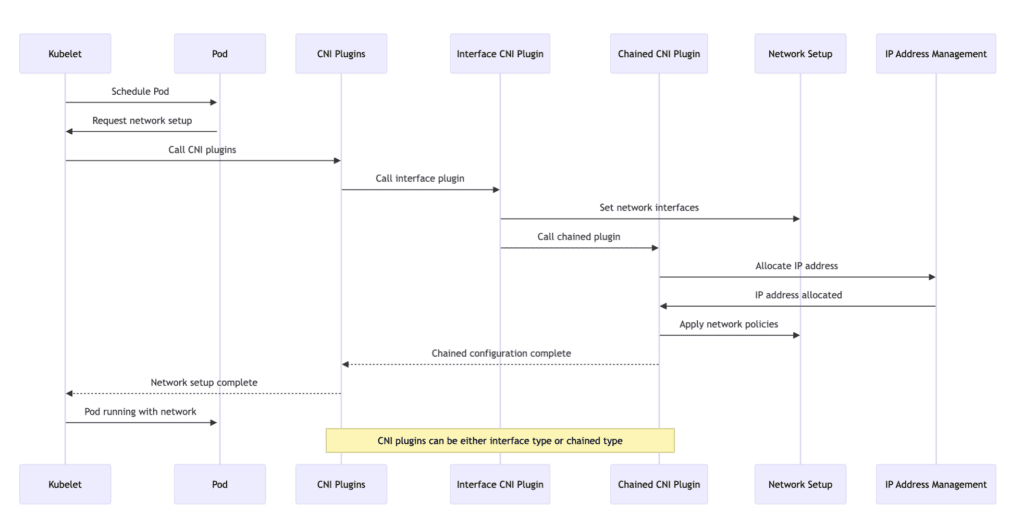

The following walks through each step of the sequence diagram, covering the interactions between Kubelet, the Pod, CNI plugins (both interface and chained), network setup, and IP Address Management (IPAM):

- Schedule Pod: The Kubernetes scheduler assigns a Pod to a node, and that node’s Kubelet takes over. This step starts the Pod’s lifecycle on the node.

- Request Network Setup: The Pod requests network setup from Kubelet. This request triggers the configuration of the Pod’s network, so that the Pod can communicate within the Kubernetes cluster.

- Call CNI Plugins: Kubelet, through the container runtime, triggers the configured Container Network Interface (CNI) plugins. CNI defines a standardized way for container management systems to set up network interfaces within Linux containers. The necessary information, such as the container ID and the network namespace path, is passed to the CNI plugins to initiate network setup.

- Call Interface Plugin: The CNI framework calls an interface CNI plugin responsible for setting up the Pod’s primary network interfaces. The plugin works inside the network namespace provided by the container runtime; for example, it may create a veth pair, move one end into the Pod’s namespace, and attach the other end to a bridge on the host.

- Set Network Interfaces: The interface CNI plugin configures network interfaces for the Pod. This setup includes assigning IP addresses, setting up routes, and ensuring interfaces are ready for communication.

- Call Chained Plugin: After setting up network interfaces, the interface CNI plugin or the CNI framework calls chained CNI plugins. These plugins perform additional network configuration tasks, such as setting up IP masquerading, configuring ingress/egress rules, or applying network policies.

- Allocate IP Address: An IP Address Management (IPAM) plugin assigns an IP address to the Pod, ensuring each Pod has a unique IP within the configured address range. In the reference plugins, IPAM is usually delegated by the interface plugin rather than run as a separate link in the chain, as the Bridge and Host-local example in the ADD operation below shows.

- IP Address Allocated: The IPAM plugin allocates an IP address and returns the allocation information to the calling plugin. This information typically includes the IP address with its prefix length (subnet) and, optionally, a gateway.

- Apply Network Policies: Chained CNI plugins apply any specified network policies to the Pod’s network interfaces. These policies may dictate allowed ingress and egress traffic, ensuring network security and isolation per cluster configuration requirements.

- Chained Configuration Complete: Once all chained plugins have completed their tasks, the overall network configuration for the Pod is considered complete. The CNI framework or the last plugin in the chain signals to Kubelet that network setup is complete.

- Network Setup Complete: Kubelet receives confirmation of network setup completion from the Pod. At this point, the Pod has fully configured network interfaces with IP addresses, route rules, and applied network policies.

- Pod Running with Network: The Pod is now running and has its network configured. It can communicate with other Pods within the Kubernetes cluster, access external resources per network policies, and perform its designated functions.
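The information returned in the "IP Address Allocated" step travels in the CNI result format, where the subnet mask is expressed as a CIDR prefix length on each address. The dictionary below uses the result fields defined by the specification (`interfaces`, `ips`, `routes`, `dns`) with made-up values, and the hypothetical `describe_ip` helper shows how the prefix maps back to a conventional netmask.

```python
import ipaddress

# Illustrative ADD result using the spec's top-level fields; values are made up.
EXAMPLE_RESULT = {
    "cniVersion": "1.0.0",
    "interfaces": [{"name": "eth0", "mac": "0a:58:0a:16:00:05",
                    "sandbox": "/var/run/netns/example"}],
    # "interface" is an index into the "interfaces" list above.
    "ips": [{"address": "10.22.0.5/16", "gateway": "10.22.0.1", "interface": 0}],
    "routes": [{"dst": "0.0.0.0/0"}],
    "dns": {},
}


def describe_ip(entry):
    """Expand a CIDR-style "ips" entry into address, netmask, and gateway."""
    iface = ipaddress.ip_interface(entry["address"])
    return {
        "ip": str(iface.ip),
        "netmask": str(iface.netmask),
        "network": str(iface.network),
        "gateway": entry.get("gateway"),
    }


summary = describe_ip(EXAMPLE_RESULT["ips"][0])
```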

The following are example sequence diagrams and detailed explanations for the ADD, CHECK, and DELETE operations, based on the examples in the CNI specification. They show how the container runtime and CNI plugins interact to manage and update container network configurations.

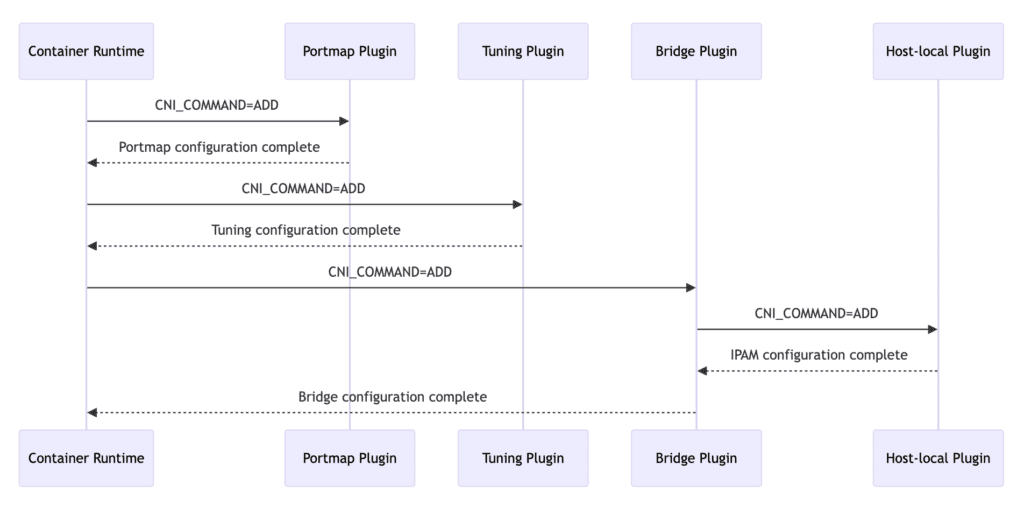

### ADD Operation Example

Below is the example sequence diagram and detailed explanation for the ADD operation. The container runtime calls the plugins in the order they appear in the network configuration list (Bridge, then Tuning, then Portmap), passing each plugin the result of the previous one:

- Container Runtime Calls Bridge Plugin: The container runtime calls the Bridge plugin to execute the ADD operation and configure network interfaces and IP addresses for the container.

- Bridge Plugin Calls Host-local Plugin: Before completing its own configuration, the Bridge plugin delegates to the Host-local plugin, executing the ADD operation to allocate an IP address for the container.

- IPAM Configuration Complete: The Host-local plugin, acting as the IP Address Management (IPAM) plugin, completes IP address allocation and returns the result to the Bridge plugin.

- Bridge Configuration Complete: The Bridge plugin finishes configuring network interfaces and IP addresses and returns the result to the container runtime.

- Container Runtime Calls Tuning Plugin: The container runtime invokes the Tuning plugin to execute the ADD operation and configure network tuning parameters (such as sysctls) for the container.

- Tuning Configuration Complete: The Tuning plugin finishes configuring network tuning parameters and returns the result to the container runtime.

- Container Runtime Calls Portmap Plugin: The container runtime calls the Portmap plugin to execute the ADD operation and configure port mapping for the container.

- Portmap Configuration Complete: The Portmap plugin completes the port mapping configuration and returns the result to the container runtime.

These operations ensure that the required network configuration is set up as expected when the container starts, including port mapping, network tuning, and IP address allocation.
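This flow follows a general rule in the CNI specification: for ADD, the runtime calls the plugins in the order they appear in the configuration list, adds the list's `name` and `cniVersion` to each plugin's input, and gives every plugin after the first the previous result as `prevResult`. The Python sketch below mimics that loop with functions standing in for the Bridge, Host-local, Tuning, and Portmap binaries; the configuration values, the fixed address, and all function names are illustrative assumptions.

```python
# Illustrative configuration list: one interface plugin with IPAM, then two chained plugins.
CONF_LIST = {
    "cniVersion": "1.0.0",
    "name": "dbnet",
    "plugins": [
        {"type": "bridge", "bridge": "cni0",
         "ipam": {"type": "host-local", "subnet": "10.1.0.0/16", "gateway": "10.1.0.1"}},
        {"type": "tuning", "sysctl": {"net.core.somaxconn": "500"}},
        {"type": "portmap", "capabilities": {"portMappings": True}},
    ],
}


def host_local(conf):
    # Stand-in IPAM plugin: returns a fixed address from the configured subnet.
    return {"ips": [{"address": "10.1.0.5/16", "gateway": conf["ipam"]["gateway"]}]}


def bridge(conf):
    # Interface plugin: delegates IP allocation to its IPAM plugin with the
    # same configuration, then reports the interface it created.
    ipam = host_local(conf)
    return {
        "cniVersion": conf["cniVersion"],
        "interfaces": [{"name": "eth0"}],
        "ips": [dict(ip, interface=0) for ip in ipam["ips"]],
    }


def passthrough(conf):
    # In this sketch the chained plugins only change host state, so they
    # return prevResult unchanged.
    return conf["prevResult"]


PLUGINS = {"bridge": bridge, "tuning": passthrough, "portmap": passthrough}


def run_add(conf_list, call_log):
    """Call ADD on each plugin in list order, threading prevResult through."""
    prev = None
    for plugin_conf in conf_list["plugins"]:
        # Each plugin's input inherits the list's name and cniVersion...
        conf = dict(plugin_conf, name=conf_list["name"],
                    cniVersion=conf_list["cniVersion"])
        if prev is not None:
            # ...and, after the first plugin, the previous plugin's result.
            conf["prevResult"] = prev
        call_log.append(conf["type"])
        prev = PLUGINS[conf["type"]](conf)
    return prev  # the runtime keeps this final result for later CHECK and DEL


calls = []
result = run_add(CONF_LIST, calls)
```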

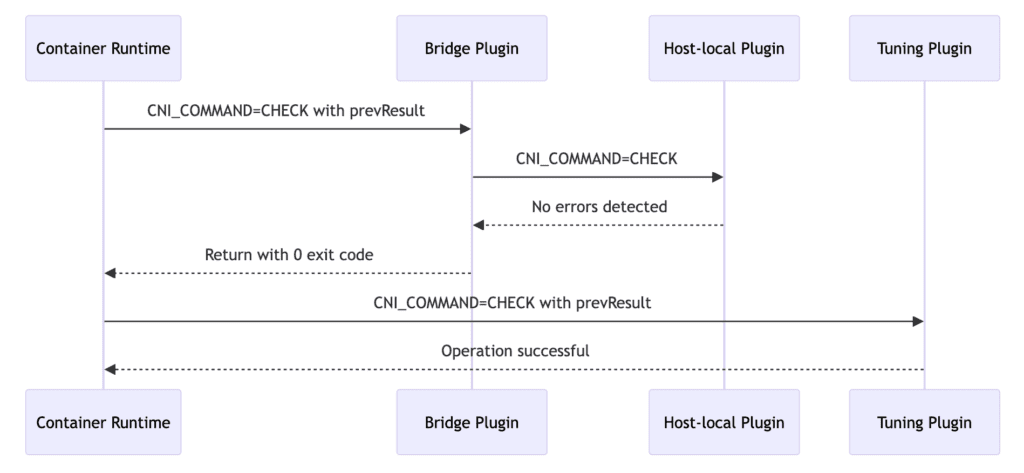

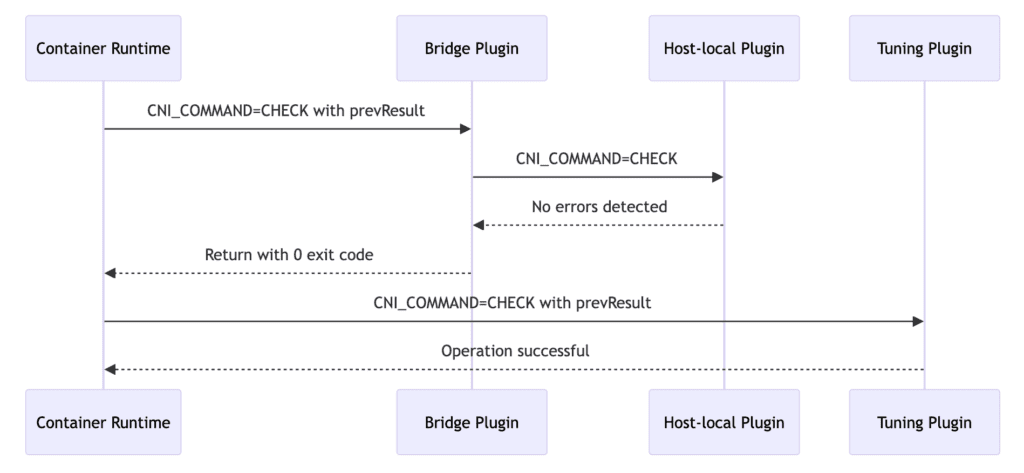

### CHECK Operation Example

Below is the example sequence diagram and detailed explanation for the CHECK operation:

- Container Runtime Calls Bridge Plugin for Check: The container runtime performs the CHECK operation by calling the Bridge plugin to inspect the container’s network configuration.

- Bridge Plugin Calls Host-local Plugin for Check: The Bridge plugin calls the Host-local plugin to inspect IP address allocation.

- No Errors Detected: The Host-local plugin detects no errors in IP address allocation and reports no errors to the Bridge plugin.

- Return with 0 Exit Code: The Bridge plugin confirms no network configuration errors and returns with a 0 exit code to the container runtime.

- Container Runtime Calls Tuning Plugin for Check: The container runtime invokes the Tuning plugin to inspect network tuning parameters.

- Operation Successful: The Tuning plugin confirms no errors in network tuning parameters, indicating a successful operation to the container runtime.

These operations verify, while the container is running, that its network configuration and tuning parameters still match what was set up, keeping the network configuration consistent and correct.
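On the runtime side, CHECK is a loop over the same configuration list in list order, where every plugin receives the result cached from ADD as `prevResult` and a non-zero exit code signals a problem. The minimal Python sketch below stops at the first failure; the check functions are hypothetical stand-ins for real plugin logic.

```python
def run_check(plugin_types, checks, cached_result):
    """Run CHECK in configuration-list order and stop at the first failure.

    plugin_types:  plugin names in list order.
    checks:        hypothetical mapping name -> function(prev_result) -> exit code.
    cached_result: the final ADD result, given to every plugin as prevResult.
    Returns (exit_code, failing_plugin_or_None).
    """
    for name in plugin_types:
        code = checks[name](cached_result)
        if code != 0:
            return code, name
    return 0, None


checks = {
    # Stand-ins: bridge "verifies" an address is still recorded; tuning always passes.
    "bridge": lambda prev: 0 if prev.get("ips") else 1,
    "tuning": lambda prev: 0,
}
healthy = run_check(["bridge", "tuning"], checks, {"ips": [{"address": "10.1.0.5/16"}]})
broken = run_check(["bridge", "tuning"], checks, {"ips": []})
```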

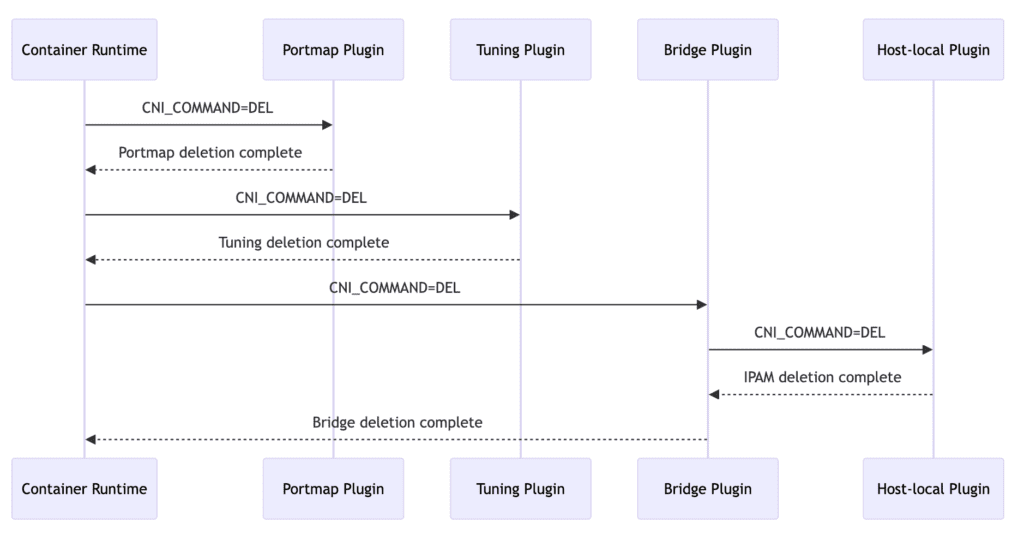

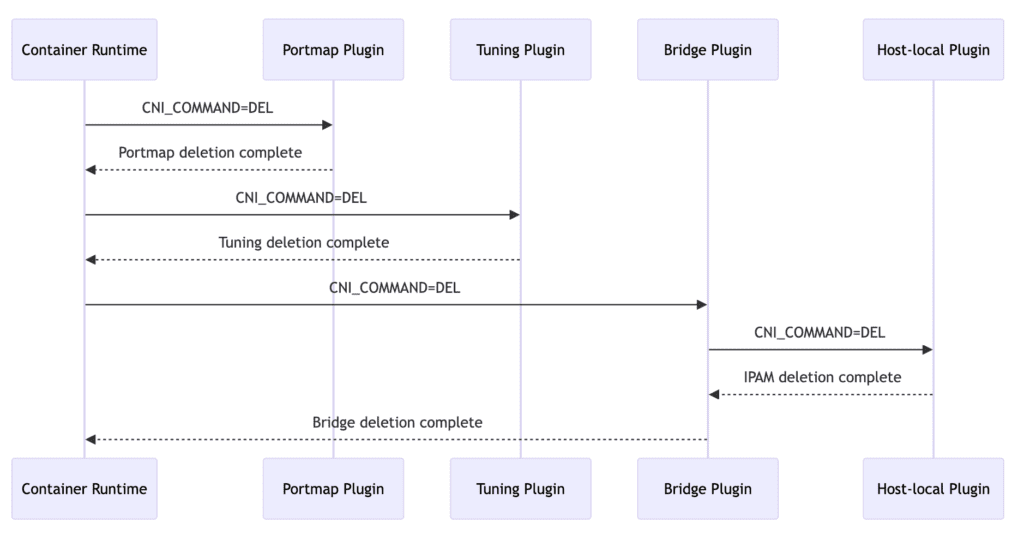

### DELETE Operation Example

Below is the example sequence diagram and detailed explanation for the DELETE operation:

- Container Runtime Calls Portmap Plugin for Delete: The container runtime initiates the DELETE operation by calling the Portmap plugin to remove port mapping configuration for the container.

- Portmap Deletion Complete: The Portmap plugin finishes deleting port mapping and reports completion to the container runtime.

- Container Runtime Calls Tuning Plugin for Delete: The container runtime calls the Tuning plugin to execute the DELETE operation and remove network tuning parameters for the container.

- Tuning Deletion Complete: The Tuning plugin completes deletion of network tuning parameters and notifies the container runtime.

- Container Runtime Calls Bridge Plugin for Delete: The container runtime invokes the Bridge plugin to execute the DELETE operation and remove network interfaces and IP address configuration for the container.

- Bridge Plugin Calls Host-local Plugin for Delete: Before completing its own deletion, the Bridge plugin calls the Host-local plugin to execute the DELETE operation and remove IP address allocation for the container.

- IPAM Deletion Complete: The Host-local plugin completes IP address deletion and informs the Bridge plugin.

- Bridge Deletion Complete: The Bridge plugin finishes removing network interfaces and IP addresses and notifies the container runtime.

These operations ensure that when the container is removed, its network configuration is properly cleaned up, so that network resources such as IP addresses are released.
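The reverse order is deliberate: the specification has the runtime run DEL over the configuration list from the last plugin to the first, so that later plugins such as Portmap, which build on the interface and address created earlier, are unwound before the Bridge plugin removes them. Plugins are also expected to complete DEL without error when some resources are already missing, which makes retries safe. A minimal Python sketch with hypothetical in-memory state:

```python
# Hypothetical per-plugin state created during ADD.
state = {
    "bridge": {"eth0", "10.1.0.5/16"},
    "tuning": {"net.core.somaxconn"},
    "portmap": {"8080->80"},
}


def delete(name):
    # Best effort: clearing an already-empty set is harmless, mirroring the
    # guidance that DEL should succeed even if resources are already gone.
    state[name].clear()


def run_del(plugin_types):
    """Call DEL on each plugin in reverse configuration-list order."""
    order = list(reversed(plugin_types))
    for name in order:
        delete(name)
    return order


first_pass = run_del(["bridge", "tuning", "portmap"])
second_pass = run_del(["bridge", "tuning", "portmap"])  # a retried DEL also succeeds
```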

Together, the ADD, CHECK, and DELETE examples show how interactions between the container runtime and CNI plugins create, verify, and clean up container network configurations over the container lifecycle.

## Conclusion

The Container Network Interface (CNI) specification standardizes container network configuration in Kubernetes clusters, allowing container runtimes to interface with various network plugins seamlessly. Understanding CNI’s core components and its collaboration with the Container Runtime Interface (CRI) is essential for effective network management in containerized environments.

## References

- CNI Specification