Seamless Cross-Cluster Connectivity for Multicluster Istio Service Mesh Deployments


Introduction

As enterprise information systems increasingly adopt microservices architectures running on platforms such as Kubernetes, achieving efficient and secure cross-cluster access to services in a multicluster environment has become a crucial challenge. Istio, a popular service mesh solution, offers a wealth of features that support seamless connections between services in different clusters.

There are several challenges when deploying and using a multicluster service mesh:

- Cross-cluster service registration, discovery, and routing

- Identity recognition and authentication between clusters

This article explores how to achieve seamless cross-cluster access in a multicluster Istio deployment by setting up SPIRE federation and exposing services through east-west gateways. Through a series of configuration and deployment examples, it aims to give readers a clear guide to understanding and addressing common issues and challenges in multicluster service mesh deployments.

This article focuses on the hybrid deployment model of multi-cloud + multi-mesh + multi-control plane + multi-trust domain. This is a relatively complex scenario. If you can successfully deploy this model, then other scenarios should also be manageable.

FQDN in Multicluster Istio Service Mesh

For services across different meshes to access each other, they must be aware of each other's fully qualified domain name (FQDN). FQDNs typically consist of the service name, the namespace, and the cluster domain suffix (e.g., svc.cluster.local). In Istio's multi-cloud or multi-mesh setup, mechanisms such as ServiceEntry, VirtualService, and Gateway configurations are used to control and manage service routing and access, rather than altering the FQDN.

The FQDN in a multi-cloud service mesh remains the same as in a single cluster, usually following the format:

<service-name>.<namespace>.svc.cluster.local

You might think about using meshID to distinguish meshes. The meshID is mainly used to differentiate and manage multiple Istio meshes within the same environment or across environments, and it is not used to directly construct the service FQDN.
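The following is a minimal sketch of the naming rule above, written as a POSIX shell function. The helper `svc_fqdn` and its optional third argument (the cluster domain, defaulting to `cluster.local`) are hypothetical conveniences and are not part of Istio. Neither the meshID nor the cluster name appears in the result, which is why the same name works from every cluster.

```shell
#!/bin/sh
# svc_fqdn: hypothetical helper that assembles a Kubernetes service FQDN.
# Usage: svc_fqdn <service-name> <namespace> [cluster-domain]
svc_fqdn() (
  name=$1
  namespace=$2
  domain=${3:-cluster.local}   # Kubernetes default cluster domain
  # No meshID or cluster name is part of the name, so it is identical in every cluster.
  printf '%s.%s.svc.%s\n' "$name" "$namespace" "$domain"
)

svc_fqdn helloworld helloworld   # prints helloworld.helloworld.svc.cluster.local
```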

Main roles of meshID

- Mesh-level telemetry data aggregation: Differentiates data from different meshes, allowing for monitoring and analysis on a unified platform.

- Mesh federation: Establishes federation among meshes, allowing for sharing some configurations and services.

- Cross-mesh policy implementation: Identifies and applies mesh-specific policies, such as security policies and access control.

Cross-Cluster Service Registration, Discovery, and Routing

In the Istio multi-mesh environment, the east-west gateway plays a key role. It not only handles ingress and egress traffic between meshes but also supports service discovery and connectivity. When one cluster needs to access a service in another cluster, it routes to the target service through this gateway.

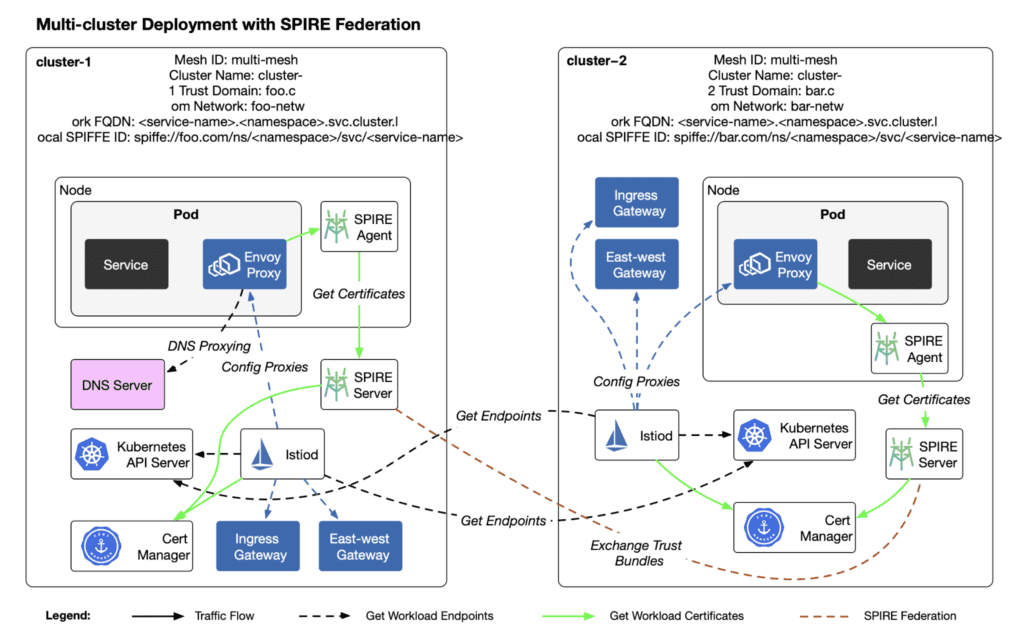

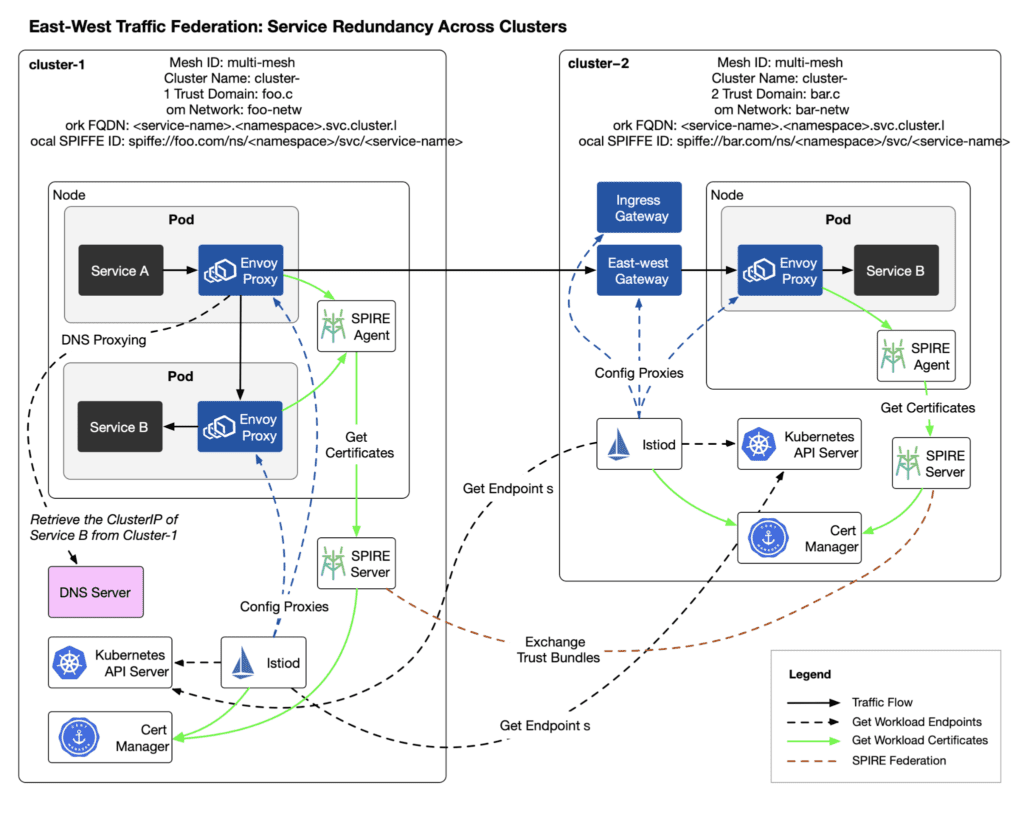

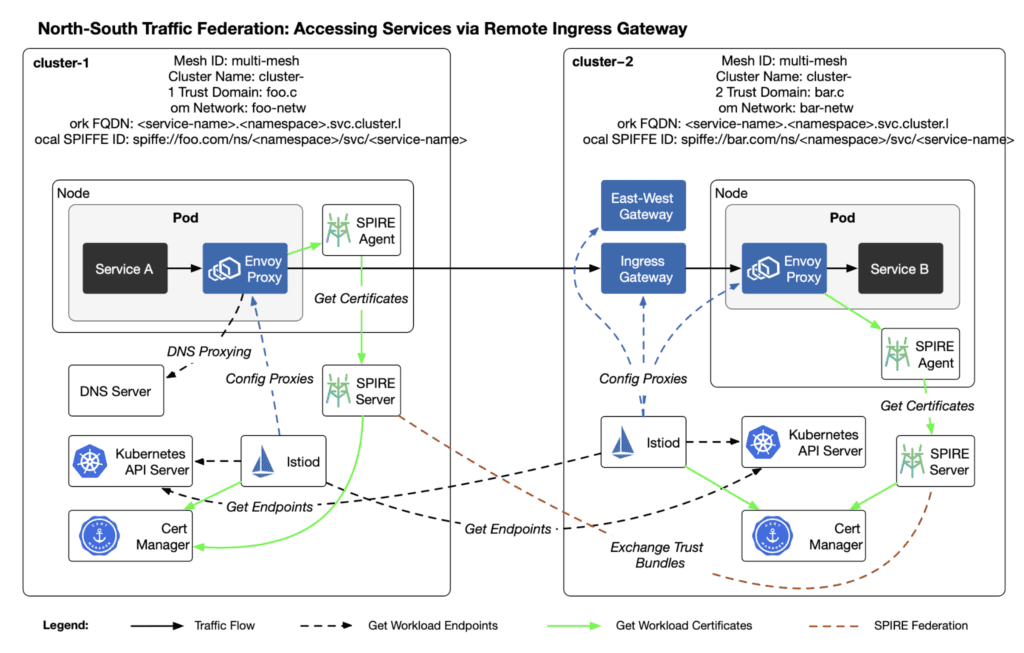

The diagram below shows the process of service registration, discovery, and routing across clusters.

In the configuration of Istio multi-mesh, the processes of service registration, discovery, and routing are crucial as they ensure that services in different clusters can discover and communicate with each other. Here are the basic steps in service registration, discovery, and routing in the Istio multi-mesh environment:

1. Service Registration

In each Kubernetes cluster, when a service is deployed, its details are registered with the Kubernetes API server. This includes the service name, labels, selectors, ports, etc.

2. Sync to Istiod

Istiod, serving as the control plane, watches the Kubernetes API server for changes. Whenever a new service is registered or an existing service is updated, Istiod detects the change, extracts the necessary service information, and builds its internal model of services and endpoints.

3. Cross-Cluster Service Discovery

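To enable a service in one cluster to discover and communicate with a service in another cluster, Istiod needs to synchronize service endpoint information across all relevant clusters. In the multi-primary setup used in this article, each Istiod also watches the API server of the other cluster through a remote secret. Name resolution and routing to remote services are then usually handled in one of two ways: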

- DNS Resolution: Istio can be configured to use CoreDNS or a similar service to return cross-cluster service endpoints in DNS queries. When a service tries to resolve another cluster’s service, the DNS query returns the IP addresses of the accessible remote service. In this article, we enable Istio’s DNS proxy to achieve cross-cluster service discovery. If a service exists both locally and remotely, a local DNS query returns only the local service’s ClusterIP. If the service exists only in a remote cluster, the DNS query returns the IP address of the East-West Gateway’s load balancer in the remote cluster, which can also be used for cross-cluster failover.

- Service Entry Synchronization: By setting specific ServiceEntry configurations, an Envoy proxy in one cluster knows how to find and route to a service in another cluster through the East-West Gateway.
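The DNS proxy behavior described in the first bullet can be modelled as a two-step lookup. If the service exists locally, answer with the local ClusterIP; otherwise, answer with the remote East-West Gateway address. The sketch below illustrates this under stated assumptions and is not Istio's DNS proxy code. The function names, the lookup tables, the hypothetical `reviews` service, and the address `10.80.0.10` are invented for the example. The address `34.136.67.85` is the East-West Gateway address shown later in this article.

```shell
#!/bin/sh
# Hypothetical lookup tables.
LOCAL_SVCS='helloworld.helloworld.svc.cluster.local 10.80.0.10'   # "fqdn clusterIP" pairs
REMOTE_SVCS='helloworld.helloworld.svc.cluster.local
reviews.default.svc.cluster.local'                                 # names known in the remote cluster
REMOTE_EW_GATEWAY='34.136.67.85'                                   # remote East-West Gateway LB address

# local_cluster_ip: print the local ClusterIP for an FQDN, if there is one.
local_cluster_ip() {
  printf '%s\n' "$LOCAL_SVCS" | awk -v name="$1" '$1 == name { print $2; exit }'
}

# resolve_svc: model of the DNS proxy answer described above.
resolve_svc() (
  ip=$(local_cluster_ip "$1")
  if [ -n "$ip" ]; then
    echo "$ip"                      # exists locally: answer with the local ClusterIP only
  elif printf '%s\n' "$REMOTE_SVCS" | grep -qxF "$1"; then
    echo "$REMOTE_EW_GATEWAY"       # remote only: answer with the remote East-West Gateway
  else
    return 1                        # unknown to the mesh: leave it to upstream DNS
  fi
)
```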

4. Routing and Load Balancing

When Service A needs to communicate with Service B, it first resolves Service B's name (with the DNS proxy answering as described above) and sends the request. Its Envoy proxy intercepts the request and chooses one of the endpoints that Istiod has pushed for Service B. For an instance in another cluster, that endpoint is the load balancer address of the East-West Gateway in that cluster, and the gateway then routes the request to the target service. Envoy chooses among endpoints according to the configured load balancing policy (e.g., round-robin or least requests).
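For intuition, the following toy model picks endpoints in round-robin order. It uses the two endpoints that cluster-1's Envoy holds for helloworld in this setup: a local pod and the remote East-West Gateway on port 15443. Both addresses come from the endpoint output later in this article. This is a sketch, not Envoy's implementation, and Istio's default load balancing policy is not necessarily round-robin. `pick_endpoint` is a hypothetical name.

```shell
#!/bin/sh
# Endpoints of outbound|5000||helloworld.helloworld.svc.cluster.local seen from cluster-1:
# a local pod and the remote East-West Gateway.
ENDPOINTS='10.76.3.22:5000 34.136.67.85:15443'

# pick_endpoint: round-robin choice for the n-th request (n starts at 0).
pick_endpoint() (
  n=$1
  set -- $ENDPOINTS          # unquoted on purpose: load the list into the positional parameters
  shift $(( n % $# ))        # advance to the slot for this request
  echo "$1"
)

for req in 0 1 2 3; do
  echo "request $req -> $(pick_endpoint "$req")"
done
```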

5. Traffic Management

Istio offers a rich set of traffic management features, such as request routing, fault injection, and traffic mirroring. These rules are defined in the Istio control plane and pushed to the various Envoy proxies for execution. This allows for flexible control and optimization of communication between services in a cross-cluster environment.

Identity Recognition and Authentication Between Clusters

When services running in different clusters need to communicate with each other, correct identity authentication and authorization are key to ensuring service security. Using SPIFFE helps to identify and verify the identities of services, but in a multi-cloud environment, these identities need to be unique and verifiable.

To this end, we will set up SPIRE federation to assign identities to services across multiple clusters and achieve cross-cluster identity authentication:

- Using SPIFFE to identify services: Under the SPIFFE framework, each workload is assigned a unique identifier. Istio uses the format spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>. In a multi-cloud environment, giving each cluster its own trust domain keeps identities unique. For example, spiffe://foo.com/ns/default/sa/service1 and spiffe://bar.com/ns/default/sa/service1 distinguish workloads with the same name in different clusters.

- Using SPIRE federation to manage certificates across clusters: This enhances the security of the multi-cloud service mesh. SPIRE (the SPIFFE Runtime Environment) offers a highly configurable platform for verifying service identities and issuing certificates. With SPIRE federation, cross-cluster authentication is achieved by exchanging the trust bundles of the SPIRE servers in each cluster.
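Here is a small sketch of the ID layout described above. `spiffe_id` builds an Istio-style SPIFFE ID, and `trust_domain_of` extracts the trust domain, which is the only part that differs between clusters in this design. Both helper names are hypothetical. The format follows Istio's `ns/<namespace>/sa/<service-account>` convention.

```shell
#!/bin/sh
# spiffe_id: build an Istio-style SPIFFE ID.
# Usage: spiffe_id <trust-domain> <namespace> <service-account>
spiffe_id() (
  printf 'spiffe://%s/ns/%s/sa/%s\n' "$1" "$2" "$3"
)

# trust_domain_of: print the trust domain of a SPIFFE ID; fail if it is malformed.
trust_domain_of() (
  case $1 in
    spiffe://*) ;;
    *) return 1 ;;                  # not a SPIFFE URI
  esac
  rest=${1#spiffe://}               # strip the scheme
  domain=${rest%%/*}                # keep everything before the first slash
  [ -n "$domain" ] || return 1      # empty trust domain is invalid
  echo "$domain"
)

spiffe_id foo.com default service1                          # spiffe://foo.com/ns/default/sa/service1
trust_domain_of spiffe://bar.com/ns/default/sa/service1     # bar.com
```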

Here are the steps for implementing the SPIRE federation.

1. Configuring Trust Domain

Each cluster is configured as a separate trust domain, so every workload within a cluster gets a unique SPIFFE ID based on its trust domain. For instance, a workload using the service1 service account in cluster 1 might have the ID spiffe://cluster1/ns/default/sa/service1, while the same workload in cluster 2 would be spiffe://cluster2/ns/default/sa/service1.

2. Establishing Trust Bundle

Configure trust relationships in SPIRE to allow nodes and workloads from different trust domains to mutually verify each other. This involves exchanging and accepting each other’s CA certificates or JWT keys between trust domains, ensuring the security of cross-cluster communication.
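Conceptually, a workload in a federated setup verifies a peer by choosing the trust bundle that matches the trust domain in the peer's SPIFFE ID. The sketch below models only that selection step for cluster-1, whose trust domain is foo.com and which is federated with bar.com. The bundle paths and function names are hypothetical. The actual certificate validation is done by Envoy with the bundles SPIRE distributes, not by a script.

```shell
#!/bin/sh
# bundle_for_domain: hypothetical bundle locations for the trust domains cluster-1 trusts.
bundle_for_domain() (
  case $1 in
    foo.com) echo /etc/spire/bundles/foo.com.pem ;;   # cluster-1's own trust domain
    bar.com) echo /etc/spire/bundles/bar.com.pem ;;   # federated trust domain
    *) return 1 ;;                                      # not federated: reject the peer
  esac
)

# bundle_for_peer: choose the bundle used to verify a peer's SPIFFE ID.
bundle_for_peer() (
  rest=${1#spiffe://}
  [ "$rest" != "$1" ] || return 1   # input was not a spiffe:// URI
  bundle_for_domain "${rest%%/*}"   # the trust domain selects the bundle
)

bundle_for_peer spiffe://bar.com/ns/default/sa/service1   # /etc/spire/bundles/bar.com.pem
```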

3. Configuring SPIRE Server and Agent

Deploy a SPIRE Server and SPIRE Agent in each cluster. The SPIRE Server is responsible for managing the issuance and renewal of certificates, while the SPIRE Agent handles the secure distribution of certificates and keys to services within the cluster.

Compatibility Issues with Workload Registration when Using SPIRE Federation in Istio

In this article, we use the traditional Kubernetes Workload Registrar, running alongside the SPIRE Server, to handle workload registration within the cluster. The Kubernetes Workload Registrar has been deprecated since SPIRE v1.5.4 and replaced by the SPIRE Controller Manager, which, in my testing, does not work well with Istio.

4. Using the Workload API

Services can request and update their identity certificates through SPIRE’s Workload API. This way, services can continuously verify their identities and securely communicate with other services, even when operating in different clusters. We will configure the proxies in the Istio mesh to share the Unix Domain Socket in the SPIRE Agent, thus accessing the Workload API to manage certificates.

5. Automating Certificate Rotation

We will use cert-manager as SPIRE’s UpstreamAuthority to configure the automatic rotation of service certificates and keys, enhancing the system’s security. With automated rotation, even if certificates are leaked, attackers can only use these certificates for a short period.
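A quick worked example shows why short-lived certificates limit the damage of a leak. The sketch assumes the certificate is renewed once half of its TTL has elapsed, which is a common SPIRE agent behavior but is stated here as an assumption. It uses a hypothetical issue time with a one-hour TTL, and `rotation_plan` is a hypothetical helper.

```shell
#!/bin/sh
# rotation_plan: print when a certificate is renewed and when it expires.
# Assumption: renewal at half of the TTL. Times are Unix epoch seconds.
rotation_plan() (
  issued_at=$1
  ttl=$2
  renew_at=$(( issued_at + ttl / 2 ))   # a fresh certificate is requested here
  expires_at=$(( issued_at + ttl ))     # a leaked copy stops working here
  echo "renew_at=$renew_at expires_at=$expires_at max_exposure=${ttl}s"
)

rotation_plan 1700000000 3600   # renew_at=1700001800 expires_at=1700003600 max_exposure=3600s
```

A leaked certificate is therefore usable for at most one TTL, regardless of how long the workload itself keeps running.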

These steps allow you to establish a cross-cluster, secure service identity verification framework, enabling services in different clusters to securely recognize and communicate with each other, effectively reducing security risks and simplifying certificate management. This configuration not only enhances security but also improves the system’s scalability and flexibility through distributed trust domains.

Multicluster Deployment

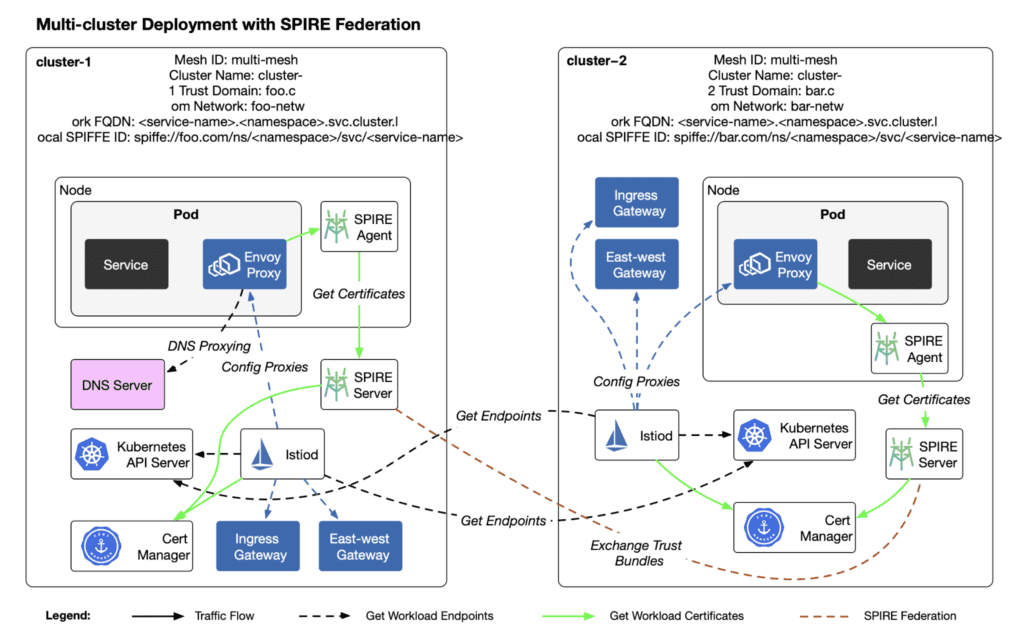

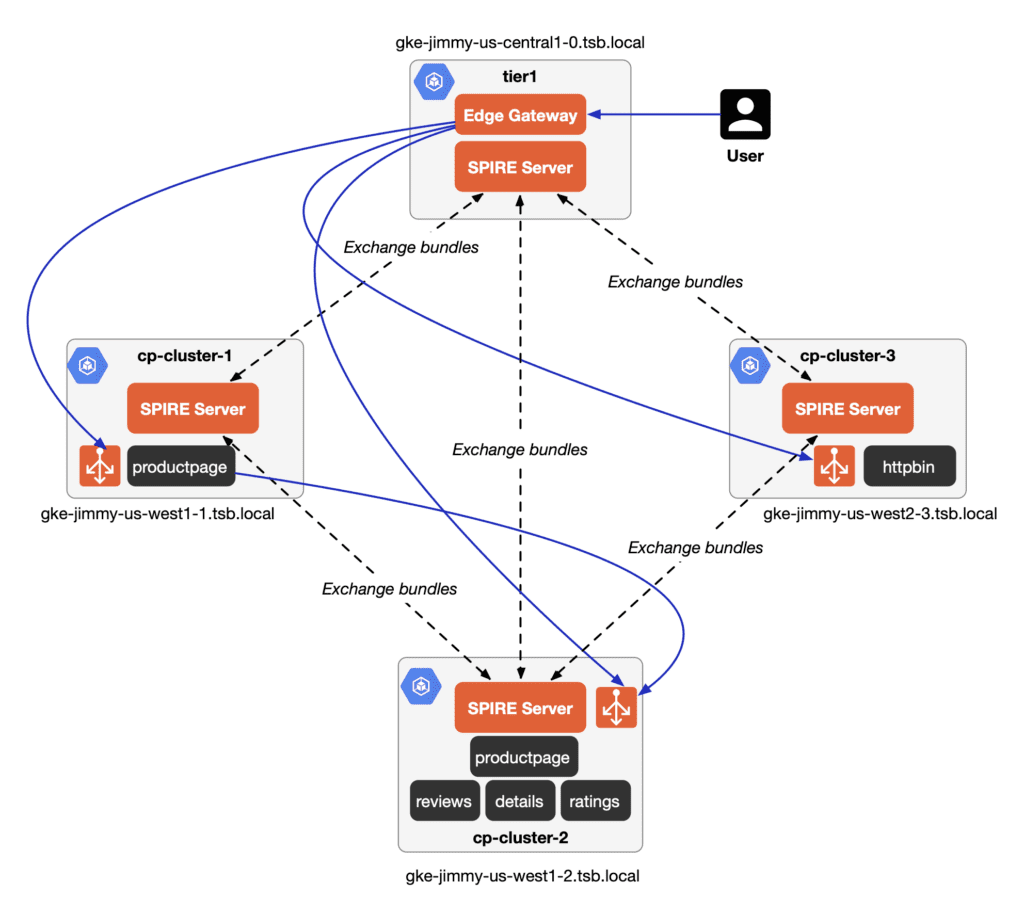

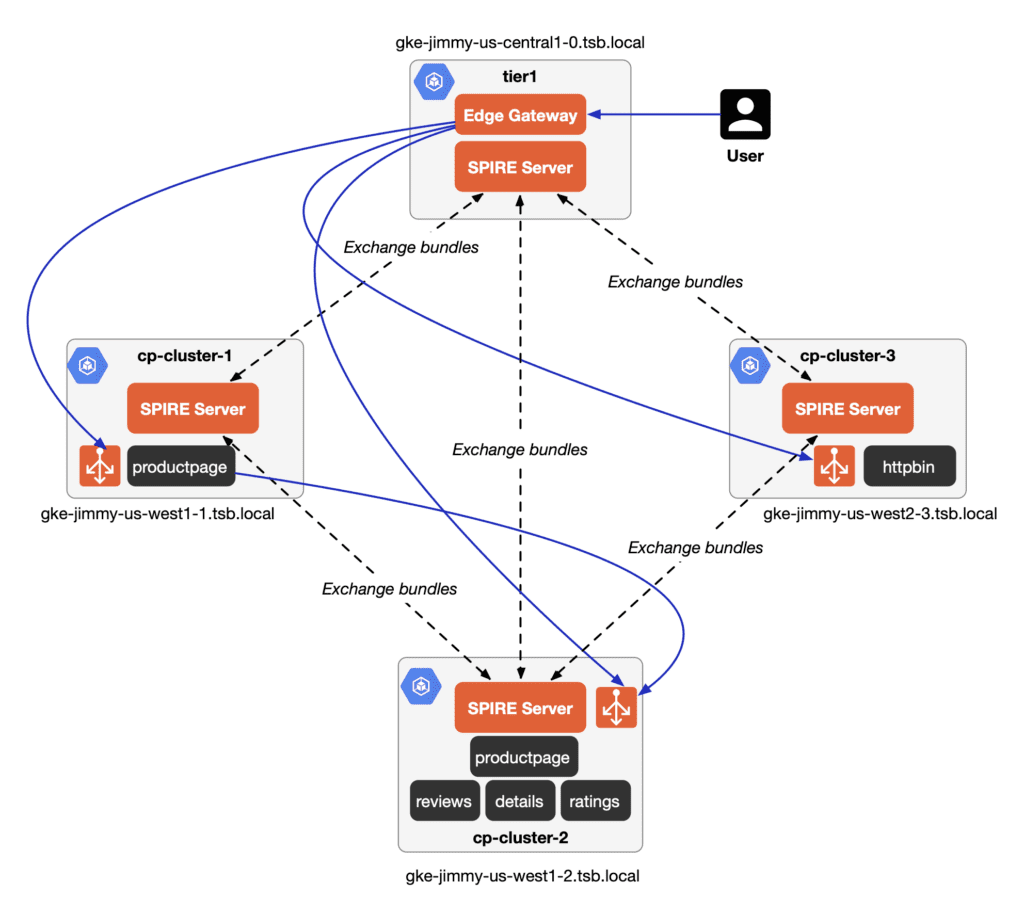

The diagram below shows the deployment model for the Istio multi-cloud and SPIRE federation.

Below, I will demonstrate how to achieve seamless cross-cluster access in a multi-cloud Istio mesh.

- Create two Kubernetes clusters in GKE, named cluster-1 and cluster-2.

- Deploy SPIRE and set up federation in both clusters.

- Install Istio in both clusters. Pay attention to the trust domain, the east-west gateways, the ingress gateways, configuring sidecarInjectorWebhook to mount the SPIFFE workload-socket UDS, and enabling the DNS proxy.

- Deploy test applications and verify seamless cross-cluster access.

The versions of the components we deployed are as follows:

- Kubernetes: v1.29.4

- Istio: v1.22.1

- SPIRE: v1.5.1

- cert-manager: v1.15.1

I have saved all commands and step-by-step instructions on GitHub: rootsongjc/istio-multi-cluster. You can follow the instructions in this project. Here are explanations of the main steps.

1. Preparing Kubernetes Clusters

Open Google Cloud Shell or your local terminal, and make sure you have installed the gcloud CLI. Use the following commands to create two clusters:

gcloud container clusters create cluster-1 --zone us-central1-a --num-nodes 3

gcloud container clusters create cluster-2 --zone us-central1-b --num-nodes 3

2. Deploying cert-manager

Use cert-manager as the root CA to issue certificates for istiod and SPIRE.

./cert-manager/install-cert-manager.sh

3. Deploying SPIRE Federation

Basic information for the SPIRE federation is as follows:

[Table: SPIRE federation settings for cluster-1 (trust domain foo.com) and cluster-2 (trust domain bar.com)]

Note: The trust domain does not need to match the DNS name but must be the same as the trust domain in the Istio Operator configuration.
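You can check this requirement from the command line. The following is a minimal sketch that assumes the default `istio` ConfigMap in `istio-system`, whose `mesh` key contains a `trustDomain: <value>` line. The hypothetical helpers `mesh_trust_domain` and `check_trust_domain` extract that value and compare it with the SPIRE trust domain, which is foo.com for cluster-1 in this example. They are not a general YAML parser.

```shell
#!/bin/sh
# mesh_trust_domain: read Istio mesh config (YAML) on stdin and print the trustDomain value.
mesh_trust_domain() {
  sed -n 's/^[[:space:]]*trustDomain:[[:space:]]*//p' | tr -d "\"'" | head -n 1
}

# check_trust_domain: compare Istio's trust domain (from stdin) with the SPIRE one.
check_trust_domain() (
  expected=$1
  actual=$(mesh_trust_domain)
  if [ "$actual" = "$expected" ]; then
    echo "OK: trust domain $actual"
  else
    echo "MISMATCH: Istio uses '$actual', SPIRE uses '$expected'" >&2
    return 1
  fi
)

# Example usage (requires access to cluster-1):
# kubectl --context=cluster-1 -n istio-system get configmap istio \
#   -o jsonpath='{.data.mesh}' | check_trust_domain foo.com
```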

Execute the following command to deploy SPIRE federation:

./spire/install-spire.sh

For details on managing identities in Istio using SPIRE, refer to Managing Certificates in Istio with cert-manager and SPIRE.

4. Installing Istio

We will use IstioOperator to install Istio, configuring each cluster with:

- Automatic Sidecar Injection

- Ingress Gateway

- East-West Gateway

- DNS Proxy

- SPIRE Integration

- Access to remote Kubernetes cluster secrets
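In Istio's multi-primary, multi-network model, each control plane reads the other cluster's API server through a remote secret created with `istioctl create-remote-secret`. The repository's install script may already do something equivalent. The hypothetical helper below only prints the commands for every ordered pair of clusters. It assumes kubeconfig contexts named `cluster-1` and `cluster-2`, and assumes the `--name` value matches the cluster name configured for Istio. On GKE, the default context names follow the pattern `gke_<project>_<zone>_<cluster>`, so you may need to run `kubectl config rename-context` first.

```shell
#!/bin/sh
# remote_secret_cmds: print the commands that give each cluster's istiod
# read access to every other cluster. Pipe the output to sh to execute it.
remote_secret_cmds() (
  for src in "$@"; do
    for dst in "$@"; do
      [ "$src" = "$dst" ] && continue   # a cluster needs no secret for itself
      echo "istioctl create-remote-secret --context=$src --name=$src | kubectl apply -f - --context=$dst"
    done
  done
)

remote_secret_cmds cluster-1 cluster-2
# To apply: remote_secret_cmds cluster-1 cluster-2 | sh
```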

Execute the following command to install Istio:

istio/install-istio.sh

Verifying Traffic Federation

To verify the correctness of the multi-cloud installation, we will deploy different versions of the helloworld application in both clusters and then access the helloworld service from cluster-1 to test the following cross-cluster access scenarios:

- East-West Traffic Federation: Cross-cluster service redundancy

- East-West Traffic Federation: Handling non-local target services

- North-South Traffic Federation: Accessing services via a remote ingress gateway

Execute the following command to deploy the helloworld application in both clusters:

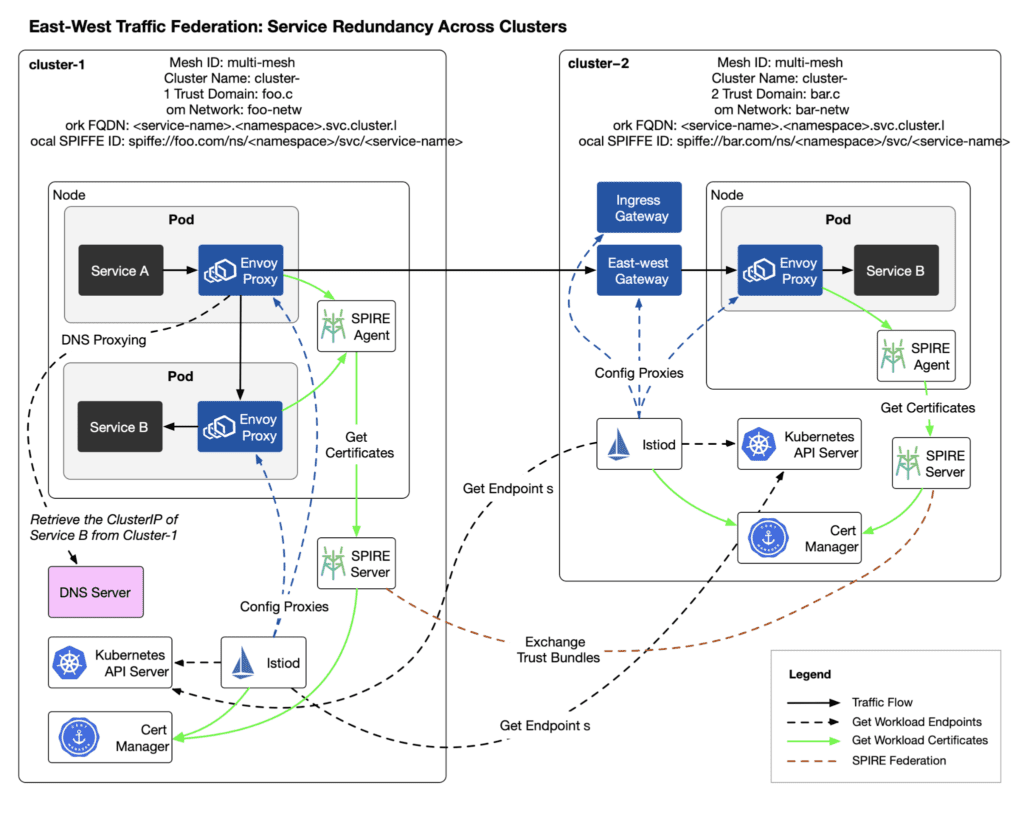

./example/deploy-helloword.sh

East-West Traffic Federation: Cross-Cluster Service Redundancy

After deploying the helloworld application, access the helloworld service from the sleep pod in cluster-1:

kubectl exec --context=cluster-1 -n sleep deployment/sleep -c sleep \
  -- sh -c "while :; do curl -sS helloworld.helloworld:5000/hello; sleep 1; done"

The diagram below shows the deployment architecture and traffic routing path for this scenario.

The response results including both helloworld-v1 and helloworld-v2 indicate that cross-cluster service redundancy is effective.
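Instead of watching the endless loop, you can send a fixed number of requests and count the responses per version. The following is a minimal sketch that assumes the helloworld sample's response format `Hello version: v1, instance: ...`. `count_versions` is a hypothetical helper: run it in your local shell and pipe the output of a bounded `kubectl exec` loop into it.

```shell
#!/bin/sh
# count_versions: tally helloworld responses per version, reading responses on stdin.
# Output: one "<version> <count>" line per version, sorted by version.
count_versions() {
  sed -n 's/^Hello version: \([^,]*\),.*/\1/p' | sort | uniq -c | awk '{ print $2, $1 }'
}

# Example usage against cluster-1 (20 requests instead of an endless loop):
# kubectl exec --context=cluster-1 -n sleep deployment/sleep -c sleep -- \
#   sh -c 'for i in $(seq 20); do curl -sS helloworld.helloworld:5000/hello; done' | count_versions
```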

Verifying DNS

At this point, the helloworld service exists both locally and in the remote cluster. Query the DNS name of the helloworld service in cluster-1:

kubectl exec -it deploy/sleep --context=cluster-1 -n sleep -- nslookup helloworld.helloworld.svc.cluster.local

You will get the ClusterIP of the helloworld service in cluster-1.

Verifying Traffic Routing

Next, we will verify the cross-cluster traffic routing path by examining the Envoy proxy configuration. View the endpoints of the helloworld service in cluster-1:

istioctl proxy-config endpoints deployment/sleep.sleep --context=cluster-1 --cluster "outbound|5000||helloworld.helloworld.svc.cluster.local"

You will see output similar to the following:

ENDPOINT              STATUS    OUTLIER CHECK   CLUSTER
10.76.3.22:5000       HEALTHY   OK              outbound|5000||helloworld.helloworld.svc.cluster.local
34.136.67.85:15443    HEALTHY   OK              outbound|5000||helloworld.helloworld.svc.cluster.local

The first endpoint belongs to the helloworld service in cluster-1. The second is the load balancer address of the istio-eastwestgateway service in cluster-2. Istio sets the SNI on cross-cluster TLS connections, and the East-West Gateway in cluster-2 uses the SNI to identify the target service.

Execute the following command to query the endpoint in cluster-2 based on the previous SNI:

istioctl proxy-config endpoints deploy/istio-eastwestgateway.istio-system --context=cluster-2 --cluster "outbound_.5000_._.helloworld.helloworld.svc.cluster.local"

You will get output similar to the following:

ENDPOINT          STATUS    OUTLIER CHECK   CLUSTER
10.88.2.4:5000    HEALTHY   OK              outbound_.5000_._.helloworld.helloworld.svc.cluster.local

This is the endpoint of the helloworld service in cluster-2.
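The relationship between the two cluster names used in these commands is mechanical. The SNI-style name on the East-West Gateway is the Envoy outbound cluster name with each `|` replaced by `_.`. The helper below reproduces that mapping for the names in this example. It is a sketch based on the two outputs above rather than Istio's own code, and `sni_for_cluster` is a hypothetical name.

```shell
#!/bin/sh
# sni_for_cluster: turn an Envoy outbound cluster name into the SNI-style name
# used on the East-West Gateway, by replacing every "|" with "_.".
sni_for_cluster() {
  printf '%s\n' "$1" | sed 's/|/_./g'
}

sni_for_cluster 'outbound|5000||helloworld.helloworld.svc.cluster.local'
# outbound_.5000_._.helloworld.helloworld.svc.cluster.local
```

You can pass the result to the `--cluster` flag of `istioctl proxy-config endpoints` when inspecting the gateway.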

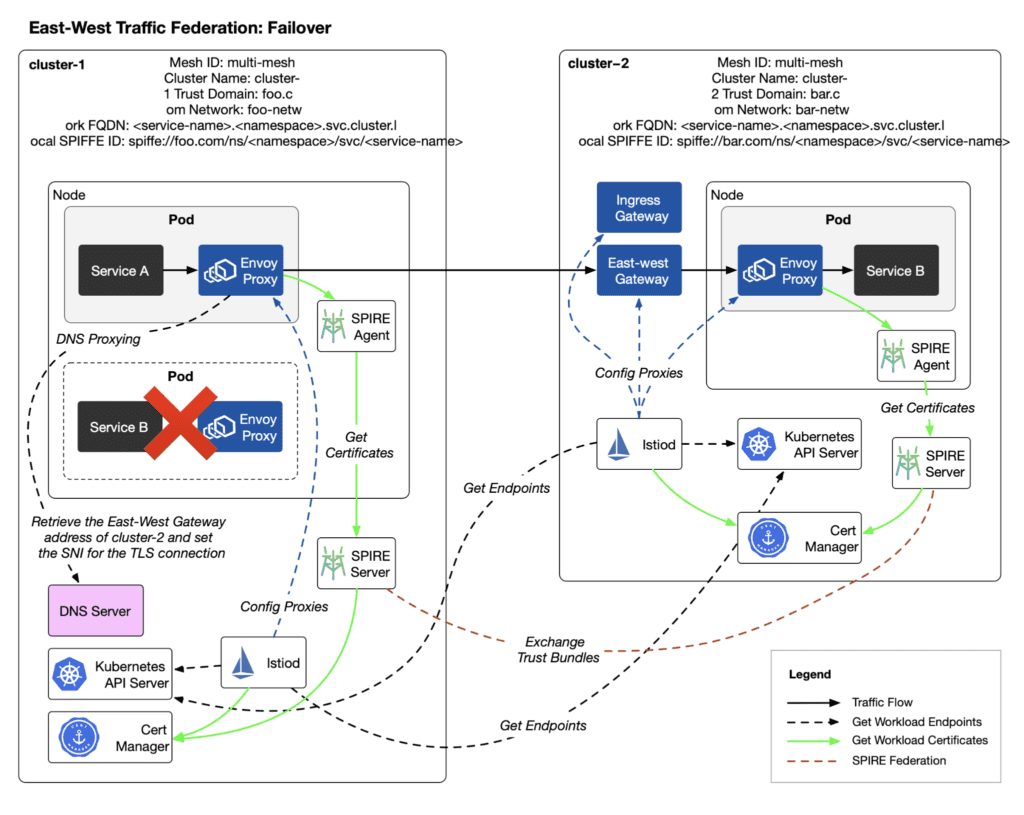

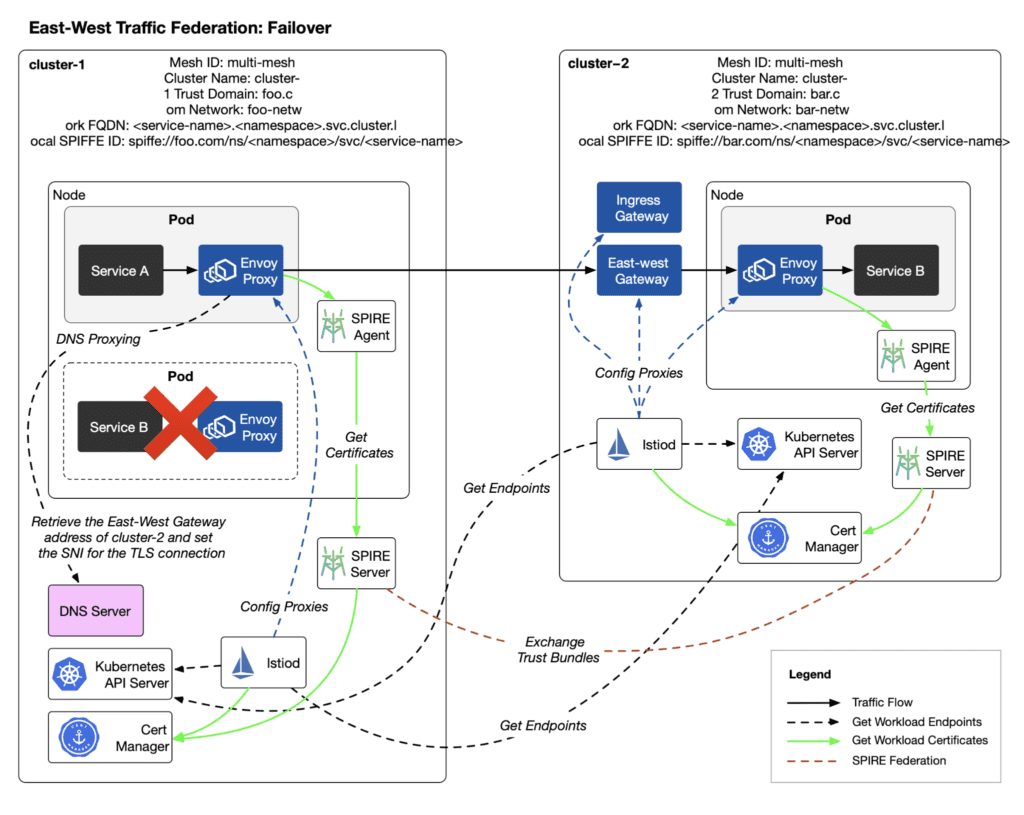

The steps above show the traffic path for cross-cluster redundant services. Next, we will remove the helloworld workload and then the helloworld service in cluster-1. Istio fails over automatically, without any configuration changes.

East-West Traffic Federation: Failover

Execute the following command to scale down the replicas of helloworld-v1 in cluster-1 to 0:

kubectl -n helloworld scale deploy helloworld-v1 --context=cluster-1 --replicas 0

Access the helloworld service again from cluster-1:

kubectl exec --context=cluster-1 -n sleep deployment/sleep -c sleep \
  -- sh -c "while :; do curl -sS helloworld.helloworld:5000/hello; sleep 1; done"

You will still receive responses from helloworld-v2.

Now, directly delete the helloworld service in cluster-1:

kubectl delete service helloworld -n helloworld --context=cluster-1

You will still receive responses from helloworld-v2, indicating that cross-cluster failover is effective.
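To turn the failover check into a pass/fail test, you can assert that every response in a bounded run comes from helloworld-v2. The following sketch uses `all_from_version`, a hypothetical helper. It assumes the same `Hello version: ...` response format as the helloworld sample and fails on empty input.

```shell
#!/bin/sh
# all_from_version: succeed only if every helloworld response on stdin
# came from the given version (e.g. v2 after failover).
all_from_version() (
  want=$1
  total=0
  other=0
  while IFS= read -r line || [ -n "$line" ]; do
    case $line in
      "Hello version: $want,"*) total=$((total + 1)) ;;
      "Hello version: "*) total=$((total + 1)); other=$((other + 1)) ;;
    esac
  done
  [ "$total" -gt 0 ] && [ "$other" -eq 0 ]
)

# Example usage against cluster-1:
# kubectl exec --context=cluster-1 -n sleep deployment/sleep -c sleep -- \
#   sh -c 'for i in $(seq 10); do curl -sS helloworld.helloworld:5000/hello; done' \
#   | all_from_version v2 && echo "failover OK"
```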

The diagram below shows the traffic path for this scenario.

Verifying DNS

At this point, the helloworld service exists only in the remote cluster. Query the DNS name of the helloworld service in cluster-1:

kubectl exec -it deploy/sleep --context=cluster-1 -n sleep -- nslookup helloworld.helloworld.svc.cluster.local

You will get the address of the East-West Gateway in cluster-2. Cross-cluster traffic then enters that gateway on port 15443.

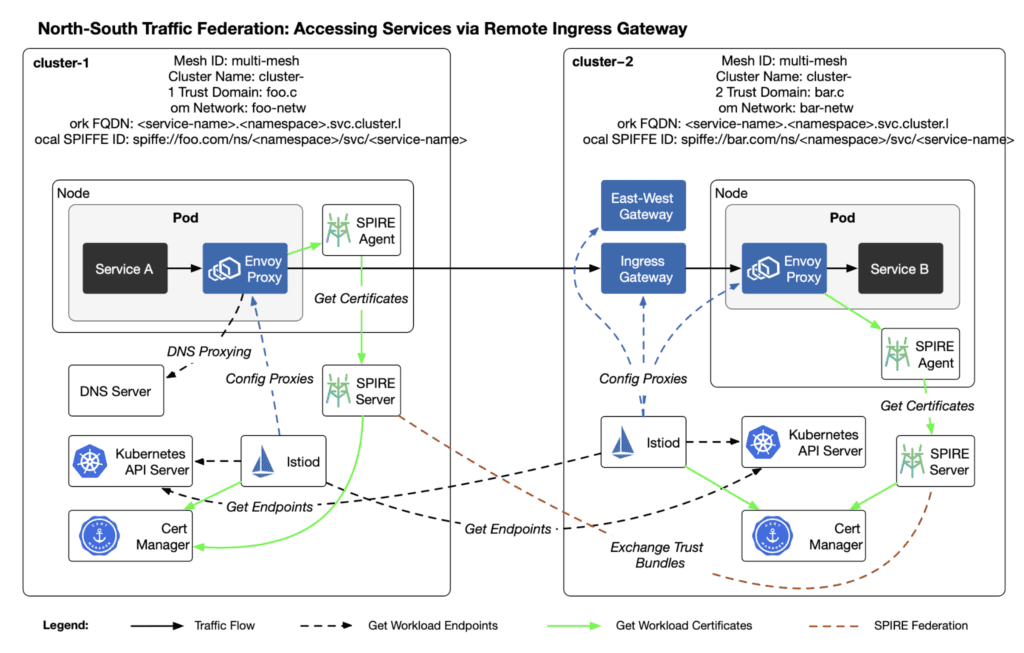

North-South Traffic Federation: Accessing Services via Remote Ingress Gateway

Accessing services in a remote cluster through the ingress gateway is the most traditional way of cross-cluster access. The diagram below shows the traffic path for this scenario.

Execute the following command to create a Gateway and VirtualService in cluster-2:

kubectl apply --context=cluster-2 \
  -f ./examples/helloworld-gateway.yaml -n helloworld

Get the address of the ingress gateway in cluster-2:

GATEWAY_URL=$(kubectl -n istio-ingress --context=cluster-2 get service istio-ingressgateway -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

Execute the following command to access the service via the remote ingress gateway:

kubectl exec --context=cluster-1 -n sleep deployment/sleep -c sleep \
  -- sh -c "while :; do curl -s http://$GATEWAY_URL/hello; sleep 1; done"

You will receive responses from helloworld-v2.

Verifying Identity

Execute the following commands to obtain the certificate chain from the sleep pod in cluster-1 and inspect each certificate (the awk command splits the chain portably; split -p is only available in BSD/macOS split):

istioctl proxy-config secret deployment/sleep.sleep -o json --context=cluster-1 | jq -r '.dynamicActiveSecrets[0].secret.tlsCertificate.certificateChain.inlineBytes' | base64 --decode > chain.pem

awk '/-----BEGIN CERTIFICATE-----/{n++} {print > ("cert-" n ".pem")}' chain.pem

openssl x509 -noout -text -in cert-2.pem

openssl x509 -noout -text -in cert-1.pem

If you see the following fields in the output, the identity has been assigned correctly:

Subject: C=US, O=SPIFFE

URI:spiffe://foo.com/ns/sleep/sa/sleep

View the identity information in SPIRE:

kubectl --context=cluster-1 exec -i -t -n spire spire-server-0 -c spire-server \
  -- ./bin/spire-server entry show -socketPath /run/spire/sockets/server.sock --spiffeID spiffe://foo.com/ns/sleep/sa/sleep

You will see output similar to the following:

Found 1 entry

Entry ID : 9b09080d-3b67-44c2-a5b8-63c42ee03a3a

SPIFFE ID : spiffe://foo.com/ns/sleep/sa/sleep

Parent ID : spiffe://foo.com/k8s-workload-registrar/cluster-1/node/gke-cluster-1-default-pool-18d66649-z1lm

Revision : 1

X509-SVID TTL : default

JWT-SVID TTL : default

Selector : k8s:node-name:gke-cluster-1-default-pool-18d66649-z1lm

Selector : k8s:ns:sleep

Selector : k8s:pod-uid:6800aca8-7627-4a30-ba30-5f9bdb5acdb2

FederatesWith : bar.com

DNS name : sleep-86bfc4d596-rgdkf

DNS name : sleep.sleep.svc

Recommendations for Production Environments

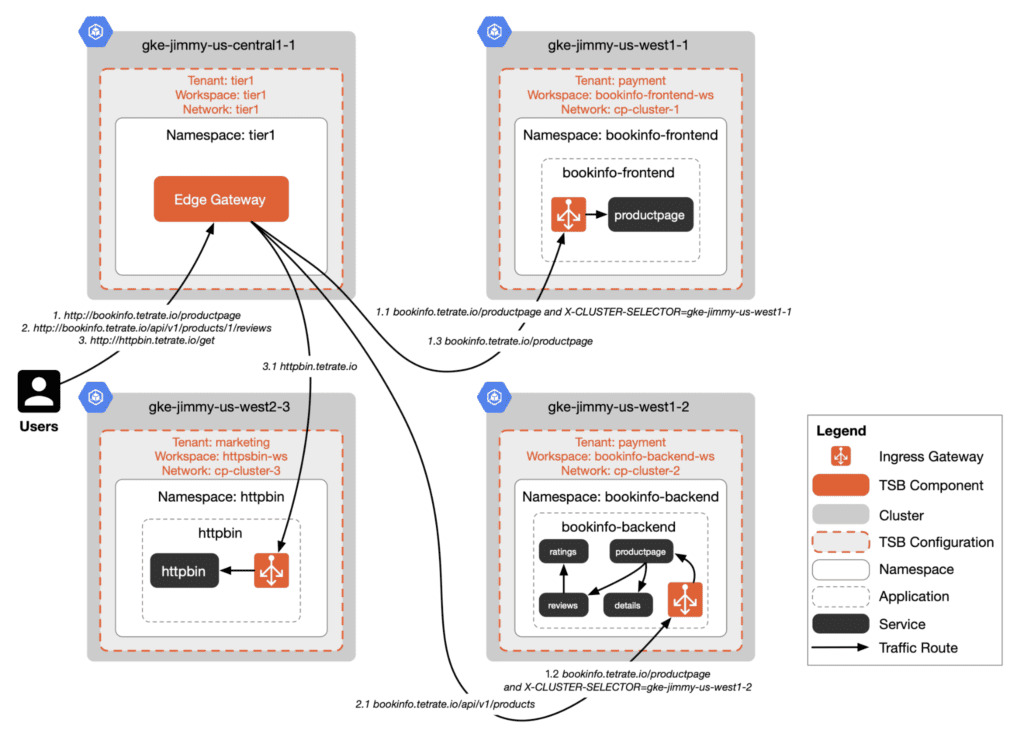

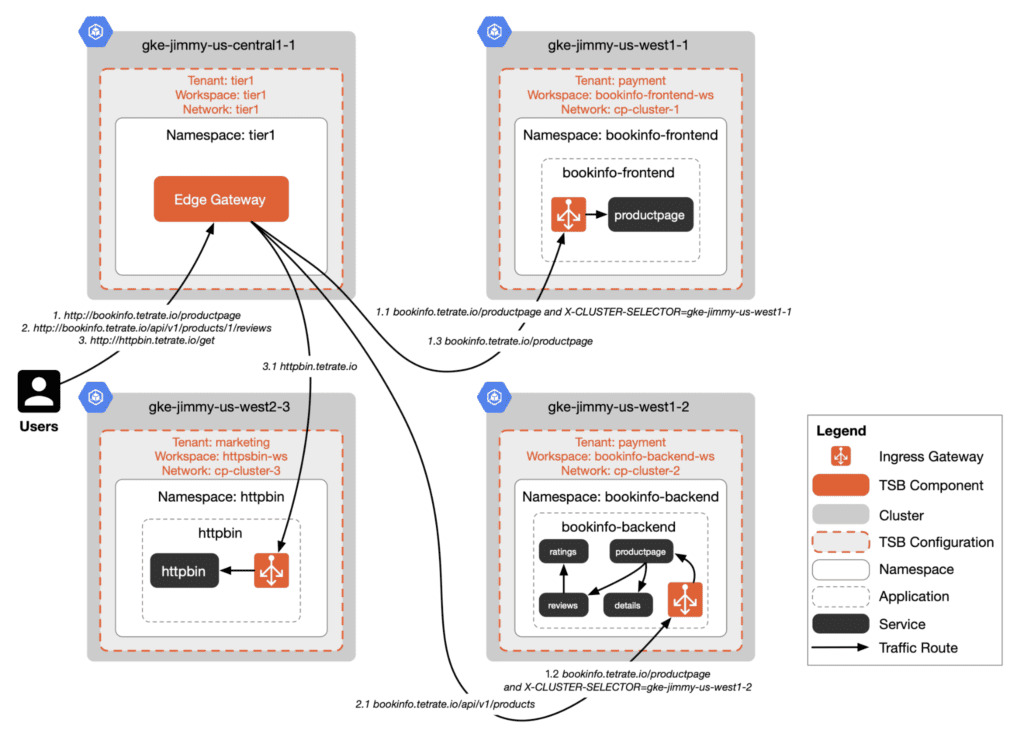

For production environments, we recommend a unified gateway with a two-tier architecture. The Tier-1 edge gateway holds the global traffic routing configuration and pushes the translated Istio configuration to the ingress gateways in the Tier-2 clusters.

The diagram below shows the deployment of an Istio service mesh using SPIRE federation and a two-tier architecture with TSB (Tetrate Service Bridge).

We have divided these four Kubernetes clusters into Tier1 cluster (tier1) and Tier2 clusters (cp-cluster-1, cp-cluster-2, and cp-cluster-3). An Edge Gateway is installed in T1, while bookinfo and httpbin applications are installed in T2. Each cluster will have an independent trust domain, and all these clusters will form a SPIRE federation.

The diagram below shows the traffic routing for users accessing bookinfo and httpbin services through the ingress gateway.

You need to create a logical abstraction layer suitable for multi-cloud above Istio. For detailed information about the unified gateway in TSB, refer to TSB Documentation.

Summary

This article has detailed the key technologies and methods for implementing service identity verification, DNS resolution, and cross-cluster traffic management in an Istio multi-cloud mesh environment. By precisely configuring Istio and SPIRE federation, we have enhanced the system’s security and improved the efficiency and reliability of inter-service communication. Following these steps, you will be able to build a robust, scalable multi-cloud service mesh to meet the complex needs of modern applications.

References

- Deploying a Federated SPIRE Architecture – spiffe.io

- Install Multi-Primary on different networks – istio.io

- Istio SPIRE Integration – istio.io

- DNS Proxying – istio.io

- Attesting Istio workload identities with SPIFFE and SPIRE – developer.ibm.com

- Managing Certificates in Istio with cert-manager and SPIRE – tetrate.io