Single-Origin AI Infrastructure: LLM, MCP, and OpenInference in Tetrate Agent Router Service

Discover how Tetrate Agent Router Service closes the experimentation-to-production gap with unified LLM routing, MCP catalog for simplified server discovery, and OpenInference observability—all as managed infrastructure.

Now Available

MCP Catalog with verified first-party servers, profile-based configuration, and OpenInference observability are now generally available in Tetrate Agent Router Service. Start building production AI agents today with $5 free credit.

It’s an exciting time for AI engineers. The ecosystem has taken innovation to new heights, even by the already lofty standards of AI: major model releases and retirements from Anthropic (Sonnet 4.5 is in, 3.5 is out) and OpenAI (GPT-5 is in, GPT-4o was out, then resurrected); a new MCP spec (2025-06-18); and almost back-to-back launches of TWO definitive MCP registries (from the Model Context Protocol team AND GitHub).

All this innovation means there’s never been a better time to experiment, yet the dynamic landscape makes taking experiments to production harder than ever. We’re hearing CIOs say 2026 is the year AI experiments finally ship and show ROI (or at least, 5% of them should). Meanwhile, the quieter problems pile up: infrastructure sprawl, complexity debt, and unproven software in business-critical systems. The odds keep stacking against enterprise production readiness.

We built Tetrate Agent Router Service to close the experimentation-to-production gap. It’s “single-origin” AI infrastructure for building production AI agents, combining reliability with comprehensive support for LLMs, MCP, and OpenInference, all delivered as managed infrastructure. No configuration race conditions, no inconsistent routing, no separate catalogs depending on whether your agents hit MCP or LLM endpoints. Best of all, no complex infrastructure to manage.

Today, we proudly donated MCP support to Envoy AI Gateway. Since Agent Router Service is built on Envoy AI Gateway, it inherits both the gateway’s enterprise robustness and these new MCP features. We’re also launching major Agent Router Service enhancements that make MCP adoption easier: simplified discovery and configuration, plus seamless AI observability with OpenInference support.

These features are now generally available to all Agent Router Service users. Sign up for Agent Router Service to get started today. Read on for more details.

Simplifying MCP Adoption with First-Party Server Discovery and Configuration

The MCP Adoption Challenge

MCP is changing how AI applications connect to tools and data, but adoption has real friction. The first problem is discovery. MCP servers are scattered across GitHub repos, blogs, and forums with no central directory. You’re left figuring out which servers are actively maintained, which ones you can trust with your data (often there are multiple options with no first-party server), and what they actually do. You end up reading through READMEs and source code just to understand basic capabilities.

Finding a trusted server is just the start. GitHub Copilot needs a personal access token configured one way. Stripe wants API keys in specific environment variables. Each server has different connection requirements, and you need to translate everything into the exact JSON your AI assistant expects. One typo means debugging cryptic errors. What should take five minutes becomes an afternoon of trial and error.

The problems don’t end when the connection finally works. Servers that expose many tools immediately hurt performance. Your model must parse dozens of tool descriptions with every request, slowing responses. You face constant prompts: “Should I search issues or list pull requests? Update the issue or add a comment?” The breadth becomes noise instead of power. And when something breaks, you’re stuck troubleshooting client-server incompatibilities with minimal guidance.

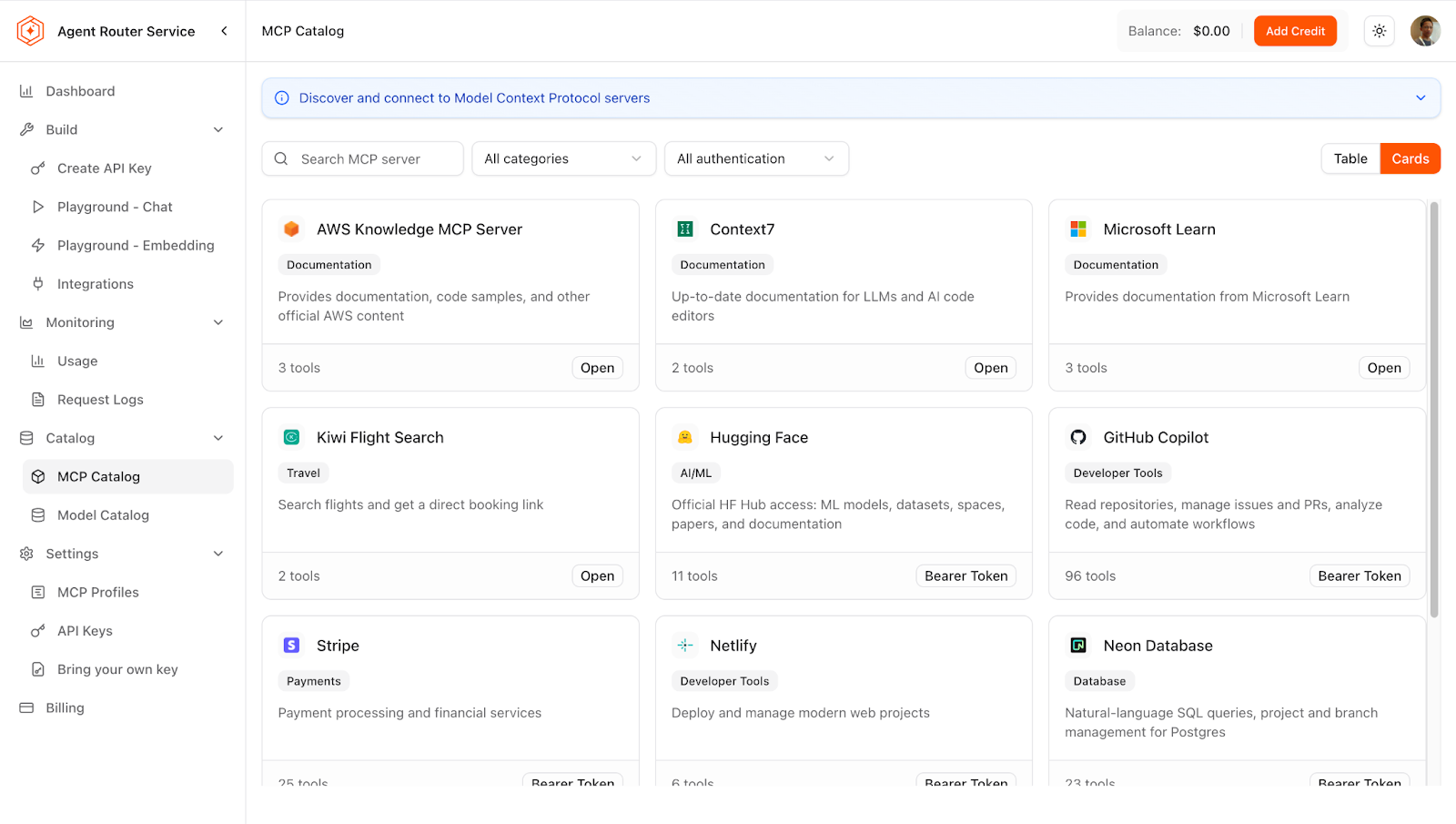

MCP Catalog to Connection in Minutes with Tetrate

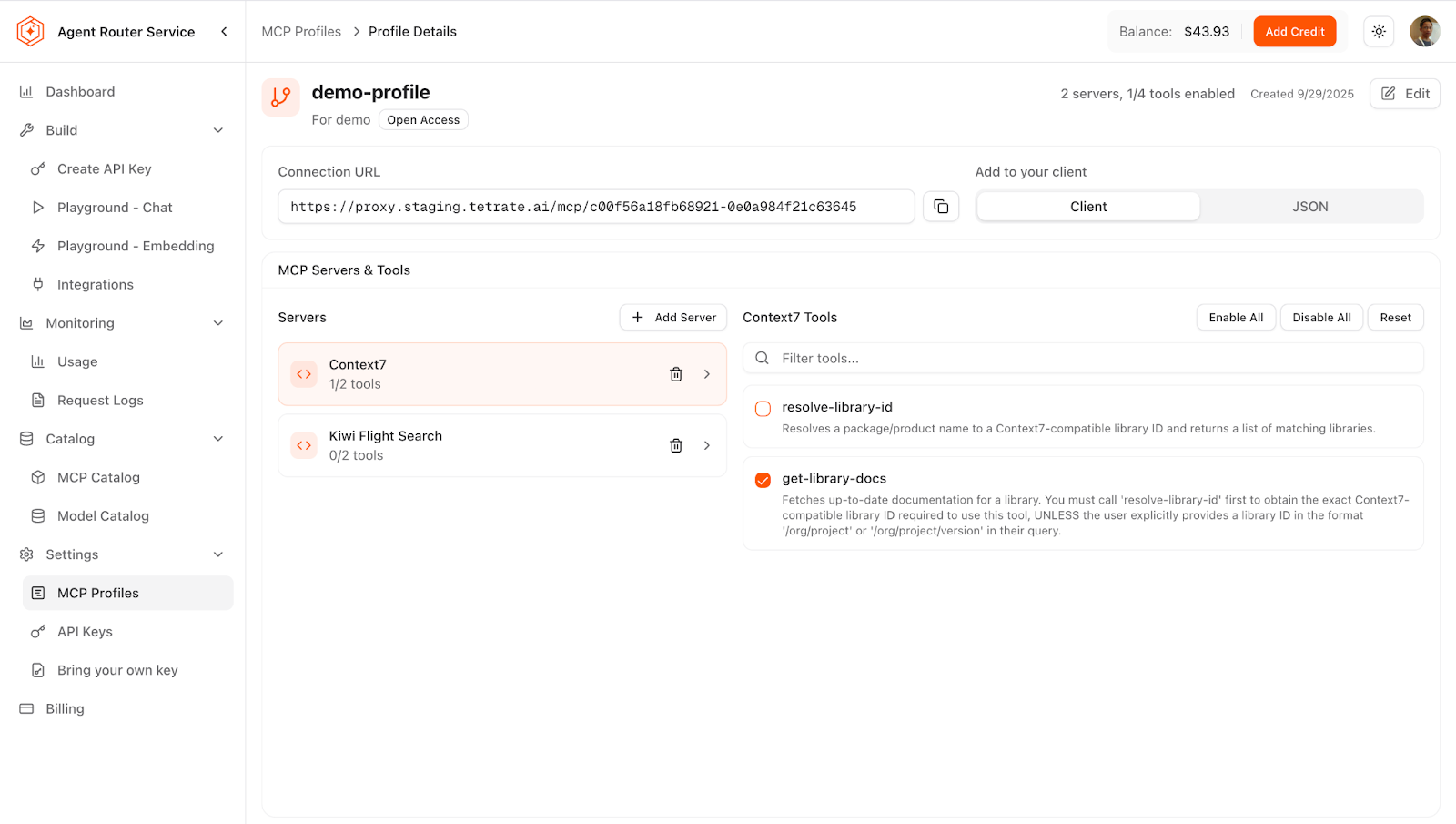

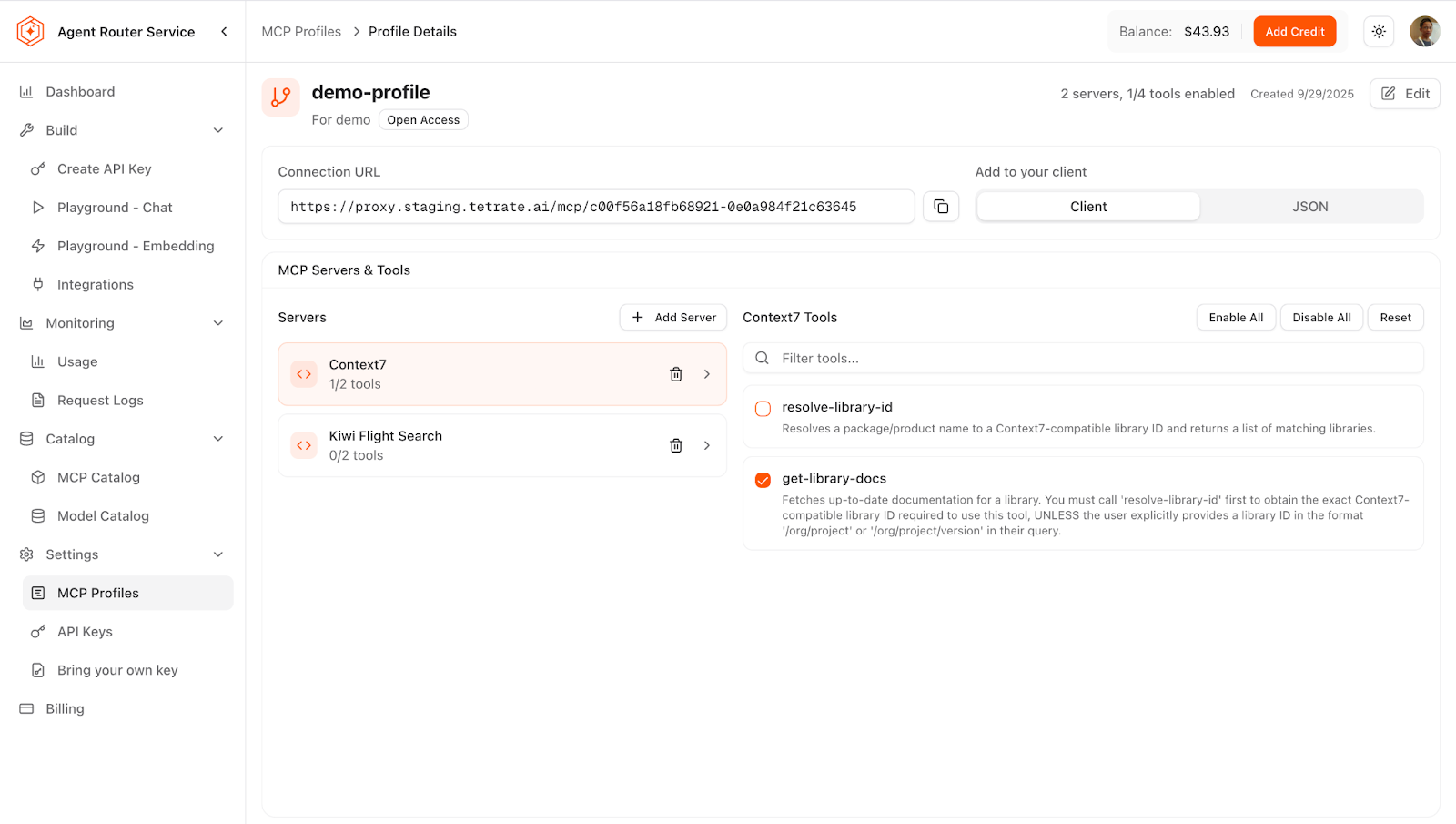

We address these problems directly with two core features: the MCP Catalog and profile-based configuration. The catalog functions as a verified directory of first-party hosted MCP servers, organized by category with clear information about what each provides. Even though trusted first-party MCP servers are in the minority today, they are exploding in popularity, and we expect them to become the majority in the near future. Looking for documentation tools? You’ll see Context7, AWS Knowledge, and Microsoft Learn with tool counts, authentication requirements, and descriptions of their capabilities. Need developer tools? You’ll see GitHub and Stripe. Every server in the catalog is tested and verified, so you know it works before you invest time in connecting it. No more hunting through GitHub or wondering if a server is trustworthy.

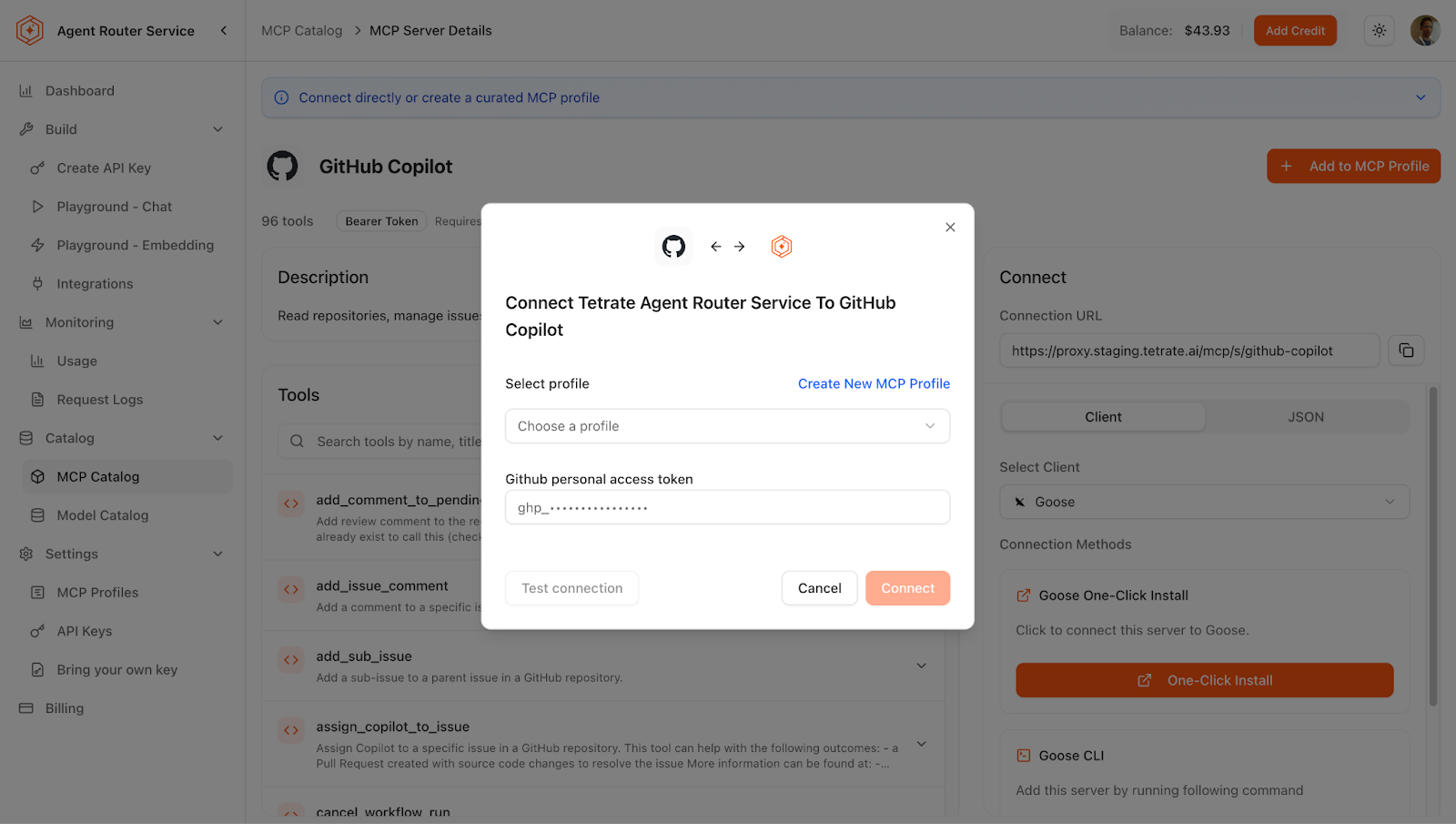

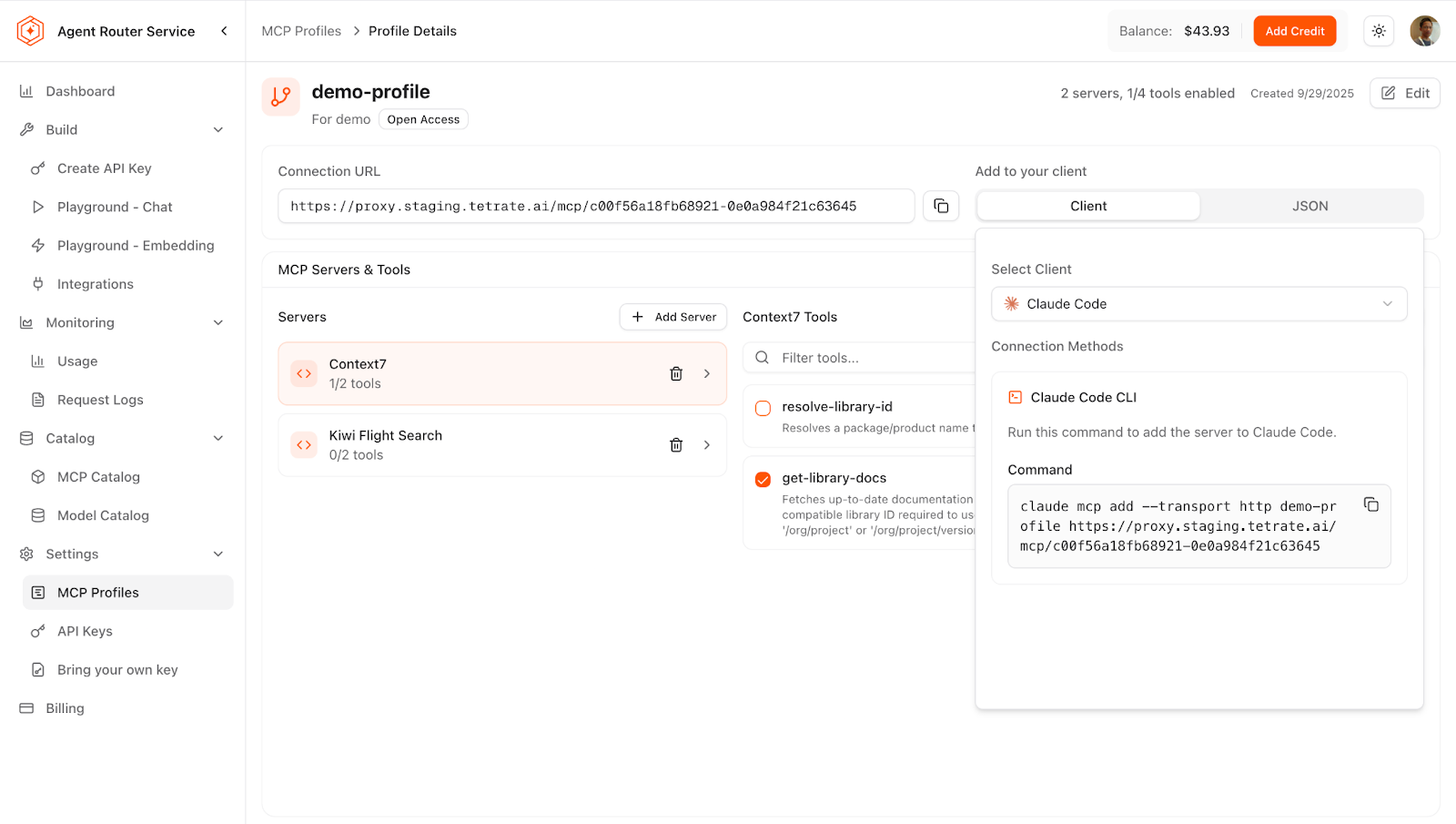

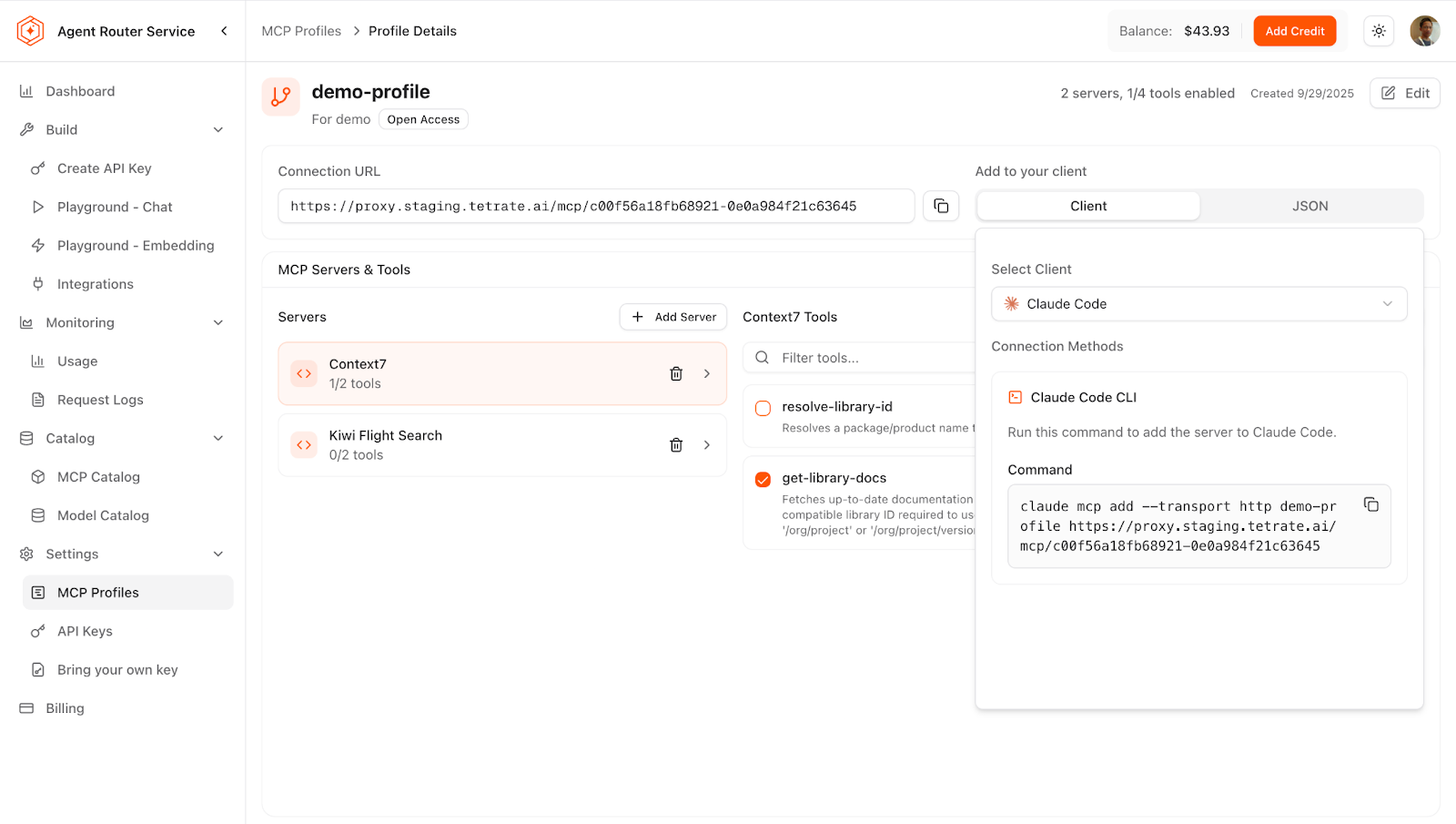

When you find what you need, the “Add to MCP Profile” button starts a guided setup process. Profiles solve the configuration problem by capturing all connection details in one reusable template. Click to add GitHub Copilot to a profile, paste your personal access token once, and you’re done. Agent Router Service stores it securely and generates a proxied connection URL that works with any client. For Context7, a single OAuth click handles authentication. But the key innovation is tool filtering: before saving your profile, you see every tool the server provides, each with a toggle to enable or disable it. Building a profile for GitHub issue management? Enable the 5 issue-related tools and disable the other 91. Profile-level filtering helps even when a server offers its own tool filtering, because two unrelated MCP servers may expose overlapping tools; you keep the one you prefer and disable the duplicates so the LLM isn’t left choosing between them. Your agent now processes a focused set of capabilities, runs faster, and prompts you only for relevant actions.
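Conceptually, gateway-level tool filtering can work by trimming the server’s `tools/list` result before the client sees it and rejecting `tools/call` requests for hidden tools. The Python sketch below illustrates that idea under stated assumptions: the `tools/list` result shape (a `tools` array whose entries carry `name`, `description`, and `inputSchema`) follows the MCP specification, while the `Profile` class and the GitHub-style tool names are hypothetical and do not describe Agent Router Service’s actual implementation.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Profile:
    """Hypothetical MCP profile: the set of upstream tools a client may see and call."""
    name: str
    enabled_tools: set[str] = field(default_factory=set)

    def filter_tools_list(self, result: dict[str, Any]) -> dict[str, Any]:
        """Return a copy of an MCP `tools/list` result with only enabled tools."""
        tools = [t for t in result.get("tools", []) if t["name"] in self.enabled_tools]
        # Preserve other result fields (such as a pagination cursor) unchanged.
        return {**result, "tools": tools}

    def allow_call(self, tool_name: str) -> bool:
        """Gate `tools/call` too, so hidden tools cannot be invoked by name."""
        return tool_name in self.enabled_tools


# Illustrative upstream `tools/list` result (tool names are examples only).
upstream = {
    "tools": [
        {"name": "list_issues", "description": "List issues in a repository",
         "inputSchema": {"type": "object"}},
        {"name": "add_issue_comment", "description": "Comment on an issue",
         "inputSchema": {"type": "object"}},
        {"name": "list_pull_requests", "description": "List pull requests",
         "inputSchema": {"type": "object"}},
    ]
}

profile = Profile("issue-triage", {"list_issues", "add_issue_comment"})
visible = profile.filter_tools_list(upstream)
print([t["name"] for t in visible["tools"]])  # ['list_issues', 'add_issue_comment']
print(profile.allow_call("list_pull_requests"))  # False
```

Gating `tools/call` as well as `tools/list` matters in this design: hiding a tool from the list alone would not stop a client that already knows its name from calling it.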

Connecting your client is straightforward because Agent Router Service generates the exact configuration you need. Goose, Cursor, and VS Code users get one-click install. Claude Code users get a single command. Clients needing JSON get perfectly formatted snippets. Behind the scenes, Agent Router Service handles authentication so clients never see your credentials, translates between MCP protocol versions, filters tools at the gateway level, and works around server-specific quirks. Some MCP servers that fail to connect directly to Goose work through Agent Router Service, because it handles the protocol differences.
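To make “the exact configuration you need” concrete, the sketch below renders one proxied profile URL into two client-specific JSON shapes. It assumes Cursor’s `mcp.json` convention (remote servers under `mcpServers` with a `url`) and VS Code’s `mcp.json` convention (entries under `servers` with an explicit `type`); check each client’s current documentation, since these formats evolve. The URL and server name are placeholders, and authentication to the gateway itself is omitted.

```python
import json

# Hypothetical proxied profile URL; the real one is generated by Agent Router Service.
PROFILE_URL = "https://router.example.com/mcp/profiles/issue-triage"


def cursor_config(name: str, url: str) -> dict:
    """Cursor-style mcp.json: remote servers live under "mcpServers" with a "url"."""
    return {"mcpServers": {name: {"url": url}}}


def vscode_config(name: str, url: str) -> dict:
    """VS Code-style mcp.json: entries live under "servers" with an explicit "type"."""
    return {"servers": {name: {"type": "http", "url": url}}}


snippets = {
    "cursor": cursor_config("github-issues", PROFILE_URL),
    "vscode": vscode_config("github-issues", PROFILE_URL),
}

for client, cfg in snippets.items():
    print(f"--- {client} ---")
    print(json.dumps(cfg, indent=2))
```

Note that the upstream server’s credentials appear in neither snippet; each client only ever sees the proxied URL.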

The practical impact is clear. Configure authentication once per server, use it everywhere. Create a profile for a specific workflow and share it with your team via URL. Find and connect to new MCP servers in minutes instead of hours of setup and debugging. When GitHub releases a new tool or you need to adjust exposed capabilities, update the profile once and all clients reflect the change. Agent Router Service centralizes MCP complexity in one place where you manage it once, then forget about it. That’s what you need to move from experimenting with MCP to building on it.

Blackbox to Glassbox with OpenInference

Observability Challenges of Building and Running Production-quality Agents

AI applications break traditional monitoring. LLM requests involve complex token costs, streaming responses, and semantic failures that still return HTTP 200. Unlike web services, AI apps need to track token usage, response times, and actual content to catch hallucinations and poor outputs. MCP traffic adds complexity with bidirectional communication and multi-step workflows spanning services. You need specialized tools that capture both LLM metrics and MCP interactions to debug and optimize AI systems.

Tetrate Agent Router Service automatically inherits OpenInference tracing from Envoy AI Gateway, giving you enterprise observability without setup. Every request generates detailed traces with token usage and response times, plus visibility into MCP transactions for complex agent actions, such as using GitHub’s MCP server to generate docs and tests or refactor code. Traces export to any OpenTelemetry-compatible backend, such as Elastic, Jaeger, or Arize Phoenix, for end-to-end visibility without managing infrastructure. With streamlined OTel export workflows, production-grade agent observability is simpler than ever.

OpenInference Export to Elastic Example with Tetrate

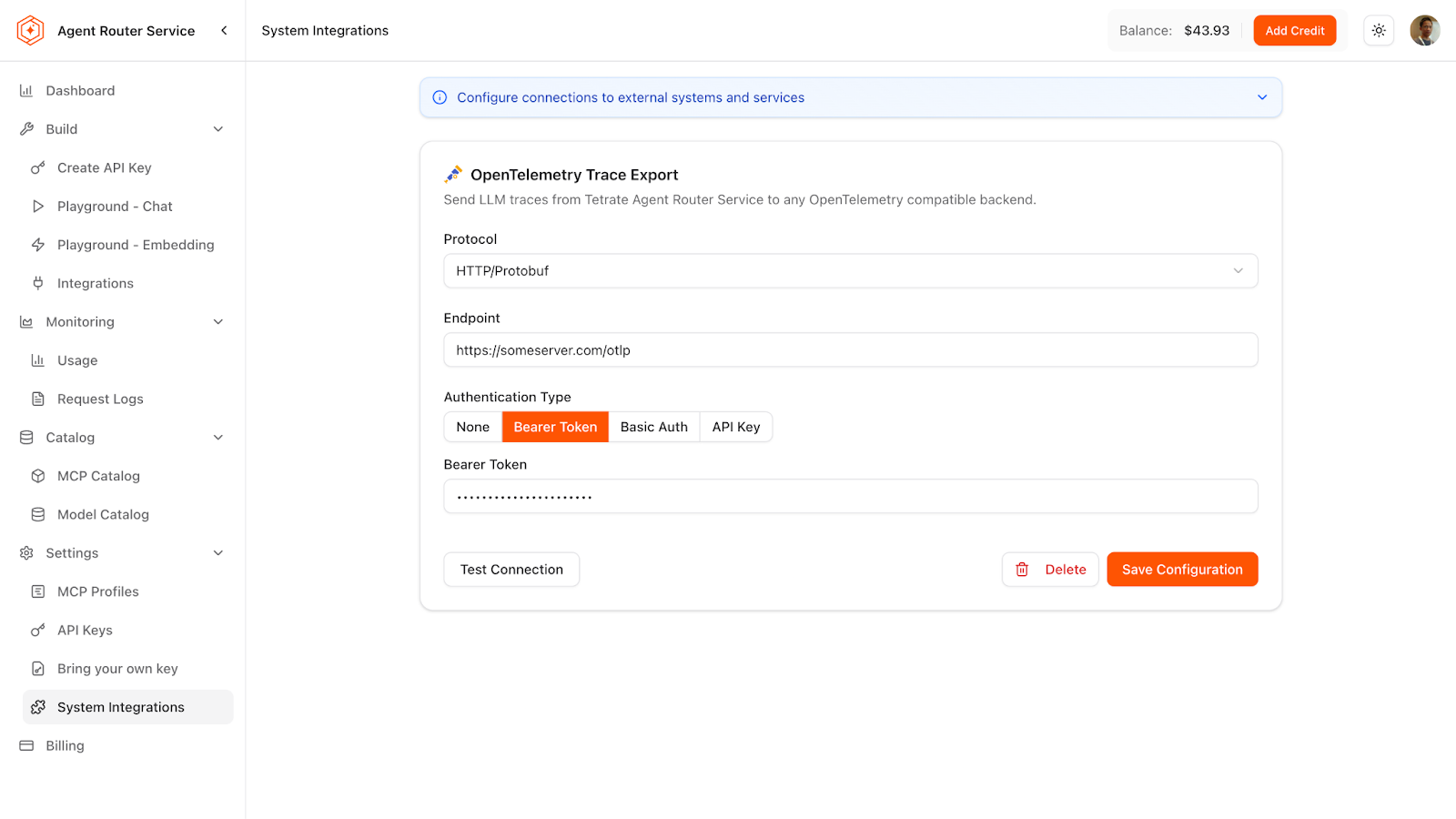

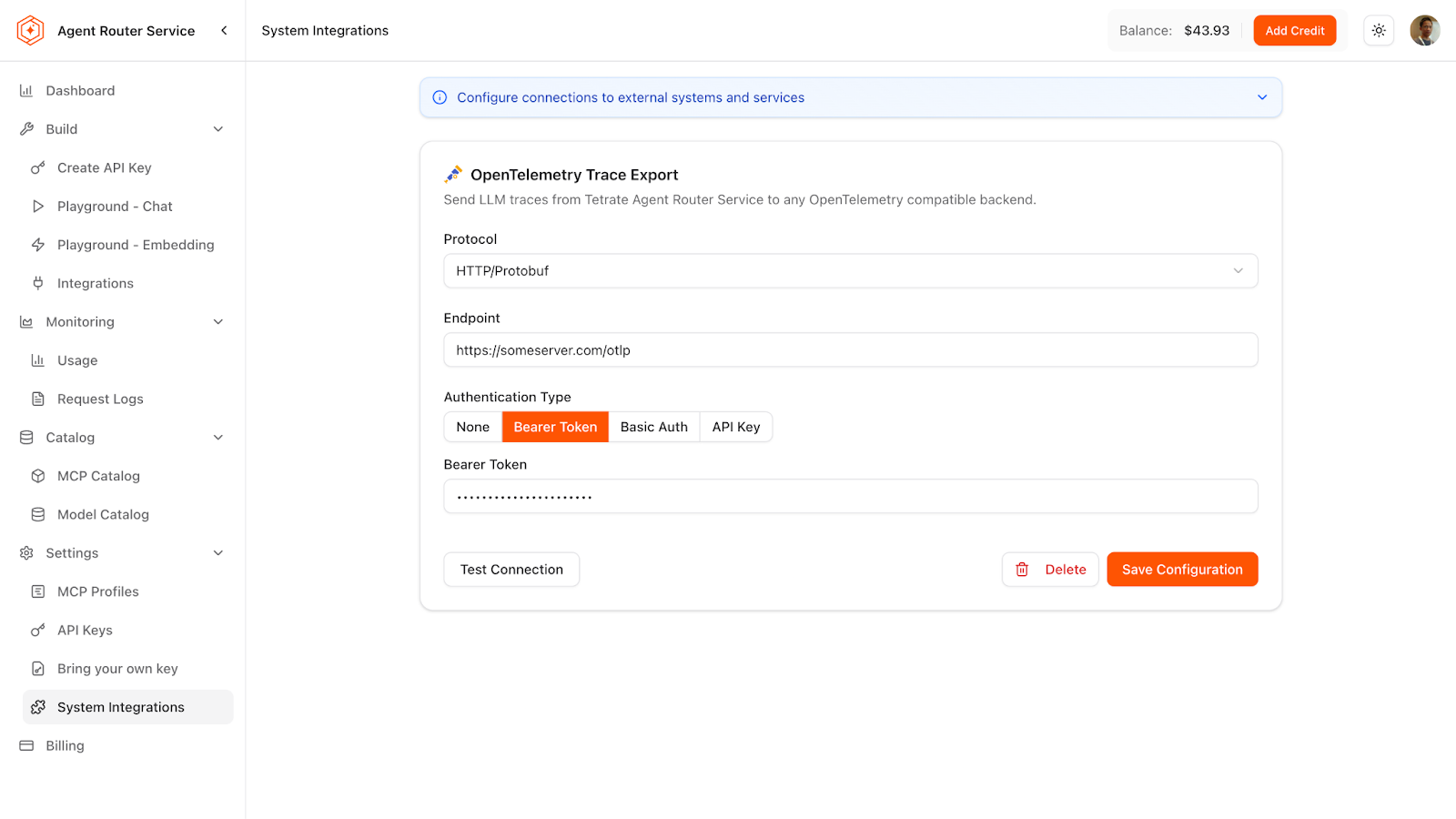

To try out OTel export in Agent Router Service, navigate to Settings → System Integrations and you’ll see the OpenTelemetry Trace Export configuration. The interface is straightforward: select your protocol (HTTP/Protobuf or gRPC), enter your observability backend’s endpoint, and configure authentication. Agent Router Service supports multiple authentication methods—no auth for local development, bearer tokens for cloud services, basic auth, or API keys depending on your backend’s requirements. Click “Test Connection” to verify everything works, then “Save Configuration.” From that moment, every API request and MCP transaction flowing through Agent Router Service generates traces that export to your observability platform.
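The form fields map onto standard OTLP export settings. As a rough sketch, assuming a backend that follows OTLP defaults: OTLP over HTTP/Protobuf (`http/protobuf` in OpenTelemetry’s naming) posts traces to the `/v1/traces` path, conventionally on port 4318; gRPC targets the collector endpoint directly, conventionally on port 4317; and each authentication method becomes a request header. The `OtlpExportConfig` class is hypothetical, and the API-key header name and scheme vary by backend (Elastic, for example, accepts `Authorization: ApiKey <key>`), so treat those defaults as assumptions.

```python
import base64
from dataclasses import dataclass
from typing import Optional


@dataclass
class OtlpExportConfig:
    """Hypothetical model of the OpenTelemetry Trace Export form."""
    protocol: str                   # "http/protobuf" or "grpc"
    endpoint: str                   # backend base URL or host:port
    auth: str = "none"              # "none" | "bearer" | "basic" | "api_key"
    token: Optional[str] = None     # bearer token or API key
    username: Optional[str] = None
    password: Optional[str] = None
    api_key_header: str = "Authorization"  # backend-specific (assumption)
    api_key_scheme: str = "ApiKey"         # e.g. Elastic: "ApiKey <key>"

    def traces_url(self) -> str:
        """OTLP/HTTP posts to <base>/v1/traces; gRPC targets the endpoint itself."""
        if self.protocol == "http/protobuf":
            return self.endpoint.rstrip("/") + "/v1/traces"
        if self.protocol == "grpc":
            return self.endpoint
        raise ValueError(f"unsupported protocol: {self.protocol}")

    def headers(self) -> dict[str, str]:
        """Translate the chosen auth method into request headers."""
        if self.auth == "none":
            return {}  # local development only
        if self.auth == "bearer":
            return {"Authorization": f"Bearer {self.token}"}
        if self.auth == "basic":
            raw = f"{self.username}:{self.password}".encode("utf-8")
            return {"Authorization": "Basic " + base64.b64encode(raw).decode("ascii")}
        if self.auth == "api_key":
            value = f"{self.api_key_scheme} {self.token}" if self.api_key_scheme else f"{self.token}"
            return {self.api_key_header: value}
        raise ValueError(f"unsupported auth method: {self.auth}")


local = OtlpExportConfig("http/protobuf", "http://localhost:4318/")
print(local.traces_url())  # http://localhost:4318/v1/traces

basic = OtlpExportConfig("grpc", "otel.example.com:4317", auth="basic",
                         username="user", password="pass")
print(basic.headers())  # {'Authorization': 'Basic dXNlcjpwYXNz'}
```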

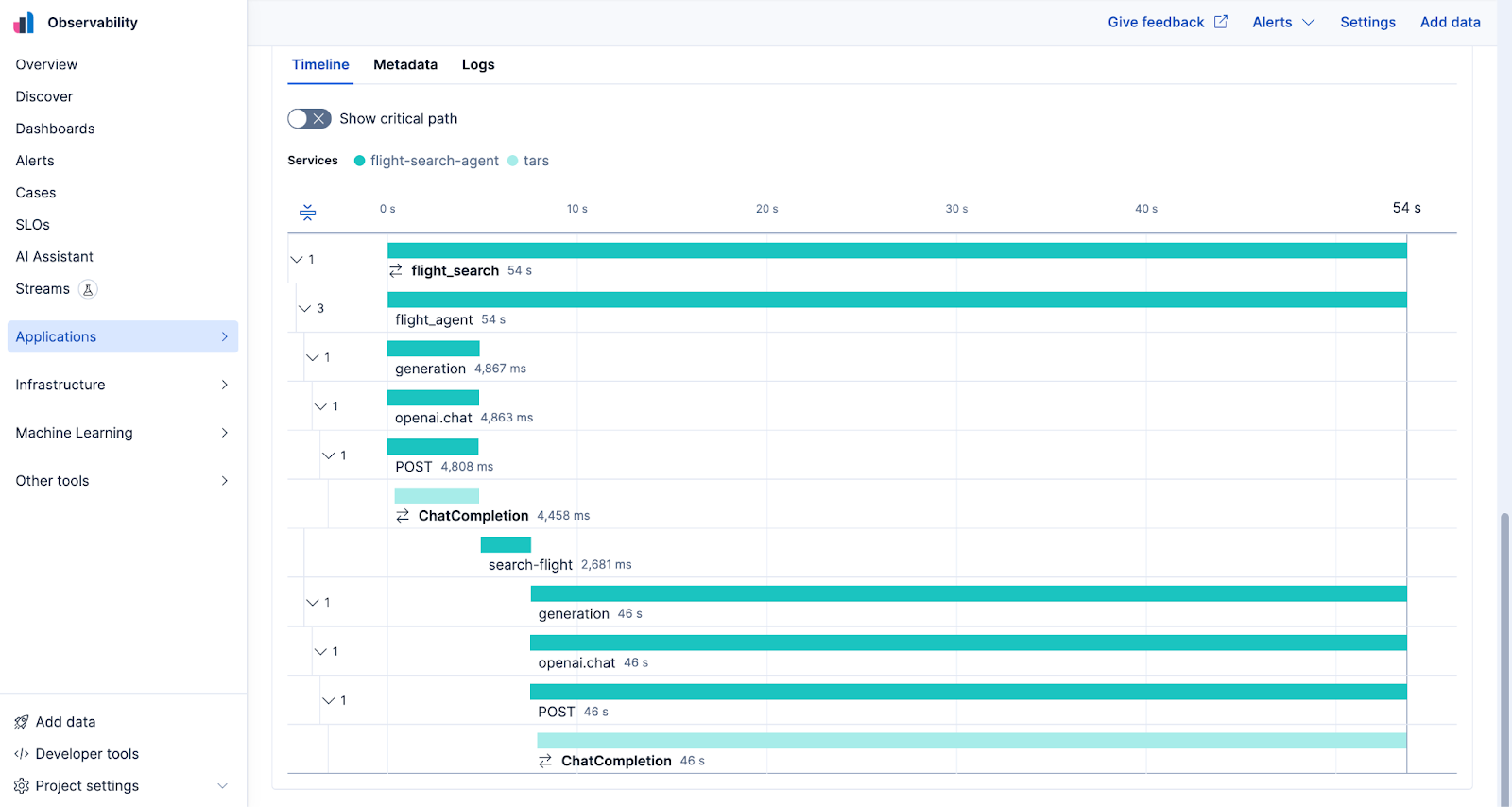

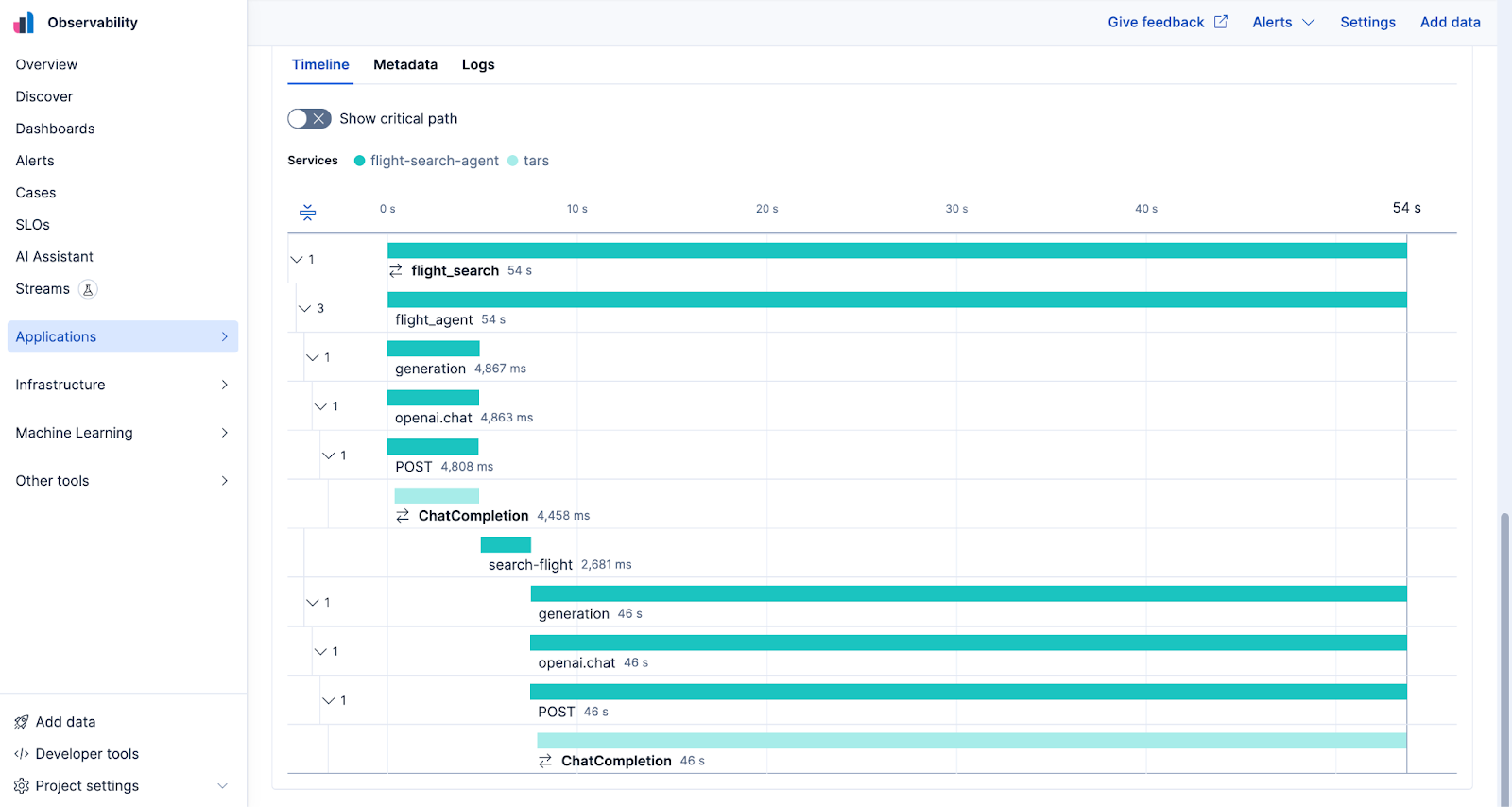

Here’s what this looks like in practice: you’re building an agent that uses Agent Router Service to connect to GitHub’s MCP server. To get full end-to-end visibility, instrument your agent with OpenTelemetry to capture the user request and your application logic. When requests flow through Agent Router Service, it automatically enriches your traces with detailed spans for LLM API calls, MCP server interactions, and individual tool executions.
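Joining your agent’s spans with the gateway’s relies on trace-context propagation: the outbound request carries a W3C Trace Context `traceparent` header holding the trace ID and the caller’s span ID. OpenTelemetry’s propagators (for example, through HTTP client instrumentation) inject this header for you; the stdlib-only sketch below just shows what it contains. That Agent Router Service continues traces from an incoming `traceparent` is an assumption based on standard OpenTelemetry propagation, and the helper names are hypothetical.

```python
import re
import secrets

# version-traceid-parentid-flags, lowercase hex (W3C Trace Context, version 00)
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def _random_id(num_bytes: int) -> str:
    """Random lowercase hex ID; the spec forbids the all-zero value."""
    while True:
        value = secrets.token_hex(num_bytes)
        if int(value, 16) != 0:
            return value


def new_traceparent(sampled: bool = True) -> str:
    """Start a new trace: fresh 16-byte trace ID and 8-byte span ID."""
    flags = "01" if sampled else "00"
    return f"00-{_random_id(16)}-{_random_id(8)}-{flags}"


def child_traceparent(parent: str) -> str:
    """Continue a trace: keep the trace ID and flags, mint a new span ID."""
    match = TRACEPARENT_RE.match(parent)
    if match is None:
        raise ValueError(f"invalid traceparent: {parent!r}")
    trace_id, _parent_span_id, flags = match.groups()
    return f"00-{trace_id}-{_random_id(8)}-{flags}"


# Root span for the user's request inside the agent.
root = new_traceparent()
# Client span for the agent's call to the gateway; this value goes on the wire.
headers = {"traceparent": child_traceparent(root)}
print(root)
print(headers["traceparent"])  # same trace ID, different span ID
```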

When the agent is tasked to “analyze recent bug reports and create a summary document,” the agent makes several MCP calls: searching issues, reading their contents, and creating a new documentation file. In your Jaeger, Elastic, or other OpenTelemetry backend, you see the complete trace: your application’s request handling, the LLM request with token counts, the GitHub issue search with response time, subsequent issue reads showing how many were fetched, and the documentation creation call including the generated content. Each span shows duration, success status, and relevant metadata. When something breaks (maybe the GitHub token expired or the LLM produced malformed JSON for the MCP call), the trace pinpoints exactly where and why.
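Once traces land in your backend, pinpointing a failure is a tree walk. The sketch below uses a simplified, hypothetical span record rather than the full OTLP schema, but the attribute keys (`openinference.span.kind`, `llm.token_count.prompt`, `llm.token_count.completion`, `tool.name`) are OpenInference semantic-convention names. It sums LLM token usage and returns the path from the root span to the deepest span marked `ERROR`, which usually sits closer to the root cause than its failing ancestors. The trace data and span names are made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class Span:
    """Simplified span record; real OTLP exports carry many more fields."""
    span_id: str
    parent_id: Optional[str]
    name: str
    duration_ms: float
    status: str = "OK"  # "OK" or "ERROR", mirroring OTel status codes
    attributes: dict[str, Any] = field(default_factory=dict)


def total_tokens(spans: list[Span]) -> int:
    """Sum prompt + completion tokens over LLM spans (OpenInference attribute names)."""
    total = 0
    for s in spans:
        if s.attributes.get("openinference.span.kind") == "LLM":
            total += s.attributes.get("llm.token_count.prompt", 0)
            total += s.attributes.get("llm.token_count.completion", 0)
    return total


def failure_path(spans: list[Span]) -> list[str]:
    """Span names from the root down to the deepest ERROR span, or [] if none failed."""
    by_id = {s.span_id: s for s in spans}
    errors = [s for s in spans if s.status == "ERROR"]
    if not errors:
        return []

    def depth(s: Span) -> int:
        d = 0
        while s.parent_id is not None:
            s = by_id[s.parent_id]
            d += 1
        return d

    node: Optional[Span] = max(errors, key=depth)
    path = []
    while node is not None:
        path.append(node.name)
        node = by_id.get(node.parent_id) if node.parent_id else None
    return list(reversed(path))


trace = [
    Span("a1", None, "agent.handle_request", 4200.0, "ERROR"),
    Span("b1", "a1", "llm.chat", 1800.0, attributes={
        "openinference.span.kind": "LLM",
        "llm.token_count.prompt": 1200, "llm.token_count.completion": 300}),
    Span("b2", "a1", "tools/call search_issues", 350.0, attributes={
        "openinference.span.kind": "TOOL", "tool.name": "search_issues"}),
    Span("b3", "a1", "tools/call create_or_update_file", 120.0, "ERROR", attributes={
        "openinference.span.kind": "TOOL", "tool.name": "create_or_update_file"}),
]
print(total_tokens(trace))  # 1500
print(failure_path(trace))  # ['agent.handle_request', 'tools/call create_or_update_file']
```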

What’s Next

Single-origin AI infrastructure makes taking AI experiments to production much easier. Building it on Envoy AI Gateway provides unmatched enterprise readiness and simplicity for AI engineers. We’re excited to bring MCP, LLM, and OpenInference together in an enterprise-ready, managed package as the latest iteration of Agent Router Service. These features are now generally available.

Sign up for Tetrate Agent Router Service to get started today. You also get a $5 credit when you sign up with your business email.

Contact us to learn how Tetrate can help with your journey. Follow us on LinkedIn for the latest updates and best practices.