Business Continuity: Multi-Tier Ingress that is Scalable and Flexible

Most applications accept traffic from the internet or from partner networks. That entry path is called ingress. The software component that receives, inspects, and routes that traffic is called a gateway. Many organizations begin with a single shared gateway because it is simple to operate. Over time that simplicity turns into risk as changes pile up, incidents spread across services, and policies drift between clusters.

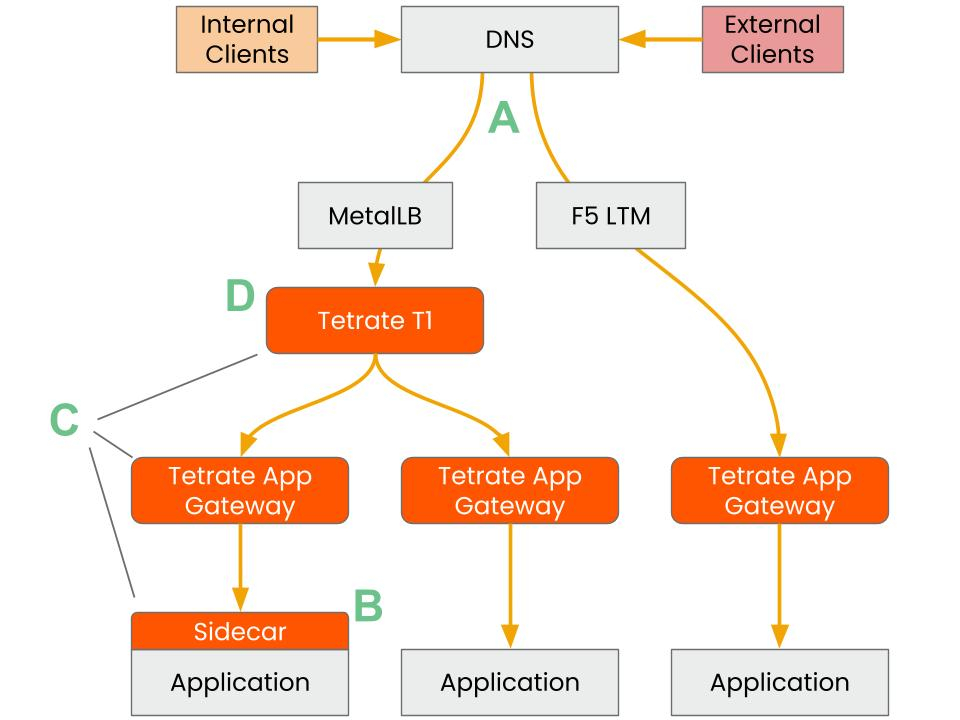

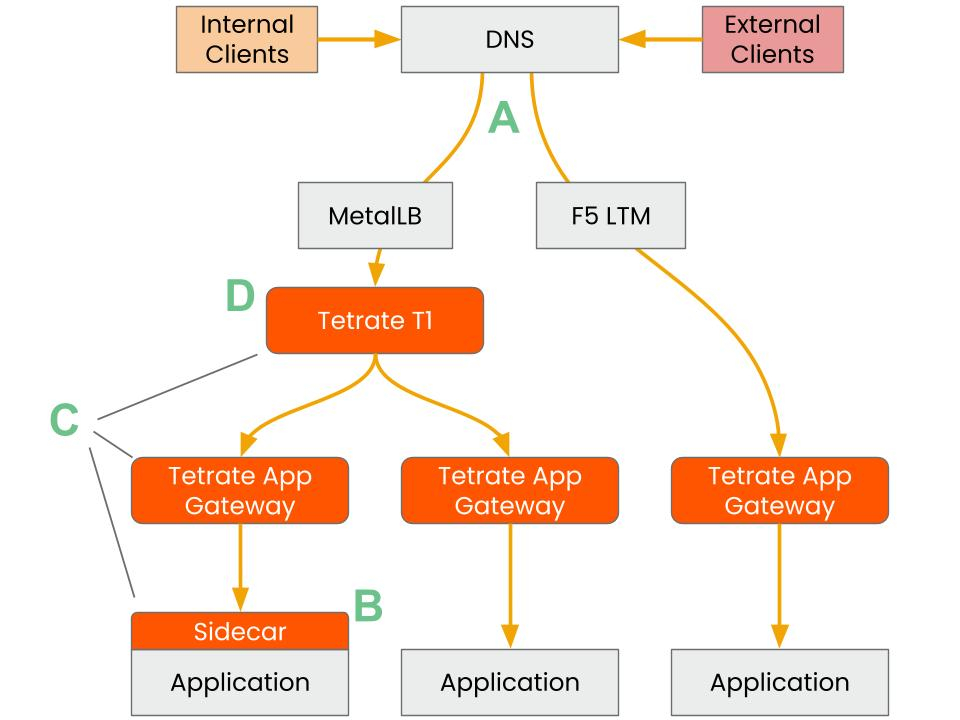

A multi-tier ingress model solves this by placing gateways at clear boundaries. The edge tier handles traffic at the front door. The regional tier steers traffic inside a geography. The cluster tier routes to services and applies fine-grained controls. Defining these tiers creates natural isolation and clear ownership. It also gives teams a predictable way to promote changes without breaking unrelated services. The idea is straightforward, but running the same pattern across regions and clusters is difficult without a common platform. Tetrate Service Bridge helps by turning multi-tier ingress into a standard model that teams can adopt consistently.

What good looks like

Strong multi-tier ingress moves work out of one-off tickets and into a platform model. Platform owners define the tiers and the guardrails that apply at each tier. Application teams publish their own routes inside those guardrails. Everyone uses one place to view gateways, routes, health, and policy so changes move faster and remain safe. The result is less coordination overhead, fewer surprises during rollouts, and a smaller blast radius during incidents.

How to implement this with open source

Two open source projects make multi-tier ingress practical. The Kubernetes Gateway API provides standard resources (GatewayClass, Gateway, and route types such as HTTPRoute) that separate platform concerns from application routing. Envoy Gateway runs Envoy as an implementation of that API and exposes Envoy's traffic policy features through Kubernetes resources. With those resources in place, you can create distinct gateway classes for edge and cluster scopes, deploy an edge gateway per region, and run shared or namespace-scoped gateways in each cluster. Platform teams own the gateway classes and the gateway instances. Application teams own the routes in their namespaces so they can make changes without cluster-admin rights. Place identity, certificates, allow lists, and coarse rate limits at the edge or regional tier, and leave fine-grained, per-service controls to the cluster tier.
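To make the ownership split concrete, here is a minimal sketch in Python that builds the Gateway API objects described above and prints them as JSON. The resource kinds and field names follow the Gateway API `v1` schema. The controller name is the one Envoy Gateway documents for its GatewayClass, so check it against your installation. Every object name, namespace, hostname, and service below is a hypothetical placeholder, and the listener is plain HTTP to keep the example short.

```python
import json

GATEWAY_API_VERSION = "gateway.networking.k8s.io/v1"
# Controller name documented by Envoy Gateway; verify for your install.
ENVOY_GATEWAY_CONTROLLER = "gateway.envoyproxy.io/gatewayclass-controller"


def gateway_class(name):
    """Platform-owned: one GatewayClass per tier scope."""
    return {
        "apiVersion": GATEWAY_API_VERSION,
        "kind": "GatewayClass",
        "metadata": {"name": name},
        "spec": {"controllerName": ENVOY_GATEWAY_CONTROLLER},
    }


def gateway(name, namespace, class_name, routes_from):
    """Platform-owned: a Gateway with a single HTTP listener.

    routes_from is "Same" (only routes in the Gateway's namespace may
    attach) or "All" (routes from any namespace may attach).
    """
    return {
        "apiVersion": GATEWAY_API_VERSION,
        "kind": "Gateway",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "gatewayClassName": class_name,
            "listeners": [{
                "name": "http",
                "protocol": "HTTP",
                "port": 80,
                "allowedRoutes": {"namespaces": {"from": routes_from}},
            }],
        },
    }


def http_route(name, namespace, gw_name, gw_namespace, hostname, service, port):
    """Application-owned: a route that attaches to a platform Gateway."""
    return {
        "apiVersion": GATEWAY_API_VERSION,
        "kind": "HTTPRoute",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "parentRefs": [{"name": gw_name, "namespace": gw_namespace}],
            "hostnames": [hostname],
            "rules": [{
                "matches": [{"path": {"type": "PathPrefix", "value": "/"}}],
                "backendRefs": [{"name": service, "port": port}],
            }],
        },
    }


def build_manifests():
    """Bundle the objects into a v1 List. All names are hypothetical."""
    items = [
        gateway_class("edge-class"),
        gateway_class("cluster-class"),
        # Edge tier: only platform-managed routes in the same namespace.
        gateway("edge-gw", "ingress-system", "edge-class", "Same"),
        # Cluster tier: application namespaces may attach their routes.
        gateway("cluster-gw", "ingress-system", "cluster-class", "All"),
        http_route("shop", "shop", "cluster-gw", "ingress-system",
                   "shop.example.com", "shop-svc", 8080),
    ]
    return {"apiVersion": "v1", "kind": "List", "items": items}


if __name__ == "__main__":
    print(json.dumps(build_manifests(), indent=2))
```

Because Kubernetes accepts JSON as well as YAML, the printed output could be reviewed and then applied with `kubectl apply -f -`. In practice the platform team would own the first four objects, and the application team would own only the route in its namespace.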

At this point it helps to step back from mechanics. The hard part in open source is keeping many gateways and tiers aligned as you add regions and clusters. Who owns each tier, and how do you prevent policy drift between clusters? How will you promote changes across tiers without surprises? Can you test failover regularly and see the same behavior in every region and cluster? Can you trace a request end to end and show which rule acted where? If any answer is unclear, operational cost grows and the benefits of tiering begin to erode.
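The policy drift question can be made mechanical. The sketch below is one possible approach, not a feature of any tool named here: it fingerprints the policy applied to the same tier in each cluster and reports the clusters whose policy differs from a chosen baseline. It assumes you have already exported each cluster's policy as a plain dictionary. The cluster names and policy fields are hypothetical.

```python
import hashlib
import json


def fingerprint(policy):
    """Hash a policy dict in a key-order-independent way."""
    canonical = json.dumps(policy, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def find_drift(policies_by_cluster, baseline):
    """Return the sorted names of clusters whose policy differs from the baseline."""
    expected = fingerprint(policies_by_cluster[baseline])
    return sorted(
        cluster
        for cluster, policy in policies_by_cluster.items()
        if fingerprint(policy) != expected
    )


if __name__ == "__main__":
    # Hypothetical edge-tier policy exported from three clusters.
    policies = {
        "us-east": {"tls_min_version": "1.2", "rate_limit_rps": 500},
        "us-west": {"rate_limit_rps": 500, "tls_min_version": "1.2"},
        "eu-west": {"tls_min_version": "1.2", "rate_limit_rps": 800},
    }
    print(find_drift(policies, baseline="us-east"))
```

Sorting the keys before hashing means two clusters with the same settings in a different order are not reported as drifted. A check like this only detects drift. Deciding who fixes it is still the ownership question raised above.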

To keep the advantages without carrying that complexity, use a platform that standardizes tiers, policy, and workflow. Tetrate Service Bridge does this by modeling the tiers once, enforcing guardrails, and giving application teams a safe, bounded surface for their routes. The next section explains how.

How to implement this with Tetrate Service Bridge (TSB)

Tetrate Service Bridge is a platform that manages Envoy-based service connectivity across clusters and regions. TSB treats multi-tier ingress as a first-class pattern. You model the edge, regional, and cluster tiers once, assign clear owners, and attach policy to the correct scope so encryption, identity, authorization, and observability remain consistent. Workspaces and role-based access let application teams manage their own routes inside a bounded surface set by the platform. Promotion and rollback are automated with approvals, audit trails, and versioned configurations. Traffic, health, and policy decisions are visible by tier and by team across all clusters. This keeps teams aligned and shortens the time to diagnose and fix issues.

The tradeoff

The tradeoff is added latency, the extra time a request spends passing through the tiers. A tiered design introduces a small processing step and a network hop at each tier. When tiers sit in the same region and the edge tier only identifies and forwards traffic, this added time is usually modest compared to the time your service spends doing real work. You can keep latency low by keeping the edge thin, forwarding to the nearest region, enforcing detailed policy at the cluster tier near the service, reusing connections so setup costs are not paid on every request, and tracking a simple latency budget per tier to spot regressions early.
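A per-tier latency budget can be as simple as a table of limits and a periodic check against measured values. The following sketch assumes you can collect per-tier latency samples in milliseconds from your own telemetry. The budget numbers are illustrative placeholders, not recommendations, and the choice of the 95th percentile is also an assumption.

```python
from statistics import quantiles

# Hypothetical per-tier budgets in milliseconds; tune for your system.
BUDGET_MS = {"edge": 2.0, "regional": 3.0, "cluster": 5.0}


def p95(samples):
    """95th percentile of the samples (needs at least two data points)."""
    # n=20 yields 19 cut points; the last one is the 95th percentile.
    return quantiles(samples, n=20)[-1]


def over_budget(samples_by_tier, budget_ms=BUDGET_MS):
    """Map each tier whose p95 exceeds its budget to the observed p95."""
    report = {}
    for tier, samples in samples_by_tier.items():
        observed = p95(samples)
        if observed > budget_ms[tier]:
            report[tier] = observed
    return report


if __name__ == "__main__":
    # Hypothetical measurements: the regional tier has regressed.
    measured = {
        "edge": [1.0] * 20,
        "regional": [10.0] * 20,
        "cluster": [4.0] * 20,
    }
    for tier, observed in over_budget(measured).items():
        print(f"{tier}: p95 {observed:.1f} ms exceeds {BUDGET_MS[tier]:.1f} ms")
```

Tracking the budget per tier rather than end to end makes it easier to see which tier a regression came from.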

The payoff

With the latency question addressed, the benefits come into focus. A layered gateway model reduces tickets and drift because teams work inside clear boundaries. Application teams ship faster because they control routes close to their services. Platform and security owners keep policy consistent across clusters. Operators work from a clear map of gateways, routes, and health, which shortens diagnosis and makes rollbacks safe. The business sees fewer ingress-related incidents and a steadier user experience as the platform grows.

Learn more about Tetrate Service Bridge to see how it can help you implement multi-tier ingress in your environment.

Contact us to learn how Tetrate can help on your journey. Follow us on LinkedIn for the latest updates and best practices.