Business Continuity: Self-Serve Ingress that is Secure and Consistent

How platform teams can deliver self-serve, policy-driven ingress that is secure and consistent across clusters and environments—improving resilience and speeding delivery.

If exposing an endpoint means opening a ticket for a gateway update, your ingress has become a bottleneck. Many organizations still run ingress as a centrally managed function built on bespoke manifests. Changes move slowly, rules drift between clusters, and each exception adds risk.

Cloud migration and a move to Kubernetes clusters make the problem worse. Instead of a single load balancer, multiple teams share gateways, routes, certificates, and security controls, which makes granting access more difficult. Specialized teams are brought in to sort out the mess, leading to increased headcount, bloated processes, and longer wait times for app developers as tickets pile up in the queue.

The solution isn’t to continue increasing headcount to alleviate the bottleneck; the solution is to eliminate the bottleneck altogether. Start with the split in responsibilities. Application teams know their services and want to manage routing for them: which hostnames and paths are exposed, how a canary shifts traffic, and when to roll back. Platform teams want clear ways to protect and secure the edge: they run the gateways, own TLS and authentication, set rate limits, and publish guardrails that keep routes consistent across clusters.

Thankfully, Kubernetes already gives us the tools to make this a reality. The Kubernetes Gateway API separates responsibilities cleanly. With the right setup, platform teams can set uniform guardrails and policies, and application teams can self-serve their own ingress without filing tickets and without worrying about whether they meet platform requirements.
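As a minimal sketch of that separation, a platform team might own a Gateway like the one below. It terminates TLS at the edge and uses `allowedRoutes` to decide which namespaces may attach routes. Every name here is an illustrative assumption rather than a recommended value: the `shared-gateway` name, the `platform-ingress` namespace, the `eg` GatewayClass, the `wildcard-example-com` Secret, and the `ingress-access: shared` label.

```yaml
# Platform-owned Gateway (hypothetical names throughout).
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: shared-gateway
  namespace: platform-ingress
spec:
  # Assumes a GatewayClass named "eg" already exists in the cluster.
  gatewayClassName: eg
  listeners:
    - name: https
      protocol: HTTPS
      port: 443
      hostname: "*.example.com"
      tls:
        # TLS is terminated at the gateway; the certificate stays with the platform team.
        mode: Terminate
        certificateRefs:
          - kind: Secret
            name: wildcard-example-com
      allowedRoutes:
        namespaces:
          # Only namespaces carrying this label may attach routes to the listener.
          from: Selector
          selector:
            matchLabels:
              ingress-access: shared
```

In this sketch the listener, certificate, and admission rule live in a namespace that application teams cannot edit, while opting a team in is a matter of labeling its namespace.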

With an Envoy-based implementation, you get modern Layer 7 features, strong authentication, and traffic controls at the gateway rather than scattered across repositories. That separation makes self-serve ingress possible without giving up control: developers self-serve inside a bounded surface while the platform holds the line on security.

What good looks like: move ingress changes out of tickets and into a platform model where teams self-serve inside guardrails. Platform owners publish standard gateway templates and a bounded configuration surface. Developers add and update routes without cluster admin privileges. Security controls, TLS, and rate limits are consistent across clusters. Everyone works from a single view of gateways, routes, and health so changes are predictable.

How to implement this with open source

You can build this model with the Kubernetes Gateway API and Envoy Gateway. Run a shared gateway per cluster, or dedicate one per namespace when teams need stronger isolation. Use GatewayClasses and templates to standardize listeners and TLS. Let application teams create HTTPRoutes in their namespaces without cluster admin rights. Add external authorization and rate limiting at the gateway. Manage configuration through Git and a workflow such as Argo CD. Run drills to confirm that changes apply predictably across clusters and that rollbacks are safe.
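To make the application-team side concrete, the sketch below shows an HTTPRoute that a team could own in its own namespace, along with a namespaced Role that grants route management without cluster admin rights. It assumes a platform-owned Gateway named `shared-gateway` in a `platform-ingress` namespace with a listener named `https` that admits this namespace. Those names, the `team-checkout` namespace, the `checkout-v1` and `checkout-v2` Services, and the 90/10 split are all hypothetical.

```yaml
# Application-owned route with a weighted canary (hypothetical names throughout).
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: checkout
  namespace: team-checkout
spec:
  parentRefs:
    # Attaches to the platform's Gateway; accepted only if its allowedRoutes permit this namespace.
    - name: shared-gateway
      namespace: platform-ingress
      sectionName: https
  hostnames:
    - checkout.example.com
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        # Shift the weights to move the canary forward, or set v2 to 0 to roll back.
        - name: checkout-v1
          port: 8080
          weight: 90
        - name: checkout-v2
          port: 8080
          weight: 10
---
# Namespaced Role: lets the team manage HTTPRoutes here and nothing cluster-wide.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: httproute-editor
  namespace: team-checkout
rules:
  - apiGroups: ["gateway.networking.k8s.io"]
    resources: ["httproutes"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
```

A RoleBinding would still be needed to tie the Role to the team's users or service accounts. Keeping both manifests in Git lets a workflow such as Argo CD apply the same change, and the same rollback, in every cluster.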

How to implement this with TSB

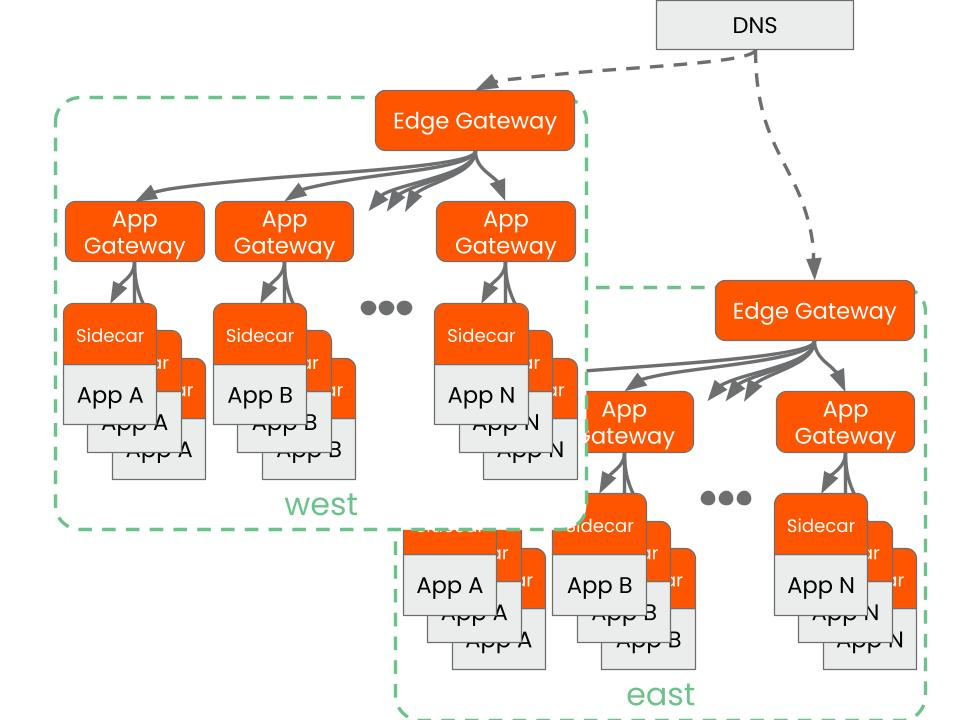

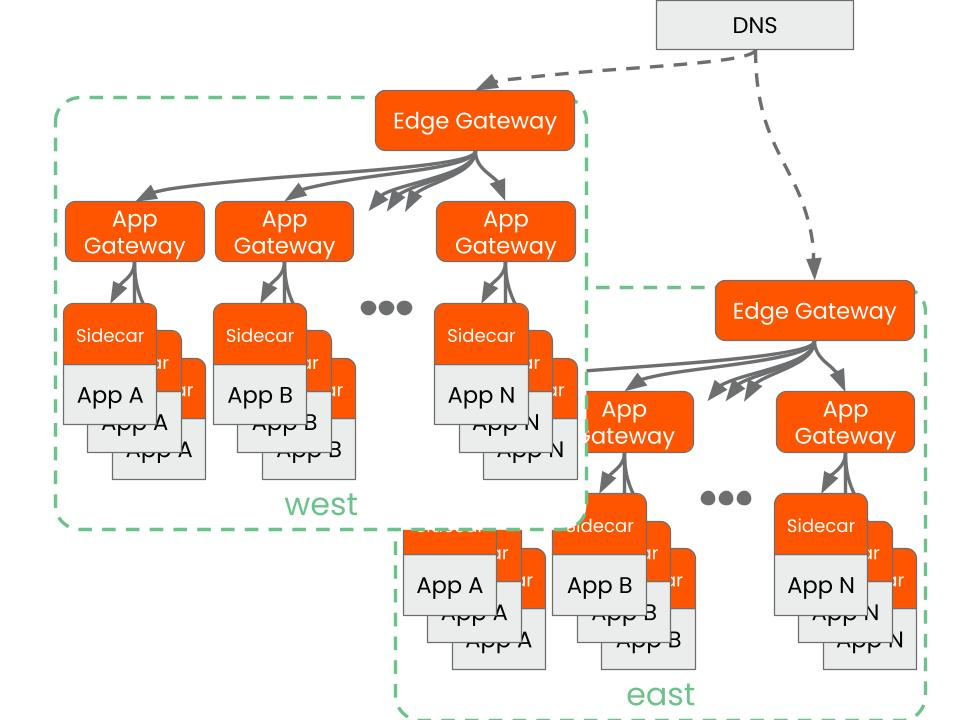

You can reach the same outcome through Tetrate Service Bridge with simple configuration. Tetrate provides runtime discovery and cert rotation to route and secure traffic between a single edge gateway and as many app gateways as you need. Tetrate's role-based access and workspaces let platform owners set boundaries that enable application teams to manage their own ingress without impacting other teams. Standard gateway templates apply at the edge and for east-west traffic, enforcing the platform's security controls, while application ingress lets developers define intent that is safely isolated in their namespaces. Unified views help you validate drills and rollbacks across clusters. If you want to see this mapped to your regions and SLOs, request a demo.

The Payoff: Reducing tickets and drift produces real and measurable gains. Application teams ship changes faster. Platform and security owners keep controls consistent across clusters. Operations gets a clear map of gateways, routes, and health, which shortens diagnosis and makes rollbacks safe. The business sees fewer ingress-related incidents and a steadier user experience.

One of the world’s top financial technology companies partnered with Tetrate to create a secure way for teams to self-serve, eliminating bottlenecks between network and app teams. This resulted in 20x faster deployments, 75% shorter recovery time, and over 80% more production traffic, all achieved without adding headcount.

Learn more about Tetrate Service Bridge to see how it can help you implement fast, predictable failover in your environment.

Contact us to learn how Tetrate can help your journey. Follow us on LinkedIn for the latest updates and best practices.