Envoy AI Gateway from Concept to Reality: Tetrate and Bloomberg's Journey to Standardizing LLM Routing

The explosion of GenAI has introduced a new kind of infrastructure challenge: managing LLM traffic across multiple providers, environments, and teams.

To meet this challenge, Bloomberg and Tetrate partnered, not behind closed doors, but openly, on a shared goal: to build a scalable, secure, and flexible way to route GenAI traffic.

The result is Envoy AI Gateway, a solution within the Envoy project that extends Envoy Proxy and Envoy Gateway.

Check out the Reference Architecture Blog Post on the Envoy AI Gateway Blog.

Continue reading to learn more about the journey of taking Envoy AI Gateway from concept to reality.

Solving a Shared Problem, Together

Bloomberg engineers proposed expanding the functionality of Envoy Gateway to tackle the challenges of LLM traffic. Because they needed to expose LLMs hosted both internally and externally to their applications, engaging with the open source community was a natural choice.

Tetrate, which had been contributing heavily to Envoy Gateway as part of its maintainer team, welcomed the idea.

Together, we co-developed a solution in the open that could:

- Unify traffic to external and internal model backends

- Handle per-provider auth securely at the edge

- Enforce policies and protect budgets via token-aware rate limiting

- Provide visibility into GenAI usage across teams and providers
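Token-aware rate limiting differs from classic request-per-second limits: each team's budget is charged by the LLM tokens its requests consume, which OpenAI-compatible APIs report in a `usage` object on the response. The Python sketch below illustrates only the idea. It is not Envoy AI Gateway's implementation, and the `TokenBudget` class, team names, and window size are hypothetical.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class TokenBudget:
    """Hypothetical per-team LLM token budget over a fixed time window.

    Illustrative only: not Envoy AI Gateway's implementation, just the
    idea of charging limits in tokens instead of in requests.
    """
    limit_tokens: int      # tokens each team may consume per window
    window_seconds: float  # length of the fixed window
    _used: Dict[str, int] = field(default_factory=dict, init=False)
    _window_start: Dict[str, float] = field(default_factory=dict, init=False)

    def _roll_window(self, team: str, now: float) -> None:
        # Start a fresh window (and reset the counter) once the old one expires.
        start = self._window_start.get(team)
        if start is None or now - start >= self.window_seconds:
            self._window_start[team] = now
            self._used[team] = 0

    def allow(self, team: str, now: Optional[float] = None) -> bool:
        # Admit a request only while the team still has budget left.
        now = time.monotonic() if now is None else now
        self._roll_window(team, now)
        return self._used[team] < self.limit_tokens

    def charge(self, team: str, usage: dict, now: Optional[float] = None) -> None:
        # Charge the tokens the model reported after responding,
        # using OpenAI-style prompt/completion token counts.
        now = time.monotonic() if now is None else now
        self._roll_window(team, now)
        spent = usage.get("prompt_tokens", 0) + usage.get("completion_tokens", 0)
        self._used[team] += spent


budget = TokenBudget(limit_tokens=1000, window_seconds=60)
print(budget.allow("team-a", now=0))   # True
budget.charge("team-a", {"prompt_tokens": 400, "completion_tokens": 700}, now=1)
print(budget.allow("team-a", now=2))   # False: 1100 tokens already used
print(budget.allow("team-a", now=61))  # True: a new window has started
```

Because usage is known only after the model responds, a single large request can overshoot the limit, as it does here. The budget then blocks further requests until the window resets. A real deployment would likely keep these counters in a shared store rather than in process memory.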

This work was shared from the start with the Envoy community to benefit a broad set of adopters.

Built on a Strong Foundation: Envoy + Envoy Gateway

Instead of reinventing the wheel, we extended the tools many teams already use.

- Envoy Proxy: A CNCF graduated project trusted by companies to handle high-scale traffic

- Envoy Gateway: A simplification layer that brings Kubernetes-native API Gateway features to Envoy, with a modern control plane and Gateway API support

- Envoy AI Gateway: An extension of Envoy Gateway, purpose-built to handle the unique traffic and governance needs of GenAI platforms

This composable foundation gave us:

- Proven scalability

- Extensibility through filters

- Native support for Kubernetes Gateway API

- A familiar operational model for platform engineers

From Community Talks to Architecture Patterns

Since launching the project, we’ve contributed code, given talks, and worked with early adopters to test and refine the approach.

- 🎤 A keynote at KubeCon introduced the mission and early design

- 🛠️ Releases added upstream auth, token-based rate limiting, OpenTelemetry support, and so much more!

- 📐 Now, we’ve published a Reference Architecture to show you how it all fits together

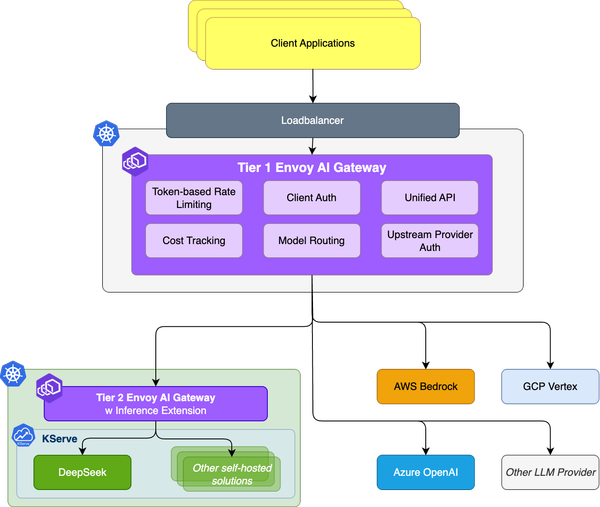

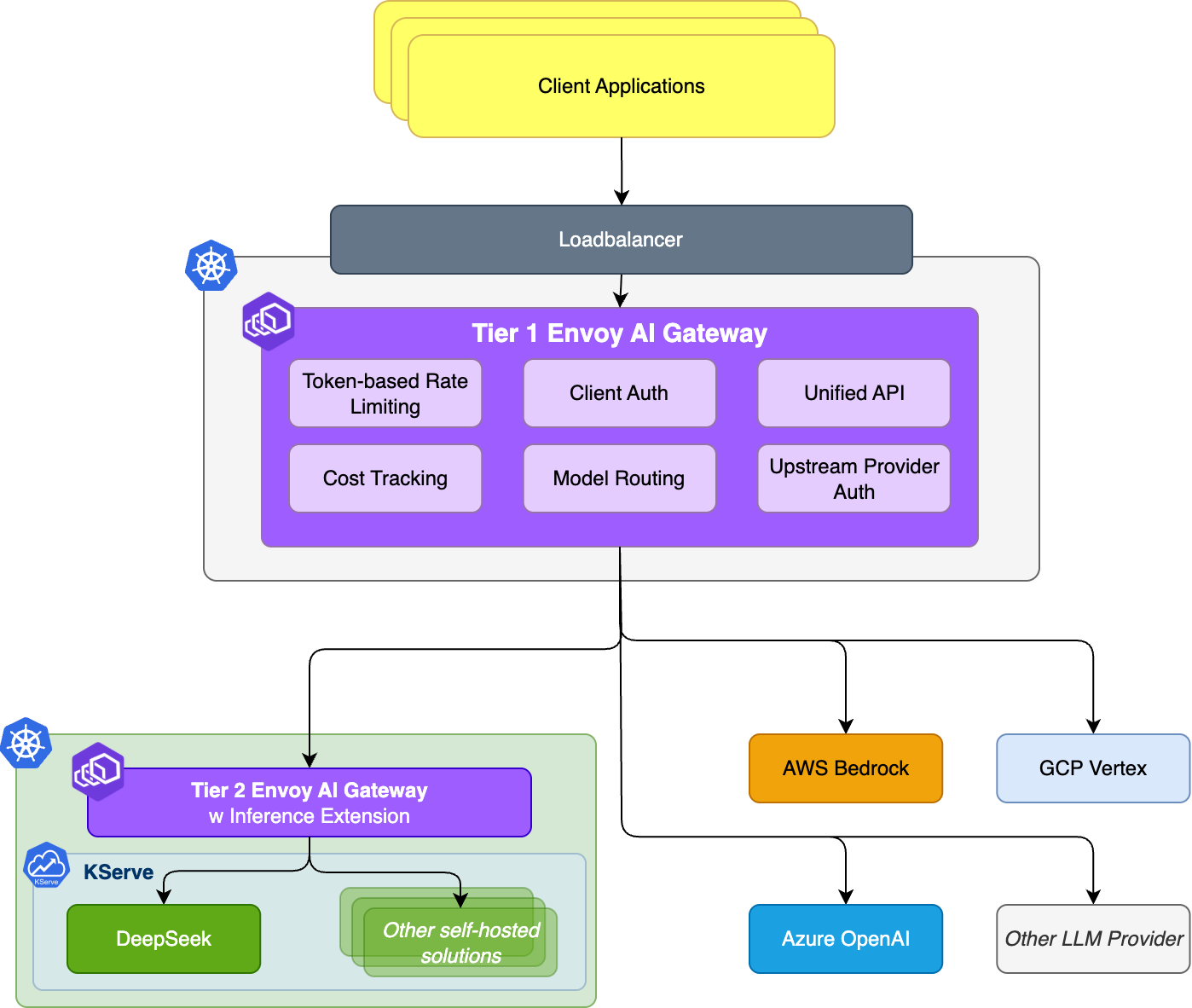

The architecture introduces a Two-Tier Gateway Model:

- Tier 1 Gateway: Unified frontend for external traffic, handling authentication, routing, and cost protection

- Tier 2 Gateway: Internal cluster-level gateway alongside tools like KServe, focused on self-hosted model traffic and fine-grained controls

This pattern provides platform teams with autonomy without compromising control or visibility.
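As a rough illustration of the two-tier split, the Python sketch below shows a Tier 1 routing decision. Requests for an external provider's model go straight to that provider, while requests for a self-hosted model are forwarded to the Tier 2 gateway in the cluster that serves it. Keying the decision on the request's `model` field, along with all model names and hostnames, are assumptions for illustration, not the Reference Architecture's actual configuration.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Route:
    tier: int      # 1: Tier 1 calls the provider directly; 2: forward to a Tier 2 gateway
    upstream: str  # hypothetical upstream address


# Hypothetical Tier 1 routing table keyed by the "model" field of an
# OpenAI-compatible request body. Model names and hosts are placeholders.
TIER1_ROUTES: Dict[str, Route] = {
    "gpt-4o-mini": Route(tier=1, upstream="https://api.openai.com"),
    "llama-3-8b": Route(tier=2, upstream="http://tier2-gateway.cluster-a.svc"),
}


def route_request(body: dict) -> Route:
    """Choose where a Tier 1 gateway sends a request, based on the requested model."""
    model = body.get("model")
    route = TIER1_ROUTES.get(model)
    if route is None:
        # Unknown models are rejected at the edge rather than guessed.
        raise LookupError(f"no route for model {model!r}")
    return route


print(route_request({"model": "gpt-4o-mini", "messages": []}))
# Route(tier=1, upstream='https://api.openai.com')
print(route_request({"model": "llama-3-8b", "messages": []}))
# Route(tier=2, upstream='http://tier2-gateway.cluster-a.svc')
```

Keeping Tier 1 this thin is the point of the pattern. The edge only decides where traffic goes, while each Tier 2 gateway applies fine-grained controls for the self-hosted models behind it.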

Real Impact: An Enterprise-Ready OSS Foundation

Collaborating with an end user like Bloomberg on this implementation ensures that the architecture addresses foundational enterprise needs.

A few examples include:

- Support both OpenAI and internal models behind a single API

- Manage provider credentials securely

- Add governance and insight without adding friction for developers

- Enable model usage confidently across teams

- Integrate with enterprise OIDC providers

The journey demonstrates what’s possible when companies collaborate openly, bringing practical and scalable solutions to the entire ecosystem.

Learn more about using Envoy AI Gateway from these presentations, conversations, and podcasts by the team behind it:

- The Explorer’s Guide To Cloud Native GenAI Platform Engineering - Max Körbächer & Alexa Griffith

- Access AI Models Anywhere: Scaling AI Traffic With Envoy AI Gateway - Dan Sun & Takeshi Yoneda

- Keynote: Platform Alchemy: Transforming Kubernetes Into Generative AI Gold - Alexa Griffith & Mauricio “Salaboy” Salatino

- Kubernetes, AI Gateways, and the Future of MLOps // Alexa Griffith // MLOps Podcast #294

- GenAI Traffic: Why API Infrastructure Must Evolve… Again // Erica Hughberg // MLOps Podcast #296

- Cloud Native Live: Enabling AI adoption at scale

Why It Matters for You

Most platform teams building for GenAI face these problems:

- A long list of LLM providers, each with its own quirks

- Credential sprawl and secret rotation overhead

- Inconsistent usage tracking or cost overruns

- Custom integration glue that doesn’t scale

Envoy AI Gateway addresses these challenges:

- 🛡️ Security: Inject upstream credentials at the edge

- 📊 Visibility: Centralize logs and metrics across providers

- 🪪 Governance: Set policies per team, per route, and per user

- 🧩 Flexibility: Deploy in any cloud, use any provider, and plug in your own auth
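Injecting upstream credentials at the edge means client applications never hold provider API keys: the gateway removes the caller's own credential and attaches the key for the chosen provider. The minimal Python sketch below assumes a simple bearer-token scheme. The `PROVIDER_KEYS` table, the key values, and the `inject_upstream_auth` helper are hypothetical, and validation of the caller's token is omitted.

```python
from typing import Dict, Mapping

# Hypothetical provider credentials held only by the gateway. In practice
# these would come from a secret store, never from client code.
PROVIDER_KEYS: Dict[str, str] = {
    "openai": "sk-example-openai",
    "internal": "internal-service-token",
}


def inject_upstream_auth(headers: Mapping[str, str], provider: str) -> Dict[str, str]:
    """Return the headers to send upstream: drop the caller's credential
    and attach the gateway-held key for the selected provider."""
    # HTTP header names are case-insensitive, so compare in lowercase.
    upstream = {k: v for k, v in headers.items() if k.lower() != "authorization"}
    upstream["Authorization"] = f"Bearer {PROVIDER_KEYS[provider]}"
    return upstream


client_headers = {"authorization": "Bearer team-a-jwt", "content-type": "application/json"}
print(inject_upstream_auth(client_headers, "openai"))
# {'content-type': 'application/json', 'Authorization': 'Bearer sk-example-openai'}
```

Real providers differ in their auth schemes (for example, AWS Bedrock requires SigV4 request signing rather than a static bearer key). This is one reason per-provider credential handling belongs in a central gateway rather than in each application.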

Start Building with the Reference Architecture

If you’re ready to explore how this can work in your environment, the Reference Architecture provides a complete walkthrough.

It includes guidance on:

- External vs. internal model routing

- Integration with KServe for model serving

- Token-aware policy enforcement

- Production-ready observability and telemetry

Whether you’re starting with an external provider or running your own hosted instances, this architecture grows with you.

Check out the Reference Architecture Blog Post on the Envoy AI Gateway Blog.

Built for the Community, with the Community

At Tetrate, we believe the best infrastructure is built in the open, together.

Envoy AI Gateway exists because of the collaboration between users like Bloomberg, maintainers such as the Envoy Gateway team, and contributors across the cloud-native community.

If you want to simplify your GenAI stack, reduce risk, and accelerate delivery, we invite you to join us.

Let’s build the next generation of AI platforms, together.