Boost Kubernetes Ingress Performance with Tetrate Enterprise Gateway for Envoy and Intel® QAT

The Power of Envoy Gateway with Intel Hardware Acceleration for Kubernetes Ingress

Cryptographic operations are among the most compute-intensive and critical operations in secured connections. In certain cases, such as high-volume ingress gateways that handle large numbers of TLS connections, significant performance and throughput gains can be achieved by offloading some cryptographic operations to specialized hardware.

A prime use case for cryptographic hardware acceleration is TLS termination for the high traffic volumes often found at Kubernetes ingress, where speeding up TLS handshakes can significantly improve performance. In this case study, we’ll use Tetrate Enterprise Gateway for Envoy, the enterprise-ready distribution of Envoy Gateway, the most advanced implementation of the Kubernetes Gateway API.

Gateway API, in contrast to the older Ingress API, offers a more expressive and performant way to manage ingress traffic and its configurations. As a Kubernetes gateway controller, Envoy Gateway brings the power and flexibility of Envoy Proxy to Kubernetes Ingress, making it possible to use Envoy’s proven capabilities, like its private key provider for hardware cryptographic acceleration.

Envoy uses BoringSSL as its default TLS library. BoringSSL supports setting private key methods for offloading private key operations. To facilitate such hardware acceleration, Envoy offers a private key provider framework to access those BoringSSL hooks. To date, there are two private key providers implemented as envoy-contrib extensions:

- CryptoMB private key provider

- QAT private key provider

Both accelerate the TLS handshake by using hardware capabilities.

This article covers TLS termination for HTTPS traffic entering Tetrate Enterprise Gateway for Envoy, using the Envoy private key provider to accelerate the TLS handshake with Intel® QuickAssist Technology (Intel® QAT), which is available on Intel® Xeon® SPR/EMR server platforms (Sapphire Rapids, the 4th Gen Intel® Xeon® Scalable processor family, and Emerald Rapids).

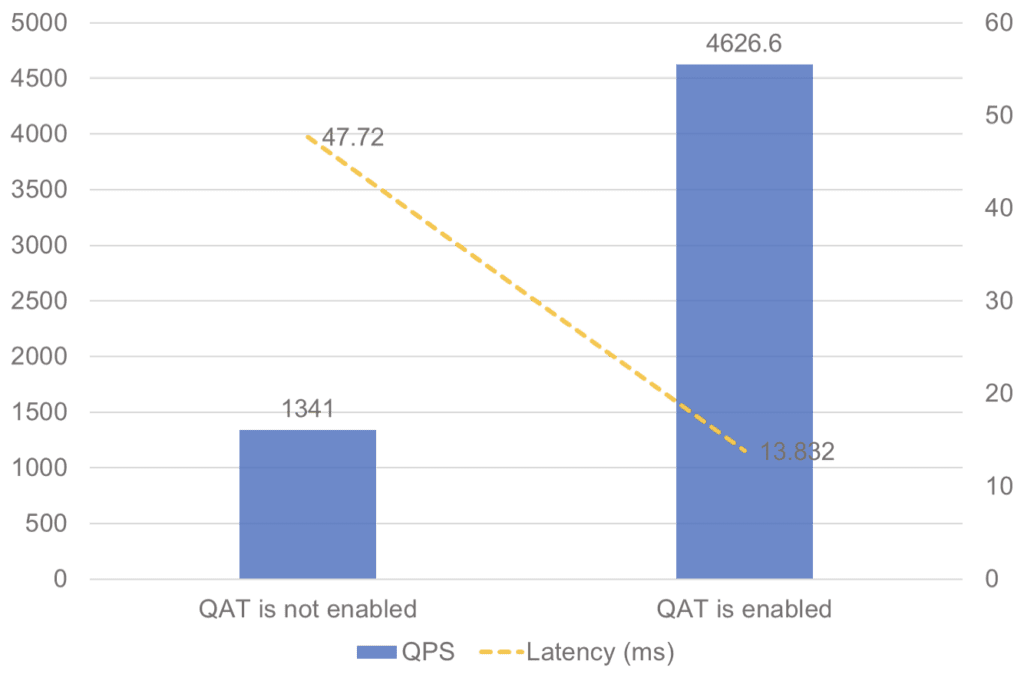

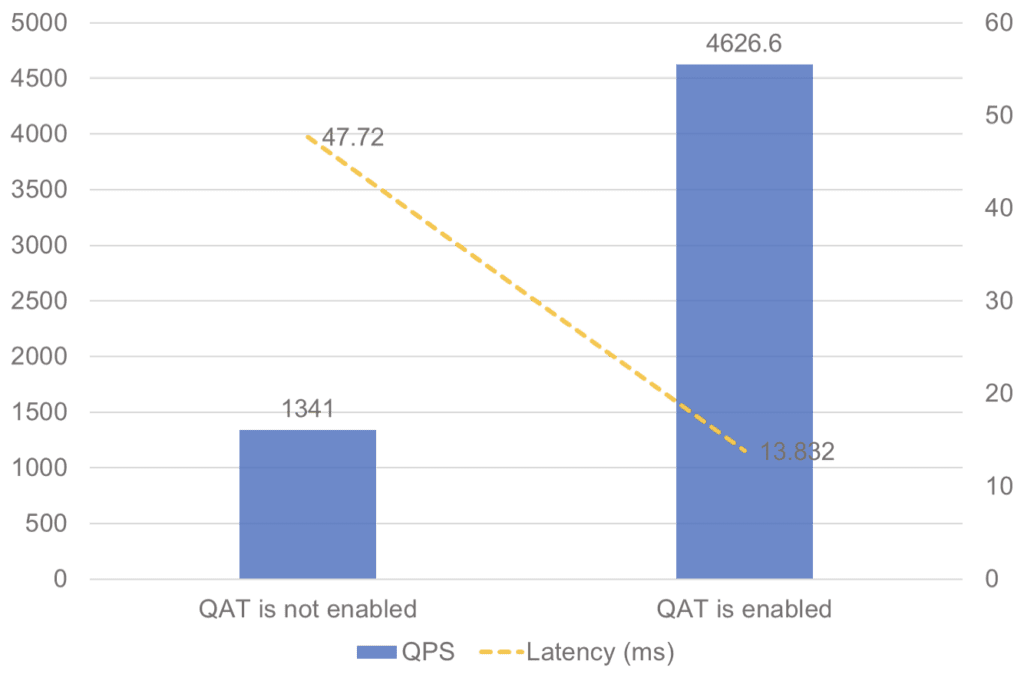

3X Performance Boost with Intel QAT and Envoy Gateway

In our benchmark testing, we observed more than three times the queries per second (QPS) with the QAT private key provider enabled than without it (Figure 1).

Test Setup and Procedure

For this demo, we’ll be using Intel® QuickAssist Technology (QAT), available on Intel® SPR/EMR Xeon® servers. QAT, an integrated workload accelerator on Intel® Xeon® Scalable processors, offloads data compression and decompression, encryption and decryption, and public key cryptography from the CPU cores, accelerating those operations to improve performance and free up compute resources.

QAT is available on AWS with EC2 R7iz metal instances (r7iz.metal-16xl and r7iz.metal-32xl), which can be used to create your Kubernetes cluster.

QAT Setup Procedure

- Install Linux kernel 5.17 or similar

- Ensure the node has QAT devices by checking that QAT physical function (PF) devices are present (see the list of supported devices):

echo `(lspci -d 8086:4940 && lspci -d 8086:4941 && lspci -d 8086:4942 && lspci -d 8086:4943 && lspci -d 8086:4946 && lspci -d 8086:4947) | wc -l` supported devices found.

- Enable IOMMU from BIOS

- Enable IOMMU for Linux kernel

- Figure out the QAT VF device id:

lspci -d 8086:4941 && lspci -d 8086:4943 && lspci -d 8086:4947

- Attach the QAT device to vfio-pci through a kernel parameter, using the device ID from the previous command.

Edit /etc/default/grub so that it contains:

GRUB_CMDLINE_LINUX="intel_iommu=on vfio-pci.ids=[QAT device id]"

update-grub

reboot

Once the system has rebooted, check whether the IOMMU has been enabled with the following command:

dmesg | grep IOMMU

[ 1.528237] DMAR: IOMMU enabled

- Enable virtual function devices for the QAT device:

modprobe vfio_pci

rmmod qat_4xxx

modprobe qat_4xxx

qat_device=$(lspci -D -d :[QAT device id] | awk '{print $1}')

for i in $qat_device; do echo 16|sudo tee /sys/bus/pci/devices/$i/sriov_numvfs; done

chmod a+rw /dev/vfio/*

- Increase the container runtime memory lock limit (using containerd as an example here; 134217728 bytes is 128 MiB):

mkdir -p /etc/systemd/system/containerd.service.d

cat <<EOF >>/etc/systemd/system/containerd.service.d/memlock.conf

[Service]

LimitMEMLOCK=134217728

EOF

Restart the container runtime (shown for containerd; CRI-O has a similar concept):

systemctl daemon-reload

systemctl restart containerd

- Install the Intel® QAT device plugin for Kubernetes:

kubectl apply -k 'https://github.com/intel/intel-device-plugins-for-kubernetes/deployments/qat_plugin?ref=main'

Verify that the plugin is deployed and has detected the QAT hardware by examining the resource allocations on the nodes:

kubectl get node -o yaml| grep qat.intel.com

Install Tetrate Enterprise Gateway for Envoy

Follow the steps in the Quickstart guide to install TEG.
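Before changing the proxy configuration, it can help to confirm which nodes actually advertise QAT resources, because the proxy pod will only be scheduled where `qat.intel.com/cy` is available. The following is a minimal sketch, not part of the official procedure: the helper name `nodes_with_qat` is hypothetical, and it assumes the device plugin reports the resource under each node's `status.allocatable`.

```shell
# Print the names of nodes whose allocatable qat.intel.com/cy count is above zero.
# Input (stdin): one line per node in the form "<node-name><TAB><count>".
# An empty or zero count means the node exposes no QAT resource.
nodes_with_qat() {
  awk -F'\t' '$2 + 0 > 0 { print $1 }'
}

# Usage against a live cluster (requires kubectl and cluster access):
#   kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.allocatable.qat\.intel\.com/cy}{"\n"}{end}' \
#     | nodes_with_qat
```

If the helper prints nothing, the EnvoyProxy pod that requests `qat.intel.com/cy` would likely stay in the Pending state, so it is worth revisiting the QAT setup steps first.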

Change EnvoyProxy Configuration for QAT

Use the Envoy Proxy image with contrib extensions and add QAT resource requests, so that the Kubernetes scheduler places the proxy on a node that provides the required resource.

cat <<EOF | kubectl apply -f -

apiVersion: gateway.envoyproxy.io/v1alpha1
kind: EnvoyProxy
metadata:
  name: teg-envoy-proxy-config
  namespace: envoy-gateway-system
spec:
  concurrency: 1
  provider:
    type: Kubernetes
    kubernetes:
      envoyService:
        type: NodePort
      envoyDeployment:
        container:
          image: envoyproxy/envoy-contrib-dev:latest
          resources:
            requests:
              cpu: 1000m
              memory: 4096Mi
              qat.intel.com/cy: '1'
            limits:
              cpu: 1000m
              memory: 4096Mi
              qat.intel.com/cy: '1'
EOF

Expose an Application Using a Gateway and HTTPRoute

- Follow the steps in the corresponding guide to expose an application

- Follow the TLS Termination guide to configure TLS Termination
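The patch policy in the next section refers to the TLS secret as `httpbin/example-cert`. As a hedged sketch, assuming that name follows a `<namespace>/<name>` pattern pointing at the Kubernetes Secret created in the TLS termination step, the hypothetical helpers below split the reference and check that the Secret exists before any patch is applied.

```shell
# Split a secret reference of the assumed form "<namespace>/<name>".
secret_namespace() { printf '%s\n' "${1%%/*}"; }
secret_name()      { printf '%s\n' "${1#*/}"; }

# Print the type of the referenced Kubernetes Secret; fails if it does not exist.
# Requires kubectl and cluster access.
check_tls_secret() {
  kubectl get secret "$(secret_name "$1")" \
    -n "$(secret_namespace "$1")" \
    -o jsonpath='{.type}'
}

# Usage:
#   check_tls_secret httpbin/example-cert
```

For a Secret created as a TLS secret, the reported type should be `kubernetes.io/tls`.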

Apply EnvoyPatchPolicy to Enable Private Key Provider

cat <<EOF | kubectl apply -f -

apiVersion: gateway.envoyproxy.io/v1alpha1
kind: EnvoyPatchPolicy
metadata:
  name: key-provider-patch-policy
  namespace: httpbin
spec:
  targetRef:
    group: gateway.networking.k8s.io
    kind: Gateway
    name: dedicated-gateway
    namespace: httpbin
  type: JSONPatch
  jsonPatches:
    - type: "type.googleapis.com/envoy.extensions.transport_sockets.tls.v3.Secret"
      name: httpbin/example-cert
      operation:
        op: add
        path: "/tls_certificate/private_key_provider"
        value:
          provider_name: qat
          typed_config:
            "@type": "type.googleapis.com/envoy.extensions.private_key_providers.qat.v3alpha.QatPrivateKeyMethodConfig"
            private_key:
              inline_string: |
                abcd
            poll_delay: 0.001s
          fallback: true
    - type: "type.googleapis.com/envoy.extensions.transport_sockets.tls.v3.Secret"
      name: httpbin/example-cert
      operation:
        op: copy
        from: "/tls_certificate/private_key"
        path: "/tls_certificate/private_key_provider/typed_config/private_key"
EOF

Test Execution

To prevent the httpbin example application from becoming a bottleneck during benchmark execution, increase the number of replicas:

kubectl patch deployment httpbin -p '{"spec":{"replicas":8}}' -n httpbin

Ensure the CPU frequency governor is set to performance:

export NUM_CPUS=$(lscpu | grep "^CPU(s):" | awk '{print $2}')

for i in $(seq 0 $((NUM_CPUS - 1))); do sudo cpufreq-set -c $i -g performance; done

Using NodePort as the example, fetch the node port from the Envoy Gateway service:

echo "127.0.0.1 www.example.com" >> /etc/hosts

export ENVOY_SERVICE=$(kubectl get svc -n envoy-gateway-system --selector=gateway.envoyproxy.io/owning-gateway-namespace=httpbin,gateway.envoyproxy.io/owning-gateway-name=dedicated-gateway -o jsonpath='{.items[0].metadata.name}')

export NODE_PORT=$(kubectl -n envoy-gateway-system get svc/$ENVOY_SERVICE -o jsonpath='{.spec.ports[1].nodePort}')

Run the benchmark with fortio twice, once without QAT enabled and once with it:

fortio load -c 64 -k -qps 0 -t 30s -keepalive=false https://www.example.com:${NODE_PORT}/httpbin/get
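To compare the two runs, the QPS figure from each fortio summary can be extracted and divided. The sketch below is illustrative only: the helper names `extract_qps` and `speedup` are hypothetical, and it assumes the fortio summary contains a line that starts with "All done" and ends with "<number> qps", which may differ between fortio versions.

```shell
# Extract the QPS value from fortio output on stdin.
# Assumption: the summary line looks like
#   "All done <n> calls (plus <m> warmup) <t> ms avg, <number> qps"
extract_qps() {
  awk '/^All done/ {
         for (i = 2; i <= NF; i++) if ($i == "qps") qps = $(i - 1)
       }
       END { if (qps != "") print qps }'
}

# Print accelerated QPS divided by baseline QPS, with two decimals.
# Exits non-zero if the baseline is zero or not a number.
speedup() {
  awk -v accel="$1" -v base="$2" 'BEGIN {
    if (base + 0 == 0) exit 1
    printf "%.2f\n", accel / base
  }'
}

# Usage (file names are placeholders):
#   fortio load ... | tee without-qat.txt    # before applying the patch policy
#   fortio load ... | tee with-qat.txt       # after applying the patch policy
#   speedup "$(extract_qps < with-qat.txt)" "$(extract_qps < without-qat.txt)"
```

With made-up inputs, `speedup 3000 1000` prints `3.00`; these numbers only illustrate the arithmetic and are not measurements.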