Lateral Movement and the Service Mesh


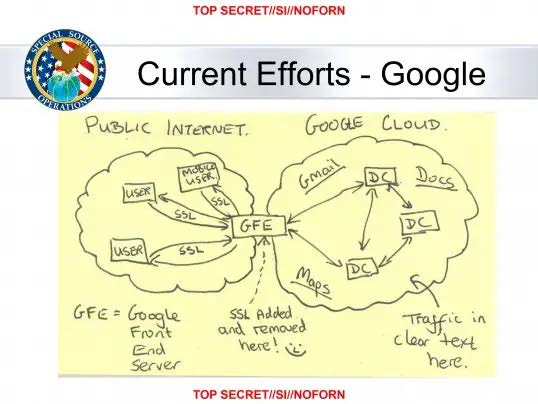

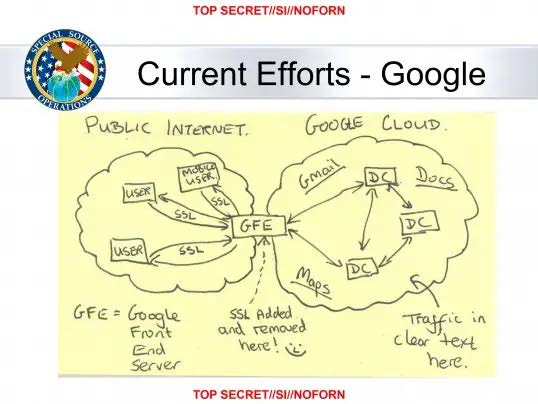

One of the core ideas motivating the zero trust architecture is that “the attacker is already in the network.” Many of the projects, tools, and techniques we see gaining widespread adoption today for enabling a zero trust architecture were born out of companies that know this first-hand. One seminal event was the Snowden leaks in 2013, which prompted Google to adopt encryption in transit for all communications, even over their own internal network.

Fig 1. Picture of a leaked NSA slide depicting Google’s internal architecture, highlighting where encryption is removed and data is communicated in clear text. The leak of this document prompted Google to implement encryption in transit everywhere, as well as follow-on projects like LOAS (publicly called ALTS) – the identity scheme that SPIFFE is based on.

Once we assume that the attacker is already in the network, the best we can hope to do is reduce our risk by bounding attacks in space and time – limiting an attacker’s ability to move laterally. Bounding an attack in space means reducing the surface area an attack can operate against – for example, firewalling inbound and outbound traffic. Bounding an attack in time means reducing the time window in which an attack can be executed – for example, requiring users to reauthenticate after a period of inactivity (NIST SP 800-63 §4.1.3, §7.2; SP 800-207 §2.1; SP 800-204, MS-SS-1).
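As a minimal illustration of bounding an attack in time, the sketch below decides whether a session must reauthenticate. The `Session` type and both limits are hypothetical values chosen for the example, not figures taken from the NIST documents cited above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical limits for illustration; real values come from your own policy.
INACTIVITY_LIMIT = timedelta(minutes=30)
MAX_SESSION_AGE = timedelta(hours=12)


@dataclass
class Session:
    authenticated_at: datetime
    last_activity_at: datetime


def needs_reauthentication(session: Session, now: datetime) -> bool:
    """Return True when the session has been idle too long or is simply too old."""
    idle_for = now - session.last_activity_at
    age = now - session.authenticated_at
    return idle_for > INACTIVITY_LIMIT or age > MAX_SESSION_AGE
```

Either limit alone caps how long a stolen session stays useful: the inactivity limit closes abandoned sessions, and the maximum age closes sessions an attacker keeps artificially active.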

Equally important, we need to put tools, processes, and personnel in place to ensure we can detect when an incident has occurred, or is occurring; respond to and remediate it; and ultimately recover normal business operations.

This document attempts to put the service mesh into context for security-oriented stakeholders by describing a layered security approach incorporating the service mesh to augment existing best practices, forming a modern, flexible security platform for your organization’s applications.

A Layered Approach to Cloud Native Security

Zero trust isn’t some nebulous goal: concretely, you need to identify your organization’s appetite for risk across the entire infrastructure (in-house applications, but also commercial off-the-shelf software, open source software, SaaS services, and so on), and build a set of processes and controls spanning that entire infrastructure. In these sections we’ll focus on how the mesh complements existing security tools and practices at various layers of the OSI model.

Network Layer (L3) Controls

Existing IP-layer controls should continue to be used. They remain effective as coarse-grained filters that help limit the surface area available to an attacker, as well as the impact of some DoS attacks. However, L3 security measures should be augmented by higher-level controls to enable better protection, detection, and response to cybersecurity events.

- Firewalls remain an effective way to provide coarse-grained control over your network, helping to limit the surface area your intranet exposes and making data egress harder for attackers. A key challenge we’ve seen is the slow rate of change for firewalls, often due to the processes involved. This can be a key factor limiting agility for application teams within organizations. We’ve seen Envoy deployed to implement application-level policy, as well as to “canonicalize” traffic so it can pass through existing firewalls more easily. With appropriate controls, we’ve seen this accelerate the rate at which application teams are able to deploy and communicate across on-prem and cloud environments.

- Segmentation, including microsegmentation around VMs/subnets and larger segmentation of the private network, remains effective for reducing the surface area exposed to attackers and their ability to move laterally once inside the network. The core challenge for segmentation is the same as for firewalls: most segmentation tools are built for a previous generation with a slower rate of change than cloud native environments see today. The service mesh can help facilitate communication between segments while providing the opportunity for higher-level policy to be applied. Again, with appropriate controls established by the platform team in partnership with the network team, we’ve seen the use of service mesh accelerate the rate at which application development teams in the organization have been able to deliver features – and value – to their customers.

- Technologies like IPsec and VPNs can be used at this layer to provide encryption in transit and a notion of identity. In our experience, the identity encoded in an IPsec certificate usually corresponds to a host rather than an application, a key difference between encryption at L3 and at L4/L7 (where we can issue certificates per application). Further, these certificates are typically long-lived (on the order of months to years), so they do not effectively bound attacks in time either. As a result, the impact of L3 encryption is not substantially different from microsegmentation from the perspective of mitigating lateral movement. One of the goals of zero trust is to move away from the idea that “access is authorization,” an idea that pervades modern perimeter-based network security and underpins most VPN usage today.

- Newer tools like Calico (BGP) and Cilium (eBPF – an L4 tool, strictly speaking) provide network-level segmentation that accommodates the rate of change demanded by modern environments, but they tend to only be deployed in the context of Kubernetes. Such tools should be used in the environments they were built for in the same way that microsegmentation should be used for VMs. The notable problem they present is that they work primarily based on labels (which is convenient, and flexible), but Kubernetes does not provide a native way for organizations to govern how labels are used and applied. As a result, projects like OPA Gatekeeper exist to provide fine-grained policy on top of Kubernetes, and these projects, if used, need to be managed as well. The service mesh helps to address this by providing a mechanism to author access policy explicitly, rather than implicitly via labels. Today, CNI and the mesh’s access policies need to be maintained to align with each other. The practice we typically see is “CNI for coarse-grained access, mesh for fine-grained”, following a very similar pattern to the mesh and firewalls.

Collectively, technologies in this layer tend to be slower to change and more brittle, a key pain point for many organizations today. When you start to augment existing controls with the mesh, you’ll need to work with your network security teams to update their security models in light of the additional capabilities the mesh brings. Often we’ll see controls at the IP layer loosened slightly to allow the organization to move faster, with the promise that equivalent controls will be provided up the stack.
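To make the “CNI for coarse-grained access, mesh for fine-grained” pattern concrete, here is a minimal Python sketch of the two checks layered together. The namespaces, service names, and rule tables are hypothetical; in a real deployment the coarse rule would live in the CNI or firewall and the fine rule in mesh authorization policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    namespace: str
    service: str


# Coarse-grained, CNI-style rule (hypothetical): which namespaces are peered.
NAMESPACE_PEERS = {("frontend", "backend"), ("backend", "data")}

# Fine-grained, mesh-style rule (hypothetical): explicit service-to-service pairs.
SERVICE_ALLOWLIST = {
    (Workload("frontend", "web"), Workload("backend", "orders")),
    (Workload("backend", "orders"), Workload("data", "orders-db")),
}


def coarse_allows(src: Workload, dst: Workload) -> bool:
    """Any workload may reach its own namespace or a peered namespace."""
    return (
        src.namespace == dst.namespace
        or (src.namespace, dst.namespace) in NAMESPACE_PEERS
    )


def fine_allows(src: Workload, dst: Workload) -> bool:
    """Only explicitly listed service pairs may communicate."""
    return (src, dst) in SERVICE_ALLOWLIST


def is_allowed(src: Workload, dst: Workload) -> bool:
    """A request must pass both layers; neither layer replaces the other."""
    return coarse_allows(src, dst) and fine_allows(src, dst)
```

In this sketch a hypothetical `reports` service in the `backend` namespace passes the coarse check to reach `orders-db`, because the namespaces are peered, but fails the fine check. That gap is what the mesh closes.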

Transport Layer (L4) Controls

Moving up the stack gives us more context to use to make access decisions, resulting in the ability to implement finer-grained controls. Many virtues of firewalls discussed above apply at L4 as well, and we should continue to use them. The biggest difference between L3 and L4 is how we implement encryption in transit.

- One of the primary concerns in this layer is TLS at the front door: public certificates. These are foundational to the modern internet. Tools like Let’s Encrypt make them free to implement for organizations of any size, and existing vendors in the space (e.g. DigiCert) provide additional features on top. The mesh integrates with OSS tools like Let’s Encrypt to help automate the process of issuing and rotating those certificates. Additionally, the mesh integrates with existing secret stores and hardware security modules (HSMs) to be able to present certificates in your existing infrastructure, if you already have robust solutions for PKI and secrets management.

- Within your infrastructure, all traffic should also be encrypted in transit – gone are the days of cleartext inside. It simply doesn’t align with the zero trust model’s assumption that “the attacker is already in the network”. Encryption and certificates provide three important properties for our system’s security: message authentication, eavesdropping prevention, and an authenticatable identity we can use for authorization. These are necessary, especially as we extend the footprint of our infrastructure outside of data centers we own and into clouds, customer data centers, and – increasingly – to the edge. Unlike the last generation of certificate infrastructure, though, we should be aggressively rotating certificates used for encryption in transit as a means of bounding attacks in time. The mesh implements certificate rotation automatically, and therefore can issue certificates with very short lifetimes – on the order of a few hours. Finally, your PKI can be used to help bound attacks in space by preventing communication, much like microsegmentation. However, the overhead of managing access in this way is large and the policy is very coarse, so we typically recommend a single internal PKI tree (possibly one per environment), augmented by runtime authorization policy to control access instead.

- Intrusion prevention systems (IPS) should continue to be deployed to help monitor and control traffic as these tend to cover protocols that the service mesh does not address today (such as FTP, SSH, or SMTP).

- Technologies like eBPF straddle the L4/L7 line; today, most technology solutions leveraging it are oriented towards Kubernetes environments, often implementing the Container Network Interface (CNI). We discuss technologies like Cilium alongside IP-based CNI above, but, in short, these are good technologies that should be applied and augmented with the mesh, in the same way microsegmentation should be applied to VMs and augmented with the mesh.

At layer four, we need to be moving to encrypt all of our network traffic, not just external traffic. This helps bound attacks in space (PKI-based trust) and time (certificate time-to-live). To facilitate this across your entire infrastructure, the mesh can be used to issue certificates to all workloads, rotate those certificates, and validate them at runtime, without application involvement.
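The effect of certificate lifetime on bounding attacks in time can be shown with a little arithmetic. The sketch below is illustrative only: the function names and the rotate-at-half-lifetime fraction are assumptions made for the example, not the behavior of any particular mesh.

```python
from datetime import datetime, timedelta

# Hypothetical: rotate once half of the certificate's lifetime has elapsed.
ROTATE_AT_FRACTION = 0.5


def rotation_due_at(issued_at: datetime, ttl: timedelta,
                    fraction: float = ROTATE_AT_FRACTION) -> datetime:
    """Time at which a replacement certificate should be requested."""
    return issued_at + ttl * fraction


def stolen_cert_usable_for(stolen_at: datetime, issued_at: datetime,
                           ttl: timedelta) -> timedelta:
    """How long a stolen certificate stays valid, ignoring revocation."""
    expires_at = issued_at + ttl
    return max(expires_at - stolen_at, timedelta(0))
```

Under these assumptions, a certificate with a four-hour lifetime that is stolen one hour after issuance is usable for at most three more hours, while a one-year certificate stolen a day after issuance remains usable for 364 days.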

Application Layer (L7) Controls

Traditionally, L7 controls have lived in a few places: at the edge of your network, in technology like WAFs and API Gateways, or in the applications themselves. The service mesh offers us another option: to augment many of those existing controls by enforcing them between all communications in the organization, not just at the edge.

- Web Application Firewalls (WAF) should still be deployed to prevent malicious payloads and other types of attacks. One of the service mesh’s newest capabilities is the ability to enforce ModSecurity-style WAF rules in the Envoy sidecar itself, meaning you can implement WAF controls across all traffic in your infrastructure, not just at the edge.

- The mesh extends encryption in transit to include application identity. More than just enabling mTLS, the service mesh also encodes an application identity into the certificates it issues to workloads (via SPIFFE). When two workloads in the mesh communicate, they perform mutual TLS – where both parties exchange certificates – so that both client and server can authenticate one another’s identity. This enables us to perform application-to-application authorization, in addition to the aforementioned benefits of TLS.

- The application-to-application authorization policy we write can include information like HTTP endpoints and verbs, not just the (IP address, port) tuple, because the mesh is application-aware. This dramatically reduces the surface area of an exposed application. We recommend (and support) generating mesh routing and authorization policy from an OpenAPI specification, so that the mesh exposes exactly what the API specification declares: nothing more and nothing less.

- Common security requirements like authenticating end-user credentials can be pulled out of applications and into the mesh, allowing a central team to manage the integration (as opposed to every team handling integration themselves). For example, some organizations we work with use the service mesh to perform single sign-on (SSO) for applications deployed in the mesh, including commercial off-the-shelf software they cannot change. When using the mesh for authentication, we can start to write policies that describe application-to-application access only in the presence of a valid user credential with the correct claims. In other words, we can write policies combining both application and user identity. For example, we can say “the Backend is allowed to call the Database only in the presence of a valid user credential carrying the READ claim.” This provides a level of control previously possible only with a close integration between the application and your user identity system. Now it can be delegated to the mesh, and the application can receive a simple, standard, authenticated end-user credential on which it can base application-specific policy. This, combined with the mesh’s ability to enforce L7 policy (described in the previous bullet), dramatically reduces the surface area available to an attacker and bounds their attack by the TTL of both the application identity and the end-user credential. This means that to execute a long-running attack, an attacker needs to continually re-steal credentials, giving defenders more opportunities to detect the intrusion.

- The mesh provides a huge amount of telemetry about applications deployed in it, including what they’re communicating with, how much they’re communicating, and how frequently. These signals can be combined with existing tools, and the data can be fed into existing runtime threat detection and risk detection systems. For example, Tetrate partners with Cequence, which provides deep insights into API traffic security, and augments their capabilities by showing those API calls in the context of the larger deployment, as well as mapping the organization to allow teams to easily understand ownership (who do you go talk to because Cequence said something was wrong?).

- Finally, the last big class of policy we see at the application level is business-specific policy that is implemented and enforced in application libraries or other (non-mesh) sidecars. If these policies are already embedded in the application, it often makes sense to keep them there. However, the mesh provides extensibility points like Wasm that allow you to implement custom policy anywhere in the mesh: at the front door like a traditional gateway, at egress, or in sidecars beside applications. We’ve seen big wins for security teams’ ability to administer and update policy quickly by migrating it out of applications and into the mesh.
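The “Backend may call the Database only with a user credential carrying the READ claim” rule from the list above can be sketched as a default-deny check. This is a toy model in Python, not a mesh API: the `Request` and `Rule` types, the SPIFFE IDs, and the path are hypothetical, and the sketch assumes the mesh has already validated both the client certificate and the end-user credential.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    source_identity: str                   # identity from the client's mTLS certificate
    method: str
    path: str
    user_claims: frozenset = frozenset()   # claims from a validated end-user credential


@dataclass(frozen=True)
class Rule:
    source_identity: str
    methods: frozenset
    path_prefix: str
    required_claim: str


# Hypothetical policy protecting the Database workload.
DATABASE_RULES = [
    Rule(
        source_identity="spiffe://example.org/ns/prod/sa/backend",
        methods=frozenset({"GET"}),
        path_prefix="/records",
        required_claim="READ",
    ),
]


def authorize(request: Request, rules: list) -> bool:
    """Default deny: allow only if some rule matches on every dimension."""
    return any(
        request.source_identity == rule.source_identity
        and request.method in rule.methods
        and request.path.startswith(rule.path_prefix)
        and rule.required_claim in request.user_claims
        for rule in rules
    )
```

A request from the Backend identity without the `READ` claim is denied, as is a request carrying the claim but arriving from any other workload identity. Both the application identity and the user identity have to line up.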

There is less standardization in the implementation and enforcement of application-level policy today compared to lower layers of the stack, because the nature of the policy tends to be inherently business-related. Part of the mesh’s attraction for many organizations is that it provides a common baseline on which to build and implement a security model that is application-aware, unlike previous solutions targeting L3 or L4.
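One example of such an application-aware baseline is deriving an allowlist from an API description, so that only declared operations are reachable. The sketch below assumes a hypothetical, heavily trimmed OpenAPI document held as a Python dictionary and uses simplified path matching; it shows the idea only and is not how any particular mesh generates policy.

```python
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}

# Hypothetical, heavily trimmed OpenAPI document.
SPEC = {
    "openapi": "3.0.0",
    "paths": {
        "/orders": {"get": {}, "post": {}},
        "/orders/{orderId}": {"get": {}},
    },
}


def allowed_operations(spec: dict) -> set:
    """Collect (METHOD, path template) pairs declared in the spec."""
    operations = set()
    for template, path_item in spec.get("paths", {}).items():
        for key in path_item:
            if key.lower() in HTTP_METHODS:
                operations.add((key.upper(), template))
    return operations


def path_matches(template: str, path: str) -> bool:
    """Match segment by segment; a {param} segment accepts any non-empty value."""
    template_parts = template.strip("/").split("/")
    path_parts = path.strip("/").split("/")
    if len(template_parts) != len(path_parts):
        return False
    return all(
        (t.startswith("{") and t.endswith("}") and p != "") or t == p
        for t, p in zip(template_parts, path_parts)
    )


def is_exposed(spec: dict, method: str, path: str) -> bool:
    """Default deny: only operations declared in the spec are reachable."""
    return any(
        declared_method == method.upper() and path_matches(template, path)
        for declared_method, template in allowed_operations(spec)
    )
```

With this spec, `GET /orders/42` is reachable, while `DELETE /orders/42` and `GET /admin` are not, because the specification never declares them.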

Putting it Together

It’s important to utilize controls at every layer to build defense in depth. It can be helpful to think of these technologies as concentric rings: those at the outside provide the most coarse-grained control, but are cheap to implement and operate. As you move in, you gain more application context, and you get the ability to enforce more fine-grained policy and provide more safety to the application, but the policies become more expensive to author and maintain. (For example, one team can maintain firewall rules for the org, but every team needs to author service-to-service access policies.) The mesh makes these fine-grained controls much easier to create, maintain, and run. Maintaining this layered defense helps keep the business on the best possible cybersecurity footing and does the most to reduce the surface area exposed to attackers.

Case Study: DMZ Gateway

To help drive this layered approach home, we’ll look at a case study we’ve repeated across many of our customers: inserting the mesh into an existing on-prem deployment with established security practices, and augmenting the existing practices with the capabilities the mesh provides. The result is a system that is able to cope with the rate of change of modern architectures while providing a stronger security baseline for all applications taking part in the mesh than the organization had previously.

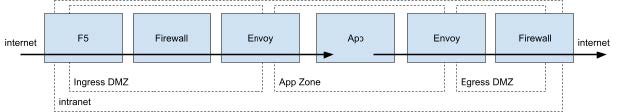

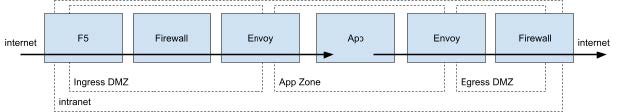

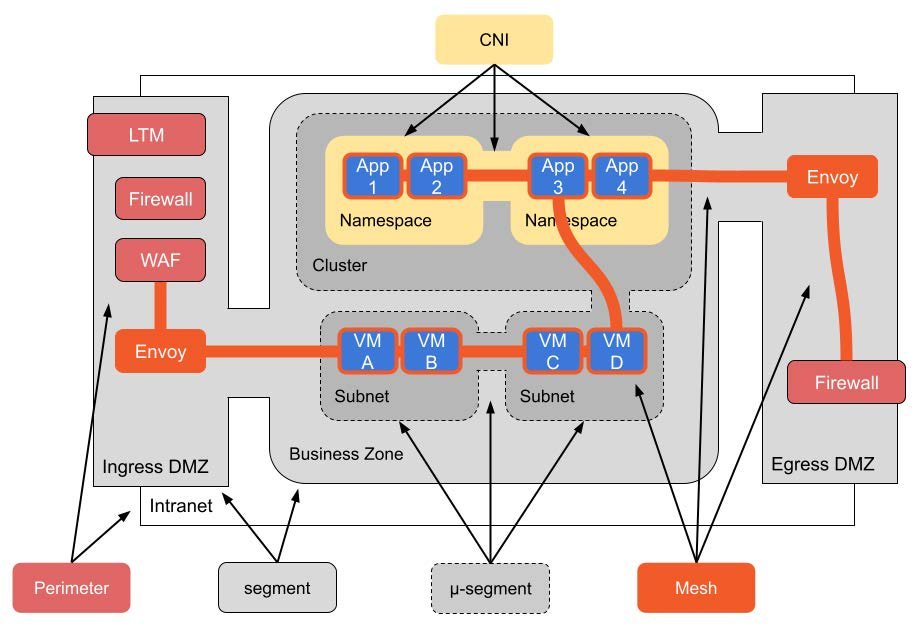

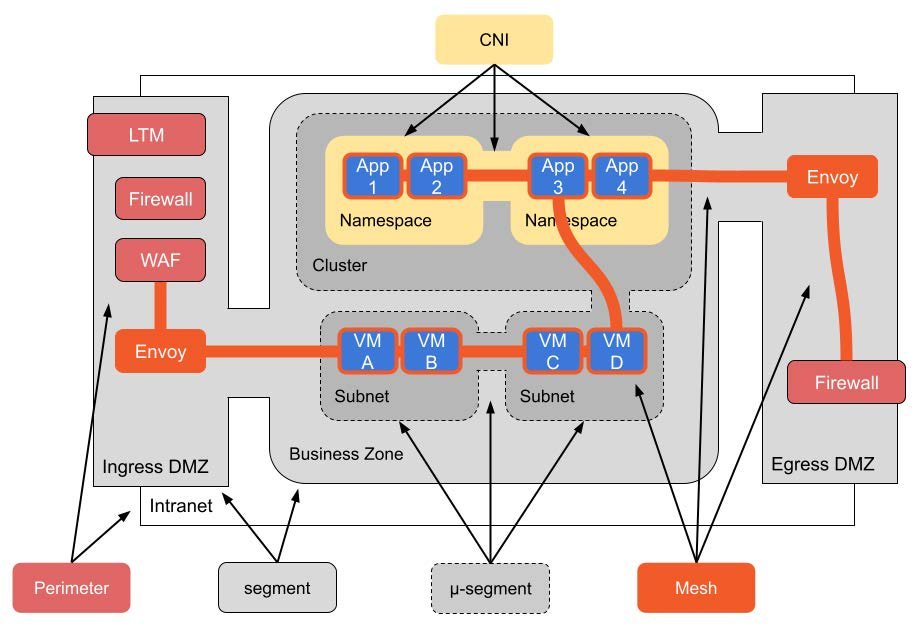

We start with a fairly typical deployment we see our customers using: to ingress traffic, a Local Traffic Manager (LTM) – like F5 – will accept connections and terminate external TLS. It will then forward that traffic through a variety of appliances, like a WAF or API Gateway (often F5 modules, but sometimes software or hardware deployed in the DMZ as well). Assuming the traffic passes whatever checks and policies are deployed, it will be forwarded on to an application in the business zone. Firewall-based coarse-grained segmentation establishes these zones – usually a DMZ, business zone, and data zone – and typically the firewall rules are fairly broad (allowing classes of traffic to access entire subnets).

The application that receives the traffic in the business zone is itself in a subnet with limited connectivity, isolated by SDN to create microsegmentation. It will freely communicate with any other applications within its own subnet, and typically rules for traffic between subnets are fairly loose too (allowing any host in the subnet to talk to any host in the peered subnet). Kubernetes clusters are typically deployed into a single subnet themselves, and so network rules usually allow anything running in the Kubernetes cluster to access entire subnets containing applications needed by some parts of the Kubernetes cluster. Within Kubernetes itself, CNI is used to isolate various workloads. Again, this is most frequently done at the namespace level, and rules allow any workload in a namespace to communicate with any workload in a peered namespace.

To communicate with services outside of the intranet, applications forward traffic to an egress gateway (often something like Squid), which ultimately forwards traffic through an outbound firewall to the internet.

In a scenario like this, we’ll typically deploy a set of Envoy gateways in the ingress DMZ, behind the inbound firewall, as the entry point to the mesh. We’ll deploy the mesh to as much of the internal infrastructure as we can (which will never be 100% of the infrastructure) to manage internal communications. Finally, we’ll deploy an Envoy gateway in the egress DMZ to help manage outbound traffic.

There are several points of friction in this scenario that the service mesh helps address, in addition to reducing the overall surface area available for attack:

- Inbound firewall rules are brittle and slow to change.

- Firewall rules between segments tend to be overly broad and poorly understood (why does the rule exist, and for which apps?)

- Outbound firewall rules tend to be overly restrictive and slow to change, slowing the rate at which the organization can move into the cloud or use third-party SaaS.

- Subnet peering rules tend to be overly broad, allowing any host in the subnet to talk to any other host in the destination subnet, even if they’re running mixes of different applications on each host.

In short: segmentation isolates broad swathes of the network from each other. Microsegmentation and CNI layer on top to further refine access to sets of hosts in the network. Finally, the service mesh sits on top of all the others, providing encryption in transit, application identity, and application-layer access control for services in the mesh. Because the service mesh is configuration-driven and rapid to change, it fits well as the layer closest to applications, on top of (and hiding) the underlying security layers, which tend to be slower to change. Together, you get a robust system that can adapt to the needs of application developers while maintaining a safe baseline for the entire organization.