OpenTelemetry Tracing Arrives in Envoy AI Gateway

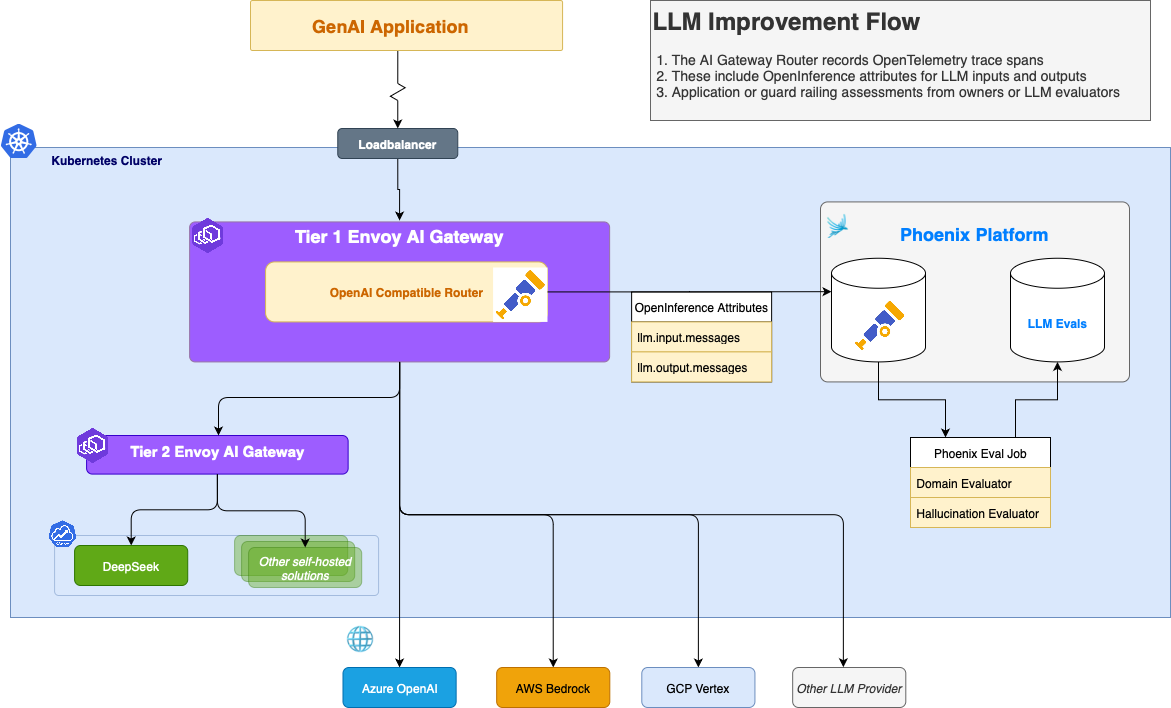

Envoy AI Gateway v0.3 adds OpenTelemetry tracing with OpenInference conventions, capturing LLM requests, responses, and key moments such as Time To First Token (TTFT) in distributed traces. Traces can be exported, without application code changes, to traditional observability tools such as Jaeger or to GenAI evaluation platforms such as Arize Phoenix.

Envoy AI Gateway v0.3 introduces GenAI OpenTelemetry tracing based on the OpenInference semantic conventions. The resulting LLM traces carry the data that application owners and subject-matter experts need to improve applications, set guardrails, and evaluate LLM behavior.

Observability Challenges in AI Applications

Traditional observability focuses on request latency, throughput, and error rates: metrics that work well for stateless HTTP services but fall short for AI applications. LLM requests involve complex cost models based on token consumption, variable response patterns with streaming outputs, and semantic failures that don’t surface as HTTP errors.

GenAI observability must include metrics such as Time To First Token (TTFT), but metrics alone are not enough. The content of LLM requests and responses is what tells you how to improve an application and which guardrails it needs.
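TTFT is the delay between sending a request and receiving the first piece of streamed output. The Python sketch below measures it by wrapping a stream of text chunks. The `TTFTRecorder` name and the rule that the first non-empty chunk counts as the first token are illustrative assumptions, not a description of how Envoy AI Gateway computes TTFT internally.

```python
import time
from typing import Callable, Iterable, Iterator, Optional


class TTFTRecorder:
    """Records Time To First Token for one streamed LLM response.

    Hypothetical helper: treats the first non-empty chunk as the first token.
    """

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self.start = clock()  # moment the request is sent
        self.ttft: Optional[float] = None  # seconds until first content, once seen

    def wrap(self, chunks: Iterable[str]) -> Iterator[str]:
        """Pass chunks through unchanged, timestamping the first non-empty one."""
        for chunk in chunks:
            if self.ttft is None and chunk:
                self.ttft = self._clock() - self.start
            yield chunk


if __name__ == "__main__":
    # Fake clock: request sent at t=10.0 s, first text arrives at t=10.5 s.
    ticks = iter([10.0, 10.5])
    recorder = TTFTRecorder(clock=lambda: next(ticks))
    text = "".join(recorder.wrap(["", "Hel", "lo"]))
    print(text, recorder.ttft)  # prints: Hello 0.5
```

Injecting the clock keeps the measurement testable; a real client would keep the default `time.monotonic`, which is not affected by wall-clock adjustments.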

The key intersection of traditional observability and GenAI observability is distributed tracing. By attaching key request and response data to trace spans, we give application owners, subject-matter experts, and even LLM-as-a-Judge processes the data they need, in the context of the overall application.

OpenInference Semantic Conventions

Rather than creating a proprietary trace format, Envoy AI Gateway adopts OpenInference, an OpenTelemetry-compatible specification designed for AI applications and adopted by many frameworks, including BeeAI and Hugging Face smolagents. OpenInference defines standardized attributes for LLM interactions, including prompts, model parameters, token usage, and responses, and records key moments such as time to first token as span events.

This span-only OpenTelemetry approach ensures compatibility with widely deployed tracing systems. For instance, you can configure Envoy AI Gateway to export traces to Jaeger, or to specialized systems such as Arize Phoenix that natively understand OpenInference. Redaction controls are available from day one, letting you balance the needs of your evaluation system against trace volume.
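To make these attributes concrete, here is a minimal Python sketch that flattens an OpenAI-style chat completion request and response into span attributes. The keys follow the OpenInference naming pattern (`openinference.span.kind`, `llm.input_messages.<i>.message.role`, `llm.token_count.prompt`, and so on). The function name, the `hide_inputs`/`hide_outputs` switches, and the `__REDACTED__` placeholder are illustrative assumptions; this is not the gateway's own implementation, and the exact attribute set it emits may differ.

```python
import json
from typing import Any, Dict

REDACTED = "__REDACTED__"  # assumed placeholder for hidden message content


def openinference_llm_attributes(
    request: Dict[str, Any],
    response: Dict[str, Any],
    hide_inputs: bool = False,
    hide_outputs: bool = False,
) -> Dict[str, Any]:
    """Flatten one OpenAI-style chat exchange into OpenInference-style span attributes.

    Hypothetical helper: assumes message content is a plain string (or None).
    """
    attrs: Dict[str, Any] = {
        "openinference.span.kind": "LLM",
        "llm.model_name": response.get("model", request.get("model", "")),
        # Everything except the messages and model name is treated as a parameter.
        "llm.invocation_parameters": json.dumps(
            {k: v for k, v in request.items() if k not in ("messages", "model")},
            sort_keys=True,
        ),
    }
    for i, msg in enumerate(request.get("messages", [])):
        prefix = f"llm.input_messages.{i}.message"
        attrs[f"{prefix}.role"] = msg["role"]
        attrs[f"{prefix}.content"] = REDACTED if hide_inputs else (msg.get("content") or "")
    for i, choice in enumerate(response.get("choices", [])):
        msg = choice["message"]
        prefix = f"llm.output_messages.{i}.message"
        attrs[f"{prefix}.role"] = msg["role"]
        attrs[f"{prefix}.content"] = REDACTED if hide_outputs else (msg.get("content") or "")
    # Token usage maps onto the llm.token_count.* attributes.
    usage = response.get("usage", {})
    for src, dst in (
        ("prompt_tokens", "prompt"),
        ("completion_tokens", "completion"),
        ("total_tokens", "total"),
    ):
        if src in usage:
            attrs[f"llm.token_count.{dst}"] = usage[src]
    return attrs
```

A tracer would then attach the resulting dictionary to the LLM span, for example with OpenTelemetry's `Span.set_attributes` in Python. Redacting message content while keeping roles and token counts preserves the cost and shape of each exchange without storing its text.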

Enabling LLM Evaluation Through Tracing

Tracing data isn’t only for in-the-moment troubleshooting; it is key to optimizing your AI system. LLM evaluation analyzes LLM inputs and outputs against domain-specific criteria or off-the-shelf metrics such as correctness or hallucination.

Most importantly, requests can be evaluated without affecting application performance. With OpenInference-compatible systems such as Arize Phoenix, you can evaluate requests of interest or even capture them into your training datasets!
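As a rough illustration of capturing traced requests into a dataset, the Python sketch below turns exported LLM span attributes (flattened OpenInference-style keys such as `llm.input_messages.0.message.content`) back into chat examples and writes them as JSON Lines. The function names, the `keep` filter for selecting requests of interest, and skipping spans that contain the assumed `__REDACTED__` placeholder are all hypothetical choices; Arize Phoenix and similar platforms provide their own export and dataset features.

```python
import json
import re
from typing import Any, Callable, Dict, Iterable, List

# Matches flattened keys such as "llm.output_messages.0.message.content".
_MSG_KEY = re.compile(r"llm\.(input|output)_messages\.(\d+)\.message\.(role|content)")
REDACTED = "__REDACTED__"  # assumed placeholder left by redaction


def span_to_example(attrs: Dict[str, Any]) -> Dict[str, List[Dict[str, Any]]]:
    """Rebuild ordered input/output message lists from one span's attributes."""
    groups: Dict[str, Dict[int, Dict[str, Any]]] = {"input": {}, "output": {}}
    for key, value in attrs.items():
        m = _MSG_KEY.fullmatch(key)
        if m:
            direction, index, field = m.group(1), int(m.group(2)), m.group(3)
            groups[direction].setdefault(index, {})[field] = value
    return {d: [msgs[i] for i in sorted(msgs)] for d, msgs in groups.items()}


def spans_to_jsonl(
    spans: Iterable[Dict[str, Any]],
    keep: Callable[[Dict[str, Any]], bool] = lambda attrs: True,
) -> str:
    """Export LLM spans selected by `keep` as JSON Lines, skipping redacted ones."""
    rows = []
    for attrs in spans:
        if attrs.get("openinference.span.kind") != "LLM" or not keep(attrs):
            continue
        example = span_to_example(attrs)
        contents = [m.get("content") for msgs in example.values() for m in msgs]
        if REDACTED in contents:
            continue  # redacted text is unusable for evaluation or training
        rows.append(json.dumps(example, sort_keys=True))
    return "\n".join(rows)
```

For example, passing `keep=lambda a: a.get("llm.token_count.completion", 0) > 500` would export only spans with long completions. Because the export works on stored span attributes, it runs entirely outside the request path.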

Zero-Application-Change Integration

Envoy AI Gateway auto-generates OpenInference traces for all OpenAI chat requests, with no application changes needed. Configuring the gateway with the standard OpenTelemetry environment variable OTEL_EXPORTER_OTLP_ENDPOINT is enough to get started.
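The Python sketch below approximates how the OpenTelemetry specification says an OTLP trace exporter derives its target from these standard variables: with OTLP/HTTP, `/v1/traces` is appended to `OTEL_EXPORTER_OTLP_ENDPOINT`; a signal-specific `OTEL_EXPORTER_OTLP_TRACES_ENDPOINT` is used as-is; with gRPC, the endpoint is used unchanged. It is a simplified illustration of the spec, not the gateway's code. The function name is hypothetical, and `http/protobuf` as the fallback protocol is an assumption.

```python
import os
from typing import Mapping


def resolve_otlp_traces_endpoint(env: Mapping[str, str]) -> str:
    """Return the URL an OTLP trace exporter would send spans to (simplified)."""
    protocol = (
        env.get("OTEL_EXPORTER_OTLP_TRACES_PROTOCOL")
        or env.get("OTEL_EXPORTER_OTLP_PROTOCOL")
        or "http/protobuf"  # assumed default protocol
    )
    # A signal-specific endpoint is used exactly as given.
    traces_endpoint = env.get("OTEL_EXPORTER_OTLP_TRACES_ENDPOINT")
    if traces_endpoint:
        return traces_endpoint
    base = env.get("OTEL_EXPORTER_OTLP_ENDPOINT")
    if protocol == "grpc":
        # gRPC has no per-signal URL path; the endpoint is the target as-is.
        return base or "http://localhost:4317"
    # OTLP/HTTP: append the per-signal path to the base URL.
    base = base or "http://localhost:4318"
    return base.rstrip("/") + "/v1/traces"


if __name__ == "__main__":
    print(resolve_otlp_traces_endpoint(os.environ))
```

In practice, this means you point OTEL_EXPORTER_OTLP_ENDPOINT at the collector or backend base URL rather than at a full `/v1/traces` path.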

For applications already instrumented with OpenTelemetry, client spans automatically join the same distributed trace as gateway spans through standard context propagation (for example, W3C traceparent or B3 headers), providing end-to-end visibility. This means your LLM traces can include everything else your application uses, such as a conventional or vector database, cloud APIs, or authorization services.
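Joining a trace comes down to propagating the trace ID. The Python sketch below shows the W3C Trace Context `traceparent` header (version 00: version, 32-hex trace ID, 16-hex parent span ID, 2-hex flags): a client formats it from its current span, and the next hop parses it, keeps the trace ID, and mints a new span ID. The class and function names are hypothetical, and B3 is not shown. In real code an OpenTelemetry propagator, such as Python's `opentelemetry.propagate.inject`, writes these headers for you.

```python
import re
import secrets
from dataclasses import dataclass
from typing import Optional

# Version-00 layout: 2 hex version, 32 hex trace-id, 16 hex parent-id, 2 hex flags.
_TRACEPARENT = re.compile(r"([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})")


@dataclass(frozen=True)
class SpanContext:
    trace_id: str  # shared by every span in the distributed trace
    span_id: str  # unique per span
    sampled: bool


def format_traceparent(ctx: SpanContext) -> str:
    """Header value a client sends so the next hop can join its trace."""
    return f"00-{ctx.trace_id}-{ctx.span_id}-{'01' if ctx.sampled else '00'}"


def parse_traceparent(header: str) -> Optional[SpanContext]:
    """Parse a traceparent header; return None for malformed or invalid values."""
    m = _TRACEPARENT.fullmatch(header.strip())
    if m is None:
        return None
    version, trace_id, span_id, flags = m.groups()
    if version == "ff" or trace_id == "0" * 32 or span_id == "0" * 16:
        return None  # values the W3C spec forbids
    return SpanContext(trace_id, span_id, sampled=bool(int(flags, 16) & 0x01))


def start_child_span(parent: SpanContext) -> SpanContext:
    """A downstream hop keeps the trace ID and mints a fresh 16-hex span ID."""
    return SpanContext(parent.trace_id, secrets.token_hex(8), parent.sampled)


if __name__ == "__main__":
    client = SpanContext("4bf92f3577b34da6a3ce929d0e0e4736", "00f067aa0ba902b7", True)
    header = format_traceparent(client)
    print(header)  # prints: 00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01
    downstream = start_child_span(parse_traceparent(header))
    print(downstream.trace_id == client.trace_id)  # prints: True
```

Because both spans share one trace ID, a backend can stitch the application span and the downstream span into a single trace view.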

Here’s an example of a simple trace that includes both application and gateway spans, shown in Arize Phoenix.

This example is part of the Envoy AI Gateway CLI quickstart, which showcases the standalone (non-Kubernetes) mode by running the gateway in Docker.

Looking Ahead

This tracing capability launches with the Envoy AI Gateway v0.3 release. See the tracing documentation for details. As AI evolves, OpenTelemetry tracing with OpenInference provides a foundation for reliable, observable systems. Join the Envoy AI Gateway and Arize Phoenix communities; we’re co-evolving tools for AI engineers and developers.