Here’s How to Use Istio with Other Nginx Ingress Proxies

Can I Use Istio with Other Ingress Proxies?

It’s been a common problem that we’ve been asked to address, and something that pops up frequently. Can I use Istio with other ingress proxies? In a word? Yes.

A persistent issue for many engineers wanting to adopt Istio is that they want the benefits it provides, including its ability to address telemetry, security, transport, and policy concerns all in one place, but they cannot redesign their entire service to fit the mesh. Instead, they need aspects of the mesh to fit in with them.

In this case, the need is specific to mutual TLS (mTLS) and the encrypted communication it provides between apps. mTLS requires both sides to prove their identity, which increases security, so it’s no surprise that engineers want to use it. The difficulty arises when teams already have established ingress proxies, for example NGINX or HAProxy, that they wish to keep without provisioning their ingress certificates from Citadel. Citadel is customizable in many ways but has yet to provide an easy way to do this specifically. Still, it can be done.

Three Common Ways to Deploy NGINX Proxy in an Istio Service Mesh

- Use dedicated ingress instances per team (in Kubernetes, a set of ingress instances per namespace)

- Use a set of ingress instances shared across several teams (namespaces)

- Use a mix, where a team has a dedicated ingress that spans multiple namespaces

The most common method is to run the ingress proxy with an Istio sidecar, which can handle certificates/identity from Citadel and perform mTLS into the mesh.

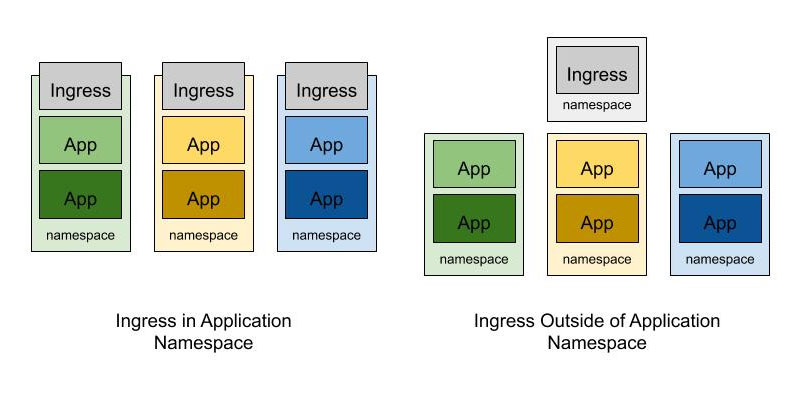

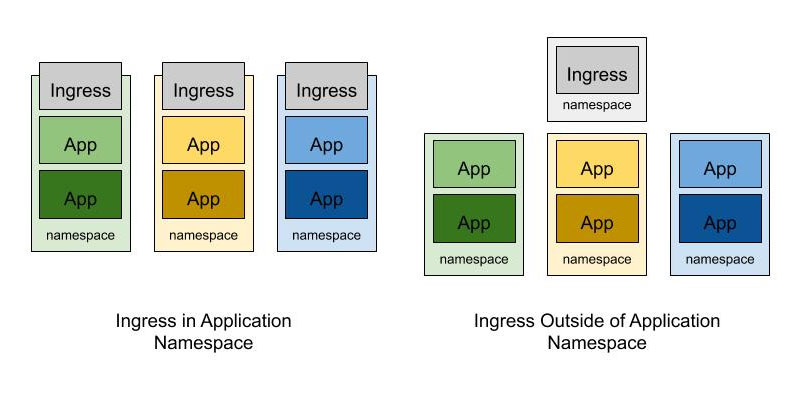

There’s a lot of confusion about how to configure this properly, but it can actually be done simply. Which configuration you need depends on how your ingress is deployed. There are three common ways in which ingress proxies are deployed in Kubernetes. The first is running dedicated ingress instances per team (in Kubernetes, a set of ingress instances per namespace). The second is a set of ingress instances shared across several teams (namespaces). The third is a mix, where a team has a dedicated ingress that spans multiple namespaces they own, or the organization has a mix of teams with dedicated ingress and teams that use a shared ingress, or a mix of all of the above. These three cases reduce to two scenarios we’ll need to configure in Istio:

- The ingress is in the same namespace as the services it forwards traffic to.

- The ingress is in a different namespace from the services it forwards traffic to.

No matter what architecture the ingress is deployed with, we’re going to use the same set of tools to solve the problem. We need to:

Deployment Pattern: Use Dedicated Ingress Instances per Team

Deploy the ingress proxy with an Envoy sidecar. Annotate the ingress deployment with:

    sidecar.istio.io/inject: 'true'

Exempt inbound traffic from going through the sidecar, since we want the ingress proxy to handle it. Annotate the deployment with:

    traffic.sidecar.istio.io/includeInboundPorts: ""
    traffic.sidecar.istio.io/excludeInboundPorts: "80,443"

Note: substitute the ports your ingress is exposing in your own deployment.

If your ingress proxy needs to talk to the Kubernetes API server (e.g. because the ingress controller is embedded into the ingress pod, like with NGINX ingress) then you’ll need to allow it to call the Kubernetes API server without the sidecar interfering. Annotate the deployment with:

    traffic.sidecar.istio.io/excludeOutboundIPRanges: "1.1.1.1/24,2.2.2.2/16,3.3.3.3/20"

Note: substitute your own Kubernetes API server IP ranges. You can find the IP address by:

    kubectl get svc kubernetes -o jsonpath='{.spec.clusterIP}'

Instead of routing the outbound traffic to the list of endpoints in the NGINX upstream configuration, you should configure the NGINX ingress to route to a single upstream service, so that the outbound traffic will be intercepted by the Istio sidecar. Add the following annotations to each Ingress resource:

    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/service-upstream: "true"
    nginx.ingress.kubernetes.io/upstream-vhost: httpbin.default.svc.cluster.local

Configure the ingress’s sidecar to route traffic to services in the mesh. This is the key piece that changes per deployment type.

- For ingresses in the same namespace as services they’re forwarding traffic to, no extra configuration is required. A sidecar in a namespace automatically knows how to route traffic to services in the same namespace.

- For ingresses in a namespace different from the services they’re forwarding traffic to, you need to author an Istio Sidecar API object in the ingress’s namespace which allows Egress to the services your ingress is routing to:

    apiVersion: networking.istio.io/v1alpha3
    kind: Sidecar
    metadata:
      name: ingress
      namespace: ingress-namespace
    spec:
      egress:
      - hosts:
        # only the frontend service in the prod-us1 namespace
        - "prod-us1/frontend.prod-us1.svc.cluster.local"
        # any service in the prod-apis namespace
        - "prod-apis/*"
        # tripping hazard: make sure you include istio-system!
        - "istio-system/*"

Note: Substitute the services/namespaces your ingress is sending traffic to. Make sure to always include “istio-system/*” or the sidecar won’t be able to talk to the control plane. (This is a temporary requirement as of 1.4.x that should be fixed in future versions of Istio.)
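The per-deployment pieces described above (the pod-template annotations and the Sidecar egress list) can be generated from a few inputs. The following is a minimal sketch rather than a definitive tool: the function names `render_annotation_patch` and `render_sidecar` are hypothetical, and the ports, CIDR, and namespaces passed at the bottom are sample values to replace with your own.

```shell
# Sketch only: function names and sample values are illustrative assumptions.

# render_annotation_patch PORTS API_SERVER_CIDR
# Prints a YAML patch that sets the sidecar annotations on the ingress
# Deployment's pod template.
render_annotation_patch() {
  ports="$1"
  api_cidr="$2"
  cat <<EOF
spec:
  template:
    metadata:
      annotations:
        sidecar.istio.io/inject: "true"
        traffic.sidecar.istio.io/includeInboundPorts: ""
        traffic.sidecar.istio.io/excludeInboundPorts: "${ports}"
        traffic.sidecar.istio.io/excludeOutboundIPRanges: "${api_cidr}"
EOF
}

# render_sidecar INGRESS_NS TARGET_NS...
# Prints a Sidecar resource that allows egress to every service in each target
# namespace. istio-system is always appended so the sidecar can still reach
# the control plane.
render_sidecar() {
  ingress_ns="$1"
  shift
  cat <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: Sidecar
metadata:
  name: ingress
  namespace: ${ingress_ns}
spec:
  egress:
  - hosts:
EOF
  for ns in "$@" istio-system; do
    printf '    - "%s/*"\n' "$ns"
  done
}

# Sample invocation with placeholder values.
render_annotation_patch "80,443" "10.0.0.1/32"
echo "---"
render_sidecar ingress default
```

The first output is shaped as a patch body, so it could be handed to `kubectl patch deployment <name> --patch "$(render_annotation_patch ...)"`, and the second could be piped to `kubectl apply -f -`. Both usages are suggestions and assume the resource names match your cluster.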

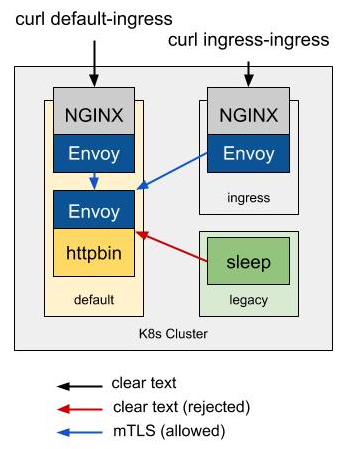

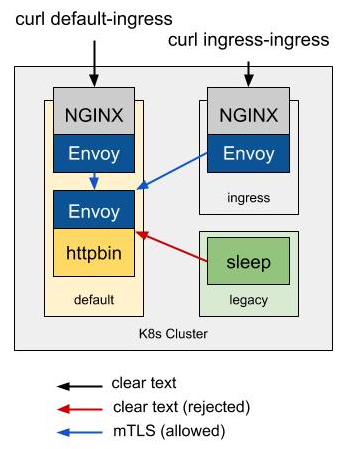

After applying all the configs to Kubernetes (see the full example below) we have the deployment pictured here. We can use curl to connect to services in the cluster to verify that traffic flows through Envoy and that mTLS is enforced.
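One way to read the `curl -v` output from these checks is to look for a 200 status together with the `x-envoy-upstream-service-time` response header, which Envoy sets and which therefore suggests that at least one sidecar handled the request. The sketch below assumes the response format shown in this article's sample output; the function name `check_ingress_response` is hypothetical, and the sample lines are canned so the snippet runs without a cluster.

```shell
# check_ingress_response reads `curl -v` output on stdin and succeeds only if
# the response status is 200 and an x-envoy-upstream-service-time header is
# present. The function name is an illustrative assumption.
check_ingress_response() {
  out="$(cat)"
  printf '%s\n' "$out" | grep -q '^< HTTP/[0-9.]* 200' || return 1
  printf '%s\n' "$out" | grep -qi '^< x-envoy-upstream-service-time:' || return 1
}

# Canned response lines so the sketch runs without a cluster.
sample='< HTTP/1.1 200 OK
< Server: nginx/1.13.9
< x-envoy-upstream-service-time: 2'

if printf '%s\n' "$sample" | check_ingress_response; then
  echo "response passed through Envoy"
else
  echo "no Envoy header found"
fi
```

Against a live cluster, the same function could be fed with `curl -sv <ingress-ip>/ip 2>&1 | check_ingress_response`, since curl writes its verbose trace to stderr.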

Deployment Pattern: Use Ingress Instances Shared across Several Teams

curl via the ingress in the same namespace as the app:

    curl $(kubectl get svc -n default ingress-nginx -o jsonpath='{.status.loadBalancer.ingress[0].ip}')/ip -v

curl via the ingress in a different namespace than the app:

    curl $(kubectl get svc -n ingress ingress-nginx -o jsonpath='{.status.loadBalancer.ingress[0].ip}')/ip -v

curl via a pod in another namespace that does not have a sidecar:

    kubectl exec -it $(kubectl get pod -n legacy -l app=sleep -o jsonpath='{.items[0].metadata.name}') -n legacy -- curl httpbin.default.svc.cluster.local:8000/ip -v

Deployment Pattern: Dedicated Ingress that Spans Multiple Namespaces

Create cluster:

    gcloud container clusters create -m n1-standard-2 ingress-test

Download istio 1.4.2:

    export ISTIO_VERSION=1.4.2; curl -L https://istio.io/downloadIstio | sh -

Deploy Istio (demo deployment with mTLS):

    ./istio-1.4.2/bin/istioctl manifest apply \
      --set values.global.mtls.enabled=true \
      --set values.global.controlPlaneSecurityEnabled=true

Label default namespace for automatic sidecar injection, deploy httpbin:

    kubectl label namespace default istio-injection=enabled
    kubectl apply -f ./istio-1.4.2/samples/httpbin/httpbin.yaml

Deploy NGINX ingress controller and config into default namespace:

    export KUBE_API_SERVER_IP=$(kubectl get svc kubernetes -o jsonpath='{.spec.clusterIP}')/32
    sed "s#__KUBE_API_SERVER_IP__#${KUBE_API_SERVER_IP}#" nginx-default-ns.yaml | kubectl apply -f -

This is the nginx configuration:

    # nginx-default-ns.yaml
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: nginx-ingress
      namespace: default
      annotations:
        kubernetes.io/ingress.class: nginx
        nginx.ingress.kubernetes.io/service-upstream: "true"
        nginx.ingress.kubernetes.io/upstream-vhost: httpbin.default.svc.cluster.local
    spec:
      backend:
        serviceName: httpbin
        servicePort: 8000
    ---
    # Deployment: nginx-ingress-controller
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-ingress-controller
      namespace: default
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: ingress-nginx
      template:
        metadata:
          labels:
            app: ingress-nginx
          annotations:
            prometheus.io/port: '10254'
            prometheus.io/scrape: 'true'
            # Do not redirect inbound traffic to Envoy.
            traffic.sidecar.istio.io/includeInboundPorts: ""
            traffic.sidecar.istio.io/excludeInboundPorts: "80,443"
            # Exclude outbound traffic to kubernetes master from redirection.
            traffic.sidecar.istio.io/excludeOutboundIPRanges: __KUBE_API_SERVER_IP__
            sidecar.istio.io/inject: 'true'
        spec:
          serviceAccountName: nginx-ingress-serviceaccount
          containers:
          - name: nginx
            image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.12.0
            securityContext:
              runAsUser: 0
            args:
            - /nginx-ingress-controller
            - --default-backend-service=$(POD_NAMESPACE)/nginx-default-http-backend
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/nginx-tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/nginx-udp-services
            - --annotations-prefix=nginx.ingress.kubernetes.io
            - --v=10
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            ports:
            - name: http
              containerPort: 80
            - name: https
              containerPort: 443
            livenessProbe:
              failureThreshold: 8
              initialDelaySeconds: 15
              periodSeconds: 10
              successThreshold: 1
              timeoutSeconds: 1
              httpGet:
                path: /healthz
                port: 10254
                scheme: HTTP
            readinessProbe:
              failureThreshold: 8
              periodSeconds: 10
              successThreshold: 1
              timeoutSeconds: 1
              httpGet:
                path: /healthz
                port: 10254
                scheme: HTTP
    ---
    # Service: ingress-nginx
    apiVersion: v1
    kind: Service
    metadata:
      name: ingress-nginx
      namespace: default
      labels:
        app: ingress-nginx
    spec:
      type: LoadBalancer
      selector:
        app: ingress-nginx
      ports:
      - name: http
        port: 80
        targetPort: http
      - name: https
        port: 443
        targetPort: https
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: nginx-configuration
      namespace: default
      labels:
        app: ingress-nginx
    data:
      ssl-redirect: "false"
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: nginx-tcp-services
      namespace: default
      labels:
        app: ingress-nginx
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: nginx-udp-services
      namespace: default
      labels:
        app: ingress-nginx
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nginx-ingress-serviceaccount
      namespace: default
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRole
    metadata:
      name: nginx-ingress-clusterrole
      namespace: default
    rules:
    - apiGroups:
      - ""
      resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
      verbs:
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - nodes
      verbs:
      - get
    - apiGroups:
      - ""
      resources:
      - services
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - "extensions"
      resources:
      - ingresses
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - events
      verbs:
      - create
      - patch
    - apiGroups:
      - "extensions"
      resources:
      - ingresses/status
      verbs:
      - update
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: Role
    metadata:
      name: nginx-ingress-role
      namespace: default
    rules:
    - apiGroups:
      - ""
      resources:
      - configmaps
      - pods
      - secrets
      - namespaces
      verbs:
      - get
    - apiGroups:
      - ""
      resources:
      - configmaps
      resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
      verbs:
      - get
      - update
    - apiGroups:
      - ""
      resources:
      - configmaps
      verbs:
      - create
    - apiGroups:
      - ""
      resources:
      - endpoints
      verbs:
      - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: RoleBinding
    metadata:
      name: nginx-ingress-role-nisa-binding
      namespace: default
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: nginx-ingress-role
    subjects:
    - kind: ServiceAccount
      name: nginx-ingress-serviceaccount
      # ServiceAccount subjects need an explicit namespace
      namespace: default
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRoleBinding
    metadata:
      name: nginx-ingress-clusterrole-nisa-binding
      namespace: default
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: nginx-ingress-clusterrole
    subjects:
    - kind: ServiceAccount
      name: nginx-ingress-serviceaccount
      namespace: default
    ---
    # Deployment: nginx-default-http-backend
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-default-http-backend
      namespace: default
      labels:
        app: nginx-default-http-backend
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx-default-http-backend
      template:
        metadata:
          labels:
            app: nginx-default-http-backend
          # rewrite kubelet's probe request to pilot agent to prevent health check failure under mtls
          annotations:
            sidecar.istio.io/rewriteAppHTTPProbers: "true"
        spec:
          terminationGracePeriodSeconds: 60
          containers:
          - name: backend
            # Any image is permissible as long as:
            # 1. It serves a 404 page at /
            # 2. It serves 200 on a /healthz endpoint
            image: gcr.io/google_containers/defaultbackend:1.4
            securityContext:
              runAsUser: 0
            ports:
            - name: http
              containerPort: 8080
            livenessProbe:
              httpGet:
                path: /healthz
                port: 8080
                scheme: HTTP
              initialDelaySeconds: 30
              timeoutSeconds: 5
            resources:
              limits:
                cpu: 10m
                memory: 20Mi
              requests:
                cpu: 10m
                memory: 20Mi
    ---
    # Service: nginx-default-http-backend
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-default-http-backend
      namespace: default
      labels:
        app: nginx-default-http-backend
    spec:
      ports:
      - name: http
        port: 80
        targetPort: http
      selector:
        app: nginx-default-http-backend
    ---

Deploy NGINX ingress controller and config into ingress namespace:

    kubectl create namespace ingress
    kubectl label namespace ingress istio-injection=enabled
    sed "s#__KUBE_API_SERVER_IP__#${KUBE_API_SERVER_IP}#" nginx-ingress-ns.yaml | kubectl apply -n ingress -f -

Ingress configuration file:

    # nginx-ingress-ns.yaml
    # Deployment: nginx-ingress-controller
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-ingress-controller
      namespace: ingress
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: ingress-nginx
      template:
        metadata:
          labels:
            app: ingress-nginx
          annotations:
            prometheus.io/port: '10254'
            prometheus.io/scrape: 'true'
            # Do not redirect inbound traffic to Envoy.
            traffic.sidecar.istio.io/includeInboundPorts: ""
            traffic.sidecar.istio.io/excludeInboundPorts: "80,443"
            # Exclude outbound traffic to kubernetes master from redirection.
            traffic.sidecar.istio.io/excludeOutboundIPRanges: __KUBE_API_SERVER_IP__
            sidecar.istio.io/inject: 'true'
        spec:
          serviceAccountName: nginx-ingress-serviceaccount
          containers:
          - name: nginx
            image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.12.0
            securityContext:
              runAsUser: 0
            args:
            - /nginx-ingress-controller
            - --default-backend-service=$(POD_NAMESPACE)/nginx-default-http-backend
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/nginx-tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/nginx-udp-services
            - --annotations-prefix=nginx.ingress.kubernetes.io
            - --v=10
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            ports:
            - name: http
              containerPort: 80
            - name: https
              containerPort: 443
            livenessProbe:
              failureThreshold: 8
              initialDelaySeconds: 15
              periodSeconds: 10
              successThreshold: 1
              timeoutSeconds: 1
              httpGet:
                path: /healthz
                port: 10254
                scheme: HTTP
            readinessProbe:
              failureThreshold: 8
              periodSeconds: 10
              successThreshold: 1
              timeoutSeconds: 1
              httpGet:
                path: /healthz
                port: 10254
                scheme: HTTP
    ---
    # Service: ingress-nginx
    apiVersion: v1
    kind: Service
    metadata:
      name: ingress-nginx
      namespace: ingress
      labels:
        app: ingress-nginx
    spec:
      type: LoadBalancer
      selector:
        app: ingress-nginx
      ports:
      - name: http
        port: 80
        targetPort: http
      - name: https
        port: 443
        targetPort: https
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: nginx-configuration
      namespace: ingress
      labels:
        app: ingress-nginx
    data:
      ssl-redirect: "false"
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: nginx-tcp-services
      namespace: ingress
      labels:
        app: ingress-nginx
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: nginx-udp-services
      namespace: ingress
      labels:
        app: ingress-nginx
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nginx-ingress-serviceaccount
      namespace: ingress
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRole
    metadata:
      name: nginx-ingress-clusterrole
      namespace: ingress
    rules:
    - apiGroups:
      - ""
      resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
      verbs:
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - nodes
      verbs:
      - get
    - apiGroups:
      - ""
      resources:
      - services
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - "extensions"
      resources:
      - ingresses
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - events
      verbs:
      - create
      - patch
    - apiGroups:
      - "extensions"
      resources:
      - ingresses/status
      verbs:
      - update
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: Role
    metadata:
      name: nginx-ingress-role
      namespace: ingress
    rules:
    - apiGroups:
      - ""
      resources:
      - configmaps
      - pods
      - secrets
      - namespaces
      verbs:
      - get
    - apiGroups:
      - ""
      resources:
      - configmaps
      resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
      verbs:
      - get
      - update
    - apiGroups:
      - ""
      resources:
      - configmaps
      verbs:
      - create
    - apiGroups:
      - ""
      resources:
      - endpoints
      verbs:
      - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: RoleBinding
    metadata:
      name: nginx-ingress-role-nisa-binding
      namespace: ingress
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: nginx-ingress-role
    subjects:
    - kind: ServiceAccount
      name: nginx-ingress-serviceaccount
      # ServiceAccount subjects need an explicit namespace
      namespace: ingress
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRoleBinding
    metadata:
      name: nginx-ingress-clusterrole-nisa-binding
      namespace: ingress
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: nginx-ingress-clusterrole
    subjects:
    - kind: ServiceAccount
      name: nginx-ingress-serviceaccount
      namespace: ingress
    ---
    # Deployment: nginx-default-http-backend
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-default-http-backend
      namespace: ingress
      labels:
        app: nginx-default-http-backend
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx-default-http-backend
      template:
        metadata:
          labels:
            app: nginx-default-http-backend
          # rewrite kubelet's probe request to pilot agent to prevent health check failure under mtls
          annotations:
            sidecar.istio.io/rewriteAppHTTPProbers: "true"
        spec:
          terminationGracePeriodSeconds: 60
          containers:
          - name: backend
            # Any image is permissible as long as:
            # 1. It serves a 404 page at /
            # 2. It serves 200 on a /healthz endpoint
            image: gcr.io/google_containers/defaultbackend:1.4
            securityContext:
              runAsUser: 0
            ports:
            - name: http
              containerPort: 8080
            livenessProbe:
              httpGet:
                path: /healthz
                port: 8080
                scheme: HTTP
              initialDelaySeconds: 30
              timeoutSeconds: 5
            resources:
              limits:
                cpu: 10m
                memory: 20Mi
              requests:
                cpu: 10m
                memory: 20Mi
    ---
    # Service: nginx-default-http-backend
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-default-http-backend
      namespace: ingress
      labels:
        app: nginx-default-http-backend
    spec:
      ports:
      - name: http
        port: 80
        targetPort: http
      selector:
        app: nginx-default-http-backend
    ---

Create the Ingress resource routing to httpbin:

    kubectl apply -f ingress-ingress-ns.yaml

    # ingress-ingress-ns.yaml
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: nginx-ingress
      namespace: ingress
      annotations:
        kubernetes.io/ingress.class: nginx
        nginx.ingress.kubernetes.io/service-upstream: "true"
        nginx.ingress.kubernetes.io/upstream-vhost: httpbin.default.svc.cluster.local
    spec:
      backend:
        serviceName: httpbin
        servicePort: 8000

Create the sidecar resource that allows traffic from the ingress namespace to default:

    kubectl apply -f sidecar-ingress-ns.yaml

    # sidecar-ingress-ns.yaml
    apiVersion: networking.istio.io/v1alpha3
    kind: Sidecar
    metadata:
      name: ingress
      namespace: ingress
    spec:
      egress:
      - hosts:
        - "default/*"
        # tripping hazard: make sure you include istio-system!
        - "istio-system/*"

Verify that external traffic can be routed to the httpbin service via nginx ingress in both default and ingress namespaces:

a. Verify traffic into the nginx ingress from the default namespace:

    curl $(kubectl get svc -n default ingress-nginx -o jsonpath='{.status.loadBalancer.ingress[0].ip}')/ip -v

b. Verify traffic into the nginx ingress from the ingress namespace:

    curl $(kubectl get svc -n ingress ingress-nginx -o jsonpath='{.status.loadBalancer.ingress[0].ip}')/ip -v

c. Expected response for both requests should look something like this:

    * Trying 34.83.167.92...
    * TCP_NODELAY set
    * Connected to 34.83.167.92 (34.83.167.92) port 80 (#0)
    > GET /ip HTTP/1.1
    > Host: 34.83.167.92
    > User-Agent: curl/7.58.0
    > Accept: */*
    >
    < HTTP/1.1 200 OK
    < Server: nginx/1.13.9
    < Date: Mon, 17 Feb 2020 21:06:18 GMT
    < Content-Type: application/json
    < Content-Length: 30
    < Connection: keep-alive
    < access-control-allow-origin: *
    < access-control-allow-credentials: true
    < x-envoy-upstream-service-time: 2
    <
    {
      "origin": "10.138.0.13"
    }
    * Connection #0 to host 34.83.167.92 left intact

Verify that the httpbin service does not receive traffic in plaintext:

a. Execute the following in a shell:

    kubectl create namespace legacy
    kubectl apply -f ./istio-1.4.2/samples/sleep/sleep.yaml -n legacy
    kubectl exec -it $(kubectl get pod -n legacy -l app=sleep -o jsonpath='{.items[0].metadata.name}') -n legacy -- curl httpbin.default.svc.cluster.local:8000/ip -v

b. The following output is expected:

    * Expire in 0 ms for 6 (transfer 0x55d92c811680)
    * Expire in 15 ms for 1 (transfer 0x55dc9cca6680)
    * Trying 10.15.247.45...
    * TCP_NODELAY set
    * Expire in 200 ms for 4 (transfer 0x55dc9cca6680)
    * Connected to httpbin.default.svc.cluster.local (10.15.247.45) port 8000 (#0)
    > GET /ip HTTP/1.1
    > Host: httpbin.default.svc.cluster.local:8000
    > User-Agent: curl/7.64.0
    > Accept: */*
    >
    * Recv failure: Connection reset by peer
    * Closing connection 0
    curl: (56) Recv failure: Connection reset by peer
    command terminated with exit code 56

Verify that the connection between nginx-ingress-controller and the httpbin service is mTLS-enabled:

a. Use the istioctl CLI to verify the authentication policy:

    ./istio-1.4.2/bin/istioctl authn tls-check $(kubectl get pod -n default -l app=ingress-nginx -o jsonpath='{.items[0].metadata.name}') httpbin.default.svc.cluster.local
    ./istio-1.4.2/bin/istioctl authn tls-check -n ingress $(kubectl get pod -n ingress -l app=ingress-nginx -o jsonpath='{.items[0].metadata.name}') httpbin.default.svc.cluster.local

b. Expected output for both nginx ingresses:

    HOST:PORT                                  STATUS     SERVER     CLIENT           AUTHN POLICY     DESTINATION RULE
    httpbin.default.svc.cluster.local:8000     OK         STRICT     ISTIO_MUTUAL     /default         istio-system/default
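If you want to script this last check instead of reading the table by eye, the output can be parsed column by column. The sketch below assumes the column order shown in the expected output above (host, status, server mode, client mode); `tls_check_ok` is a hypothetical helper name, and the sample text is canned so the snippet runs without a cluster.

```shell
# tls_check_ok reads `istioctl authn tls-check` output on stdin and succeeds
# only if every data row reports STATUS=OK, SERVER=STRICT and
# CLIENT=ISTIO_MUTUAL. The helper name and the assumed column order are
# illustrative, based on the sample output in this article.
tls_check_ok() {
  awk 'NR > 1 && NF > 0 {
         rows++
         if ($2 != "OK" || $3 != "STRICT" || $4 != "ISTIO_MUTUAL") bad++
       }
       END { exit (rows > 0 && bad == 0) ? 0 : 1 }'
}

# Canned output so the sketch runs without a cluster.
sample='HOST:PORT                                  STATUS     SERVER     CLIENT           AUTHN POLICY     DESTINATION RULE
httpbin.default.svc.cluster.local:8000     OK         STRICT     ISTIO_MUTUAL     /default         istio-system/default'

if printf '%s\n' "$sample" | tls_check_ok; then
  echo "mTLS verified"
else
  echo "mTLS check failed"
fi
```

On a real cluster, the output of either `istioctl authn tls-check` command above could be piped into `tls_check_ok` in place of the canned sample.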