What Mesh Failure Revealed at a Top 70 Bank and Why a Top 50 Financial Institution Chose to Act Sooner

In large-scale systems, small architectural flaws escalate quickly, and a misconfigured or poorly designed service mesh leaves little margin for error. Two major financial institutions illustrate the point: one responded after a failure, while the other acted early and avoided it.

The Tale of Two Financial Firms

In large-scale systems, small architectural flaws do not remain small for long. When a service mesh is misconfigured or poorly designed, the margin for error shrinks rapidly. We have seen this play out across real environments. Two of the largest financial institutions in the country, each operating at significant scale, chose very different paths. One responded after failure. The other acted early and avoided it.

A Note on Anonymity

The institutions discussed in this paper are not named. This is not to obscure the facts, but to reflect the reality of operating in regulated sectors where disclosure carries risk. What matters is not the name, but the architecture, the sequence of events, and the consequences that followed. Those working at scale will recognize the pattern.

The Recovery Case

A Fortune 70 global financial institution approached us after experiencing structural instability in their mesh. The architecture they had chosen, a peer-to-peer model from a commercial Istio vendor, appeared functional at first. As traffic grew, so did systemic strain. Configuration changes began to queue, propagation delays reached 30 minutes, and localized outages appeared. Trust between application and platform teams broke down, teams stopped using the system, and the platform team returned to the drawing board, tasked with rebuilding an architecture that could scale without downtime. Not an easy feat.

The Early Mover

Contrast that with a Fortune 50 financial institution that sits at the heart of America’s mortgage infrastructure. They recognized the limits of a peer-to-peer model before it impacted operations. They chose Tetrate Service Bridge (TSB), a more secure and scalable model, after seeing early signs of synchronization load and security risks in smaller, peer-based pilots: the very issues that would later cripple the Fortune 70 provider.

Instead of retrofitting later, they designed for scale from the outset. They adopted a hub-and-spoke mesh built on Tetrate Service Bridge, capable of supporting over 100 federated clusters, 2,000 applications, and 10,000 gateways across OpenShift and EKS.

Configuration changes now propagate in seconds. Teams operate without contention. The mesh scales without overwhelming the platform. The difference is not the tool, but the architectural foresight.

Why Peer-to-Peer Architectures Fail at Scale

Istio, in its upstream form, is a powerful construct. It offers a programmable layer for service-to-service communication, and it solves meaningful problems in observability, security, and control. For many environments, particularly those operating within a single cluster, it works as intended, with minimal friction.

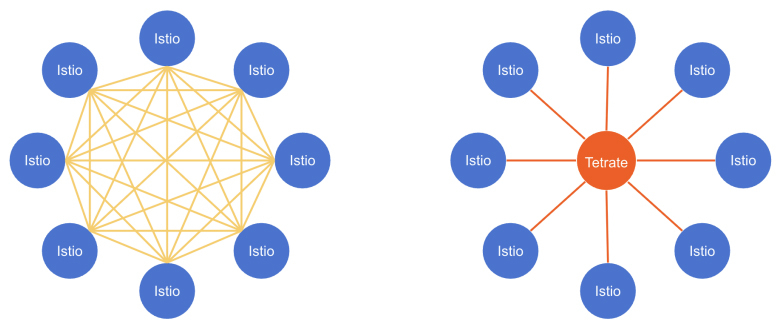

The issue is not that Istio is flawed. It is that its default approach reflects a set of assumptions about scale, coordination, and coupling that begin to break down in large, federated systems. What works in a confined topology becomes increasingly unstable when replicated across hundreds of clusters, each operating with its own pace, policies, and teams.

At the heart of upstream Istio’s multi-cluster model is a peer-to-peer mesh. Every cluster forms direct trust and communication with every other. The result is a flat topology: no central coordination, no boundaries, and no hierarchy of control.

The design may work cleanly at small scale, but at enterprise scale it shows strain. Each new cluster adds a relationship with every existing cluster, so the total number of relationships grows with the square of the cluster count. Trust becomes harder to enforce, policies take longer to propagate, and discovery floods the mesh with unnecessary data.
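The growth in relationships is easy to quantify. The sketch below is a simplified model that counts one relationship per connected pair of clusters and ignores what each relationship costs; the function names are hypothetical. In a full peer-to-peer mesh, n clusters need n(n-1)/2 relationships and the next cluster adds n of them, while a hub-and-spoke model needs one per cluster.

```python
def peer_to_peer_links(clusters: int) -> int:
    """Relationships in a full mesh: one per unordered pair of clusters."""
    return clusters * (clusters - 1) // 2


def hub_and_spoke_links(clusters: int) -> int:
    """Relationships in a hub-and-spoke model: one per cluster, to the hub."""
    return clusters


def links_added_by_next_cluster(existing: int) -> tuple[int, int]:
    """Relationships added when one more cluster joins: (peer-to-peer, hub-and-spoke)."""
    return (
        peer_to_peer_links(existing + 1) - peer_to_peer_links(existing),
        hub_and_spoke_links(existing + 1) - hub_and_spoke_links(existing),
    )


if __name__ == "__main__":
    for n in (10, 100, 500):
        print(f"{n:>4} clusters: peer={peer_to_peer_links(n):>7}  hub={hub_and_spoke_links(n):>4}")
    print("added by cluster 101:", links_added_by_next_cluster(100))
```

At 100 clusters this gives 4,950 peer relationships against 100 spokes, and the 101st cluster adds 100 peer relationships but only one spoke.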

We have seen what happens when this model is pushed too far. At the Fortune 70 global financial institution mentioned earlier, these limits became painfully visible: propagation delays reached thirty minutes, configuration drift spread silently, and outages became routine. What began as a connectivity model evolved into a systemic failure of synchronization, trust, and control.

The model was never designed to carry the operational weight placed upon it. It did not fail because it was poorly built. It failed because the system it was asked to support exceeded the assumptions baked into its architecture.

The lesson is simple. Systems must be designed not for ideal conditions, but for the conditions they are likely to encounter. Peer-to-peer meshes, for all their appeal, do not scale gracefully when those conditions become complex.

A System Designed for Systemic Complexity: Tetrate Service Bridge

Where the Fortune 70 provider struggled to contain policy drift, the Fortune 50 mortgage infrastructure firm saw these risks early and structured their mesh to contain them. In large systems, failure is often not the result of one broken component, but of excess coordination between components that change too often. This is the fundamental scaling challenge in multicluster Istio deployments.

Upstream Istio assumes a tightly coupled mesh where every control plane must synchronize with every other. As clusters are added, especially across regions, this introduces high control-plane load, inter-cluster traffic spikes, and latency-sensitive synchronization overhead. Teams begin to experience cascading delays and outages, not because the mesh is broken, but because it is overloaded.

The Tetrate Service Bridge (TSB) architecture breaks this coupling.

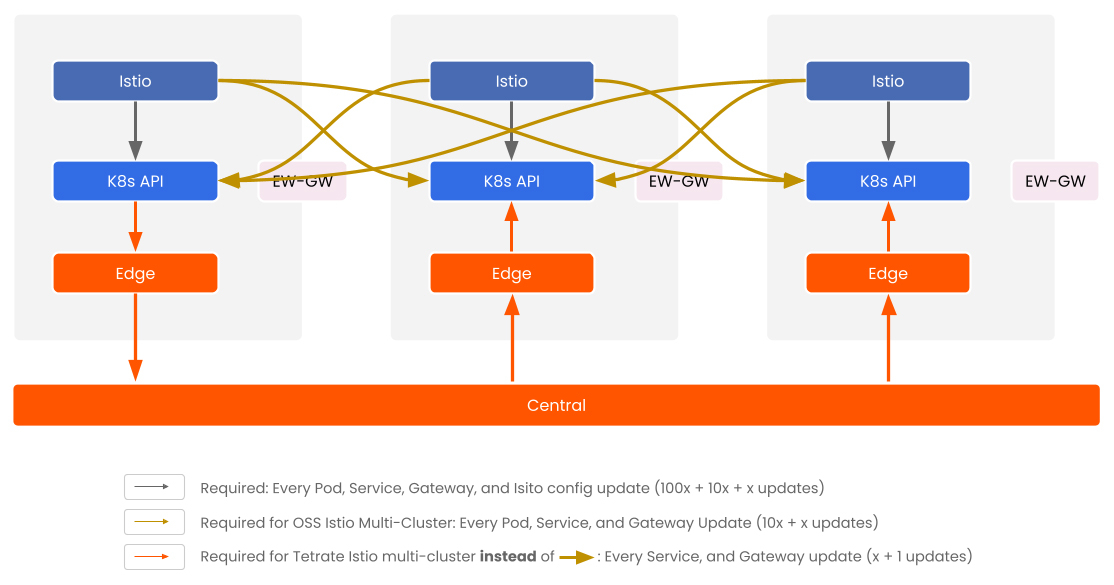

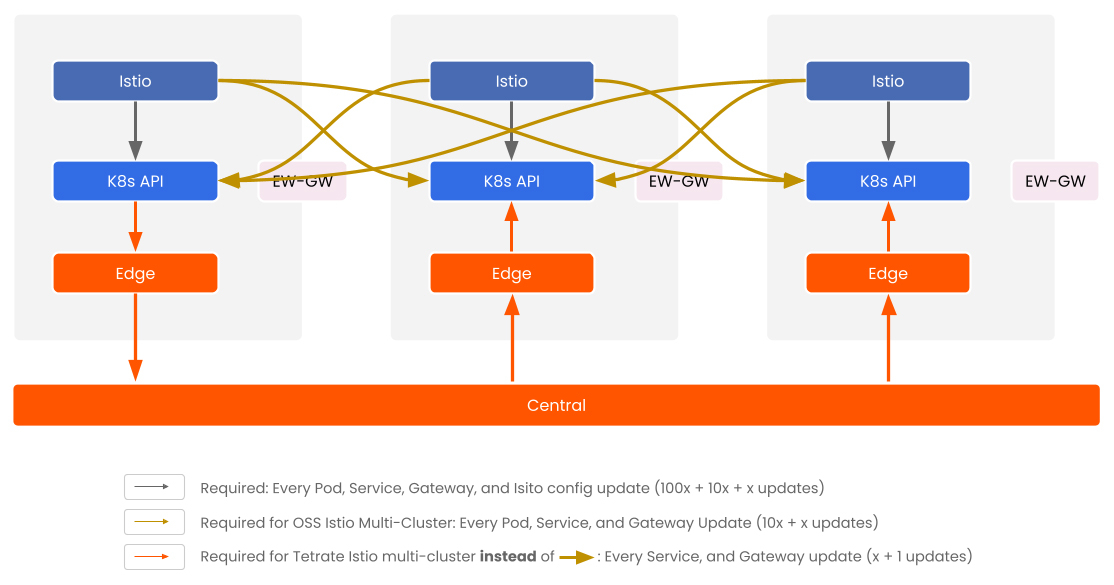

In TSB, each cluster runs its own Istio control plane alongside a local TSB component, xcp-edge, which manages local workloads and configuration. Tetrate’s global control plane component, xcp-central, handles only what must be shared globally: stable metadata such as service discovery and gateway routing.

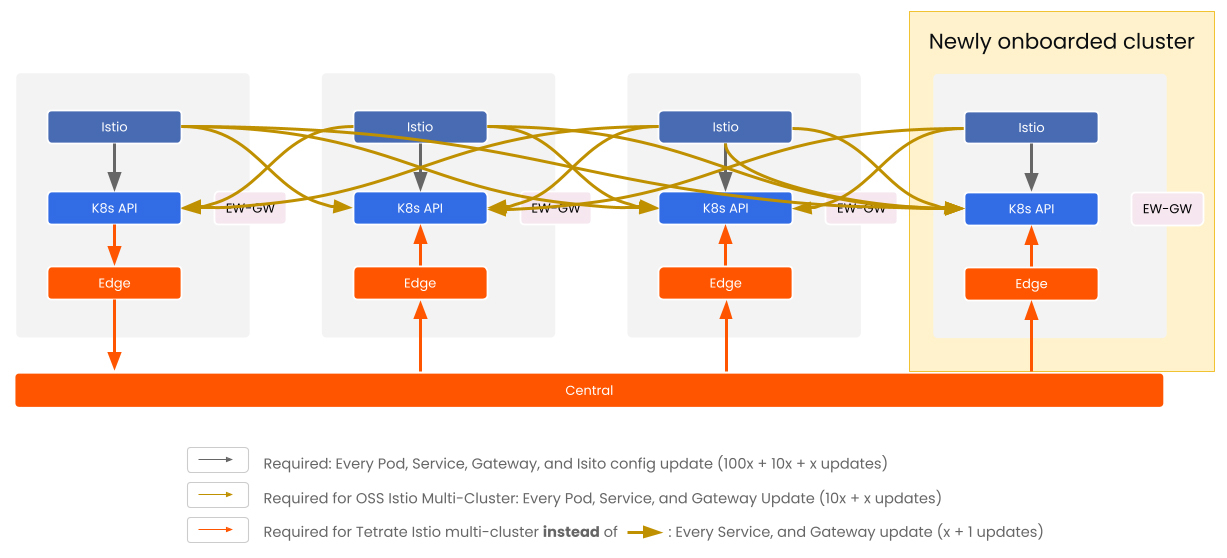

This decoupling reflects a core operational insight: not all data in the mesh changes at the same rate, and treating it all the same is what causes the scaling problem. In practice, the relative rates of change look roughly like this:

- Pods change most frequently: ~100×

- Istio configuration changes frequently: ~10×

- Services change occasionally: ~1×

- Gateways change rarely. Gateway deployments typically remain stable, and attributes such as listening ports or CPU/memory resources change infrequently. By contrast, logical configuration such as routes, RBAC policies, and other settings is updated more often to adapt to evolving requirements, without altering the underlying physical deployment.

By keeping high-frequency updates (such as pod and Istio configuration changes) within the cluster, and limiting cross-cluster communication to low-churn metadata, TSB avoids the synchronization overhead that, in a peer-based mesh, grows with both the number of clusters and the rate of every change.
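A rough model shows why the split matters. The sketch below uses the relative change rates listed above (pods ~100, Istio configuration ~10, services ~1) and assumes, for illustration only, a gateway rate of 0.1, since the text says only that gateways change rarely. It compares how many remote updates one cluster must absorb when every other cluster shares all of its changes (a deliberate simplification of the peer case) against sharing only services and gateways. The names and the model are hypothetical, not TSB internals.

```python
# Relative change rates taken from the list above. The gateway rate is an
# assumed placeholder: the text only says gateways change "rarely".
CHANGE_RATES = {
    "pod": 100.0,
    "istio_config": 10.0,
    "service": 1.0,
    "gateway": 0.1,  # assumption, not from the source
}

LOCAL_KINDS = {"pod", "istio_config"}  # high churn: stays inside the cluster
GLOBAL_KINDS = {"service", "gateway"}  # low churn: shared through the hub


def route(kind: str) -> str:
    """Decide whether an update of this kind may leave its cluster."""
    if kind in LOCAL_KINDS:
        return "local"
    if kind in GLOBAL_KINDS:
        return "global"
    raise ValueError(f"unknown update kind: {kind}")


def inbound_rate(clusters: int, shared_kinds: set[str]) -> float:
    """Remote updates one cluster absorbs per unit time when each of the
    other clusters shares its changes of the given kinds."""
    per_cluster = sum(CHANGE_RATES[kind] for kind in shared_kinds)
    return (clusters - 1) * per_cluster


if __name__ == "__main__":
    n = 100
    peer = inbound_rate(n, set(CHANGE_RATES))  # simplified peer case: everything shared
    hub = inbound_rate(n, GLOBAL_KINDS)        # hub-and-spoke: only low-churn metadata
    print(f"{n} clusters: peer={peer:,.1f} hub={hub:,.1f} ratio={peer / hub:.0f}x")
```

Under these assumed rates each cluster absorbs about 101 times fewer remote updates. Both figures still grow with the number of clusters; the saving comes from keeping high-churn data local, which is the point of the decoupling.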

In the deployment for the Fortune 70 financial services firm, TSB secured east-west traffic across hundreds of federated clusters spanning OpenShift and public cloud environments. Its split control plane (xcp-central and xcp-edge) reduced configuration propagation time from over twenty minutes to seconds. More critically, the system achieved a 75 percent reduction in propagation errors across the mesh. Application teams gained direct ownership of their cluster environments, minimizing dependence on centralized network teams. Firewall change windows, once a multi-week dependency, became unnecessary for most routine changes.

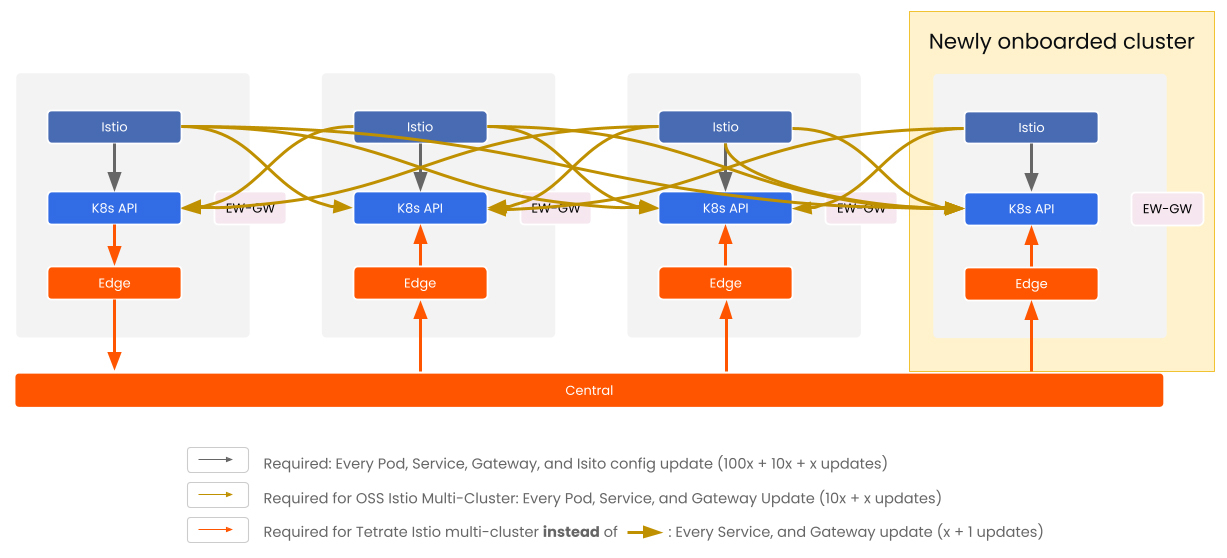

When a new cluster is onboarded, xcp-central updates only that cluster’s metadata and communicates with its corresponding xcp-edge. No global reprocessing is required. The result is a control plane that scales with what actually changes, rather than with everything that exists.

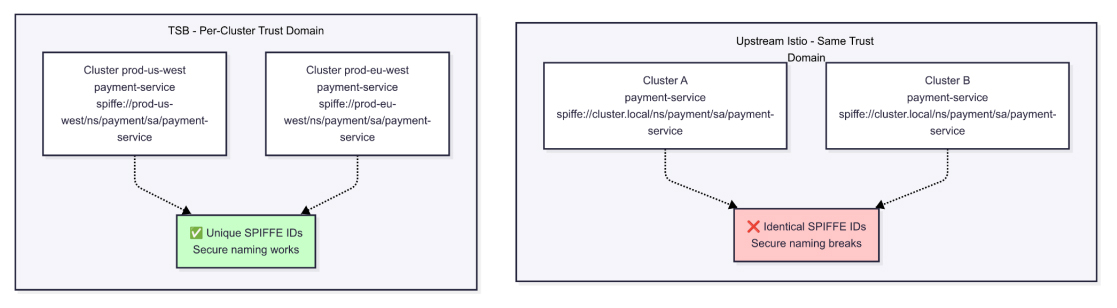

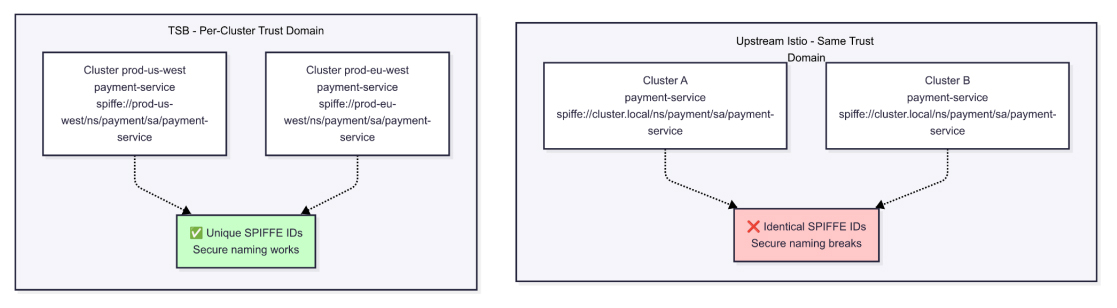

TSB’s Secure Naming Across Clusters

In distributed systems, identity must be both verifiable and unambiguous. Without that, trust breaks down across layers, and no amount of encryption compensates for confusion in who is communicating with whom.

Istio relies on mutual TLS to establish trust between services. This trust is validated through the Subject Alternative Name field in each service’s certificate. That identity is expressed as a SPIFFE ID, composed of three elements: trust domain, namespace, and service account.

For example: spiffe://cluster.local/ns/payment/sa/payment-service

This model works within a single cluster, where the trust domain is bounded. The problem emerges in multicluster deployments, where upstream Istio by default uses the same trust domain, cluster.local, across all environments. Namespaces and service accounts often follow shared naming conventions, so services in different clusters end up presenting identical SPIFFE IDs.

When two services in separate clusters claim the same identity, clients can no longer distinguish one from the other. This breaks secure naming, opening the door to unintentional privilege escalation and DNS spoofing. The failure is subtle at first, but the implications compound quickly.
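The collision is easy to reproduce. The sketch below builds SPIFFE IDs in the trust domain, namespace, and service account form described above and shows that two clusters sharing the default cluster.local trust domain produce identical identities. The helper name spiffe_id is hypothetical, and the cluster names are the illustrative ones used in this section.

```python
def spiffe_id(trust_domain: str, namespace: str, service_account: str) -> str:
    """Build an Istio-style SPIFFE ID: spiffe://<trust-domain>/ns/<ns>/sa/<sa>."""
    return f"spiffe://{trust_domain}/ns/{namespace}/sa/{service_account}"


# Two clusters that both keep the default trust domain and the same naming conventions.
clusters = {
    "prod-us-west": "cluster.local",
    "prod-eu-west": "cluster.local",
}

ids = {name: spiffe_id(td, "payment", "payment-service") for name, td in clusters.items()}

if __name__ == "__main__":
    for name, identity in ids.items():
        print(name, identity)
    # A client that sees this identity cannot tell which cluster presented it.
    print("distinguishable:", len(set(ids.values())) == len(ids))
```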

Tetrate Service Bridge resolves this by assigning each cluster a distinct trust domain. A service running in prod-us-west is issued a SPIFFE ID that reflects that locality. One running in prod-eu-west receives a different, unique identity.

For example:

- spiffe://prod-us-west/ns/payment/sa/payment-service

- spiffe://prod-eu-west/ns/payment/sa/payment-service

This adjustment seems minor but is foundational. With unique trust domains, every service identity becomes globally distinct, and secure naming works across clusters as reliably as it does within them. Clients accept a peer’s TLS certificate only if its identity matches the expected trust domain, namespace, and service account.
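The client-side check can be sketched as an exact match on all three components of the peer's SPIFFE ID. This is a simplified model of secure naming that assumes the peer certificate has already been validated against a trusted root and its URI SAN extracted; SpiffeId, parse_spiffe_id, and is_expected_peer are hypothetical helpers, not Istio or TSB APIs.

```python
from typing import NamedTuple


class SpiffeId(NamedTuple):
    trust_domain: str
    namespace: str
    service_account: str


def parse_spiffe_id(uri: str) -> SpiffeId:
    """Parse spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>."""
    prefix = "spiffe://"
    if not uri.startswith(prefix):
        raise ValueError(f"not a SPIFFE URI: {uri}")
    trust_domain, *path = uri[len(prefix):].split("/")
    if not trust_domain or len(path) != 4 or path[0] != "ns" or path[2] != "sa":
        raise ValueError(f"unexpected SPIFFE ID layout: {uri}")
    return SpiffeId(trust_domain, path[1], path[3])


def is_expected_peer(presented_uri: str, expected: SpiffeId) -> bool:
    """Accept the peer only if trust domain, namespace, and service account all match."""
    try:
        return parse_spiffe_id(presented_uri) == expected
    except ValueError:
        return False


if __name__ == "__main__":
    expected = SpiffeId("prod-us-west", "payment", "payment-service")
    print(is_expected_peer("spiffe://prod-us-west/ns/payment/sa/payment-service", expected))
    print(is_expected_peer("spiffe://prod-eu-west/ns/payment/sa/payment-service", expected))
```

Because the trust domain is part of the comparison, a certificate from prod-eu-west is rejected by a client expecting the prod-us-west identity, even though the namespace and service account are identical.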

At enterprise scale, this is not just a best practice. It is a requirement for maintaining trust across a federated mesh.

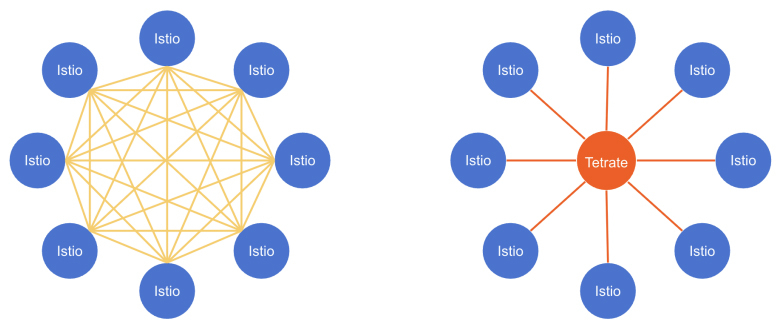

TSB’s Hub-and-Spoke Architecture

As service mesh footprints grow, especially across clusters and teams, the topology used to connect and manage those clusters plays a major role in operational scalability.

TSB architecture reflects how enterprises actually operate. It enforces mutual TLS and identity-aware routing by default, limiting exposure to only the services and namespaces required. Kubernetes APIs remain isolated between clusters, reducing the risk of lateral movement and satisfying common InfoSec constraints. Gateways provide regional failover without application rework. Policy and observability remain consistent across OpenShift, EKS, and bare metal.

This model has already been proven at scale, securing traffic and policy coordination across more than 500 federated clusters without the configuration sprawl or operational fragility seen in peer-based approaches.

By contrast, open source and vendor models that rely on peer-to-peer or multi-hub topologies become increasingly difficult to manage, because each added cluster introduces new trust relationships and policy dependencies. In early customer deployments built on these models, propagation delays frequently exceeded 20 minutes. These delays disrupted rollouts, caused friction across teams, and increased audit overhead.

Tetrate’s hub-and-spoke design eliminates these bottlenecks, bringing propagation times down to seconds, minimizing inter-team dependencies and audit complexity, and restoring autonomy to platform and application teams. The result is a mesh that scales predictably while keeping the control plane simple and configuration sprawl to a minimum.

Each cluster runs its own Istio control plane for local control. Cross-cluster traffic flows through east-west gateways, with no pod-to-pod networking between clusters. Only essential metadata is shared, keeping configuration lean and discovery fast. The design scales cleanly, supports FedRAMP, and keeps developers and operators out of each other’s way.

Figure 1: TSB Multi-Cluster architecture

Figure 2: Onboarding a new cluster into the mesh requires updating only the new Edge’s details

An Internet-Scale Analogy

A useful analogy for this architecture is BGP and the Internet. You have full knowledge of your local network, and to reach hosts on other networks you rely on routes published by BGP, which tell you which local gateway to forward traffic to in order to reach a remote address. In our setup, each Edge instance has full knowledge of its local cluster. Central plays the role of BGP, publishing “layer 7 routes” that tell Istio which remote gateways (belonging to the other Istio clusters in the mesh) to forward traffic to in order to reach a remote service. This gives platform teams the control and efficiency they need to operate secure, resilient service meshes at scale.
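To make the analogy concrete, the sketch below models the hub as a table that maps each published service to the east-west gateways of the clusters hosting it, much as a routing table maps prefixes to next hops. Each Edge answers directly for services in its own cluster and falls back to the routes Central has published for everything else. Central, Edge, publish, resolve, the service names, and the gateway hostnames are all hypothetical; this is a toy model of the analogy, not a description of how xcp-central and xcp-edge are implemented, and for brevity the edge reads the hub's table directly instead of receiving pushed updates.

```python
from dataclasses import dataclass, field


@dataclass
class Central:
    """Toy hub: publishes "layer 7 routes" from service name to remote gateways."""
    routes: dict[str, list[str]] = field(default_factory=dict)

    def publish(self, gateway: str, services: set[str]) -> None:
        # Only low-churn metadata crosses clusters: service names and the
        # gateway that fronts them. Pod addresses are never published.
        for service in sorted(services):
            gateways = self.routes.setdefault(service, [])
            if gateway not in gateways:
                gateways.append(gateway)


@dataclass
class Edge:
    """Toy edge: full knowledge of its own cluster, published routes for the rest."""
    gateway: str
    local_services: dict[str, list[str]]  # service name -> local pod addresses
    central: Central

    def onboard(self) -> None:
        # Joining the mesh is one publish call; no other edge's state is touched.
        self.central.publish(self.gateway, set(self.local_services))

    def resolve(self, service: str) -> str:
        # Like a host preferring a directly connected network over a learned route.
        if service in self.local_services:
            return f"local pod {self.local_services[service][0]}"
        remote = [gw for gw in self.central.routes.get(service, []) if gw != self.gateway]
        if remote:
            return f"remote via {remote[0]}"
        raise LookupError(f"no route to {service}")


if __name__ == "__main__":
    central = Central()
    us_west = Edge("gw.prod-us-west.example", {"payment": ["10.0.1.7"]}, central)
    eu_west = Edge("gw.prod-eu-west.example", {"ledger": ["10.8.4.2"]}, central)
    us_west.onboard()
    eu_west.onboard()
    print(us_west.resolve("payment"))
    print(us_west.resolve("ledger"))
```

In this model, onboarding a cluster only adds entries for that cluster's own services, which mirrors the onboarding behaviour described earlier: existing edges keep their local state and simply gain new routes.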

The Hard-Won Lesson: Earning Trust at Enterprise Scale

The most valuable lesson from these two institutions is that trust isn’t earned by selling easy answers. It’s built on a foundation of inconvenient truths. The simple-sounding peer-to-peer architecture is often the most complex and fragile in practice. We earned the trust of these large institutions not by dumbing down this reality, but by confronting it head-on with an architecture designed for the true complexities of enterprise scale.

One waited for failure and paid the price in outages, team breakdowns, and lost velocity. The other acted early and never needed to recover. Both arrived at the same architectural decision, but only one had to learn it the hard way. The early mover chose foresight over convenience, building a hub-and-spoke mesh that absorbed complexity and positioned it to scale, rather than starting with an easy but brittle architecture.

The lesson bears repeating: trust is not earned by offering easy answers, nor by underestimating the intelligence of those we serve. The systems we support clear global financial transactions and secure national infrastructure, and assumptions there get tested fast, often ruthlessly. We don’t downplay complexity, nor do we obscure the trade-offs. We surface them early, because deferring them only compounds the risk. That discipline has earned us the confidence of institutions where the cost of failure is measured in far more than downtime.