
Add AI Features to Your App with Ease and Flexibility: Powered by the Tetrate Agent Router Service

Adding AI to your app doesn’t have to mean building a chatbot. AI features can show up as intelligent search, personalized suggestions, summarization, or contextual automation that quietly makes your app feel smarter and more intuitive.

Building AI features today is easier than ever. You can experiment with models, try different providers, and find what delivers the best balance of cost, speed, and quality, all without managing complex infrastructure.

This post covers what to consider when picking a model for your AI features, how to get the most out of the Tetrate Agent Router Service to power them, and some ideas to get you started.


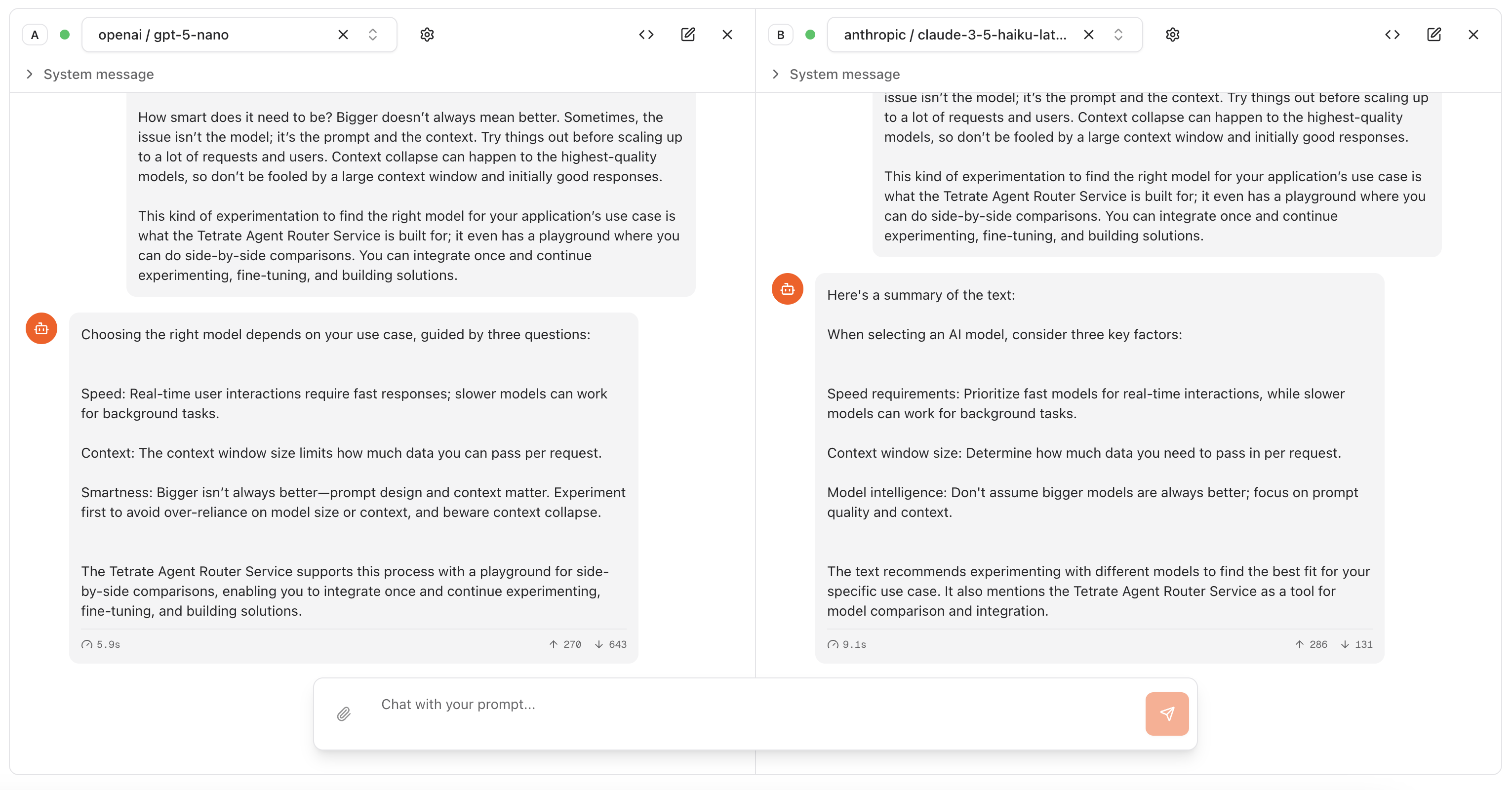

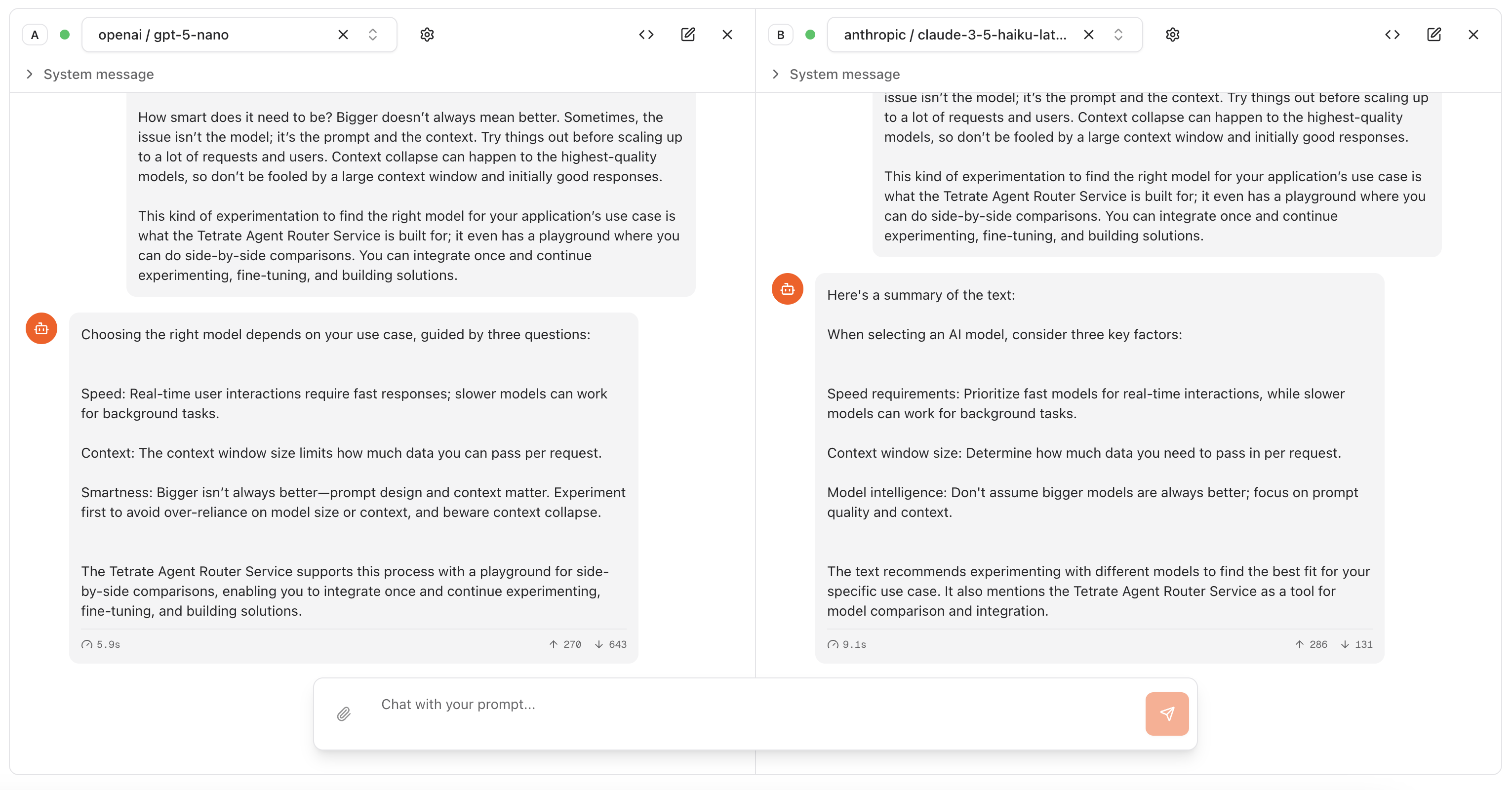

How to Choose the Right Model

Picking the right model starts with understanding your use case. Here are three questions to guide you:

- How fast does it need to be? If you’re building something users interact with in real-time, like AI search or autocompletion, speed is everything. For background tasks, like daily summaries or recommendations, you can afford to use slower models.

- How much context does it need? The size of the context window determines how much data you can pass in per request. Are you passing in a 100k-word novel or a social media post in your prompt, or somewhere in between?

- How smart does it need to be? Bigger doesn’t always mean better. Sometimes, the issue isn’t the model; it’s the prompt and the context. Try things out before scaling up to a lot of requests and users. Context collapse can happen to the highest-quality models, so don’t be fooled by a large context window and initially good responses.
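To answer the context question in concrete terms, you can estimate the token count of a prompt before choosing a model. The sketch below relies on a commonly cited rule of thumb for English text: one token is roughly 0.75 words. Real counts depend on each model's tokenizer. The 1,000-token reply reserve and the window sizes used in the usage note are illustrative assumptions, not figures for any particular model.

```typescript
// Rough rule of thumb for English text: one token is about 0.75 words.
// This is an approximation; each model's tokenizer gives the real count.
const WORDS_PER_TOKEN = 0.75;

// Estimates how many tokens a prompt of `wordCount` words will use.
function estimateTokens(wordCount: number): number {
  return Math.ceil(wordCount / WORDS_PER_TOKEN);
}

// Checks whether a prompt, plus room reserved for the model's reply,
// fits in a context window of `contextTokens` tokens.
function fitsInContext(
  promptWords: number,
  contextTokens: number,
  replyTokens = 1_000, // illustrative reserve for the response
): boolean {
  return estimateTokens(promptWords) + replyTokens <= contextTokens;
}
```

By this estimate, a 100k-word novel needs about 133,334 tokens. That will not fit in a 128k-token window. A 50-word social media post needs fewer than 100 tokens.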

This kind of experimentation to find the right model for your application’s use case is what the Tetrate Agent Router Service is built for; it even has a playground where you can do side-by-side comparisons. You can integrate once and continue experimenting, fine-tuning, and building solutions.
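The playground is the interactive way to compare models. You can also script the same comparison from your backend. A minimal sketch follows. It assumes a hypothetical `complete(model, prompt)` wrapper around your OpenAI-compatible chat call, and the model IDs you pass in are placeholders rather than a list of what the router offers.

```typescript
// A hypothetical wrapper around one OpenAI-compatible chat completion call.
type CompleteFn = (model: string, prompt: string) => Promise<string>;

interface ComparisonResult {
  model: string;
  latencyMs: number;
  output: string;
}

// Sends the same prompt to each model and records wall-clock latency.
// Requests run one after another so they don't compete and skew the timings.
async function compareModels(
  prompt: string,
  models: string[],
  complete: CompleteFn,
): Promise<ComparisonResult[]> {
  const results: ComparisonResult[] = [];
  for (const model of models) {
    const start = Date.now();
    const output = await complete(model, prompt);
    results.push({ model, latencyMs: Date.now() - start, output });
  }
  return results;
}
```

Latency answers the "how fast" question. Judging quality still means reading the outputs side by side.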

Route AI Traffic to LLMs from Your App Without the Headache

The Tetrate Agent Router Service helps you integrate, test, and manage calls to multiple model providers through one unified API. It’s OpenAI-compatible, meaning you can keep using the OpenAI-compatible SDKs you already know, and it’s built with real app environments in mind.

Here’s how to use it effectively:

1. Keep Your Environments Separate for Better Control

Create a separate API key for each environment so that observability and cost control stay clear:

- Development: for local testing

- Coding Tools: for your AI-assisted IDEs

- Non-Prod / CI / Production: for deployed environments

This separation makes it easy to trace usage, view logs, control spend, and understand how your features behave in each environment.
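One way to wire up per-environment keys on the server is to give each environment its own variable and look it up explicitly. The environment names and variable names below are hypothetical. The Coding Tools key usually lives in your IDE's settings rather than in application code, so it is left out.

```typescript
// Hypothetical environment names; adjust to match your deployment setup.
type AppEnv = "development" | "nonprod" | "ci" | "production";

// Hypothetical variable names, one per environment.
const KEY_VARS: Record<AppEnv, string> = {
  development: "ROUTER_API_KEY_DEV",
  nonprod: "ROUTER_API_KEY_NONPROD",
  ci: "ROUTER_API_KEY_CI",
  production: "ROUTER_API_KEY_PROD",
};

// Returns the key for the given environment and fails loudly if it is
// missing, so a deployment never silently reuses another environment's key.
function apiKeyFor(env: AppEnv, vars: Record<string, string | undefined>): string {
  const name = KEY_VARS[env];
  const key = vars[name];
  if (!key) {
    throw new Error(`Missing ${name} for the ${env} environment`);
  }
  return key;
}
```

On Node you might call `apiKeyFor("production", process.env)`. On Deno-based runtimes, `Deno.env.toObject()` gives you a comparable record.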

2. Make Sure Your Integration Is Secure

Never expose your API key in the browser. Your application’s calls to the Agent Router Service should be made from the server side.

If your app doesn’t already have a backend, don’t worry: modern platforms make it easy to run lightweight server code with:

- Netlify Edge Functions

- Supabase Edge Functions

- or similar serverless runtimes

These let you securely call the Agent Router Service API and return results to your frontend without maintaining a full server.
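Here is what "server-side only" can look like in practice. This is a minimal sketch of a proxy handler built on the web-standard `Request`/`Response` types that edge runtimes use. The browser sends only the text to summarize, and the API key is attached on the server. The base URL, model ID, request field names, and system prompt are assumptions. Check the Agent Router Service documentation for the exact endpoint.

```typescript
// Sketch of a server-side proxy for an edge runtime. Field names, the system
// prompt, and the config values are illustrative assumptions.
interface HandlerConfig {
  apiKey: string; // server-side secret; never shipped to the browser
  baseUrl: string; // the router's OpenAI-compatible base URL (check the docs)
  model: string; // placeholder model ID
  fetchImpl?: typeof fetch; // injectable for testing
}

// Small helper so the sketch does not depend on the newer Response.json().
function jsonResponse(body: unknown, status = 200): Response {
  return new Response(JSON.stringify(body), {
    status,
    headers: { "Content-Type": "application/json" },
  });
}

function createSummarizeHandler(config: HandlerConfig) {
  const doFetch = config.fetchImpl ?? fetch;
  return async (req: Request): Promise<Response> => {
    if (req.method !== "POST") {
      return jsonResponse({ error: "Use POST" }, 405);
    }
    // Treat a missing or malformed JSON body as an empty request.
    const body = (await req.json().catch(() => ({}))) as { text?: string };
    if (!body.text) {
      return jsonResponse({ error: "Missing text" }, 400);
    }
    // The Authorization header is attached here, on the server, so the key
    // never appears in frontend code or in the browser's network requests.
    const upstream = await doFetch(`${config.baseUrl}/chat/completions`, {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${config.apiKey}`,
      },
      body: JSON.stringify({
        model: config.model,
        messages: [
          { role: "system", content: "Summarize the user's text in two sentences." },
          { role: "user", content: body.text },
        ],
      }),
    });
    if (!upstream.ok) {
      return jsonResponse({ error: "Upstream request failed" }, 502);
    }
    const data = (await upstream.json()) as {
      choices: { message: { content: string } }[];
    };
    // Only the generated text is returned to the frontend.
    return jsonResponse({ summary: data.choices[0].message.content });
  };
}
```

In Supabase Edge Functions you would pass the handler to `Deno.serve`. In Netlify Edge Functions you would export it as the default function. In both cases, read the key from the platform's secret or environment-variable store.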

3. Get Building Fast with SDKs

Since the Agent Router Service is OpenAI-compatible, you can use your favorite tools:

- OpenAI SDK for JavaScript: perfect for server-side integrations

- Vercel AI SDK: ideal for React apps; supports streaming responses for a snappy UX

No need to learn a new API. Just point your SDK’s base URL at the Agent Router Service and start experimenting.
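With the OpenAI SDK for JavaScript, switching endpoints means setting the `baseURL` option when you construct the client. The Vercel AI SDK handles streaming for you. The sketch below shows what streaming looks like underneath by extracting text from an OpenAI-style server-sent event stream. It assumes the Agent Router Service streams in the same `data: {...}` / `data: [DONE]` format as OpenAI's Chat Completions API. That follows from its OpenAI compatibility, but it is worth confirming in the docs. `createDeltaParser` is a hypothetical helper name.

```typescript
// Shape of one streamed chat completion chunk (only the fields used here).
interface StreamChunk {
  choices: { delta?: { content?: string } }[];
}

// Returns a function that accepts raw text chunks from the stream, calls
// `onText` for each content delta, and returns true once `data: [DONE]` arrives.
// Partial lines are buffered, because network chunks can split an event.
function createDeltaParser(onText: (text: string) => void) {
  let buffer = "";
  return (chunk: string): boolean => {
    buffer += chunk;
    const lines = buffer.split("\n");
    // Keep the last (possibly incomplete) line for the next chunk.
    buffer = lines.pop() ?? "";
    for (const raw of lines) {
      const line = raw.trim();
      if (!line.startsWith("data:")) continue; // skip blank separator lines
      const payload = line.slice("data:".length).trim();
      if (payload === "[DONE]") return true;
      const parsed = JSON.parse(payload) as StreamChunk;
      const text = parsed.choices[0]?.delta?.content;
      if (text) onText(text);
    }
    return false;
  };
}
```

In a handler, you would read `response.body` with `getReader()` and decode each chunk with a `TextDecoder`, passing `{ stream: true }`. Feed the decoded text to the parser until it returns `true`, forwarding each delta to the UI as it arrives.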

Building Inspiration: Try Building These 3 AI Features

Here are three simple ideas to prototype. Each one teaches core skills for integrating AI into an application.

- Knowledge Base Summarizer: Add a short AI-generated summary at the top of each article to help users understand content instantly.

- Response Suggester Plugin: Build a Chrome extension that drafts responses automatically to save time in communication-heavy workflows.

- GitHub Activity Summarizer: Pull recent GitHub events, summarize them, and display updates in a clean dashboard.
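For the GitHub Activity Summarizer, most of the work is shaping the input before it reaches the model. The sketch below assumes events come from GitHub's REST API (for example `GET /users/{username}/events/public`). It uses only three of the fields those event objects carry. The system prompt wording and the `maxEvents` cap are illustrative choices, and `buildActivityMessages` is a hypothetical helper.

```typescript
// Minimal subset of fields from a GitHub REST API event object.
interface GitHubEvent {
  type: string; // e.g. "PushEvent", "IssuesEvent"
  repo: { name: string }; // "owner/repo"
  created_at: string; // ISO 8601 timestamp
}

interface ChatMessage {
  role: "system" | "user";
  content: string;
}

// Condenses each event to one line and wraps the digest in chat messages
// for an OpenAI-compatible chat completions request.
function buildActivityMessages(events: GitHubEvent[], maxEvents = 30): ChatMessage[] {
  const lines = events
    .slice(0, maxEvents) // cap input size to control tokens and cost
    .map((e) => `${e.created_at} ${e.type} ${e.repo.name}`);
  return [
    {
      role: "system",
      content: "Summarize this GitHub activity as three short bullet points for a dashboard.",
    },
    { role: "user", content: lines.join("\n") },
  ];
}
```

Fetch the events on the server, pass them through the helper, and send the result as the `messages` array of a chat completions request. Keeping one line per event keeps requests small, which helps with both cost and context-window limits.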

Summary

The Tetrate Agent Router Service makes it easy to add AI features into your applications by providing a single, OpenAI-compatible API to connect with various LLMs. A router makes it easy to test and experiment with different models to find the best mix of speed, context window size, and “intelligence” for your specific needs. By keeping API keys separate for different environments and securing calls on the server side, you can effectively manage cost and monitor your AI features.

The service also works with familiar SDKs, allowing you to use existing tools while taking advantage of features like streaming responses. These capabilities make it simple to create AI features such as knowledge base summarizers, response suggesters, and activity summarizers, improving your app’s user experience.

Start Building Today

Adding AI to your app isn’t about chasing hype; it’s about adding value to your users’ journey. With Tetrate Agent Router Service, you can connect to multiple models, test ideas quickly, and scale what works, all through one API, while tracking spending in one platform.

Sign up for Tetrate Agent Router Service to get started today. You also get a $5 credit when you sign up with your business email.

Contact us to learn how Tetrate can help with your journey. Follow us on LinkedIn for the latest updates and best practices.