LLM Gateway Use Cases

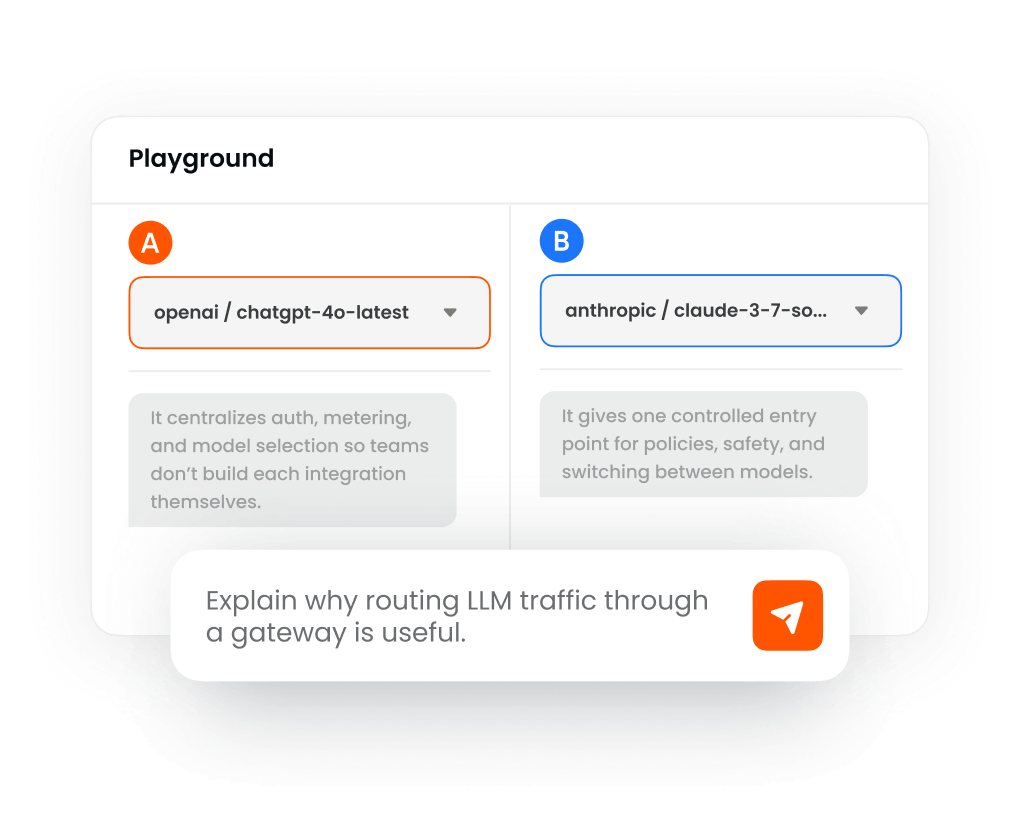

Unified Model Access

Use a single key to access every LLM.

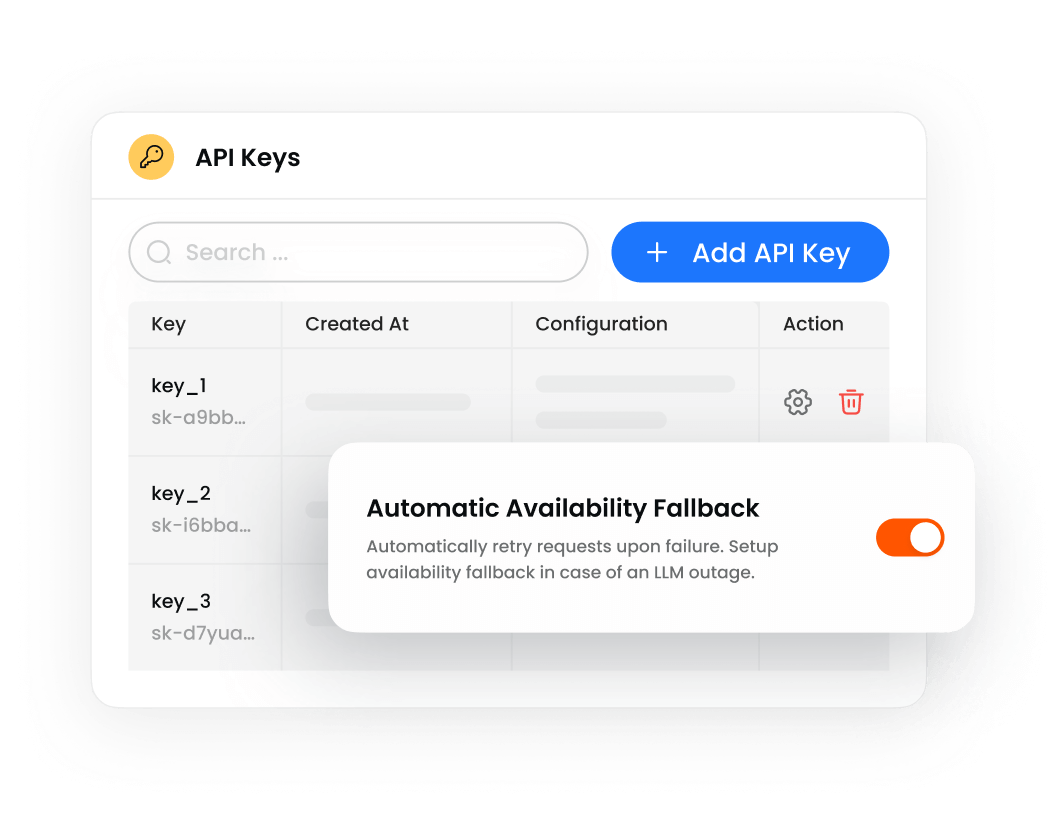

Automatic Fallback

Retry or failover automatically in case of an LLM outage.

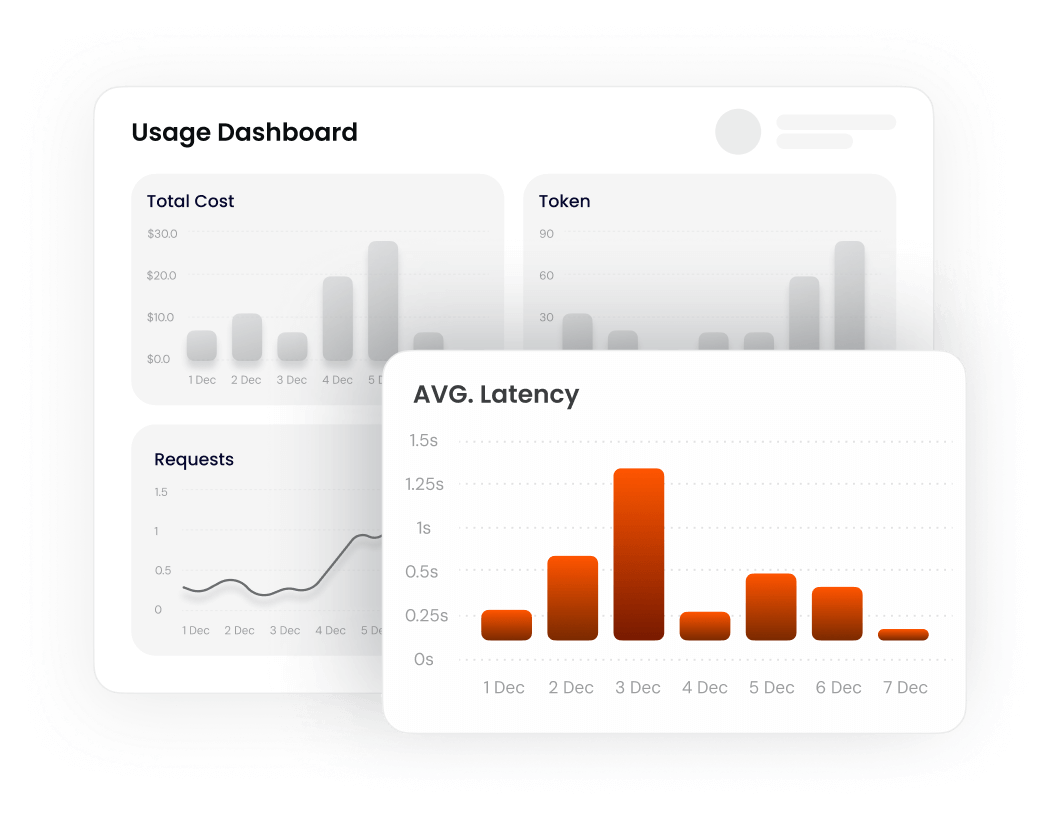

Cost Management

Optimize costs with intelligent request routing.

Get an approved LLM catalog, unified model access, automatic fallback, & cost management

Access an extensive library of LLMs, and curate your catalog into an approved list of the models you choose.
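An approved catalog amounts to an allowlist that the gateway checks before forwarding a request. The sketch below is a minimal illustration under that assumption; `ApprovedCatalog`, `ModelNotApprovedError`, and the model names are hypothetical and not part of any real gateway API.

```python
from __future__ import annotations

from dataclasses import dataclass, field


class ModelNotApprovedError(Exception):
    """Raised when a request names a model outside the approved catalog."""


@dataclass
class ApprovedCatalog:
    # Hypothetical allowlist; the model names used below are illustrative only.
    approved: set[str] = field(default_factory=set)

    def approve(self, model: str) -> None:
        self.approved.add(model)

    def revoke(self, model: str) -> None:
        self.approved.discard(model)

    def check(self, model: str) -> str:
        """Return the model name if it is approved, otherwise refuse the request."""
        if model not in self.approved:
            raise ModelNotApprovedError(f"model {model!r} is not in the approved catalog")
        return model


catalog = ApprovedCatalog({"provider-a/large", "provider-b/small"})
catalog.check("provider-a/large")  # approved, so the request may proceed
catalog.revoke("provider-b/small")  # later requests for this model are refused
```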

Set rules for automatic retry or failover when a request to a model fails, and route requests based on token limits or request type.
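Retry, failover, and token-limit routing can be combined in one request loop. The following is a sketch under stated assumptions, not the product's actual rule engine: `Route`, `ProviderError`, `estimate_tokens`, and `complete` are hypothetical names, the word-count token estimate is a crude stand-in for a real tokenizer, and exponential backoff is one possible retry policy among several.

```python
from __future__ import annotations

import time
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


class ProviderError(Exception):
    """Stand-in for an outage or a failed response from an upstream LLM."""


@dataclass
class Route:
    model: str                  # hypothetical model name
    call: Callable[[str], str]  # hypothetical client function for that model
    max_tokens: int             # routing rule: largest prompt this route accepts


def estimate_tokens(prompt: str) -> int:
    # Rough assumption: one token per whitespace-separated word.
    return len(prompt.split())


def complete(prompt: str, routes: Sequence[Route], retries: int = 2,
             backoff: float = 0.5) -> tuple[str, str]:
    """Return (model, response) from the first eligible route that succeeds."""
    needed = estimate_tokens(prompt)
    # Token-limit routing: skip any route whose ceiling the prompt exceeds.
    eligible = [r for r in routes if needed <= r.max_tokens]
    last_error: Optional[Exception] = None
    for route in eligible:  # failover: try routes in priority order
        for attempt in range(retries + 1):
            try:
                return route.model, route.call(prompt)
            except ProviderError as err:
                last_error = err
                if attempt < retries:  # retry the same model with exponential backoff
                    time.sleep(backoff * 2 ** attempt)
    raise RuntimeError("no eligible model returned a response") from last_error


def primary(prompt: str) -> str:
    raise ProviderError("primary model unavailable")  # simulate an outage


def backup(prompt: str) -> str:
    return f"backup: {prompt}"


routes = [Route("primary-model", primary, 4000), Route("backup-model", backup, 4000)]
print(complete("Summarize this ticket", routes, backoff=0.0))
```

Here the primary route is retried twice before the loop fails over to the backup; a route whose `max_tokens` is below the prompt's estimated size is never tried at all.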

Gain insight into token usage and per-app consumption, and optimize for cost, performance, speed, or any combination of these.
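Per-app cost insight boils down to recording tokens per request and multiplying by each model's price. The sketch below assumes per-1,000-token pricing split into input and output rates; the prices, model names, `UsageRecord`, and `summarize` are hypothetical placeholders, not real provider rates or a documented gateway API.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens; real prices differ by provider and model.
PRICE_PER_1K = {
    "model-large": {"input": 0.010, "output": 0.030},
    "model-small": {"input": 0.001, "output": 0.002},
}


@dataclass
class UsageRecord:
    app: str            # which application sent the request
    model: str          # which model served it
    input_tokens: int
    output_tokens: int


def cost(record: UsageRecord) -> float:
    """Price one request from its input and output token counts."""
    price = PRICE_PER_1K[record.model]
    return (record.input_tokens * price["input"]
            + record.output_tokens * price["output"]) / 1000


def summarize(records: list[UsageRecord]) -> dict[str, dict[str, float]]:
    """Total tokens and cost per application."""
    totals: dict[str, dict[str, float]] = defaultdict(lambda: {"tokens": 0, "cost": 0.0})
    for r in records:
        totals[r.app]["tokens"] += r.input_tokens + r.output_tokens
        totals[r.app]["cost"] += cost(r)
    return dict(totals)


records = [
    UsageRecord("chatbot", "model-large", 1000, 500),
    UsageRecord("chatbot", "model-small", 1000, 1000),
    UsageRecord("summarizer", "model-small", 2000, 1000),
]
for app, stats in summarize(records).items():
    print(f"{app}: {stats['tokens']} tokens, ${stats['cost']:.4f}")
```

With totals like these in hand, a routing rule could, for example, send low-stakes traffic from an expensive app to a cheaper model.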

Experiment with alternative LLMs to quickly test and compare the quality of their responses.
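Comparing models usually means sending the same prompt to each candidate and reviewing the answers side by side. A minimal sketch, assuming each model is reachable through a plain function that takes a prompt and returns text; `compare_models` and the lambda stand-ins are hypothetical, and a thread pool is simply one convenient way to issue the calls concurrently.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def compare_models(prompt: str, models: dict[str, Callable[[str], str]]) -> dict[str, str]:
    """Send one prompt to several models concurrently and collect each response by model name."""
    with ThreadPoolExecutor(max_workers=len(models) or 1) as pool:
        futures = {name: pool.submit(call, prompt) for name, call in models.items()}
        return {name: future.result() for name, future in futures.items()}


# Hypothetical stand-ins for real model clients.
candidates = {
    "model-a": lambda p: p.upper(),
    "model-b": lambda p: p[::-1],
}
results = compare_models("compare me", candidates)
for name, output in results.items():
    print(f"{name}: {output}")
```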