Supercharge your AI Features with Embeddings using Tetrate Agent Router Service

Embeddings are one of the most powerful, yet most underestimated, tools you can use to supercharge your AI features. This blog post gives you an introductory understanding of why embeddings are powerful, how they work, tips for using them, and some ideas to kickstart your projects.

Supercharge your AI Features with Embeddings using Tetrate Agent Router Service

Embeddings are one of the most powerful, yet most underestimated, tools you can use to supercharge your AI features. This blog post gives you an introductory understanding of why embeddings are powerful, how they work, tips for using them, and some ideas to kickstart your projects.

Prompting without context, filters, or control can create low-quality results; you can do better. If you’re building AI-powered applications, embeddings are one of the most powerful, but most underestimated, tools you can use.

Embeddings let your app understand meaning. They enable everything from more intelligent search and personalized suggestions to automatic content tagging and context-aware chat.

Their use cases range from simple to complex, and your creativity is the only limit. This blog post will give you a basic understanding of why embeddings are powerful, how they work, tips for using them, and some ideas to kickstart your projects.

Now Available

MCP Catalog with verified first-party servers, profile-based configuration, and OpenInference observability are now generally available in Tetrate Agent Router Service. Start building production AI agents today with $5 free credit.

Why Embeddings Are Powerful

Embeddings unlock a superpower for developers: they enable computers to reason about language mathematically.

By turning words, sentences, or paragraphs into numerical representations (vectors), you can measure how similar the meaning of two pieces of text is, not just how they look.

For example, the words “cat” and “dog” are closer together in vector space than “cat” and “giraffe”, because their meanings are more similar. Because “cat” and “dog” are both domesticated pets, whereas the “giraffe” isn’t.

This ability extends beyond words. You can compare entire sentences or paragraphs to find semantically similar ideas.

That means you can build features like:

- Context filters – Only allow inputs that are semantically related to approved topics.

- Automated categorization – Automatically label new issues, articles, or messages based on existing examples.

- Relevant recommendations – Suggest related content or next actions based on meaning, not just keywords.

How Embeddings Work

The clue is in the name: embeddings embed meaning into numbers.

When you call an Embedding API, the model maps your text into a high-dimensional space—a vector that represents the meaning of that text.

You can store these vectors in a vector database, alongside the original text. That means your app knows both:

- the semantic representation (the numbers), and

- the human-readable meaning (the text).

This combination lets you perform semantic searches, similarity comparisons, and clustering—all mathematically.

Transforming text into embeddings by using a model to turn text into a vector representing the meaning of the text through a location in a high-dimensional space.

Tools for Storing and Querying Embeddings

You’ll need a database that supports vector operations to efficiently store and query embeddings. Here are some great options to start with:

- Postgres + pgvector → Easy to set up, especially on Supabase.

- Qdrant → Lightweight and developer-friendly.

- Elasticsearch or OpenSearch → Great if you already use them for text search.

Cloud providers also offer managed vector search options, so check your stack before adding new dependencies.

Tips for Embedding Content

When embedding larger documents, don’t embed the entire document as a single chunk. Break it down into smaller, meaningful pieces, typically paragraphs, with some sentence overlap between them.

That overlap helps preserve context and ensures your embeddings capture the flow of ideas.

For example:

- End a chunk with the last sentence of one paragraph.

- Start the next with the first sentence of the following paragraph.

This “semantic overlap” helps your search results feel cohesive and accurate.

Chunking a document into smaller, meaningful pieces with semantic overlap enhances results compared to embedding large chunks with no overlap.

Querying Your Embeddings

Once your data is embedded and stored, you can query it to find semantically similar content.

To do this:

- Convert the user’s query into an embedding (using the same model as your data).

- Compare it against your stored embeddings in the database.

- Retrieve the most similar ones.

A few key points:

- Always use the same embedding model for indexing and querying.

- Experiment with similarity thresholds and distance metrics; they affect accuracy.

- If you re-embed using a new model, retune your thresholds and test again.

Getting this right is part science, part art—but that’s also what makes it fun.

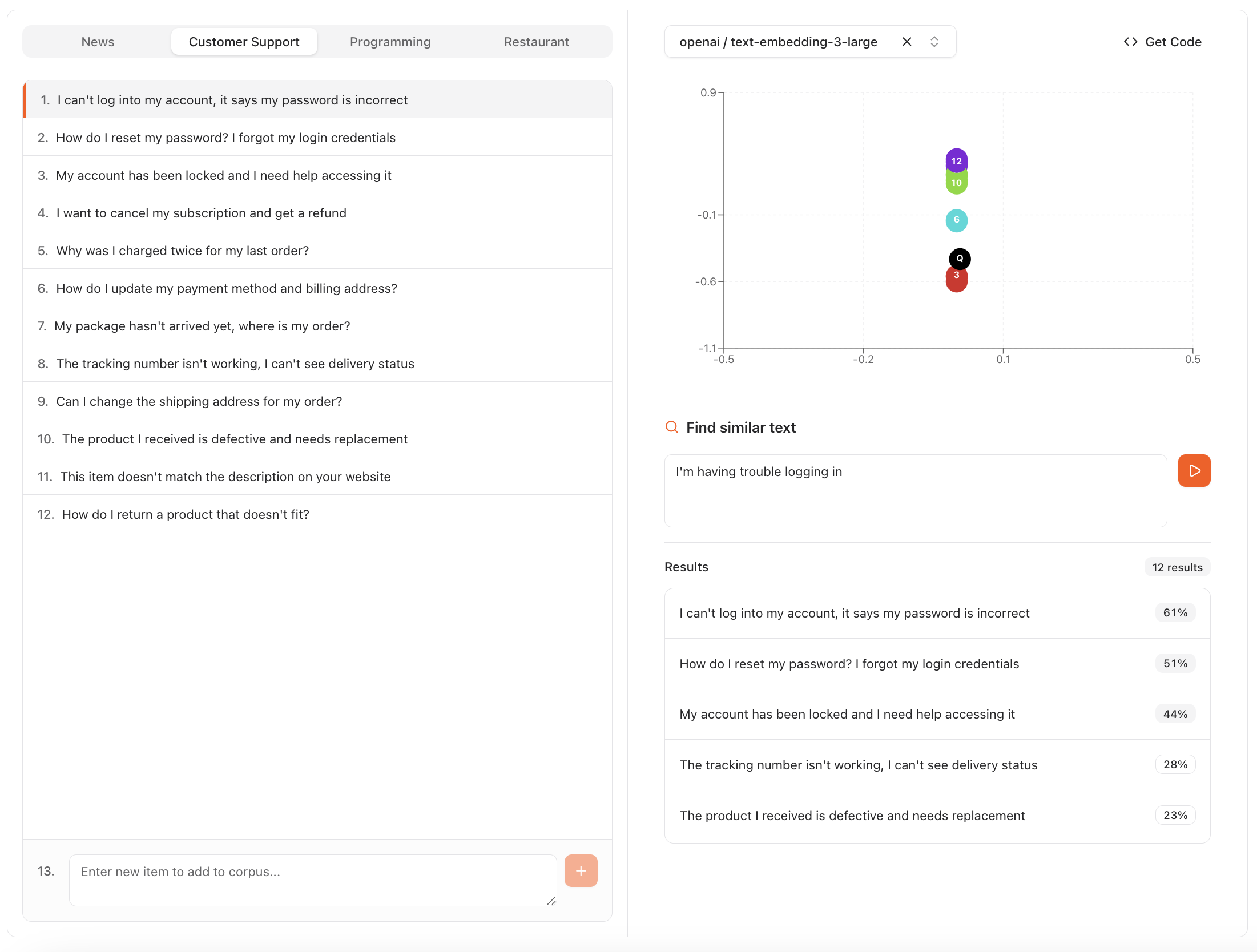

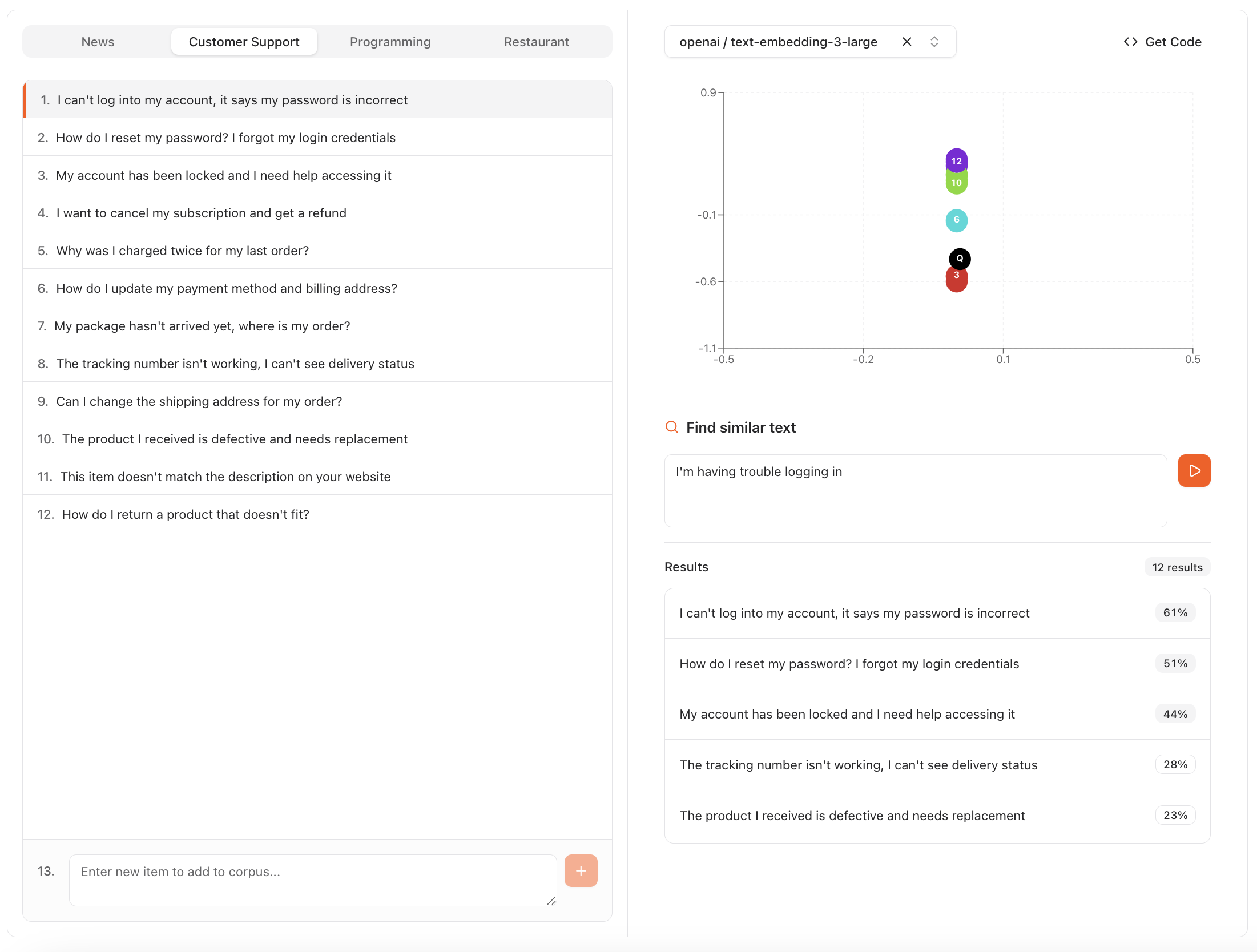

Try Embeddings Yourself

The easiest way to start experimenting is through the Tetrate Agent Router Service. You can create and query embeddings, just like with OpenAI, but with flexible routing and governance built in.

Sign up at router.tetrate.ai to get started. You can even test embeddings right away in the Embeddings Playground.

Builder Project Ideas

Here are some project ideas to help you learn embeddings by building with them:

Input Filter

Create a semantic “allow list” to approve only inputs that are meaningfully related to specific topics. Perfect for chatbots or form input validation.

Issue Label Suggester

Automatically suggest labels for new GitHub or Jira tickets by comparing them to existing ones. Tip: Use an LLM to summarize each issue before embedding it for better accuracy.

Document Search

Embed long documents in smaller chunks and store them in a vector database. Then build a semantic search that retrieves the most relevant sections. Tip: Tune your similarity thresholds to improve precision over time.

Knowledge Base Finder

Match user questions to the most relevant help articles. You can even use an LLM to extract questions answered by each article, embed those, and use them for smarter mapping between queries and answers.

Conclusion: Start Embedding, Start Building

As we convert language into a form a computer can understand, these embeddings enable the development of AI features. We can now advance our applications beyond simple keyword matching to compare meaning within context. By understanding and using embeddings in your application design, you can create context-aware, smarter features that deliver relevant experiences.

Embeddings are the hidden engine behind many of today’s most innovative applications. They power personalization, discovery, and context awareness within your AI-powered applications.

Whether you’re building a chatbot, a search feature, or an intelligent dashboard, embeddings give your app understanding.

Now Available

MCP Catalog with verified first-party servers, profile-based configuration, and OpenInference observability are now generally available in Tetrate Agent Router Service. Start building production AI agents today with $5 free credit.